Applicability of impact evaluation methodologies in development and social action initiatives

By: Elena Ripoll

First approaches to impact assessment in organizations

Acknowledgement Note:

This project was carried out as part of the “Carreras con Impacto” program during the mentorship phase. You can find more information about the program in this entry.

Abstract

This project explores the applicability of impact measurement methodologies in various development and social action initiatives. It focuses on small organizations working in areas such as poverty reduction, education, and community development, which often face challenges in assessing the impact of their projects due to a lack of resources and knowledge, among other reasons.

The project aims to systematize and analyze various impact evaluation methodologies, facilitating their selection and application to improve program effectiveness in organizations where they are not yet applied. The document includes a comparative table of methodologies and a guide with key questions to help organizations implement them. It addresses aspects such as strategy, success factors, and challenges in impact evaluation, while discussing the limitations of such evaluations. In summary, it seeks to raise awareness of the importance of impact evaluations in order to optimize resources and improve the outcomes of development and social action programs.

Contextualization of the Problem

This project stems from the recognition that many development and social action initiatives lack adequate evaluations of their impact. While there are proven methodologies for assessing the impact of interventions, many organizations, especially smaller ones, do not have the resources or knowledge needed to implement them effectively (Pavitt, 1991; Klevorick et al., 1995; World Bank, 2006).

Evaluation involves making value judgments about the activities and outcomes of a policy, strategy, or project, comparing them against a predefined standard (Real Academia Española, n.d.). Program evaluations can focus on various elements, such as the needs of the target population, efficiency, costs, and others. However, for the purposes of this project, we focus on impact evaluation. This type of evaluation is particularly important in development and social action programs because it measures the actual changes generated by an intervention (J-PAL, n.d.). By doing so, it provides solid evidence of the program’s effectiveness, helps improve its design, and justifies the expansion or replication of the intervention in other contexts (EvalCommunity, 2024).

The concept of impact refers to significant and measurable improvements in people’s well-being as a direct result of an intervention. This includes evaluating not only immediate results, but also long-term effects and the scale of those outcomes (GiveWell, 2024). In turn, the concept of development refers to the process through which a region improves its economy and quality of life, focusing on economic growth and poverty reduction (World Bank, 2006). It emphasizes the critical interrelationship between economic growth, social development, and long-term sustainability. By promoting a holistic approach, sustainable development requires making complex decisions that balance multiple factors, avoiding the temptation of immediate gratifications that could cause irreversible harm to people and the planet (Center for Sustainable Development, Columbia University, n.d.; United Nations, 2024).

The concept of social action refers to activities carried out by individuals, organizations, or groups aimed at improving people’s well-being, promoting social justice, and addressing issues related to poverty, exclusion, and inequality (Giddens, 2006). Like sustainable development, social action adopts an integrated approach, balancing immediate needs with long-term goals to avoid negative effects on the social fabric and to foster cohesion and participation (Sen, 1999).

Evaluating Impact

Impact evaluation is presented as a targeted effort, designed before the intervention, which generates causal evidence by measuring whether the desired effects on beneficiaries were achieved, and to what extent the objectives were met. This type of evaluation helps identify which components of a program work best and which need adjustments; it also ensures that resources are used optimally to achieve the best possible results (J-PAL, n.d.).

Moreover, it requires close collaboration with program implementers, who contribute their expertise in formulating the right questions and are responsible for verifying whether the programs are truly achieving the intended impact in solving the problem. These evaluations are typically conducted by independent entities or professionals to ensure the objectivity and credibility of the results, which presents both an advantage in terms of transparency and accountability and a challenge for smaller or resource-limited entities (Fowler, 2000). Despite this, investing in impact evaluation is justified because the results generate knowledge about the practices undertaken or the objectives achieved, benefiting multiple programs or contexts (J-PAL, n.d.; Baker, 2000).

This work is conceived as a bridge between academic methodologies and effective practices, aiming to collect and systematize approaches that allow for the exchange and application of information in a simplified and intuitive manner, to optimize the impact of development and social action initiatives. The purpose is to provide a review to evaluate interventions in the areas of development and social action and thereby increase their scalability and effectiveness. As a project, it is aimed at small organizations, those with fewer than 50 employees, dedicated to addressing specific social challenges, such as poverty reduction, education, or community development.

These organizations are characterized by having clearly defined goals, closely aligned with their mission; their broader objectives often include improving the quality of life for vulnerable populations and promoting sustainable and inclusive development. A key characteristic is community participation, along with transparency and accountability to their stakeholders. Moreover, they tend to be more flexible and innovative, allowing them to quickly adapt to changing circumstances and local needs, implementing context-specific solutions. However, limited financial and human resources present a constant challenge in terms of efficiency, creativity, and evaluation (Marín, 2003). Although many adopt recognized frameworks and tools to provide rigorous and credible reports, they often lack the skills or resources to carry out these evaluations effectively. Given these characteristics, these organizations have been selected as the focus of this study: while they do not have sufficient resources to conduct exhaustive impact evaluations, they are capable of carrying out projects that reach a large number of people, making it necessary to monitor their effects (Awan, 2020).

The justification for the project lies in the need to address the lack of impact evaluation in social and development interventions, an aspect that is often not on the agenda of these small entities despite its importance (Edwards et al., 1995). This need has been highlighted by organizations such as the Organization for Economic Co-operation and Development (OECD, 2023), which points out that “impact measurement is crucial to identifying what works, for whom, and in what context,” thereby allowing organizations to improve the effectiveness of their interventions and optimize resource allocation. Similarly, the Rockefeller Foundation, a pioneer in impact evaluation, emphasizes that “small and medium-sized organizations face unique challenges in impact measurement due to a lack of resources and internal capacity,” highlighting the need for approaches and tools tailored to their contexts (Rockefeller Foundation, 2023).

Objectives

General Objective

To conduct a literature review of impact evaluation methodologies and develop a guide for selecting the most appropriate methodology for development and social action programs implemented by small organizations, aimed at improving cost-effectiveness and optimizing their activities.

Specific Objectives

Objective 1: Search for and gather information on impact measurement methodologies applicable to organizations that run development and social action programs.

Objective 2: Analyze and categorize the methodologies found according to recurring points of debate in impact evaluation, with an emphasis on their adherence to comparative criteria and the definition of performance indicators tailored to their programs.

Objective 3: Develop a guide with key questions to assist in selecting the most appropriate impact evaluation methodologies, according to key elements of the organization or program.

Methodology

The methodological process began by defining the concept and identifying the characteristics of the target organizations. Entities with fewer than 50 employees, engaged in development and social action projects, were selected. This focus is justified by the specific orientation of these organizations toward targeted areas, their efficient concentration of resources, their agility in responding to localized needs, and their ability to make a significant impact. Additional characteristics influencing their projects were also identified, such as scalability, cost-effectiveness, and the long-term sustainability of their interventions.

For the selection of methodologies, a systematic literature review and analysis of specialized sources were conducted to gather information on various impact evaluation methodologies. Both qualitative and quantitative approaches were analyzed, and methodologies aligned with the project’s goals and scope were selected. These were classified based on comparative criteria, a process that culminated in the creation of a graphical table that organizes the methodologies, designed to be a tool for facilitating selection in different organizational contexts.

Finally, based on the information collected, a guide was developed to assist in the selection and implementation of impact evaluation tools. This guide includes key questions that aid decision-making, taking into account: the nature of the project (social development, sustainability, education, etc.), the available resources (budget, personnel, etc.), and the specific needs of the organization (such as scalability or adaptability).

Results

Before introducing the results, it is important to contextualize their relevance and utility within the proposed framework of this project. The primary goal was to conduct a systematic review of impact evaluation methodologies and develop a guide to help select the most suitable one for development and social action programs. To achieve this, a search and collection of various methodologies used in impact evaluations of development and social action programs were conducted, and comparative criteria between them were identified. Additionally, a guide was created to assist organizations in developing an approach for impact evaluation, serving as a tool for this purpose. Two main results were obtained:

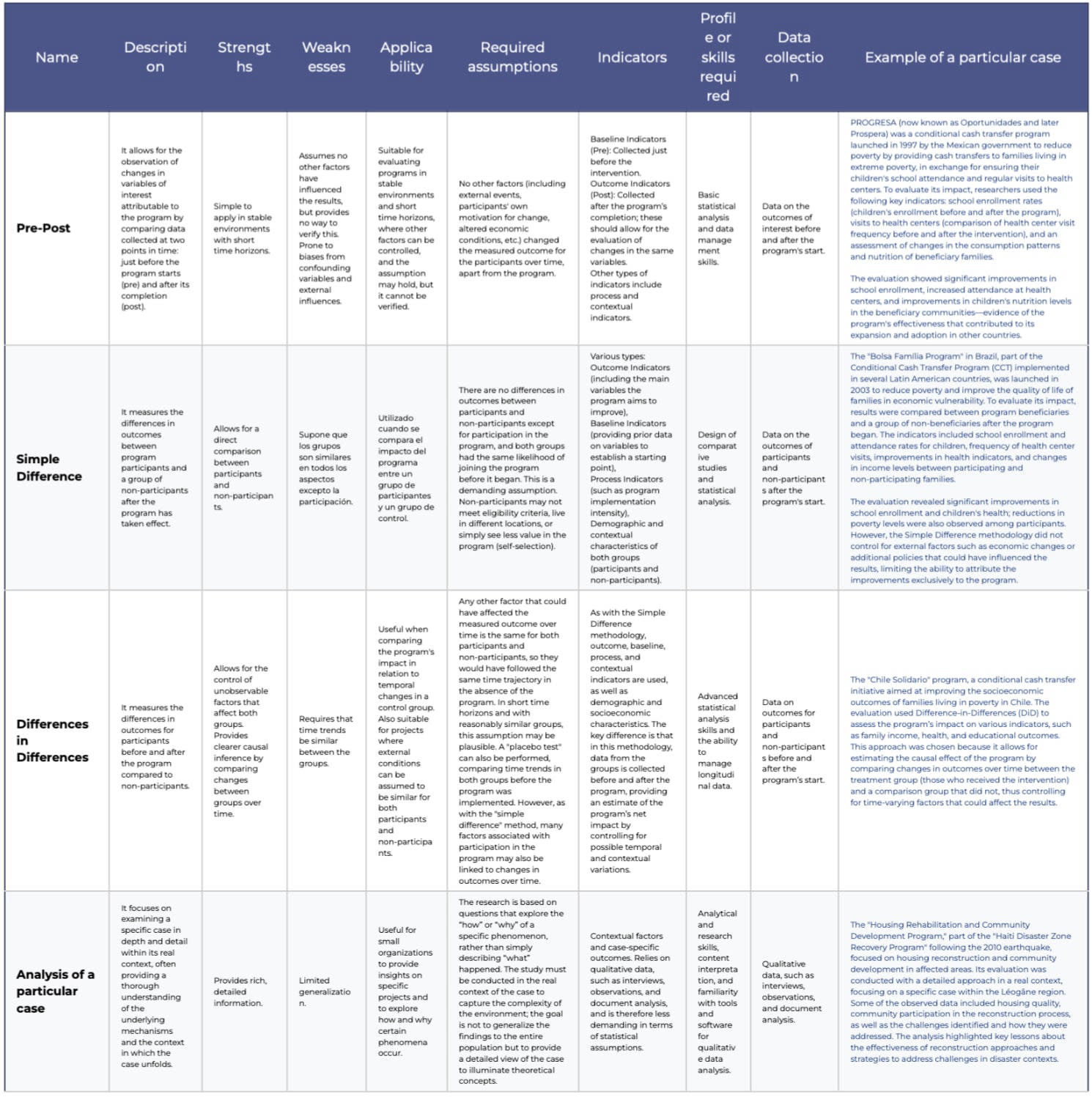

Impact Evaluation Methodologies Table

Table 1, which follows this text, is a crucial component of this proposal. It systematically organizes the most relevant methodologies for impact evaluation, considering their characteristics. It summarizes different evaluation methodologies, highlighting their main features, strengths, weaknesses, applicability, and case study examples. The table is intended to act as a map that guides the selection of the most suitable methodology, facilitating informed decision-making and enhancing the ability of small organizations to measure and optimize the impact of their initiatives.

Guide of Key Questions

Table 1 offers an overview of various impact evaluation methodologies, highlighting the key criteria for comparing methodologies and selecting the most appropriate one for evaluating programs in each case. These criteria determine the suitability of each approach based on its strengths, weaknesses, and the specific context of the program. Building on this, a guide of key questions was developed to assist in the selection of impact evaluation methodologies.

To create this guide, two organizations were taken as key references. The first is Eido Research, an organization specializing in helping Christian organizations measure both their social and spiritual impact; their approach provides key tools for formulating questions that explore both tangible and intangible outcomes and helps understand which aspects of an intervention are most important to evaluate (Eido Research, n.d.). The second organization, Charity Entrepreneurship, uses a research-based approach and offers tools for prioritizing the most relevant questions to maximize the impact of an intervention—addressing efficiency, cost-effectiveness, or the different time horizons of an intervention (Charity Entrepreneurship, 2020). Both organizations also bring an evidence-based approach, helping to develop questions aimed at effective data collection.

a) Strategy, Desired Outcomes, and Success Factors

The implementation of a development or social action program must begin with a precise definition of its objectives and the strategy to achieve them. It is essential to identify the expected outcomes and the factors that will contribute to success. This includes establishing the specific changes aimed for and the concrete actions that will ensure the intervention’s effectiveness (Eido Research, n.d.). The following graphic offers a visual representation of a strategic model for success in social program implementation. This model, based on Eido Research’s methodology, provides a clear and structured guide for planning and evaluating interventions through five key steps: identifying the situation to be addressed, supporting the people involved, defining the desired outcomes, developing activities aligned with the objectives, and determining success factors.

Thus, the desired outcomes must be clearly defined and differentiated between short- and long-term goals. It is important that they are based on empirical evidence when possible, or in the absence of that, on theoretical or ethical foundations that guide decision-making (Eido Research, n.d.). It is also crucial to identify both direct and indirect beneficiaries, as well as the stakeholders involved, considering their roles and impact on the strategy (not only the individuals directly engaged, but also partner organizations and key actors participating in the initiative’s ecosystem).

It’s worth emphasizing that the use of pilots is another key element in strategy design and success evaluation. These tests allow for the refinement of the model, validation of the innovation’s feasibility in real-world contexts, measurement of demand, and documentation of both social outcomes and implementation costs, as noted by the Global Innovation Fund. This ensures that the intervention is aligned with the beneficiaries’ needs and expectations, maximizing its social impact (Global Innovation Fund, n.d.).

b) Key Factors in Impact Evaluation

To measure and improve the impact of social and development interventions, it is essential to identify and consider certain key factors that influence evaluation results. These factors not only guide the development of evaluations but are also critical for interpreting their results effectively and in alignment with the project’s objectives. In this context, we have highlighted five key principles that reflect the logic behind Eido Research’s approach, specifically adapted to support the purpose of our project. These principles are presented below.

Each proposed principle emphasizes a comprehensive view of the evaluation process. They ensure that the analysis is not only precise regarding tangible results (as highlighted by the “fruitful” approach) but also addresses the diversity and context in which the interventions take place (“contextual”). Furthermore, the principles ensure that evaluations do not solely measure immediate changes but also consider the underlying causes and long-term effects (“causal” and “transcendental”). Finally, the “relational” approach reinforces the importance of aligning individual well-being with community and environmental well-being, thereby promoting more sustainable development that aligns with the project’s values.

Other key factors that stand out in impact evaluation are the evidence and robustness of the intervention. Evidence provides a solid foundation for making informed decisions and mitigating risks when implementing development projects. In the context of the Global Innovation Fund, it helps identify which projects have the greatest potential to generate large-scale social benefits, facilitating decision-making on their feasibility and the need for further testing (Global Innovation Fund, n.d.). As projects progress, continuous feedback based on evidence allows for quick adjustments and improvements in implementation, ensuring more accurate and effective results. Robustness is equally important: it involves analyzing whether the intervention’s results hold up under changes in the tests or metrics used, and verifying whether different sources of evidence confirm or contradict those results. This analysis ensures that conclusions remain consistent and reliable across different approaches (J-PAL, n.d.).

| Principle | Description |
| --- | --- |
| Relational | Interconnection between the individual, the community, and the environment. By incorporating the relationship with the environment and the community into impact evaluation, sustainability and overall well-being are promoted, ensuring that personal and community growth are aligned. |
| Fruitful | Emphasis on the measurement of tangible indicators, such as changes in beliefs, practices, and experiences, which facilitate impact evaluation through concrete and communicable results to stakeholders. This is essential for securing the necessary support and funding. |
| Contextual | Recognition of the diversity and uniqueness of each individual. This allows for a more precise capture of impact, offering a nuanced evaluation tailored to the specific context and maturity of the beneficiaries. |
| Causal | Identification of both change and its cause, ensuring that results are the direct outcome of the activities performed and not of external factors, allowing the impact to be traced back to specific activities. |
| Transcendental | Evaluation is not an end in itself, but a means to achieve deeper understanding and growth. Interpreting the data from a transcendental perspective ensures that evaluations align with the core mission and values of the project. |

Table 2. Five key factors for analyzing the approach to social interventions. Note: Own elaboration based on the Eido Research logical framework.

Lastly, a crucial factor is the appropriate use of indicators. The Inter-American Development Bank (IDB), in collaboration with the Project Management Institute, has developed a specific methodology useful for development projects called “Project Management for Results” (PM4R). In this methodology, the definition of indicators is framed as SMART: specific, measurable, achievable, relevant, and time-bound. These indicators allow for clear and precise monitoring and evaluation of progress, ensuring that the results are quantifiable and aligned with the project’s objectives (IDB, n.d.). Additionally, they must be realistic and achievable within the available resources, with a well-defined timeframe to ensure the expected results are achieved before the project’s conclusion.
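As an illustration of how the SMART criteria can be turned into a routine check, the following minimal Python sketch flags indicators that are missing the elements a SMART definition needs. The class, its field names, and the example values are hypothetical and are not part of PM4R; only the criteria that can be checked mechanically are covered, and whether an indicator is achievable remains a judgment for the organization.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Indicator:
    """Hypothetical record for one program indicator."""
    name: str                  # what exactly is being measured (specific)
    unit: str                  # unit of measurement (measurable)
    baseline: Optional[float]  # value before the intervention (measurable)
    target: Optional[float]    # value the program aims to reach (measurable)
    deadline: Optional[date]   # date by which the target should be met (time-bound)
    linked_objective: str      # program objective it supports (relevant)


def smart_gaps(ind: Indicator) -> List[str]:
    """Return the SMART criteria for which required information is missing."""
    gaps = []
    if not ind.name.strip():
        gaps.append("specific")
    if not ind.unit.strip() or ind.baseline is None or ind.target is None:
        gaps.append("measurable")
    if not ind.linked_objective.strip():
        gaps.append("relevant")
    if ind.deadline is None:
        gaps.append("time-bound")
    return gaps


# Invented example: an indicator defined without a baseline or a deadline
draft = Indicator(
    name="School attendance rate",
    unit="% of enrolled children",
    baseline=None,
    target=85.0,
    deadline=None,
    linked_objective="Improve access to education",
)
print(smart_gaps(draft))
```

A check like this does not replace the judgment involved in choosing indicators; it only makes gaps visible early, before data collection starts.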

Discussion on evaluation methodologies

At this point, it is important to provide a discussion on the project, its coherence, and its development. This analysis not only addresses the need to evaluate the progress achieved so far, but also allows for identifying areas for improvement and opportunities for adjustment in the future. This section offers a detailed analysis of the selection and evaluation of methodologies applied in development and social action projects. The comparison between different approaches helps identify the advantages, limitations, and challenges associated with each one, thus ensuring better alignment with the specific context of the project.

First, the section on comparative criteria presents a diagram that visualizes the main criteria for selecting methodologies, providing a foundation for their analysis. Then, the section on key variables examines the factors that determine the applicability and effectiveness of each methodology. Finally, the section on challenges, limitations, and externalities explores the difficulties inherent in these evaluations, such as externalities and the problem of controlling certain contextual factors.

Discussion Points: Comparative Criteria for Methodologies

The comparative analysis of evaluation methodologies requires the consideration of several key factors that can influence the effectiveness and suitability of each approach. This section presents a diagram that summarizes the “Seven Comparative Criteria for Methodology Selection.” These criteria provide a useful framework for evaluating the various available methodologies, enabling a more informed and tailored selection for the specific context of each project.

The diagram helps visualize how each methodology may differ in terms of its benefits, limitations, and technical requirements, which facilitates the decision-making process when considering which methodology is most appropriate based on the project’s objectives, available resources, and variables to be measured. Additionally, these criteria emphasize the importance of having clear indicators and robust data collection—critical factors for ensuring the validity and reliability of the results obtained. From this comparative framework, key variables that determine the methodology selection will be further explored in subsequent sections.

Second Scenario: Key Variables in the Comparison of Methodologies

When dividing the comparative criteria, several common trends and underlying variables emerged that could influence the choice of an impact evaluation methodology. These variables affect the previously mentioned comparative criteria and may tip the balance toward one methodology over another, so they must be considered according to the specific characteristics of the program or project being evaluated.

In this section, these performance indicators are identified and defined, acting as key variables that affect the established comparative criteria. While these indicators are not always visible in the initial analysis, they play a crucial role in selecting the most suitable methodology. Taking these variables into account will help ensure that the chosen methodology is not only effective in general terms but also appropriate for the specific context and needs.

Program Environment. The stability of the environment plays an important role in the effectiveness of methodologies applied to development programs. In stable environments, it is easier to obtain reliable results since there are fewer external factors that could distort the program’s impact. In long-term programs, meanwhile, trends become more evident, allowing for the observation of cumulative effects and changes over time (J-PAL, n.d.).

Characteristics of the Control Group. It is crucial that the control group and the treatment group be as similar as possible, except for their exposure to the program, so that any observed differences can be attributed to the intervention. Additionally, certain methodologies assume that both groups would have followed the same temporal evolution without the program, so it is essential to ensure that this premise is valid to avoid biases.
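The premise that both groups would have evolved in the same way without the program is the one underlying difference-in-differences designs, although the text above does not name a specific method. The following minimal Python sketch shows the arithmetic under that assumption; the function name and the outcome values are invented purely for illustration.

```python
from statistics import mean


def diff_in_diff(treat_before, treat_after, control_before, control_after):
    """Difference-in-differences estimate from four lists of outcome values.

    The change observed in the control group stands in for what would have
    happened to the treatment group without the program, so it is subtracted
    from the change observed in the treatment group.
    """
    treat_change = mean(treat_after) - mean(treat_before)
    control_change = mean(control_after) - mean(control_before)
    return treat_change - control_change


# Invented test scores for a small treatment group and control group
treat_before = [50, 52, 48]
treat_after = [60, 63, 57]
control_before = [49, 51, 50]
control_after = [53, 55, 54]

estimate = diff_in_diff(treat_before, treat_after, control_before, control_after)
print(estimate)
```

The estimate is only as credible as the premise behind it: if the two groups were already on different trajectories before the program, the subtraction removes the wrong amount and the result is biased.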

Contextual and External Factors. In contexts where there are multiple interventions or external policies that could influence the results, a methodology must be selected that can isolate the specific impact of the program in question. Furthermore, in situations where changes are difficult to predict, a qualitative approach is required to capture unanticipated dynamics.

Available Data. Some methodologies require the collection of detailed quantitative data before and after the intervention to accurately assess its impact. Other, more qualitative methodologies rely on narrative data to interpret the context and observed changes. The accuracy and quality of the data over time are essential, as a lack of consistency can compromise the results.

Challenges, Limitations, and Externalities in Impact Evaluation

Impact evaluation faces several significant challenges. After reviewing the literature, the most important ones have been identified and are presented schematically below.

Many public policies are based more on opinions than evidence due to political pressures and the urgency of implementing solutions. Despite the increase in impact studies, there is still a considerable gap between relevant questions and the available evidence. This is compounded by the complexity of social contexts, which makes it difficult to create a valid counterfactual and can lead to distrust in the results obtained (J-PAL, n.d.).

Impact evaluation is often considered retrospectively, which prevents programs from being designed appropriately from the start. Governments tend to prefer immediate results, which can be an obstacle for more in-depth evaluations that require time to yield reliable outcomes (Held, 1997). There is also cultural resistance, where evaluation is seen as a tool for oversight rather than learning, which limits its adoption.

Even when rigorous evidence is available, it is not always used effectively. This may be due to the difficulty of identifying valid scientific evidence, the lack of an evaluation culture oriented toward learning, and the perception that results obtained in a specific context are not applicable to others, reducing the use of existing evidence (Head, 2016).

On the other hand, when assessing the feasibility and potential impact of an intervention, it is crucial to identify limiting factors that may influence its success. According to studies by Charity Entrepreneurship, these factors include the availability of funding and talent, execution difficulty, the possibility of externalities, and the likelihood of success. Evaluating these barriers allows for the prioritization of interventions that maximize impact, ensuring that resources are directed toward the most promising and effective initiatives (Charity Entrepreneurship, 2019).

Funding availability can restrict the scalability of the intervention. It is essential to assess how much funding is available and whether it will be sufficient to scale the initiative, also considering the possibility of attracting new sources of funding as the program progresses (Emerson et al., 2000).

The difficulty of finding specialized skills can hinder the effective implementation of the intervention. It is necessary to assess whether the initiative requires hard-to-find skills and whether it is possible to attract the necessary talent, considering the competition from other organizations in the same field. The robustness of success metrics is fundamental to determining the long-term effectiveness of the intervention (World Economic Forum, 2020; UNDP, 2020).

Counterfactual Replaceability. It is necessary to analyze how many other organizations are addressing the same issue and how effective they are in their interventions. This includes evaluating whether the intervention is truly necessary or if there are already government efforts or NGOs covering part of the problem (MacAskill, 2015).

Externalities. These are the side effects that the intervention could generate, both positive and negative. It is crucial to evaluate whether the intervention could discourage others in the same field or, conversely, catalyze significant growth. Additionally, collateral effects that could amplify or reduce the direct impacts of the intervention should be examined (World Bank, 2021).
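One simple way to compare candidate interventions across the limiting factors described above is a weighted score. The sources cited here do not prescribe this calculation; the following Python sketch is only an illustration, and the factor names, the 1 to 5 rating scale, and the weights are all hypothetical choices that an organization would need to set for itself.

```python
def priority_score(ratings, weights):
    """Weighted average of factor ratings (higher means more favourable).

    `ratings` maps each factor to a score on a hypothetical 1-5 scale;
    `weights` maps the same factors to their relative importance.
    """
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(ratings[f] * weights[f] for f in weights) / total_weight


# Hypothetical weights: likelihood of success counts double in this example
weights = {
    "funding": 1,
    "talent": 1,
    "execution": 1,
    "replaceability": 1,
    "externalities": 1,
    "likelihood_of_success": 2,
}

# Invented ratings for two candidate interventions
tutoring = {"funding": 4, "talent": 3, "execution": 4,
            "replaceability": 2, "externalities": 3, "likelihood_of_success": 4}
microgrants = {"funding": 2, "talent": 4, "execution": 3,
               "replaceability": 4, "externalities": 3, "likelihood_of_success": 3}

for name, ratings in [("tutoring", tutoring), ("microgrants", microgrants)]:
    print(name, round(priority_score(ratings, weights), 2))
```

A score of this kind is a discussion aid rather than a verdict: the ratings are subjective, and small changes in the weights can reverse the ranking.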

Conclusions

Impact evaluation methodologies are essential for verifying whether social and development programs achieve the desired effects and measuring the magnitude of these results. They allow for program redesigns, help understand the underlying mechanisms that generate public goods, and ensure that resources are used efficiently. However, despite the increase in rigorous evidence production, its use remains limited among smaller organizations. This is partly due to barriers such as the lack of evaluation planning from the outset, the fear of evaluating in complex social contexts, and the preference for immediate solutions over long-term evaluations.

In essence, evaluating a program’s impact allows for the optimization of resources and improvement of results by identifying which components work best. It ensures that programs genuinely address the identified problems and generates public goods, as the knowledge gained can be applied in other contexts, amplifying social impact and justifying the evaluation costs. It offers answers to questions about a program’s effects or the uncertainty surrounding the best strategy to pursue.

Despite the declared challenges, this document aims to continue promoting a culture of evaluation and learning, breaking down the barriers that limit its use. Impact evaluation should be viewed as a tool to improve quality of life, allowing public policies to be based on solid evidence. Only then can we ensure that social programs are effective, scalable, and sustainable, contributing to both local and global well-being.

Additional Recommendations

Several courses, resources, and platforms can help organizations strengthen their impact evaluation capabilities. After reviewing several of these during the development of this document, the following are especially recommended:

BetterEvaluation: Offers a wide range of educational materials, practical tools, and detailed guides for impact evaluation. Its platform includes webinars and workshops that can be highly useful for organizations looking to strengthen their evaluation capacities.

MITx JPAL101SPAx Impact Evaluation of Social Programs: An online course to gain deep knowledge about the impact evaluation of social programs, providing practical tools and methodologies for implementation.

Global Impact Investing Network (GIIN): Provides resources, guides, and case studies on impact evaluation in the field of impact investing.

Challenges and Limitations

The development of this project revealed various challenges and limitations, both methodological and conceptual, which influenced the depth and scope of the results. A significant initial obstacle was the lack of specificity in the methodologies used by many organizations in their impact evaluations; despite their websites claiming to use impact evaluations, they did not provide sufficient detail to allow for deeper exploration. This lack of clarity made it difficult to compare and analyze data across different interventions, highlighting the need for greater transparency and standardization in evaluation approaches, both to enhance report credibility and to increase stakeholder confidence in evaluation results.

Moreover, the use of advanced methodologies, such as econometric techniques, poses a challenge even for professionals trained in social sciences. This technical barrier can limit the ability of many organizations to employ rigorous methodologies that ensure more accurate evaluations.

Another conceptual challenge was the confusion surrounding key terms such as “measurement” and “evaluation.” Early in the project, these terms were used interchangeably in several sources, making it difficult to precisely define the project’s scope. It wasn’t until delving into specialized courses, like those offered by J-PAL, that a clear differentiation between the two was established. It is understood that such confusion is common in the field of social development and can hinder the precision of analyses if not properly addressed.

Although other types of evaluations, such as those focused on processes or contextual needs, were considered for investigation, the limited scope of the project led to a focus on impact evaluation. This decision was based on the ability of this approach to measure the real changes generated by an intervention, which was deemed a priority for the project’s purpose. However, recognizing the relevance of other approaches could have provided a broader perspective on how different contexts and environments can influence the success of interventions. Similarly, a more detailed analysis of current trends could have been made, including a discussion on the methodologies used in impact evaluations of case studies.

Finally, there were difficulties in deciding how much weight to give to small conceptual nuances. Terms like “initiative,” “program,” “project,” or “element” were sometimes used as synonyms to facilitate the document’s flow, even though in some cases the differences between them are significant. Finding a balance between terminological precision and text clarity was a constant challenge and could be considered an opportunity for improvement in future projects.

Overall, despite the limitations encountered, the project has met its primary objective of reviewing impact evaluation methodologies and developing a guide for selecting among them, leaving the door open for more in-depth future studies that address these issues with greater precision and breadth.
