“In the day I would be reminded of those men and women,

Brave, setting up signals across vast distances,

Considering a nameless way of living, of almost unimagined values.”

Emrik

My latest tragic belief is that in order to improve my ability to think (so as to help others more competently) I ought to gradually isolate myself from all sources of misaligned social motivation. And that nearly all my social motivation is misaligned relative to the motivations I can (learn to) generate within myself. So I aim to extinguish all communication before the year ends (with the exception of Maria).

I’m posting this comment in order to redirect some of this social motivation into the project of isolation itself. Well, that, plus I notice that part of my motivation comes from wanting to realify an interesting narrative about myself; and partly in order to publicify an excuse for why I’ve ceased (and aim to cease more) writing/communicating.

I appreciate the point, but I also think EA is unique among morality-movements. We hold to principles like rationality, cause prioritization, and asymmetric weapons, so I think it’d be prudent to exclude people if their behaviour overly threatens to loosen our commitment to them.

And I say this as an insect-mindfwl vegan who consistently and harshly denounces animal experimentation whenever I write about neuroscience[1] (frequently) in otherwise neutral contexts. Especially in neutral contexts.

- ^

Evil experiments are ubiquitous in neuroscience btw.

- ^

Thank you for appreciating! 🕊️

Alas, I’m unlikely to prioritize writing except when I lose control of my motivations and I can’t help it.[1] But there’s nothing stopping someone else extracting what they learn from my other comments¹ ² ³ re deference and making post(s) from it, no attribution required.

(Arguably it’s often more educational to learn something from somebody who’s freshly gone through the process of learning it. Knowledge-of-transition can supplement knowledge-of-target-state.)

Haphazardly selected additional points on deference:

Succinctly, the difference between Equal-Weight deference and Bayes:

“They say . | Then I can infer that they updated from to by multiplying with a likelihood ratio of . And because C and D, I can update on that likelihood ratio in order to end up with a posterior of . | The equal weight view would have me adjust down, whereas Bayes tells me to adjust up.”
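A minimal numeric sketch of that contrast, using made-up numbers (none of them come from the quote above): assume we share a prior of 0.2 on H, my own evidence has taken me to 0.6, and they report 0.4. If their evidence is independent of mine (a strong, loudly-labelled assumption), I can back out the likelihood ratio implied by their prior-to-posterior move in odds form and apply it to my own odds.

```python
def to_odds(p: float) -> float:
    """Convert a probability to odds p / (1 - p)."""
    return p / (1.0 - p)


def to_prob(odds: float) -> float:
    """Convert odds back to a probability."""
    return odds / (1.0 + odds)


def equal_weight(mine: float, theirs: float) -> float:
    """Equal-weight view: split the difference between two peers' credences."""
    return (mine + theirs) / 2.0


def bayes_on_their_report(mine: float, theirs: float, shared_prior: float) -> float:
    """Infer the likelihood ratio implied by their update from `shared_prior`
    to `theirs`, then multiply my own odds by that ratio.

    Hypothetical assumptions: we started from the same prior, and their
    evidence is independent of the evidence I have already updated on.
    """
    their_lr = to_odds(theirs) / to_odds(shared_prior)
    return to_prob(to_odds(mine) * their_lr)


# Hypothetical numbers, chosen only for illustration.
prior, mine, theirs = 0.2, 0.6, 0.4
print(f"equal weight: {equal_weight(mine, theirs):.3f}")              # 0.500 (down from 0.6)
print(f"bayes:        {bayes_on_their_report(mine, theirs, prior):.3f}")  # 0.800 (up from 0.6)
```

Their report of 0.4 sits below my 0.6, but because it is above our shared prior it still counts as evidence *for* H. That is exactly the case where splitting the difference moves me down while Bayes moves me up.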

“Ask the experts. They’re likely the most informed on the issue. Unfortunately, they’re also among the groups most heavily selected for belief in the hypothesis.”

- ^

It’s sort of paradoxical. As a result of my investigations into social epistemology 2 years ago, I came away with the conclusion that I ought to focus ~all my learning-efforts on trying to (recursively) improve my own cognition, with ~no consideration for my ability to teach anyone anything of what I learn. My motivation to share my ideas is an impurity that I’ve been trying hard to extinguish. Writing is not useless, but progress toward my goal is much faster when I automatically think in the language I construct purely to communicate with myself.

To clarify, I’m mainly just sceptical that water-scarcity is a significant consideration wrt the trajectory of transformative AI. I’m not here arguing against water-scarcity (or data poisoning) as an important cause to focus altruistic efforts on.

Hunches/reasons that I’m sceptical of water as a consideration for transformative AI:

I doubt water will be a bottleneck to scaling

My doubt here mainly just stems from a poorly-argued & uncertain intuition about other factors being more relevant. If I were to look into this more, I would try to find some basic numbers about:

How much water goes into the maintenance of data centers relative to other things fungible water-sources are used for?

What proportion of a data center’s total expenditures are used to purchase water?

I’m not sure how these things work, so don’t take my own scepticism as grounds to distrust your own (perhaps-better-informed) model of these things.

Assuming scaling is bottlenecked by water, I think great-power conflicts are unlikely to be caused by it

Assuming conflicts happen due to water-bottleneck, I don’t think this will significantly influence the long-term outcome of transformative AI

Note: I’ll read if you respond, but I’m unlikely to respond in turn, since I’m trying to prioritize other things atm. Either way, thanks for an idea I hadn’t considered before! : )

I think this is 100% wrong, but 100% the correct[1] way to reason about it!

I’m pretty sure water scarcity is a distraction wrt modelling AI futures; but it’s best to just assert a model to begin with, and take it seriously as a generator of your own plans/actions, just so you have something to iterate on. If you don’t have an evidentially-sensitive thing inside your head that actually generates your behaviours relevant to X, then you can’t learn to generate better behaviours wrt X.

Similarly: To do binary search, you must start by planting your flag at the exact middle of the possibility-range. You don’t have a sensor wrt the evidence unless you plant your flag down.
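To make the metaphor concrete, here is an ordinary textbook binary search over a sorted list (a generic sketch, not anything from the comment itself): each probe plants the flag at the midpoint of whatever range is left, and the comparison result is the “evidence” that tells you which half to throw away.

```python
from typing import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of `target` in sorted `items`, or -1 if it is absent."""
    lo, hi = 0, len(items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2          # plant the flag in the middle of what's left
        if items[mid] == target:
            return mid
        if items[mid] < target:       # evidence says: the answer lies to the right
            lo = mid + 1
        else:                         # evidence says: the answer lies to the left
            hi = mid - 1
    return -1


print(binary_search([1, 3, 5, 7, 9, 11], 7))  # 3
```

Without the probe at `mid` there is no comparison, and without a comparison the range never shrinks.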

- ^

One plausible process-level critique is that… perhaps this was not actually your best effort, even within the constraints of producing a quick comment? It’s important to be willing to risk thinking&saying dumb things, but it’s also important that the mistakes are honest consequences of your best effort.

A failure-mode I’ve commonly inhabited in the past is to semi-consciously handicap myself with visible excuses-to-fail, so that if I fail or end up thinking/saying/doing something dumb, I always have the backup-plan of relying on the excuse / crutch. Eg,

While playing chess, I would be extremely eager to sacrifice material in order to create open tactical games; and when I lost, I reminded myself that “ah well, I only lost because I deliberately have an unusual playstyle; not because I’m bad or anything.”

- ^

I first learned this lesson in my youth when, after climbing to the top of a leaderboard in a puzzle game I’d invested >2k hours into, I was surpassed so hard by my nemesis that I had to reflect on what I was doing. Thing is, they didn’t just surpass me and everybody else, but instead continued to break their own records several times over.

Slightly embarrassed by having congratulated myself for my merely-best performance, I had to ask “how does one become like that?”

My problem was that I’d always just been trying to get better than the people around me, whereas their target was the inanimate structure of the problem itself. When I had broken a record, I said “finally!” and considered myself complete. But when they did the same, they said “cool!”, and then kept going. The only way to defeat them would be to stop trying to defeat them, and instead focus on fighting the perceived limits of the game itself.

To some extent, I am what I am today because I once aspired to be better than Aisi.

More wisdom from Eliezer (from a quote I found via Nevin’s comment):

“But how does Nemamel grow up to be Nemamel? She was better than all her living competitors, there was nobody she could imitate to become that good. There are no gods in dath ilan. Then who does Nemamel look up to, to become herself?”

… If she’d ever stopped to congratulate herself on being better than everyone else, wouldn’t she then have stopped? Or that’s what I remember her being quoted as saying. Which frankly doesn’t make that much sense to me? To me it seems you could reach the Better Than Everybody key milestone, celebrate that, and then keep going? But I am not Nemamel and maybe there’s something in there that I haven’t understood yet.”

… It was the pride of the very smart people who are smarter than the other people, that they look around themselves, and even if they aren’t the best in the world yet, there’s still nobody in it who seems worthy to be their competitor, even the people who are still better than them, aren’t enough better. So they set their eyes somewhere on the far horizon where no people are, and walk towards it knowing they’ll never reach it.”

… And that’s why when people congratulated her on being better than everybody, she was all, ‘stop that, you only like me that much because you’re thinking about it all wrong’, compared to some greater vision of Civilization that was only in her own imagination.”

It took me 24 minutes from when I decided to start (half-way through reading this post), but that could have been reduced if I’d had these tips at the start.

The website requires you to enable cookies. If you (like me) select “no” before you realize this, you can enable them again like this.[1] (Safe to ignore otherwise.)

Yes, you can simply copy-paste Ben’s suggestions above into sections Policy proposal, Animal Welfare, and Further comments.

The only question I wrote anything in is Q29 in the About you section, selecting “Other (please specify)”:

Q29. …your reason for taking part... I am not responding on behalf of an organization, but I am mostly pasting a template from a policy proposal suggested by an acquaintance here:

I am from Norway, but I care to write this response because I don’t wish for the animals to suffer unnecessarily. I can answer on behalf of the policy response if you wish to contact me, or you may contact the author of that document.

- ^

On Chrome:

1) to the right of the URL bar, select Cookies and site data → Manage on-device site data

2) delete both cookies there

3) refresh page, and you will now be prompted again

And a follow-up on why I encourage the use of jargon.

Mutation-rate ↦ “jargon-rate”

I tend to deliberately use jargon-dense language because I think that’s usually a good thing. Something we discuss in the chat.

I also just personally seem to learn much faster by reading jargon-dense stuff.

As long as the jargon is apt, it highlights the importance of a concept (“oh, it’s so generally-applicable that it’s got a name of its own?”).

If it’s a new idea expressed in normal words, the meaning may (ill-advisedly) snap into some old framework I have, and I fail to notice that there’s something new to grok about it. Otoh, if it’s a new word, I’ll definitely notice when I don’t know it.

I prefer a jargon-dump which forces me to look things up, compared to fluent text where I can’t quickly scan for things I don’t already know.

I don’t feel the need to understand everything in a text in order to benefit from it. If I’m reading something with a 100% hit-rate wrt what I manage to understand, that’s not gonna translate to a very high learning-rate.

To clarify: By “jargon” I didn’t mean to imply anything negative. I just mean “new words for concepts”. They’re often the most significant mutations in the meme pool, and are necessary to make progress. If anything, the EA community should consider upping the rate at which they invent jargon, to facilitate specialization of concepts and put existing terms (competing over the same niches) under more selection-pressure.

I suspect the problem people have with jargon is mostly that they are *unable* to change the terms even when they’re anti-helpfwl. So they get the sense that “darn, these jargonisms are bad, but they’re stuck in social equilibrium, so I can’t change them; it would be better if we hadn’t created them in the first place.” The conclusion is premature, however, since you can improve things either by disincentivizing the creation of bad jargon, *or* by increasing people’s willingness to create new terms, so that bad terms get replaced at a higher rate.

That said, if people still insist on trying to learn all the jargon created everywhere because they’ll feel embarrassed being caught unaware, increasing the jargon-rate could cause problems (including spending too much time on the forum!). But, again, this is a problem largely caused by impostor syndrome, and pluralistic ignorance/overestimation about how much their peers know. The appropriate solution isn’t to reduce memetic mutation-rate, but rather to make people feel safer revealing their ignorance (and thereby also increasing the rate of learning-opportunities).

Naive solutions like “let’s reduce jargon” are based on partial-equilibrium analysis. It can be compared to a “second-best theory” which is only good on the margin because the system is stuck in a local optimum and people aren’t searching for solutions which require U-shaped jumps[1] (slack) or changing multiple variables at once. And as always when you optimize complex social problems (or manually nudge conditions on partial differential equations): “solve for the general equilibrium”.

- ^

A “U-shaped jump” is required for everything with activation costs/switching costs.

If evolutionary biology metaphors for social epistemology are your cup of tea, you may find this discussion I had with ChatGPT interesting. 🍵

(Also, sorry for not optimizing this; but I rarely find time to write anything publishable, so I thought just sharing as-is was better than not sharing at all. I recommend the footnotes btw!)

Glossary/metaphors

Howea palm trees ↦ EA community

Wind-pollination ↦ “panmictic communication”

Ecological niches ↦ “epistemic niches”

Inbreeding depression ↦ echo chambers

Outbreeding depression (and Baker’s law) ↦ “Zollman-like effects”

At least sorta. There’s a host of mechanisms mostly sharing the same domain and effects with the more precisely-defined Zollman effect, and I’m saying “Zollman-like” to refer to the group of them. Probably I should find a better word.

Background

Once upon a time, the common ancestors of the palm trees Howea forsteriana and Howea belmoreana on Lord Howe Island would pollinate each other more or less uniformly during each flowering cycle. This was “panmictic” because everybody was equally likely to mix with everybody else.

Then there came a day when the counterfactual descendants had had enough. Due to varying soil profiles on the island, they all had to compromise between fitness for each soil type—or purely specialize in one and accept the loss of all seeds which landed on the wrong soil. “This seems inefficient,” one of them observed. A few of them nodded in agreement and conspired to gradually desynchronize their flowering intervals from their conspecifics, so that they would primarily pollinate each other rather than having to uniformly mix with everybody. They had created a cline.

And a cline, once established, permits the gene pools of the assortatively-pollinating palms to further specialize toward different mesa-niches within their original meta-niche. Given that a crossbreed between palms adapted for different soil types is going to be less adaptive for either niche,[1] you have a positive feedback cycle where they increasingly desynchronize (to minimize crossbreeding) and increasingly specialize. Solve for the general equilibrium and you get sympatric speciation.[2]

Notice that their freedom to specialize toward their respective mesa-niches is proportional to their reproductive isolation (or inversely proportional to the gene flow between them). The more panmictic they are, the more selection-pressure there is on them to retain 1) genetic performance across the population-weighted distribution of all the mesa-niches in the environment, and 2) cross-compatibility with the entire population (since you can’t choose your mates if you’re a wind-pollinating palm tree).[3]

From evo bio to socioepistemology

I love this as a metaphor for social epistemology, and the potential detrimental effects of “panmictic communication”. Sorta related to the Zollman effect, but more general. If you have an epistemic community that is trying to grow knowledge about a range of different “epistemic niches”, then widespread pollination (communication) is obviously good because it protects against e.g. inbreeding depression of local subgroups (e.g. echo chambers, groupthink, etc.), and because researchers can coordinate to avoid redundant work, and because ideas tend to inspire other ideas; but it can also be detrimental because researchers who try to keep up with the ideas and technical jargon being developed across the community (especially related to everything that becomes a “hot topic”) will have less time and relative curiosity to specialize in their focus area (“outbreeding depression”).

A particularly good example of this is the effective altruism community. Given that they aspire to prioritize between all the world’s problems, and due to the very high-dimensional search space generalized altruism implies, and due to how tight-knit the community’s discussion fora are (the EA forum, LessWrong, EAGs, etc.), they tend to learn about an extremely wide range of topics. I think this is awesome, and usually produces better results than narrow academic fields, but nonetheless there’s a tradeoff here.

The rather untargeted gene-flow implied by wind-pollination is a good match to the mostly-online meme-flow of the EA community. You might think that EAs will adequately speciate and evolve toward subniches due to the intractability of keeping up with everything, and indeed there are many subcommunities that branch into different focus areas. But if you take cognitive biases into account, and the constant desire people have to be *relevant* to the largest audience they can find (preferential attachment wrt hot topics), plus fear-of-missing-out, and fear of being “caught unaware” of some newly-developed jargon (causing people to spend time learning everything that risks being mentioned in live conversations[4]), it seems likely that they could benefit from smarter and more fractal ways to specialize their niches. Part of that may involve more “horizontally segmented” communication.

Tagging @Holly_Elmore because evobio metaphors are definitely your cup of tea, and a lot of it is inspired by stuff I first learned from you. Thanks! : )

- ^

Think of it like… if you’re programming something based on the assumption that it will run on Linux xor Windows, it’s gonna be much easier to reach a given level of quality compared to if you require it to be cross-compatible.

- ^

Sympatric speciation is rare because the pressure to be compatible with your conspecifics is usually quite high (Allee effects ↦ network effects). But it is still possible once selection-pressures from “disruptive selection” exceed the “heritage threshold” relative to each mesa-niche.[5]

- ^

This homogenization of evolutionary selection-pressures is akin to markets converging to an equilibrium price. It too depends on panmixia of customers and sellers for a given product. If customers are able to buy from anybody anywhere, differential pricing (i.e. trying to sell your product at above or below equilibrium price for a subgroup of customers) becomes impossible.

- ^

This is also known (by me and at least one other person...) as the “jabber loop”:

This highlight the utter absurdity of being afraid of having our ignorance exposed, and going ’round judging each other for what we don’t know. If we all worry overmuch about what we don’t know, we’ll all get stuck reading and talking about stuff in the Jabber loop. The more of our collective time we give to the Jabber loop, the more unusual it will be to be ignorant of what’s in there, which means the social punishments for Jabber-ignorance will get even harsher.

- ^

To take this up a notch: sympatric speciation occurs when a cline in the population extends across a separatrix (red) in the dynamic landscape, and the attractors (blue) on each side overpower the cohering forces from Allee effects (orange). This is the doodle I drew on a post-it note to illustrate that pattern in different context:

I dub him the mascot of bullshit-math. Isn’t he pretty?
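A toy version of that picture, under assumptions that are mine and not the comment’s: each individual’s trait x drifts under the double-well flow dx/dt = x − x³ (attractors at ±1, separatrix at 0), plus a hypothetical cohesion term c·(mean − x) standing in for the Allee-like pull toward the rest of the population. For any two individuals x and y, the gap between them changes at rate (1 − c − (x² + xy + y²)), so gaps always shrink when c > 1, while with c < 1 a cline straddling the separatrix can split.

```python
import math


def simulate(cohesion: float, steps: int = 3000, dt: float = 0.01) -> list[float]:
    """Euler-integrate dx_i/dt = x_i - x_i**3 + cohesion * (mean - x_i).

    Hypothetical toy model: x - x**3 has attractors at -1 and +1 and a
    separatrix at 0; the cohesion term pulls everyone toward the population mean.
    """
    # A symmetric cline straddling the separatrix: -0.5 ... -0.05, 0.05 ... 0.5
    half = [0.05 * (k + 1) for k in range(10)]
    pop = [-h for h in half] + half
    for _ in range(steps):
        mean = math.fsum(pop) / len(pop)
        pop = [x + dt * (x - x**3 + cohesion * (mean - x)) for x in pop]
    return pop


def spread(pop: list[float]) -> float:
    """Distance between the most extreme individuals."""
    return max(pop) - min(pop)


print(f"weak cohesion (c=0.5):   spread {spread(simulate(0.5)):.3f}")  # ~1.414: two clusters
print(f"strong cohesion (c=2.0): spread {spread(simulate(2.0)):.3f}")  # ~0.000: one cluster
```

With c = 0.5 the cline splits into two clusters near ±√(1 − c) ≈ ±0.707; with c = 2.0 everyone is pulled into a single cluster. It is a cartoon of the argument in the same spirit as the doodle, not a model of real palms.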

(Publishing comment-draft that’s been sitting here two years, since I thought it was good (even if super-unfinished…), and I may wish to link to it in future discussions. As always, feel free to not-engage and just be awesome. Also feel free to not be awesome, since awesomeness can only be achieved by choice (thus, awesomeness may be proportional to how free you feel to not be it).)

Yes! This relates to what I call costs of compromise.

Costs of compromise

As you allude to by the exponential decay of the green dots in your last graph, there are exponential costs to compromising what you are optimizing for in order to appeal to a wider variety of interests. On the flip-side, how usefwl to a subgroup you can expect to be is exponentially proportional to how purely you optimize for that particular subset of people (depending on how independent the optimization criteria are). This strategy is also known as “horizontal segmentation”.[1]

The benefits of segmentation ought to be compared against what is plausibly an exponential decay in the number of people who fit a marginally smaller subset of optimization criteria. So it’s not obvious in general whether you should on the margin try to aim more purely for a subset, or aim for broader appeal.

Specialization vs generalization

This relates to what I think is one of the main mysteries/trade-offs in optimization: specialization vs generalization. It explains why scaling your company can make it more efficient (economies of scale),[2] why the brain is modular,[3] and how Howea palm trees can speciate without the aid of geographic isolation (aka sympatric speciation constrained by genetic swamping) by optimising their gene pools for differentially-acidic patches of soil and evolving separate flowering intervals in order to avoid pollinating each other.[4]

Conjunctive search

When you search for a single thing that fits two or more criteria, that’s called “conjunctive search”. In the image, try to find an object that’s both [colour: green] and [shape: X].

My claim is that this analogizes to how your brain searches for conjunctive ideas: a vast array of preconscious ideas are selected from a distribution of distractors that score high in either one of the criteria.
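A small simulation of why conjunctive search is hard, assuming (purely for illustration) that each candidate gets two independent scores drawn uniformly from [0, 1], and that “scoring high” means landing in the top 10% on a criterion. Targets must clear the bar on both criteria; distractors clear it on exactly one.

```python
import random


def conjunctive_search(n: int = 100_000, cutoff: float = 0.9, seed: int = 0) -> tuple[int, int]:
    """Count targets (high on both criteria) vs distractors (high on exactly one).

    Hypothetical model: two independent uniform scores per candidate.
    """
    rng = random.Random(seed)
    targets = distractors = 0
    for _ in range(n):
        colour_match = rng.random() > cutoff   # e.g. [colour: green]
        shape_match = rng.random() > cutoff    # e.g. [shape: X]
        if colour_match and shape_match:
            targets += 1
        elif colour_match or shape_match:
            distractors += 1
    return targets, distractors


targets, distractors = conjunctive_search()
print(targets, distractors, round(distractors / targets, 1))
```

With a 10% bar on each criterion you should expect roughly 0.1 × 0.1 = 1% targets against roughly 2 × 0.1 × 0.9 = 18% distractors, i.e. about 18 near-misses per hit; each further independent criterion makes targets another factor of 10 rarer.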

10d6 vs 1d60

Preamble: When you throw ten 6-sided dice (written as “10d6”), the probability of getting the maximum roll is much lower than if you were throwing a single 60-sided die (“1d60”). But if we assume that the ten 6-sided dice are strongly correlated, that has the effect of flattening the bell-shaped distribution toward a uniform one, and you’re much more likely to roll extreme values.

Moral: Your probability of sampling extreme values from a distribution depends on the number of variables that make it up (i.e. how many factors are convolved together), and on the extent to which they are independent. Thus, costs of compromise are much steeper if you’re sampling for outliers (a realm which includes most creative thinking and altruistic projects).
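The dice claim can be checked exactly. This sketch computes the distribution of the sum of 10d6 by repeated convolution, and compares the chance of hitting the maximum of 60 against a single d60 and against the (hypothetical) extreme case where all ten d6 are perfectly correlated, i.e. always show the same face.

```python
from fractions import Fraction


def sum_distribution(n_dice: int, sides: int) -> dict[int, Fraction]:
    """Exact distribution of the sum of n independent fair dice, via convolution."""
    dist = {0: Fraction(1)}
    face = Fraction(1, sides)
    for _ in range(n_dice):
        nxt: dict[int, Fraction] = {}
        for total, p in dist.items():
            for roll in range(1, sides + 1):
                nxt[total + roll] = nxt.get(total + roll, Fraction(0)) + p * face
        dist = nxt
    return dist


independent_10d6 = sum_distribution(10, 6)[60]  # all ten dice must show a 6
single_d60 = Fraction(1, 60)                    # one face out of sixty
correlated_10d6 = Fraction(1, 6)                # one shared roll of 6 gives 60

print(f"10d6 independent: {float(independent_10d6):.2e}")  # 1.65e-08
print(f"1d60:             {float(single_d60):.2e}")        # 1.67e-02
print(f"10d6 correlated:  {float(correlated_10d6):.2e}")   # 1.67e-01
```

Perfect correlation turns 10d6 into a uniform distribution over {10, 20, …, 60}, the uniform-looking extreme the paragraph describes; partial correlation lands somewhere in between.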

Spaghetti-sauce fallacies 🍝

If you maximally optimize a single spaghetti sauce for profit, there exists a global optimum for some taste, quantity, and price. You might then declare that this is the best you can do, and indeed this is a common fallacy I will promptly give numerous examples of. [TODO…]

But if you instead allow yourself to optimize several different spaghetti sauces, each one tailored to a specific market, you can make much more profit compared to if you have to conjunctively optimize a single thing.

Thus, a spaghetti-sauce fallacy is when somebody asks “how can we optimize this one thing more for all of these criteria at once?” when they should be asking “how can we chunk/segment it into cohesive/dimensionally-reduced segments so we can optimize for each segment disjunctively?”

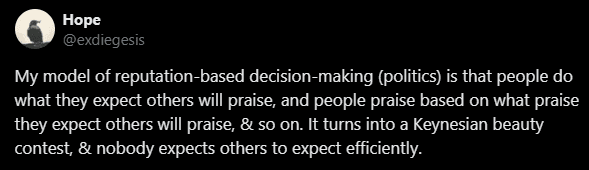
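A toy version of the fallacy, assuming (hypothetically) that each customer has an ideal “taste” on a 0–1 scale and loses satisfaction by the squared distance between that ideal and the sauce they buy. One sauce has to sit at a single compromise point; several sauces can each be optimized for their own segment.

```python
from statistics import mean


def total_loss(preferences: list[float], sauce: float) -> float:
    """Sum of squared distances between each customer's ideal taste and the sauce."""
    return sum((p - sauce) ** 2 for p in preferences)


# Hypothetical market with two clusters of customers (e.g. "plain" and "extra-chunky").
plain = [0.1, 0.15, 0.2]
chunky = [0.8, 0.85, 0.9]
everyone = plain + chunky

# Conjunctive: one sauce; under squared loss the optimum is the mean of everyone's ideals.
one_sauce = total_loss(everyone, mean(everyone))

# Disjunctive: one sauce per segment, each placed at its own segment's mean.
two_sauces = total_loss(plain, mean(plain)) + total_loss(chunky, mean(chunky))

print(f"one sauce:  loss {one_sauce:.3f}")
print(f"two sauces: loss {two_sauces:.3f}")
```

Here the single best-compromise sauce leaves a total loss of about 0.745, while two segment-specific sauces bring it down to about 0.01, even though the single sauce really is the global optimum *for one sauce*.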

People rarely vote based on usefwlness in the first place

As a sidenote: People don’t actually vote (/allocate karma) based on what they find usefwl. That’s a rare case. Instead, people overwhelmingly vote based on what they (intuitively) expect others will find usefwl. This rapidly turns into a Keynesian Status Contest with many implications. Information about people’s underlying preferences (or what they personally find usefwl) is lost as information cascades are amplified by recursive predictions. This explains approximately everything wrong about the social world.

Already in childhood, we learn to praise (and by extension vote) based on what kinds of praise other people will praise us for. This works so well as a general heuristic that it gets internalized and we stop being able to notice it as an underlying motivation for everything we do.

- ^

See e.g. spaghetti sauce.

- ^

Scale allows subunits (e.g. employees) to specialize at subtasks.

- ^

Every time a subunit of the brain has to pull double-duty with respect to what it adapts to, the optimization criteria compete for its adaptation—this is also known as “pleiotropy” in evobio, and “polytely” in… some ppl called it that and it’s a good word.

- ^

This palm-tree example (and others) are partially optimized/goodharted for seeming impressive, but I leave it in because it also happens to be deliciously interesting and possibly entertaining as examples of costs of compromise. I want to emphasize how ubiquitous this trade-off is.


- ^

I predict with high uncertainty that this post will have been very usefwl to me. Thanks!

Here’s a potential missing mood: if you read/skim a post and you don’t go “ugh that was a waste of time” or “wow that was worth reading”[1], you are failing to optimise your information diet and you aren’t developing intuition for what/how to read.

- ^

This is importantly different from going “wow that was a good/impressive post”. If you’re just tracking how impressed you are by what you read (or how useful you predict it is for others), you could be wasting your time on stuff you already know and/or agree with. Succinctly, you need to track whether your mind has changed—track the temporal difference.

- ^

[weirdness-filter: ur weird if you read m commnt n agree w me lol]

Doing private capabilities research seems not obviously net-bad, for some subcategories of capabilities research. It constrains your expectations about how AGI will unfold, meaning you have a narrower target for your alignment ideas (incl. strategies, politics, etc.) to hit. The basic case: If an alignment researcher doesn’t understand how gradient descent works, I think they’re going to be less effective at alignment. I expect this to generalise for most advances they could make in their theoretical understanding of how to build intelligences. And there’s no fundamental difference between learning the basics and doing novel research, as it all amounts to increased understanding in the end.

That said, it would in most cases be very silly to publish about that increased understanding, and people should be disincentivised from doing so.

(I’ll delete this comment if you’ve read it and you want it gone. I think the above can be very bad advice to give some poorly aligned selfish researchers, but I want reasonable people to hear it.)

EA: We should never trust ourselves to do act utilitarianism, we must strictly abide by a set of virtuous principles so we don’t go astray.

Also EA: It’s ok to eat animals as long as you do other world-saving work. The effort and sacrifice it would take to relearn my eating patterns just isn’t worth it on consequentialist grounds.

Sorry for the strawmanish meme format. I realise people have complex reasons for needing to navigate their lives the way they do, and I don’t advocate aggressively trying to make other people stop eating animals. The point is just that I feel like the seemingly universal disavowal of utilitarian reasoning has been insufficiently vetted for consistency. If we claim that utilitarian reasoning can be blamed for the FTX catastrophe, then we should ask ourselves what else we should apply that lesson to; or we should recognise that FTX isn’t a strong counterexample to utilitarianism, and we can still use it to make important decisions.

(I realised after I wrote this that the metaphor between brains and epistemic communities is less fruitfwl than I seem to suggest, but it’s still a helpfwl frame for understanding the differences, so I’m posting it here. ^^)

TL;DR: I think people should consider searching for giving opportunities in their networks, because a community that efficiently capitalises on insider information may end up doing more efficient and more varied research. There are, as you would expect, both problems and advantages to this, but it definitely seems good to encourage on the margin.

Some reasons to prefer decentralised funding and insider trading

I think people are too worried about making their donations appear justifiable to others. And what people expect will appear justifiable to others is based on the most visibly widespread evidence they can think of.[1] It just so happens that that is also the basket of information that everyone else bases their opinions on as well. The net effect is that a lot less information gets considered in total.

Even so, there are very good reasons to defer to consensus among people who know more, not act unilaterally, and be epistemically humble. I’m not arguing that we shouldn’t take these considerations into account. What I’m trying to say is that even after you’ve given them adequate consideration, there are separate social reasons that could make it tempting to defer, and we should keep this distinction in mind so we don’t handicap ourselves just to fit in.

Consider the community from a bird’s eye perspective for a moment. Imagine zooming out, and seeing EA as a single organism. Information goes in, and causal consequences go out. Now, what happens when you make most of the little humanoid neurons mimic their neighbours in proportion to how many neighbours they have doing the same thing?

What you end up with is a Matthew effect not only for ideas, but also for the bits of information that get promoted to public consciousness. Imagine ripples of information flowing in only to be suppressed at the periphery, way before they’ve had a chance to be adequately processed. Bits of information accumulate trust in proportion to how much trust they already have, and there are no well-coordinated checks that can reliably abort a cascade past a point.
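The “trust in proportion to existing trust” dynamic is essentially a Pólya-urn (preferential-attachment) process. A minimal sketch, assuming (hypothetically) 20 bits of information that each start with one unit of trust, where every new endorsement goes to a bit with probability proportional to the trust it already has:

```python
import random


def trust_cascade(n_items: int = 20, endorsements: int = 10_000, seed: int = 1) -> list[int]:
    """Pólya-urn sketch: each endorsement picks an item with probability
    proportional to the trust that item already has (rich get richer).
    Returns the final trust levels, largest first."""
    rng = random.Random(seed)
    trust = [1] * n_items
    for _ in range(endorsements):
        winner = rng.choices(range(n_items), weights=trust, k=1)[0]
        trust[winner] += 1
    return sorted(trust, reverse=True)


shares = trust_cascade()
print(shares[:3], shares[-3:])
```

The items are identical by construction, so whichever ends up on top got there through early luck rather than merit; rerunning with a different `seed` crowns a different winner, and once the early shares are set they tend to lock in.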

To be clear, this isn’t how the brain works. The brain is designed very meticulously to ensure that only the most surprising information gets promoted to universal recognition (“consciousness”). The signals that can already be predicted by established paradigms are suppressed, and novel information gets passed along with priority.[2] While it doesn’t work perfectly for all things, consider just the fact that our entire perceptual field gets replaced instantly every time we turn our heads.

And because neurons have been harshly optimised for their collective performance, they show a remarkable level of competitive coordination aimed at making sure there are no informational short-circuits or redundancies.
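For contrast, a crude sketch of the brain-like alternative, loosely in the spirit of predictive coding. The threshold and the running-average “prediction” are hypothetical choices of mine, not a model of real neurons: a layer forwards only the part of a signal that its current prediction failed to anticipate.

```python
def forward_surprises(
    signals: list[float], threshold: float = 0.5, rate: float = 0.5
) -> list[tuple[int, float]]:
    """Pass along only prediction errors larger than `threshold`.

    The 'prediction' is a hypothetical exponential moving average of past inputs.
    Returns (time step, prediction error) for each signal that gets promoted.
    """
    prediction = signals[0] if signals else 0.0
    promoted = []
    for t, x in enumerate(signals):
        error = x - prediction
        if abs(error) > threshold:
            promoted.append((t, error))   # surprising: forward it upstream
        prediction += rate * error        # either way, update the prediction
    return promoted


# A steady signal with one sudden change.
print(forward_surprises([1.0, 1.0, 1.0, 3.0, 3.0, 3.0, 3.0]))  # [(3, 2.0), (4, 1.0)]
```

The steady stretches are suppressed entirely; only the jump (plus one step while the prediction catches up) gets promoted, regardless of how many times the same value has already been seen.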

Returning to the societal perspective again, what would it look like if the EA community were arranged in a similar fashion?

I think it would be a community optimised for the early detection and transmission of market-moving information, which in a finance context refers to information that would cause any reasonable investor to immediately make a decision upon hearing it. In the case where, for example, someone invests in a company because they’re friends with the CEO and received private information, it’s called “insider trading” and is illegal in many countries.

But it’s not illegal for altruistic giving! Funding decisions based on highly valuable information only you have access to is precisely the thing we’d want to see happening.

If, say, you have a friend who’s trying to get time off from work in order to start a project, but no one’s willing to fund them because they’re a weird-but-brilliant dropout with no credentials, you may have insider information about their trustworthiness. That kind of information doesn’t transmit very readily, so if we insist on centralised funding mechanisms, we’re unknowingly losing out on all those insider trading opportunities.

Where the architecture of the brain efficiently promotes the most novel information to consciousness for processing, EA has the problem where unusual information doesn’t even pass the first layer.

(I should probably mention that there are obviously biases that come into play when evaluating people you’re close to, and they could easily interfere with good judgment. It’s a crucial consideration. I’m mainly presenting the case for decentralisation here, since centralisation is the default, so I urge you to keep some skepticism in mind.)

There is no way around having to make trade-offs here. One reason to prefer a central team of highly experienced grant-makers doing most of the funding is that they’re likely to be better at evaluating impact opportunities. But this needn’t matter much if they’re bottlenecked by bandwidth, both in terms of having less information reach them and in terms of having less time available to analyse what does come through.[3]

On the other hand, if you believe that most of the relevant market-moving information in EA is already being captured by relevant funding bodies, then their ability to separate the wheat from the chaff may be the dominating consideration.

While I think the above considerations make a strong case for encouraging people to look for giving opportunities in their own networks, I think they apply with greater force to adopting a model like impact markets.

They’re a sort of compromise between central and decentralised funding. The idea is that everyone has an incentive to fund individuals or projects where they believe they have insider information indicating that the project will show itself to be impactfwl later on. If the projects they opportunistically funded at an early stage do end up producing a lot of impact, a central funding body rewards the maverick funder by “purchasing the impact” second-hand.

Once a system like that is up and running, people can reliably expect the retroactive funders to make it worth their while to search for promising projects. And when people are incentivised to locate and fund projects at their earliest bottlenecks, the community could end up capitalising on a lot more (insider) information than would be possible if everything had to be evaluated centrally.
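Here’s a back-of-the-envelope sketch of why the incentive points the right way, assuming the simplest possible version: the retroactive funder buys realised impact at a fixed price per unit, and the early funder holds a fixed share of the project’s impact certificate. The names `EarlyFunding`, `retroactive_payout` and `profit` and all the numbers are hypothetical; real impact-market proposals differ in many details.

```python
from dataclasses import dataclass


@dataclass
class EarlyFunding:
    """A maverick funder's early bet on a project (all figures hypothetical)."""
    amount_paid: float      # what the early funder paid up front
    share_of_impact: float  # fraction of the project's impact certificate they hold (0..1)


def retroactive_payout(bet: EarlyFunding, realised_impact: float, price_per_unit: float) -> float:
    """What the central retroactive funder pays for the early funder's share.

    Assumes impact is bought second-hand at a fixed price per unit of realised impact.
    """
    return bet.share_of_impact * realised_impact * price_per_unit


def profit(bet: EarlyFunding, realised_impact: float, price_per_unit: float) -> float:
    """Net gain for the early funder once the retroactive purchase happens."""
    return retroactive_payout(bet, realised_impact, price_per_unit) - bet.amount_paid


bet = EarlyFunding(amount_paid=10_000, share_of_impact=0.5)
good_call = profit(bet, realised_impact=100, price_per_unit=500)  # project turns out impactful
bad_call = profit(bet, realised_impact=10, price_per_unit=500)    # project mostly fizzles
```

With these made-up numbers the good call returns 25,000 for a 10,000 outlay, while the bad call loses 7,500. The early funder only comes out ahead if their insider information was actually good, which is exactly the kind of information we want them to act on.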

(There are, of course, more complexities to this, and you can check out previous discussions on the forum.)

- ^

This doesn’t necessarily mean that people defer to the most popular beliefs, but rather that even if they do their own thinking, they’re still reluctant to use information that other people don’t have access to, so it amounts to nearly the same thing.

- ^

This is sometimes called predictive processing. Sensory information comes in and gets passed along through increasingly conceptual layers. Higher-level layers are successively trying to anticipate the information coming in from below, and if they succeed, they just aren’t interested in passing it along.

(Imagine if it were the other way around, and neurons were increasingly shy to pass along information in proportion to how confused or surprised they were. What a brain that would be!)
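Here’s a toy sketch of the idea in this footnote, assuming a single layer that keeps a running prediction of its input, forwards only the prediction error, and stays silent when that error is small. `Layer`, `learning_rate` and `threshold` are hypothetical names and parameters; real predictive-processing models are hierarchical and far richer.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Toy predictive-processing layer (an illustration, not a model of real cortex)."""
    learning_rate: float = 0.5
    threshold: float = 0.1
    prediction: float = 0.0

    def step(self, signal: float):
        error = signal - self.prediction
        # Nudge the prediction toward what was actually observed.
        self.prediction += self.learning_rate * error
        # Only surprising input (a large prediction error) is passed upward.
        return error if abs(error) > self.threshold else None


def run(signals, layer):
    """Feed a sequence of signals through the layer; None means 'nothing passed along'."""
    return [layer.step(s) for s in signals]


# A steady signal that suddenly jumps from 1.0 to 5.0.
outputs = run([1.0] * 8 + [5.0] * 8, Layer())
```

On the steady signal the layer goes quiet after a few steps; when the signal jumps it fires again with a large error, then quiets down as its prediction catches up. Flip the condition to forward only small errors and you get the shy-neuron brain from the parenthetical.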

- ^

As an extreme example of how bad this can get, an Australian study on medical research funding estimated the length of average grant proposals to be “between 80 and 120 pages long and panel members are expected to read and rank between 50 and 100 proposals. It is optimistic to expect accurate judgements in this sea of excessive information.” (Herbert et al., 2013)

Luckily it’s nowhere near as bad for EA research, but consider the Australian case a clear example of how a funding process can be undeniably and extremely misaligned with the goal of producing good research.


Hm, I think you may be reading the comment from a perspective of “what actions do the symbols refer to, and what would happen if readers did that?” as opposed to “what are the symbols going to cause readers to do?”[1]

The kinds of people who are able to distinguish adequate from inadequate judgment shouldn’t be encouraged to defer to conventional signals of expertise. But those are also disproportionately the people who, instead of feeling like deferring to Eliezer’s comment, will respond “I agree, but...”

- ^

For lack of a better term, and because there should be a term for it: Dan Sperber calls this the “cognitive causal chain”, and contrasts it with the confabulated narratives we often have for what we do. I think it summons up the right image.

When you read something, aspire to always infer what people intend based on the causal chains that led them to write that. Well, no. Not quite. Instead, aspire to always entertain the possibility that the author’s consciously intended meaning may be inferred from what the symbols will cause readers to do. Well, I mean something along these lines. The point is that if you do this, you might discover a genuine optimiser in the wild. : )


Ideally, EigenTrust or something similar should be able to help with regranting once it takes off, no? : )
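For anyone unfamiliar: EigenTrust was originally designed for reputation in peer-to-peer networks. Each person’s local trust in others is row-normalised, and global trust is what you get from repeatedly asking “who do the people I trust trust?”, mixed with a small weight on a set of pre-trusted peers. Below is a minimal pure-Python sketch of that power iteration; the damping value `alpha`, the example trust numbers, and the idea of using the resulting scores to weight regranting budgets are all assumptions for illustration.

```python
def eigentrust(local_trust, pretrusted, alpha=0.15, iterations=200, tol=1e-12):
    """Global trust scores via EigenTrust-style power iteration (a sketch).

    local_trust[i][j]: how much person i trusts person j (negatives clipped to 0).
    pretrusted: indices of people assumed trustworthy up front.
    alpha: weight on the pre-trusted distribution (assumed value).
    Returns a list of scores that sums to 1.
    """
    n = len(local_trust)
    p = [1.0 / len(pretrusted) if i in pretrusted else 0.0 for i in range(n)]

    # Row-normalise clipped local trust; people who trust no one defer to p.
    c = []
    for i in range(n):
        row = [max(x, 0.0) for x in local_trust[i]]
        total = sum(row)
        c.append([x / total for x in row] if total > 0 else list(p))

    t = list(p)
    for _ in range(iterations):
        # t_j <- (1 - alpha) * sum_i c_ij * t_i + alpha * p_j
        new_t = [(1 - alpha) * sum(c[i][j] * t[i] for i in range(n)) + alpha * p[j]
                 for j in range(n)]
        if max(abs(x - y) for x, y in zip(new_t, t)) < tol:
            return new_t
        t = new_t
    return t


# Hypothetical example: persons 0 and 1 both trust person 2 far more than each other.
scores = eigentrust([[0, 1, 4], [1, 0, 4], [1, 1, 0]], pretrusted=[0])
```

The useful property for regranting is that trust flows through the network: person 2 ends up with the highest score without being pre-trusted, purely because the people who are trusted vouch for them.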

Really intrigued by the idea of debates! I was reluctant about the concept at first, because what I associate with “debates” usually comes from politics, religious disputes, debating contests, etc., where the debaters often lack so much essential internal epistemic infrastructure that the debating format just makes things worse. Rambly, before I head off to bed:

Conditional on it being good for EA to have more of a debating culture, how would we practically go about bringing that about?

I wonder if EA Global features debates. I haven’t seen any. It’s mostly just people agreeing with each other and perhaps adding some nuance.

You don’t need people to be hostile towards each other for it to qualify as “debate”, but I do think one of the key benefits of debates is that the disagreement is visible.

For one, it primes the debaters to home in on disagreements, whereas EA in-groups are perhaps overly primed to find agreement with each other in order to be nice.

Making disagreements more visible will hopefwly dispel the illusion that EA as a paradigm is “mostly settled”, and get people to question assumptions. This isn’t always the best course of action, but I think it’s still very needed on the margin, and I could get into why if asked.

If the debate (and the mutually agreed-upon mindset of trying to find each other’s weakest points) is handled well, it can make onlookers feel that disagreeing head-on is more okay. I think we’re mostly a nice community, reluctant to step on toes, so if we don’t see any real disagreements, we might start to feel like the absence of disagreement is the polite thing to do.

A downside risk is that debating culture is often steeped in the “world of arguments”, or as Nate Soares put it: “The world is not made of arguments. Think not ‘which of these arguments, for these two opposing sides, is more compelling? And how reliable is compellingness?’ Think instead of the objects the arguments discuss, and let the arguments guide your thoughts about them.”

We shouldn’t be adopting mainstream debating norms; they won’t do anything for us. What I’m excited about is the idea of making spaces for good-natured visible disagreements where people are encouraged to attack each other’s weakest points. I don’t think that mindset comes about naturally, so it could make sense to deliberately make room for it.

Also, if you want people to debate you, maybe you should make a shortlist of the top things you feel would be productive to debate you on. : )

Just wanted to say, I love the 500-word limit. A contest that doesn’t goodhart on effort moralization!

Principles are great! I call them “stone-tips”. My latest one is:

It’s one of my favorites. ^^ It basically very-sorta translates to bikeshedding (idionym: “margin-fuzzing”), procrastination paradox (idionym: “marginal-choice trap” + attention selection history + LDT), and information cascades / short-circuits / double-counting of evidence… but a lot gets lost in translation. Especially the cuteness.

The stone-tip closest to my heart, however, is:

I think EA is basically sorta that… but a lot gets confusing in implementation.