I work primarily on AI Alignment. My main direction at the moment is to accelerate alignment work via language models and interpretability.

jacquesthibs

I shared the following as a bio for EAG Bay Area 2024. I’m sharing this here if it reaches someone who wants to chat or collaborate.

Hey! I’m Jacques. I’m an independent technical alignment researcher with a background in physics and experience in government (social innovation, strategic foresight, mental health and energy regulation). Link to Swapcard profile. Twitter/X.

CURRENT WORK

Collaborating with Quintin Pope on our Supervising AIs Improving AIs agenda (making automated AI science safe and controllable). The current project involves a new method allowing unsupervised model behaviour evaluations. Our agenda.

I’m a research lead in the AI Safety Camp for a project on stable reflectivity (testing models for metacognitive capabilities that impact future training/alignment).

Accelerating Alignment: augmenting alignment researchers using AI systems. A relevant talk I gave. Relevant survey post.

Other research that currently interests me: multi-polar AI worlds (and how that impacts post-deployment model behaviour), understanding-based interpretability, improving evals, designing safer training setups, interpretable architectures, and limits of current approaches (what would a new paradigm that addresses these limitations look like?).

Used to focus more on model editing, rethinking interpretability, causal scrubbing, etc.

TOPICS TO CHAT ABOUT

How do you expect AGI/ASI to actually develop (so we can align our research accordingly)? Will scale plateau? I’d like to get feedback on some of my thoughts on this.

How can we connect the dots between different approaches? For example, connecting the dots between Influence Functions, Evaluations, Probes (detecting truthful direction), Function/Task Vectors, and Representation Engineering to see if they can work together to give us a better picture than the sum of their parts.

Debate over which agenda actually contributes to solving the core AI x-risk problems.

What if the pendulum swings in the other direction, and we never get the benefits of safe AGI? Is open source really as bad as people make it out to be?

How can we make something like the d/acc vision (by Vitalik Buterin) happen?

How can we design a system that leverages AI to speed up progress on alignment? What would you value the most?

What kinds of orgs are missing in the space?

POTENTIAL COLLABORATIONS

Examples of projects I’d be interested in: extending either the Weak-to-Strong Generalization paper or the Sleeper Agents paper, understanding the impacts of synthetic data on LLM training, working on ELK-like research for LLMs, experiments on influence functions (studying the base model and its SFT, RLHF, iterative training counterparts; I heard that Anthropic is releasing code for this “soon”) or studying the interpolation/extrapolation distinction in LLMs.

I’m also interested in talking to grantmakers for feedback on some projects I’d like to get funding for.

I’m slowly working on a guide for practical research productivity for alignment researchers to tackle low-hanging fruit that can quickly improve productivity in the field. I’d like feedback from people with solid track records and productivity coaches.

TYPES OF PEOPLE I’D LIKE TO COLLABORATE WITH

Strong math background, can understand Influence Functions enough to extend the work.

Strong machine learning engineering background. Can run ML experiments and fine-tuning runs with ease. Can effectively create data pipelines.

Strong application development background. I have various project ideas that could speed up alignment researchers; I’d be able to execute them much faster if I had someone to help me build my ideas fast.

Another data point: I got my start in alignment through the AISC. I had just left my job, so I spent 4 months skilling up and working hard on my AISC project. I started hanging out on EleutherAI because my mentors spent a lot of time there. This led me to do AGISF in parallel.

After those 4 months, I attended MATS 2.0 and 2.1. I’ve been doing independent research for ~1 year and have about 8.5 more months of funding left.

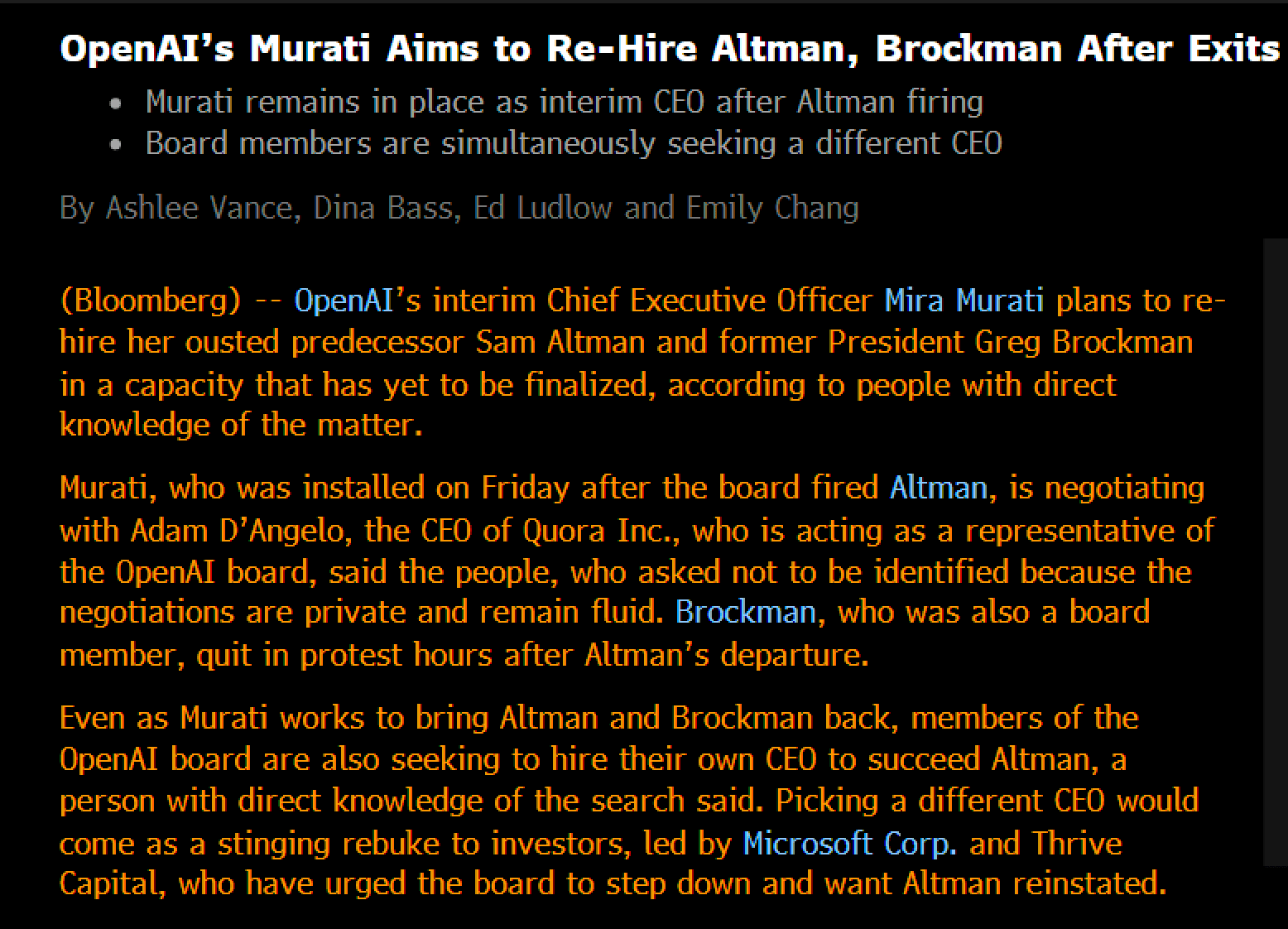

Update: board members seem to be holding their ground more than expected in this tight situation:

My current speculation as to what is happening at OpenAI

How do we know this wasn’t their best opportunity to strike if Sam was indeed not being totally honest with the board?

Let’s say the rumours are true, that Sam is building out external orgs (NVIDIA competitor and iPhone-like competitor) to escape the power of the board and potentially go against the charter. Would this ‘conflict of interest’ be enough? If you take that story forward, it sounds more and more like he was setting up AGI to be run by external companies, using OpenAI as a fundraising bargaining chip, and having a significant financial interest in plugging AGI into those outside orgs.

So, if we think about this strategically, how long should they wait as board members who are trying to uphold the charter?

On top of this, it seems (according to Sam) that OpenAI has made a significant transformer-level breakthrough recently, which implies a significant capability jump. Long-term reasoning? Basically, anything short of ‘coming up with novel insights in physics’ is on the table, given that Sam recently used that line as the line we need to cross to get to AGI.

So, it could be a mix of: Ilya thinking they have achieved AGI while Sam places a higher bar (internal communication disagreements) + the board not being alerted (maybe more than once) about what Sam is doing, e.g. fundraising for both OpenAI and the orgs he wants to connect AGI to + new board members who are more willing to let Sam and GDB do what they want being added soon (another rumour I’ve heard) + ???. Basically, perhaps they saw this as their final opportunity to have any veto on actions like this.

Here’s what I currently believe:

There is a GPT-5-like model that already exists. It could be GPT-4.5 or something else, but another significant capability jump. Potentially even a system that can coherently pursue goals for months, capable of continual learning, and effectively able to automate like 10% of the workforce (if they wanted to).

As of 5 PM, Sunday PT, the board is in a terrible position where they either stay on the board and the company employees all move to a new company, or they leave the board and bring Sam back. If they leave, they need to say that Sam did nothing wrong and sweep everything under the rug (and then potentially face legal action for saying he did something wrong); otherwise, Sam won’t come back.

Sam is building companies externally; it is unclear if this goes against the charter. But he does now have a significant financial incentive to speed up AI development. Adam D’Angelo said that he would like to prevent OpenAI from becoming a big tech company as part of his time on the board because AGI was too important for humanity. They might have seen Sam’s actions as going in this direction.

A few people left the board in the past year. It’s possible that Sam and GDB planned to add new people (possibly even change current board members) to the board to dilute the voting power a bit or at least refill board seats. This meant that the current board had limited time until their voting power would become less important. They might have felt rushed.

The board is either not speaking publicly because 1) they can’t share information about GPT-5, 2) there is some legal reason that I don’t understand (more likely), or 3) they are incompetent (least likely by far IMO).

We will possibly never find out what happened, or it will become clearer by the month as new things come out (companies and models). However, it seems possible the board will never say or admit anything publicly at this point.

Lastly, we still don’t know why the board decided to fire Sam. It could be any of the reasons above, a mix or something we just don’t know about.

Other possible things:

Ilya was mad that they wouldn’t actually get enough compute for Superalignment as promised due to GPTs and other products using up all the GPUs.

Ilya is frustrated that Sam is focused on things like GPTs rather than the ultimate goal of AGI.

Quillette founder seems to be planning to write an article regarding EA’s impact on tech:

“If anyone with insider knowledge wants to write about the impact of Effective Altruism in the technology industry please get in touch with me claire@quillette.com. We pay our writers and can protect authors’ anonymity if desired.”

It would probably be impactful if someone in the know provided a counterbalance to whoever will undoubtedly email her to disparage EA with half-truths/lies.

To share another perspective: As an independent alignment researcher, I also feel really conflicted. I could be making several multiples of my salary if my focus was to get a role on an alignment team at an AGI lab. My other option would be building startups trying to hit it big and providing more funding to what I think is needed.

Like, I could say, “well, I’m already working directly on something and taking a big pay-cut so I shouldn’t need to donate close to 10%”, but something about that doesn’t feel right… But then to counter-balance that, I’m constantly worried that I just won’t get funding anymore at some point and would be in need of money to pay for expenses during a transition.

I’ve also started working on a repo in order to make Community Notes more efficient by using LLMs.

Don’t forget that we train language models on the internet! The more truthful your dataset is, the more truthful the models will be! Let’s revamp the internet for truthfulness, and we’ll subsequently improve truthfulness in our AI systems!!

I shared a tweet about it here: https://x.com/JacquesThibs/status/1724492016254341208?s=20

Consider liking and retweeting it if you think this is impactful. I’d like it to get into the hands of the right people.

If you work at a social media website or YouTube (or know anyone who does), please read the text below:

Community Notes is one of the best features to come out on social media apps in a long time. The code is even open source. Why haven’t other social media websites picked it up yet? If they care about truth, this would be a considerable step forward. Messages like “this video is funded by x nation” or “this video talks about health info; go here to learn more” are simply not good enough.

If you work at companies like YouTube or know someone who does, let’s figure out who we need to talk to to make it happen. Naïvely, you could spend a weekend DMing a bunch of employees (PMs, engineers) at various social media websites in order to persuade them that this is worth their time and probably the biggest impact they could have in their entire career.

If you have any connections, let me know. We can also set up a doc of messages to send in order to come up with a persuasive DM.

Attempt to explain why I think AI systems are not the same thing as a library card when it comes to bio-risk.

To focus on less of an extreme example, I’ll be ignoring the case where AI can create new, more powerful pathogens faster than we can create defences, though I think this is an important case (some people just don’t find it plausible because it relies on the assumption that AIs will be able to create new knowledge).

I think AI Safety people should make more of an effort to walk through the threat model, so I’ll give a quick first try:

1) Library. If I’m a terrorist and I want to build a bioweapon, I have to spend several months reading books at minimum to understand how it all works. I don’t have any experts on-hand to explain how to do it step-by-step. I have to figure out which books to read and in what sequence. I have to look up external sources to figure out where I can buy specific materials.

Then, I have to somehow find out how to gain access to those materials (this is the most difficult part for each case). Once I gain access to the materials, I still need to figure out how to make things work as a total noob at creating bioweapons. I will fail. Even experts fail. So, it will take many tries to get it right, and even then, there are tricks of the trade I’ll likely be unaware of no matter which books I read. Either it’s not in a book or it’s incredibly hard to find, so I’ll basically never find it.

All this while needing a high enough degree of intelligence and competence.

2) AI agent system. You pull up your computer and ask for a synthesized step-by-step plan on how to cause the most death or ways to cripple your enemy. Many agents search through books and the internet while also using latent knowledge about the subject. It tells you everything you truly need to know in a concise 4-page document.

Relevant theory, practical steps (laid out with images and videos on how to do it), what to buy and where/how to buy it, pre-empting any questions you may have, explaining the jargon in a way that is understandable to nearly anyone, can take actions on the web to automatically buy all the supplies you need, etc.

You can even share photos of the entire process to your AI as it continues to guide you through the creation of the weapon because it’s multi-modal.

You can basically outsource all cognition to the AI system, allowing you to be the lazy human you are (we all know that humans will take the path of least resistance or abandon something altogether if there is enough friction).

That topic you always said you wanted to know more about but never got around to it? No worries, your AI system has lowered the bar sufficiently that the task doesn’t seem as daunting anymore and laziness won’t be in the way of you making progress.

Conclusion: a future AI system will have the power of efficiency (significantly faster) and capability (able to make more powerful weapons than any one person could do on their own). It has the interactivity that Google and libraries don’t have. It’s just not the same as information scattered in different sources.

I’m working on an ultimate doc on productivity that I plan to share, written specifically to make things easy for alignment researchers.

Let me know if you have any comments or suggestions as I work on it.

Roam Research link for an easier time reading.

Google Docs link in case you want to leave comments there.

From what I understand, Amazon does not get a board seat for this investment. Figured that should be highlighted. Seems like Amazon just gets to use Anthropic’s models and maybe make back their investment later on. Am I understanding this correctly?

As part of the investment, Amazon will take a minority stake in Anthropic. Our corporate governance structure remains unchanged, with the Long Term Benefit Trust continuing to guide Anthropic in accordance with our Responsible Scaling Policy. As outlined in this policy, we will conduct pre-deployment tests of new models to help us manage the risks of increasingly capable AI systems.

I would, however, not downplay their talent density.

Fantastic news. Note: don’t forget to share it on LessWrong too.

Thanks for sharing. I think the above are examples of things people often don’t think of when trying new ways to be more productive. Instead, the default is trying out new productivity tools and systems (which might also help!). Environment and being in a flux period can totally change your behaviour in the long term; sometimes, it’s the only way to create lasting change.

When I first was looking into being veg^n, I became irritated by the inflated reviews at veg^n restaurants. It didn’t take me long to apply a veg^n tax; I started to assume the restaurant’s food was 1 star below what their average was. Made me more distrustful of veg^ns too.

I think using virtue ethics is the right call here: just be truthful.

Perfect, thanks!

lol

I’m currently trying to think of project/startup ideas in the space of d/acc. If anyone would like to discuss ideas on how to do this kind of work outside of AGI labs, send me a DM.

Note that Entrepreneurship First will be running a cohort of new founders focused on d/acc for AI.