I recently heard the Radio Bostrom audio version of the Unilateralist’s Curse after only having read it before. Something about the narration made me think that it lends itself very well to an explainer video.

Tobias Häberli

[Edit after months: While I still believe these are valid questions, I now think I was too hostile, overconfident, and not genuinely curious enough.] One additional thing I’d be curious about:

You played the role of a messenger between SBF and Elon Musk in a bid for SBF to invest up to 15 billion of (presumably mostly his) wealth in an acquisition of Twitter. The stated reason for that bid was to make Twitter better for the world. This has worried me a lot over the last few weeks. It could easily have been the most consequential thing EAs have ever done, and there has, to my knowledge, never been a thorough EA debate that signalled that this would be a good idea.

What was the reasoning behind the decision to support SBF by connecting him to Musk? How many people from FTXFF or EA at large were consulted to figure out if that was a good idea? Do you think that it still made sense at the point you helped with the potential acquisition to regard most of the wealth of SBF as EA resources? If not, why did you not inform the EA community?

Source for claim about playing a messenger: https://twitter.com/tier10k/status/1575603591431102464?s=20&t=lYY65-TpZuifcbQ2j2EQ5w

I think that it’s supposed to be Peter Thiel (right) and Larry Page (top) in the cover photo. They are mentioned in the article, are very rich and look to me more like the drawings.

Release shocking results of an undercover investigation ~2 weeks before the vote. Maybe this could have led to a 2-10% increase?

My understanding is that they did try to do this with an undercover investigation report on poultry farming. But it was only in the news for a very short time and, I’m guessing, didn’t have a large effect.

A further thing that might have helped: show clearly how the initiative would have improved animal welfare.

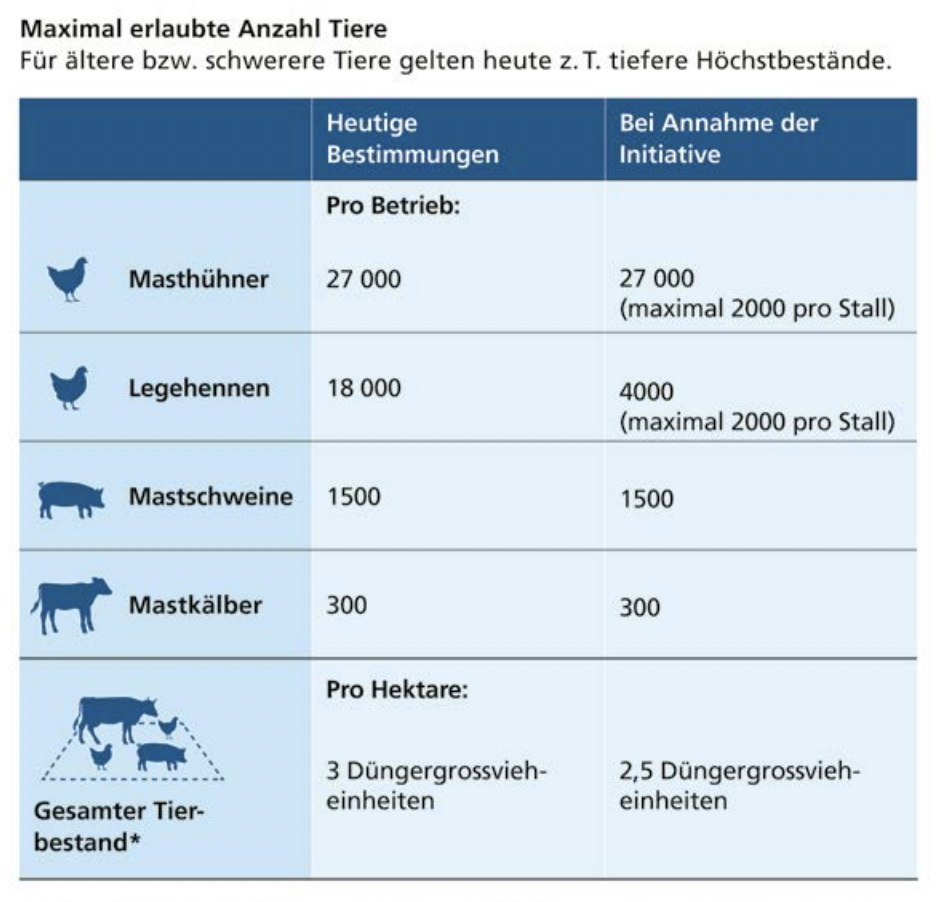

The whole campaign was a bit of a mess in this regard. In the “voter information booklet” the only clearly understandable improvement was about maximum livestock numbers, which only affected laying hens. This led to this underwhelming infographic in favour of the initiative [left column: current standards, right column: standards if initiative passes].

The initiative committee does claim on their website that the initiative will lead to more living space for farmed animals. But it never advertised how much. I struggled to find the space requirement information with a quick Google search, before a national newspaper reported on it.

I looked into evidence for the quote you posted for one hour. While I think the phrasing is inaccurate, I’d say the gist of the quote is true. For example, it’s pretty understandable that people jump from “Emile Torres says that Nick Beckstead supports white supremacy” to “Emile Torres says that Nick Beckstead is a white supremacist”.

White Supremacy:

In a public Facebook post you link to this public Google Doc where you call a quote from Nick Beckstead “unambiguously white-supremacist”.

You reinforce that view in a later tweet:

https://twitter.com/xriskology/status/1509948468571381762

You claim that the writing of Bostrom, Beckstead, Ord, Greaves, etc. is “very much about the preservation of white Western civilization”:

https://twitter.com/xriskology/status/1527250704313856000

You also tweeted about a criticism of Hilary Greaves in which you “see white supremacy all over it”:

https://twitter.com/xriskology/status/1229107714015604736

Genocide:

In another Facebook post you agree with Olle Häggström [note: Häggström actually strongly disagrees with this characterization of their position] that Bostrom’s idea of transhumanism and utilitarianism in Letter from Utopia “is a recipe for moral disaster—for genocide, white supremacy, and so on.”

Eugenics:

In your Salon article you call some of Bostrom’s ideas “straight out of the handbook of eugenics”.

https://www.salon.com/2022/08/20/understanding-longtermism-why-this-suddenly-influential-philosophy-is-so/

You reinforce this view in the following tweet:

https://twitter.com/xriskology/status/1562003541539037186

You also say that “Longtermism is deeply rooted in the ideology of eugenics”.

https://twitter.com/xriskology/status/1557338332702572545

Racism:

You called Sam Harris “quite racist”:

https://twitter.com/xriskology/status/1384425549091774466

In this tweet you strongly imply that some of Bostrom’s views are indistinguishable from scientific racism:

https://twitter.com/xriskology/status/1569365203049140224

There’s also this tweet that describes the EA community as welcoming to misogynists, neoreactionaries, and racists:

https://twitter.com/xriskology/status/1510708370285776902

Toby Ord touches on that in The Precipice.

For example here (at 11:40)

[link post] How effective altruism went from a niche movement to a billion-dollar force

But the same study also found that only 41% of respondents from the general population placed AI becoming more intelligent than humans into the ‘first 3 risks of concern’ out of a choice of 5 risks.

Only for 12% of respondents was it the biggest concern. ‘Opinion leaders’ were again more optimistic – only 5% of them thought AI intelligence surpassing human intelligence was the biggest concern.

Question: “Which of the potential risks of the development of artificial intelligence concerns you the most? And the second most? And the third most?”

Option 1: The risks related to personal security and data protection.

Option 2: The risk of misinterpretation by machines.

Option 3: Loss of jobs.

Option 4: Artificial intelligence that surpasses human intelligence.

Option 5: Others

I recently found a Swiss AI survey that indicates that many people do care about AI.

[This is only very weak evidence against your thesis, but might still interest you 🙂.]

Sample size:

Population – 1245 people

Opinion Leaders – 327 people [from the economy, public administration, science and education]

The question:

“Do you fear the emergence of an “artificial super-intelligence”, and that robots will take power over humans?”

From the general population, 11% responded “Yes, very”, and 37% responded “Yes, a bit”.

So, half of the respondents (that expressed any sentiment) were at least somewhat worried.

The ‘opinion leaders’ however are much less concerned. Only 2% have a lot of fear and 23% have a bit of fear.
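A minimal sketch of the arithmetic behind these figures, using only the percentages quoted above. The dictionary layout and the function name are my own illustration, and the shares are of all respondents, since the proportion who expressed no sentiment isn't given here.

```python
# Percentages quoted from the survey, as shares of all respondents.
# The data layout and names are illustrative, not the survey's own format.
FEAR_SHARES = {
    "general population": {"yes, very": 11, "yes, a bit": 37},
    "opinion leaders": {"yes, very": 2, "yes, a bit": 23},
}


def worried_share(group: str) -> int:
    """Combined percentage that is at least somewhat worried."""
    return sum(FEAR_SHARES[group].values())


for group in FEAR_SHARES:
    print(f"{group}: {worried_share(group)}% at least somewhat worried")
```

Among the general population this gives 48% of all respondents, which is consistent with "half" once respondents who expressed no sentiment are excluded.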

From a welfarist perspective, and under the assumption that going vegan/vegetarian isn’t an option, one challenge might be:

“Should we promote grass-fed beef consumption instead?”

A very rough estimate (might be off by orders of magnitude):

Cows have at least 400,000 kcal according to this back-of-the-envelope calculation.

A large mussel has maybe 20 kcal according to the USDA.

I’m super uncertain whether I’m comfortable giving mussels approx. 1/20,000 of the moral worth of cows, even after reading, for example, this blog post arguing The Ethical Case for Eating Oysters and Mussels.
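The ratio above can be reproduced with a short sketch. It assumes a deliberately crude model in which harm is counted per animal and scales only with the number of animals needed per calorie; the function names are hypothetical and the inputs are the rough figures quoted above.

```python
# Rough figures quoted in the comment; both may be off by a lot.
COW_KCAL = 400_000   # lower-bound kcal per cow (back-of-the-envelope)
MUSSEL_KCAL = 20     # approximate kcal per large mussel


def mussels_per_cow(cow_kcal: float = COW_KCAL,
                    mussel_kcal: float = MUSSEL_KCAL) -> float:
    """Number of mussels needed to supply the calories of one cow."""
    return cow_kcal / mussel_kcal


def breakeven_moral_weight(cow_kcal: float = COW_KCAL,
                           mussel_kcal: float = MUSSEL_KCAL) -> float:
    """Moral weight of one mussel, relative to one cow, at which both
    food sources cause equal harm per calorie under this crude model."""
    return mussel_kcal / cow_kcal


print(mussels_per_cow())         # 20000.0
print(breakeven_moral_weight())  # 5e-05
```

If a mussel's moral weight is below this break-even value, mussels come out ahead per calorie in this model, and above it, beef does. The model ignores lifespan, farming conditions, and intensity of suffering.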

[Edit: If bivalves mainly substitute fish, then this challenge might be missing the issue.]

Nice analysis – thank you for posting!

While I agree that bivalves are very likely at most minimally sentient, I’d feel more comfortable with people promoting bivalve aquaculture at scale if the downside risks were clearer to me.

Do you have any sense of exactly how unlikely it is that bivalves suffer?

That’s very cool!

Does it adjust the karma for when the post was posted?

Or does it adjust for when the karma was given/taken?

For example:

The post with the highest inflation-adjusted karma was posted in 2014, and had 70 upvotes out of 69 total votes in 2019 and now sits at 179 upvotes out of 125 total votes. Does the inflation adjustment consider that the average size of a vote after 2019 was around 2?
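For reference, here is a small sketch of how the "around 2" figure falls out of the two snapshots quoted above. The function name is hypothetical and this is not the forum's actual adjustment code. It treats the quoted "upvotes" as net karma.

```python
def average_vote_weight(karma_before: int, votes_before: int,
                        karma_after: int, votes_after: int) -> float:
    """Average karma per vote cast between two snapshots of a post."""
    new_votes = votes_after - votes_before
    if new_votes <= 0:
        raise ValueError("no new votes between the two snapshots")
    return (karma_after - karma_before) / new_votes


# Snapshots quoted above: (karma, total votes) in 2019 and now.
early_weight = 70 / 69
late_weight = average_vote_weight(70, 69, 179, 125)

print(round(early_weight, 2))  # 1.01
print(round(late_weight, 2))   # 1.95
```

So the votes cast up to 2019 averaged about 1 karma each, while those cast afterwards averaged close to 2. That is why it matters whether the adjustment is keyed to the post date or to the date of each vote.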

How well does this represent your views to people unfamiliar with it as a term in population ethics?

It might sound as if you’re an EA only concerned about affecting persons (as in humans, or animals with personhood).

Would it be possible for the usernames to be searchable inside the forum’s search function but not searchable through other search engines (e.g. Google)? Afaik it should at least be possible for the user page/profile not to be indexed.

And would it help with these problems?

It might be the combination of small funding and local knowledge about people’s skills that is valuable. For example, funding a person that is (currently) not impressive to grantmakers but impressive if you know them and their career plans deeply.

This hasn’t been implemented yet. Was it forgotten about or just not worth it?

This might be the best intervention EAs could work on because it is making a lot of future economists extremely happy!

Moonshot EA Forum Feature Request

It would be awesome to be able to opt in to “within-text commenting” (similar to what happens when you enable commenting in a Google Doc) when posting on the EA Forum.

Optimally those comments could also be voted on.