On Comparative Advantage & AGI

Cross-posted from my Substack.

Epistemic status: pretty confident, but willing to believe I’ve missed something somehow, hoping somebody smart will point it out if so.

Level: pretty introductory, though assumes a bit of economics knowledge—if you’ve thought carefully or extensively about this particular topic I don’t expect you to find much of it novel.

Introduction

In a world with advanced Artificial General Intelligence, how do non-capital owners survive if their labour is outcompeted by AI?

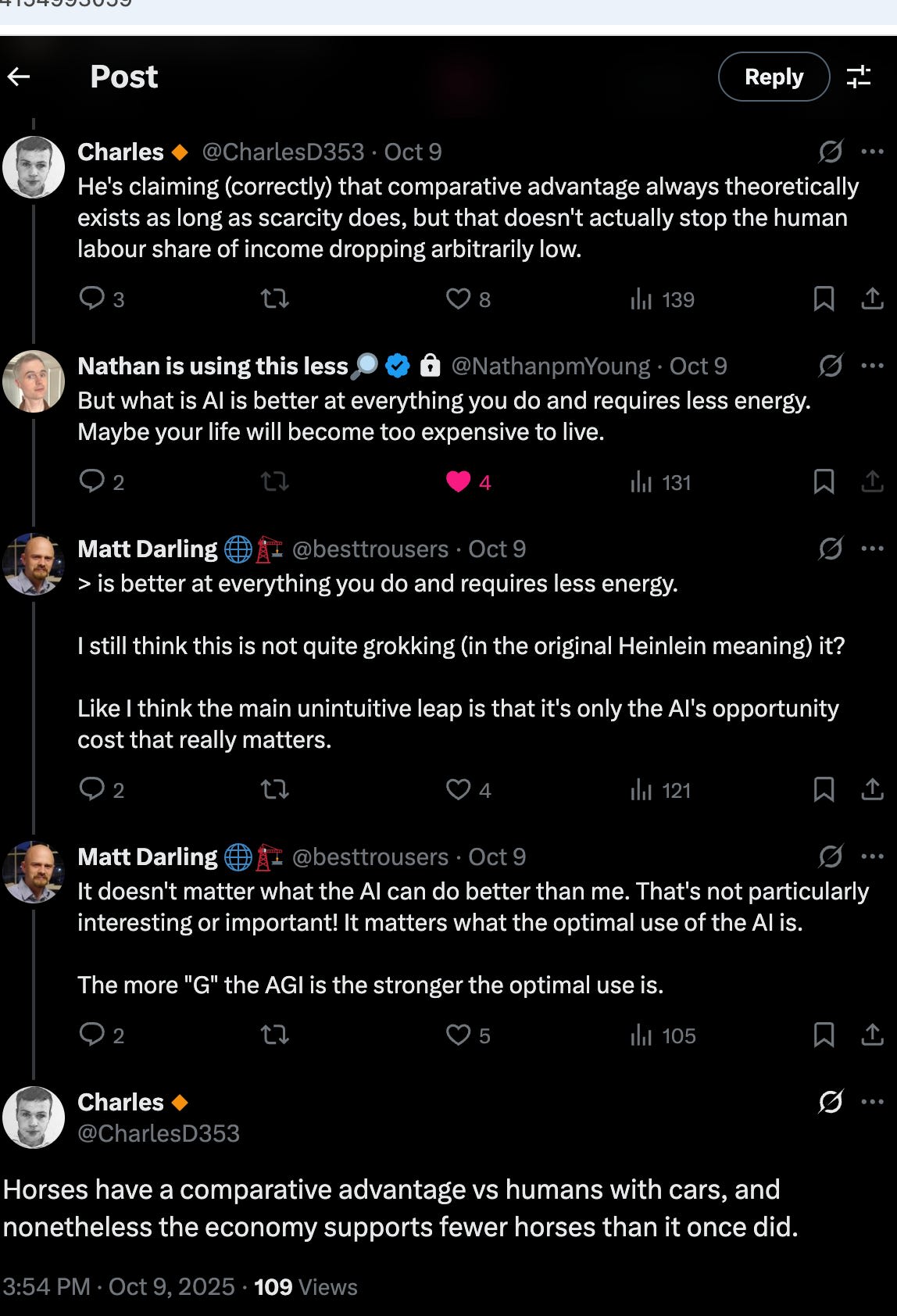

Recently, I found myself responding to one of the many Matts of economist/policy Twitter about the relevance of comparative advantage arguments for AI.

Also recently, the excellent Seb Krier of DeepMind posted about a tool he had built to illustrate comparative advantage.[1]

The tool does what it says on the tin—it shows how comparative advantage works, and how in an environment with both AI and human labourers, even if the AIs were substantially better than humans at everything, comparative advantage would still ensure there were things the humans should do to jointly maximise economic output.

He does, in a response under the post, acknowledge that this is not sufficient to ensure human flourishing and we must rely on other mechanisms for that.

What are these other mechanisms? And why do we need them—why isn’t comparative advantage sufficient on its own? That’s what this post is about. Let’s start with why we need them.[2]

Comparative advantage alone doesn’t mean you get to eat

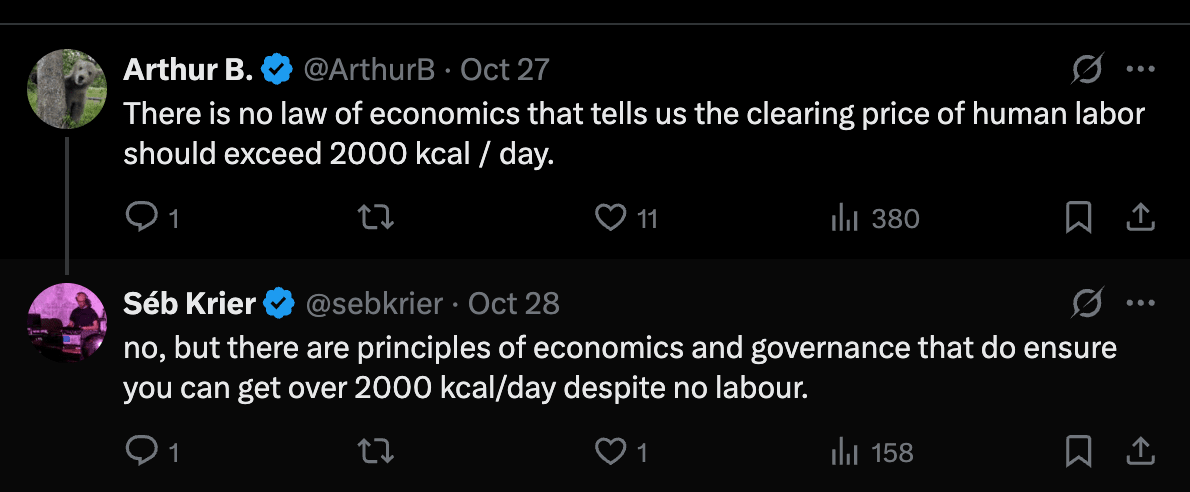

This is Arthur B’s point above, and mine when I pointed out that there are fewer horses these days than in the past.

It is simply a claim that the market clearing price of labour does not have to be sufficient for people to get as many resources as they want. Comparative advantage tells you which actor should do which task in order to maximise output, not what anyone gets paid.
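To make that distinction concrete, here is a minimal Python sketch of the textbook two-worker, two-task setup. The productivity numbers and task names are invented for illustration (they are not taken from Seb's tool), and the helper functions are hypothetical.

```python
# Hypothetical output per hour for each worker on each task.
# The AI is better than the human at BOTH tasks (absolute advantage).
productivity = {
    "ai":    {"research": 100.0, "crafts": 10.0},
    "human": {"research": 1.0,   "crafts": 2.0},
}


def opportunity_cost(worker, task, other_task):
    """Units of other_task given up to produce one unit of task."""
    p = productivity[worker]
    return p[other_task] / p[task]


def comparative_advantage(task, other_task):
    """Worker with the lowest opportunity cost of producing task."""
    return min(productivity, key=lambda w: opportunity_cost(w, task, other_task))


crafts_specialist = comparative_advantage("crafts", "research")
research_specialist = comparative_advantage("research", "crafts")

print("crafts:", crafts_specialist)
print("research:", research_specialist)
```

Under these assumed numbers the human ends up assigned to crafts even though the AI is better at both tasks, because an hour of AI crafts costs far more forgone research than an hour of human crafts does. Note that nothing in the calculation produces a wage: the assignment is determined, the pay is not.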

We can see this even today, without needing to imagine a future very different from our present. Around 20% of people in Africa are undernourished, with 78% (!), or one billion people, unable to afford a healthy diet. In Western countries, there is strong empirical evidence and a clear intuitive case for why e.g. some cognitively disabled individuals have market clearing wages substantially below minimum wage thresholds.

If we take sufficiently capable AI as given, we are all cognitively and physically disabled individuals for the purpose of this conversation. If AI labour is sufficiently cheap, and additional workers are sufficiently easy to spin up, the market clearing price of human labour should theoretically approach 0.
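A rough way to see why the wage heads towards zero: if AI labour can be spun up in effectively unlimited supply at some cost per hour, no employer should pay a human more than it would cost to get the same output from AI. The sketch below assumes exactly that, plus a single task and made-up numbers; `human_wage_ceiling` and `SUBSISTENCE_WAGE` are hypothetical names, not anything from the economics literature.

```python
def human_wage_ceiling(human_units_per_hour, ai_units_per_hour, ai_cost_per_hour):
    """Most an employer would pay per human hour, assuming AI labour is
    available in unlimited supply at a constant cost per hour."""
    ai_cost_per_unit = ai_cost_per_hour / ai_units_per_hour
    return human_units_per_hour * ai_cost_per_unit


SUBSISTENCE_WAGE = 2.0            # hypothetical hourly wage needed to live on
ai_costs = [100.0, 10.0, 1.0, 0.1]  # hypothetical AI cost per hour, falling over time

# Human makes 2 units/hour, AI makes 10 units/hour (illustrative numbers).
ceilings = [human_wage_ceiling(2.0, 10.0, cost) for cost in ai_costs]

for cost, ceiling in zip(ai_costs, ceilings):
    status = "above" if ceiling > SUBSISTENCE_WAGE else "at or below"
    print(f"AI cost/hour {cost}: wage ceiling {ceiling:.2f} ({status} subsistence)")
```

The human still has something to do at every step, so comparative advantage is never violated. The ceiling on what that work pays simply falls in proportion to the cost of AI, and with these numbers it drops below the assumed subsistence level once AI costs less than 10 per hour.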

Naturally, whether AI can fully replace everything is a point of contention. In particular, there is a question of whether there are some things AI will never be able to do as well as a human because some humans prefer to have humans do them: entertainment, professional sports, and companionship, among many examples. But just as we still keep horses around largely for entertainment and yet have far fewer horses[3] than we did before the invention of the automobile, there is no iron law of economics which ensures there have to be as many jobs for humans as there are humans who’d like jobs, or that those jobs will pay above a given threshold. There might be new jobs, but they are not ensured by the principle of comparative advantage alone.[4]

How we can ensure everyone eats anyway

If the market clearing price for a large chunk of human labour is below subsistence, or even below the amount needed for the very high standard of living a wealthy post-AGI economy could afford, we may want some means other than rewards for labour to ensure people get a high standard of living or a share in the prosperity generated by AI.

Western countries already have substantial systems for this purpose funded out of general taxation: Social Security and disability allowances in the US, for example, or state pensions and state-subsidised healthcare. Expansion of these to some sort of basic income and/or further service guarantees is one option. This does, however, carry the risk that some countries take much larger shares for themselves, or that none goes beyond helping its own citizens.

Another alternative once proposed was the OpenAI nonprofit’s profit cap structure[5] for investors in the for-profit entity, where after a threshold all profits were to be used for the good of humanity. “Our goal (a) is to ensure that most of the value … we create if successful benefits everyone.” If such a mechanism were to be used, who counts as “everyone” and what share they get would remain open questions.

There are further alternatives, but in spirit many of them are quite similar—redistribution via a government or charitable intermediary, or direct ownership rights to AI profits.

[1] I am aware of Simon Lermen’s recent post responding to the existence of Seb’s tool. I believe it read far too much into what Seb was doing with his tool and as a result ended up arguing against a position nobody actually held. This post is much narrower in scope.

[2] In this post I will treat other concerns about AGI/ASI, such as out-of-control or human-controlled AI killing everybody, as out of scope.

[3] See Our World in Data: global horse populations have declined by 40% since 1890, but in Europe that decline is 90%.

[4] It appears to me that the crux here is almost always beliefs about capabilities rather than a disagreement about economics. That “sufficiently cheap and capable AI would drive the market clearing price of labour towards zero” is an immediate consequence of the two claims “sufficiently cheap” and “sufficiently capable” with regard to any task.

[5] RIP

“The tool does what it says on the tin—it shows how comparative advantage works, and how in an environment with both AI and human labourers, even if the AIs were substantially better than humans at everything, comparative advantage would still ensure there were things the humans should do to jointly maximise economic output.”

The tool is absurd and makes no sense to me. Humans are going to do arts and crafts while ASI does 10,000x super AI research? My response tried to make exactly the point I believe: comparative advantage does not meaningfully apply here, because AI can get better things for whatever it would pay us by doing the work itself. The conditions for CA to apply are simply not meaningfully met.

You seem to be hung up on proving comparative advantage “not applying” for some reason, but there is basically no circumstance where it does not apply, in theory.

It simply doesn’t matter, because human equilibrium wages can still go to zero regardless of comparative advantage still applying, and that matters far more. Comparative advantage is a distraction—that is the point of this post.

It can kill you and turn your atoms into compute hardware. What does CA say about this?