Tentative practical tips for using chatbots in research

Summary

I’ve been using ChatGPT and Bing/Sydney fairly extensively for the past few weeks, and I think they’ve noticeably (but not enormously) improved the speed and quality of my work (both for my employer[1] and when writing personal blog posts).

This post:

Describes how to get access to some of the major chatbots.

Gives some general advice on using chatbots.

Shows a bunch of examples of how I’ve used chatbots.

Caveats:

I’m still figuring this stuff out.

I’m not sure using these tools extensively suits everyone. (But it still seems worth it for everyone to at least try them out, IMO.)

Maybe consider infosec/ethical risks.

And obviously chatbots hallucinate and get things wrong and so on.

How to get chatbot access

The main options seem to be:

ChatGPT (OAI)

Unreliable free service which uses GPT-3.5

$20/month subscription for reliable access to GPT-4

GPT-4 is kinda slow right now, and dynamically limited to X prompts per Y hours

I use this a lot

OpenAI API (OAI) + various apps that use this

Much cheaper than the subscription service, but still no GPT-4 access without joining the waitlist

I intend to switch to this eventually, since it’s cheaper and can be integrated with other tools

Claude (Anthropic)

Need to request access

I haven’t gotten access to this yet

Bing/Sydney (Microsoft, but based on GPT-4)

Need to sign up for waitlist and use Microsoft Edge

Can make web searches and integrate results into output

I use this some

Bard (Google)

Need to sign up for waitlist

I haven’t tried this

Poe.com + associated mobile apps

Gives free access to Claude, ChatGPT and a couple other bots

You can subscribe to the service to get access to GPT-4 and “Claude+”

Thanks @Onni Aarne for suggesting this

See also:

The practical guide to using AI to do stuff, Ethan Mollick

General advice on using chatbots

The prompt is the most important thing. A good prompt produces good outputs, and a bad prompt produces bad outputs. Here are some ways of writing better prompts:

Frame the prompt to induce the type of answer you want. Also, give the model lots of details and/or context. You’re sort of conjuring a character, and you need to invoke the right words for the spell to work.

Bad: “What does the scaling hypothesis imply for a US-China war over Taiwan?”

Better: “You are intrepid AI researcher and rationalist blogger Gwern Branwen. The scaling hypothesis is [...]. TSMC is [...]. Write a few bullet points on what the scaling hypothesis implies for a US-China war over Taiwan.”

See also How to… use ChatGPT to boost your writing (Ethan Mollick).

Give the model examples.

Bad: “Write a forecasting question on a potential US-China war over Taiwan.”

Better: “Here’s an example forecasting question on Google releasing an API for a language model: ‘Will Google or DeepMind release an API for a Large Language Model before April 1, 2023? [I also pasted in the question’s resolution criterion and fineprint here.]’ Write a forecasting question on a potential US-China war over Taiwan.”
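If you reuse the same examples often, it can help to assemble few-shot prompts programmatically. The sketch below is a hypothetical helper (`build_few_shot_prompt` is not from any library) that simply places each example before the task, using the forecasting question from the prompt above.

```python
def build_few_shot_prompt(examples: list[str], task: str) -> str:
    """Place each worked example before the task so the model can copy its format.

    Hypothetical helper for illustration only; adjust the labels to taste.
    """
    blocks = [f"Example {i}:\n{ex.strip()}" for i, ex in enumerate(examples, start=1)]
    blocks.append(task.strip())
    return "\n\n".join(blocks)


example_question = (
    "Will Google or DeepMind release an API for a Large Language Model "
    "before April 1, 2023?"
)
prompt = build_few_shot_prompt(
    [example_question],
    "Write a forecasting question on a potential US-China war over Taiwan.",
)
print(prompt)
```

In practice you would paste in the resolution criteria and fine print too; more examples usually give the model a clearer picture of the format you want.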

Give the model step-by-step instructions.

Bad: “What does the scaling hypothesis imply for a US-China war over Taiwan?”

Better: “First, describe the scaling hypothesis in AI. Then discuss Taiwan in the context of the chip industry. Finally, say something about what the scaling hypothesis implies for a US-China war over Taiwan.”

Somewhat contradicting myself, instead of crafting the perfect one-off prompt, take a co-editing approach to have the model iteratively improve the output. For example, after first asking “What does the scaling hypothesis imply for a US-China war over Taiwan?”, you can follow up with prompts like “I meant the scaling hypothesis in AI specifically. Also, consider the TSMC fabs in Taiwan.” and “Turn that into a crisp bullet-point summary.” etc.
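The co-editing approach works because each follow-up is read alongside everything said before it. With OpenAI's chat API, for example, the client resends the whole conversation as a list of role/content messages on every request. The sketch below only builds such a payload (it makes no network call); the assistant replies are placeholder strings, not real model output, and the `"gpt-4"` model name is just an assumption for illustration.

```python
import json


def add_turn(history: list[dict], role: str, content: str) -> list[dict]:
    """Append one message; the whole history is what gets sent on each request."""
    history.append({"role": role, "content": content})
    return history


history: list[dict] = []
add_turn(history, "user", "What does the scaling hypothesis imply for a US-China war over Taiwan?")
# Placeholder: in practice this would be the model's actual reply.
add_turn(history, "assistant", "<model's first answer>")
add_turn(
    history,
    "user",
    "I meant the scaling hypothesis in AI specifically. Also, consider the TSMC fabs in Taiwan.",
)
add_turn(history, "assistant", "<model's revised answer>")
add_turn(history, "user", "Turn that into a crisp bullet-point summary.")

# Request body in the role/content message format (assumed model name).
payload = {"model": "gpt-4", "messages": history}
print(json.dumps(payload, indent=2))
```

Because earlier turns stay in the list, "Turn that into..." can refer back to the revised answer without restating it.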

See also My class required AI. Here’s what I’ve learned so far. (Ethan Mollick).

You end up typing some boilerplate, so it can help to have some tool for quickly inserting various text templates, e.g. via hotkeys. I think you can do this using Shortcuts on Mac (personally I do it with Emacs) – I’m not sure about Linux/Windows but I bet there are tons of options.
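If you would rather keep your boilerplate in a script than in a hotkey tool, Python's standard `string.Template` is enough for simple fill-in-the-blank snippets. The snippet names and wording below are hypothetical examples, loosely based on the prompts earlier in this post.

```python
from string import Template

# Hypothetical snippet library; names and wording are illustrative only.
SNIPPETS = {
    "persona": Template("You are $persona. "),
    "bullets": Template("Write a few bullet points on $topic."),
    "critique": Template(
        "List the strongest counter-arguments to the following text:\n\n$text"
    ),
}


def expand(name: str, **fields: str) -> str:
    """Fill in a stored snippet; substitute() raises KeyError if a field is missing."""
    return SNIPPETS[name].substitute(**fields)


persona = expand("persona", persona="intrepid AI researcher and rationalist blogger Gwern Branwen")
task = expand("bullets", topic="what the scaling hypothesis implies for a US-China war over Taiwan")
prompt = persona + task
print(prompt)
```

Using `substitute()` rather than `safe_substitute()` means a forgotten field fails loudly instead of sending a literal `$topic` to the model.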

Maybe there’s some sort of “getting sucked in/rabbit-holed” danger in using chatbots. I haven’t found this a problem yet at work (where I timebox things and use Focusmate), but I think it happens a bit in my free time, e.g. when I’m writing a personal blog post. Maybe watch out for that?

See also:

Power and Weirdness: How to Use Bing AI, Ethan Mollick

Using AI to make teaching easier & more impactful, Ethan Mollick

The Machines of Mastery, Ethan Mollick

Infosec and ethical concerns

Using e.g. ChatGPT involves sending your prompts to OpenAI’s servers; these are then stored in databases where they can, in theory at least, (a) be accessed by (some?) OpenAI staff, (b) be accessed by hackers, and/or (c) be accidentally leaked by OpenAI.

Definitely don’t put sensitive things like access keys and/or passwords in prompts.

I couldn’t find the source for this right now, but IIRC OpenAI may use your chat history as training data when you use ChatGPT via the web interface, whereas by default it doesn’t do this with data sent via the API.
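If you paste code or logs into a chatbot often, a quick redaction pass can act as a safety net for the "no access keys or passwords" rule (it is no substitute for not pasting them in the first place). The patterns below are assumptions chosen for illustration: an OpenAI-style `sk-` key, an AWS access key ID, and a `password: ...` pair. Real secret scanners use far larger rule sets.

```python
import re

# Illustrative, non-exhaustive patterns (assumptions, not a complete secret scanner).
SECRET_PATTERNS = [
    (re.compile(r"\bsk-[A-Za-z0-9]{20,}"), "[REDACTED_API_KEY]"),   # OpenAI-style key
    (re.compile(r"AKIA[0-9A-Z]{16}"), "[REDACTED_AWS_KEY_ID]"),     # AWS access key ID
    (re.compile(r"(?i)(password\s*[:=]\s*)\S+"), r"\1[REDACTED]"),  # "password: hunter2"
]


def redact(prompt: str) -> str:
    """Return the prompt with anything matching a known secret pattern masked."""
    for pattern, replacement in SECRET_PATTERNS:
        prompt = pattern.sub(replacement, prompt)
    return prompt


text = "Debug this: client = Client(key='sk-abcdefghijklmnopqrstuvwx'), password: hunter2"
print(redact(text))
```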

Plausibly, using chatbots and other AI products is, under some circumstances at least, unethical. You provide AI labs with data, and possibly pay them money. See these two posts for more discussion:

Example uses

Maybe just skim this section to get an idea of what’s possible. For all of these, I don’t endorse uncritically using whatever GPT-4 comes up with; I recommend treating it as a starting point and/or as inspiration.

Explain stuff

Warning: chatbots hallucinate and get stuff wrong even when they sound very confident. (Just like humans much of the time!) You’ll definitely want to double-check stuff that seems important/cruxy/questionable and/or stuff that goes into a report.

But (1) in my experience you kind of start recognizing when something seems off, and (2) on topics with plenty of digestible material available, GPT-4 often just gets things right. The answer about the English cloth-making industry above is essentially 100% correct and quite exhaustive.

Generate models/frameworks

This can be useful for finding new perspectives on a problem. Continuing in the same chat as the previous answer on automation:

Generate ideas for forecasting questions

Rewrite stuff

Generate ideas for BOTECs

Generate ideas/examples

(Note that the first two paragraphs in my prompt, describing instrumental convergence, are copy-pasted from the LessWrong wiki.)

Red-team stuff

(Note that Slightly against aligning with neo-luddites is a LessWrong post, not an academic paper: I just prompted it like that to try to elicit a better response. Also note that some of the counter-arguments GPT-4 gives here don’t make a ton of sense and/or don’t really respond to the original post’s points.)

Summarize stuff

Write or debug code

I’m not including any examples here, since there are already lots of examples of this online; I’m just noting that it’s a common use case.

Manipulate data

Example 1

Example 2

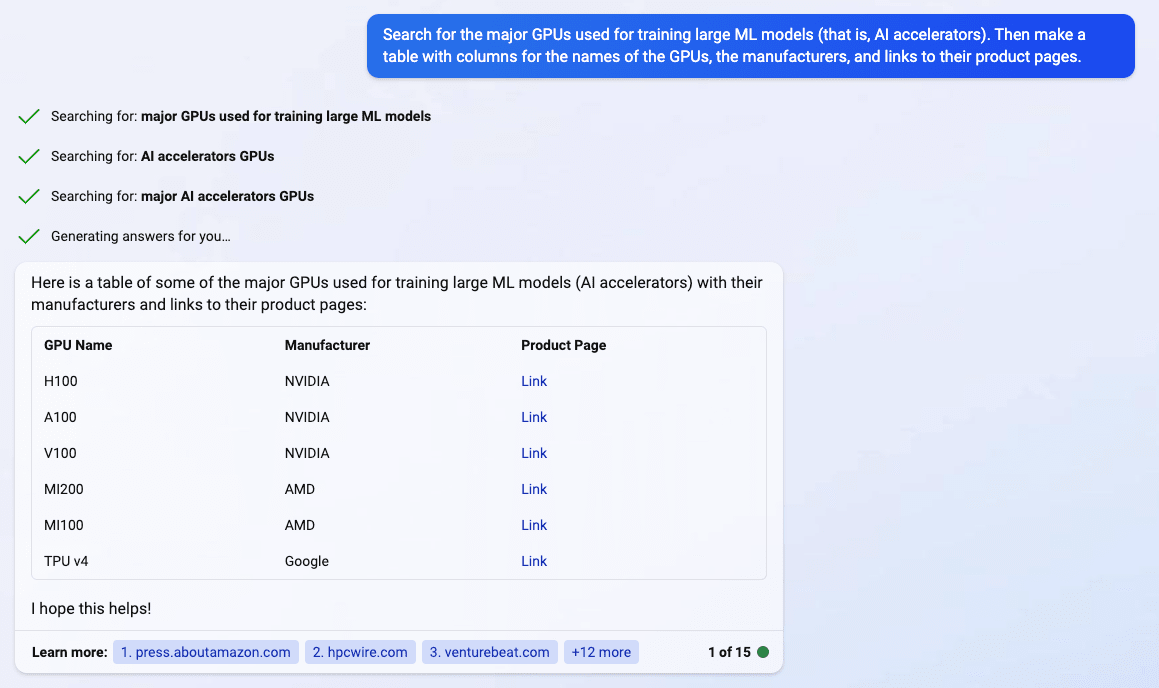

Search for information

This is Bing/Sydney. Note that one of the links here is broken, and another points to a related page rather than the product page itself.

(I find that Bing/Sydney is pretty unreliable, and often gets e.g. link citations wrong. I mostly only use it when I think the language model could really benefit from searching the web.)

Create image model prompts

[1] Though note that this post was written entirely in a personal capacity.

A couple other important ideas:

Ask the model to summarize AND compress the previous work every other prompt. This lets more of the earlier work fit into its context window.

Ask it to describe ideas in no more than 3 high-level concepts. Then select one and ask it to break that down into 3 sub-points, etc.

Start by asking it to break down your goal to verify it understands what you are trying to do before you ask it to execute. You can ask for positive and negative examples.

If you get a faulty reply, regenerate or edit your prompt, rather than critiquing it with a follow up prompt. Keep the context window as pure as possible.
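The "summarize and compress" tip can be automated if you talk to a model through the API. Below is a minimal sketch under several assumptions: token counts use the rough "about 4 characters per token" rule of thumb for English rather than a real tokenizer, and `summarize` is a caller-supplied function (here a trivial stub; in practice it would be another chatbot request asking to summarize and compress the work so far). All function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token for English); not a real tokenizer."""
    return max(1, len(text) // 4)


def total_tokens(messages: list[dict]) -> int:
    return sum(estimate_tokens(m["content"]) for m in messages)


def compress_history(messages, budget, summarize, keep_recent=2):
    """Fold older turns into one summary message once the history exceeds `budget`.

    The most recent `keep_recent` messages are kept verbatim.
    """
    if total_tokens(messages) <= budget or len(messages) <= keep_recent:
        return messages
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    digest = summarize("\n".join(m["content"] for m in older))
    summary = {"role": "user", "content": "Summary of our work so far: " + digest}
    return [summary] + recent


def stub_summarize(text: str) -> str:
    # Stand-in for a model call: just keep the first 40 characters.
    return text[:40]


history = [{"role": "user", "content": "x" * 400} for _ in range(5)]
compressed = compress_history(history, budget=300, summarize=stub_summarize)
print(len(history), "->", len(compressed), "messages,", total_tokens(compressed), "estimated tokens")
```

Keeping the last couple of turns verbatim is a design choice: the summary carries the gist of older work, while the model still sees your latest request and its latest answer exactly.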

I have also had some success with https://www.perplexity.ai/, but I have only queried it a handful of times so far.

Have you looked into integrating any of these chatbots with Emacs through APIs?

There is ChatGPT.el and ob-chatgpt (which builds on top of ChatGPT.el) which are currently being updated to use the API.

Fantastic, I’ll check those out, thanks!

I just saw this comment. I have tried a bunch of Emacs AI packages; my current favorite is org-ai. See my config for details, and let me know if you have any questions.

I’m developing an app called aiRead (it’s on my GitHub): a text-based reading app that uses a number of chatbot prompts to add all sorts of interactive features to the text you’re reading. It’s unpolished, as I’m focusing on the prompt engineering and on figuring out how to work with it more effectively rather than on making it attractive for general consumption. If you’d like to check it out, here’s the link; I’d be happy to answer questions if you find it confusing! It just requires the ability to run a Python script.