MIRI’s 2019 Fundraiser

(Crossposted from the MIRI blog)

MIRI’s 2019 fundraiser is now live, December 2–31!

Over the past two years, huge donor support has helped us double the size of our AI alignment research team. Hitting our $1M fundraising goal this month will put us in a great position to continue our growth in 2020 and beyond, recruiting as many brilliant minds as possible to take on what appear to us to be the central technical obstacles to alignment.

Our fundraiser progress, updated in real time (including donations made during the Facebook Giving Tuesday event):


MIRI is a CS/math research group with a goal of understanding how to reliably “aim” future general-purpose AI systems at known goals. For an introduction to this research area, see Ensuring Smarter-Than-Human Intelligence Has A Positive Outcome and Risks from Learned Optimization in Advanced Machine Learning Systems. For background on how we approach the problem, see 2018 Update: Our New Research Directions and Embedded Agency.

At the end of 2017, we announced plans to substantially grow our research team, with a goal of hiring “around ten new research staff over the next two years.” Two years later, I’m happy to report that we’re up eight research staff, and we have a ninth starting in February of next year, which will bring our total research team size to 20.[1]

We remain excited about our current research directions, and continue to feel that we could make progress on them more quickly by adding additional researchers and engineers to the team. As such, our main organizational priorities remain the same: push forward on our research directions, and grow the research team to accelerate our progress.

While we’re quite uncertain about how large we’ll ultimately want to grow, we plan to continue growing the research team at a similar rate over the next two years, and so expect to add around ten more research staff by the end of 2021.

Our projected budget for 2020 is $6.4M–$7.4M, with a point estimate of $6.8M,[2] up from around $6M this year.[3] In the mainline-growth scenario, we expect our budget to look something like this:

Looking further ahead, since staff salaries account for the vast majority of our expenses, I expect our spending to increase proportionately year-over-year while research team growth continues to be a priority.

Given our $6.8M budget for 2020, and the cash we currently have on hand, raising $1M in this fundraiser will put us in a great position for 2020. Hitting $1M positions us with cash reserves of 1.25–1.5 years going into 2020, which is exactly where we want to be to support ongoing hiring efforts and to provide the confidence we need to make and stand behind our salary and other financial commitments.
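
As a rough illustration of the arithmetic behind these figures, the sketch below converts the reserve target into dollar terms. It assumes that "years of reserves" means cash on hand divided by the 2020 point-estimate budget; that definition, and the names `implied_reserves`, `BUDGET_2020_M`, `BUDGET_2019_M`, and `RESERVE_YEARS`, are assumptions made for this example and are not taken from MIRI's actual budgeting model.

```python
# Illustrative only. Assumes "years of reserves" = cash on hand divided by
# the projected 2020 budget (an assumption, not MIRI's stated model).
BUDGET_2020_M = 6.8          # 2020 point estimate from the post, in $M
BUDGET_2019_M = 6.0          # "around $6M this year", in $M
RESERVE_YEARS = (1.25, 1.5)  # reserve target range quoted in the post


def implied_reserves(budget_m: float, years: float) -> float:
    """Cash (in $M) needed to cover `years` of spending at `budget_m` per year."""
    return budget_m * years


# Dollar range implied by the 1.25 to 1.5 year target.
low, high = (implied_reserves(BUDGET_2020_M, y) for y in RESERVE_YEARS)

# Year-over-year budget growth, using the approximate 2019 figure.
growth_pct = (BUDGET_2020_M / BUDGET_2019_M - 1) * 100

print(f"Implied reserves: ${low:.1f}M to ${high:.1f}M")
print(f"Budget growth 2019 -> 2020: about {growth_pct:.0f}%")
```

Under these assumptions, the target corresponds to roughly $8.5M to $10.2M in reserves (including the fundraiser), and the 2020 point estimate is about 13% above this year's approximate spending.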

For more details on what we’ve been up to this year, and our plans for 2020, read on!

1. Workshops and scaling up

If you lived in a world that didn’t know calculus, but you knew something was missing, what general practices would have maximized your probability of coming up with it?

What if you didn’t start off knowing something was missing? Could you and some friends have gotten together and done research in a way that put you in a good position to notice it, to ask the right questions?

MIRI thinks that humanity is currently missing some of the core concepts and methods that AGI developers will need in order to align their systems down the road. We think we’ve found research paths that may help solve that problem, and good ways to rapidly improve our understanding via experiments; and we’re eager to add more researchers’ and engineers’ eyes and brains to the effort.

A significant portion of MIRI’s current work is in Haskell, and benefits from experience with functional programming and dependent type systems. More generally, if you’re a programmer who loves hunting for the most appropriate abstractions to fit some use case, developing clean concepts, making and then deploying elegant combinators, or audaciously trying to answer the deepest questions in computer science—then we think you should apply to work here, get to know us at a workshop, or reach out with questions.

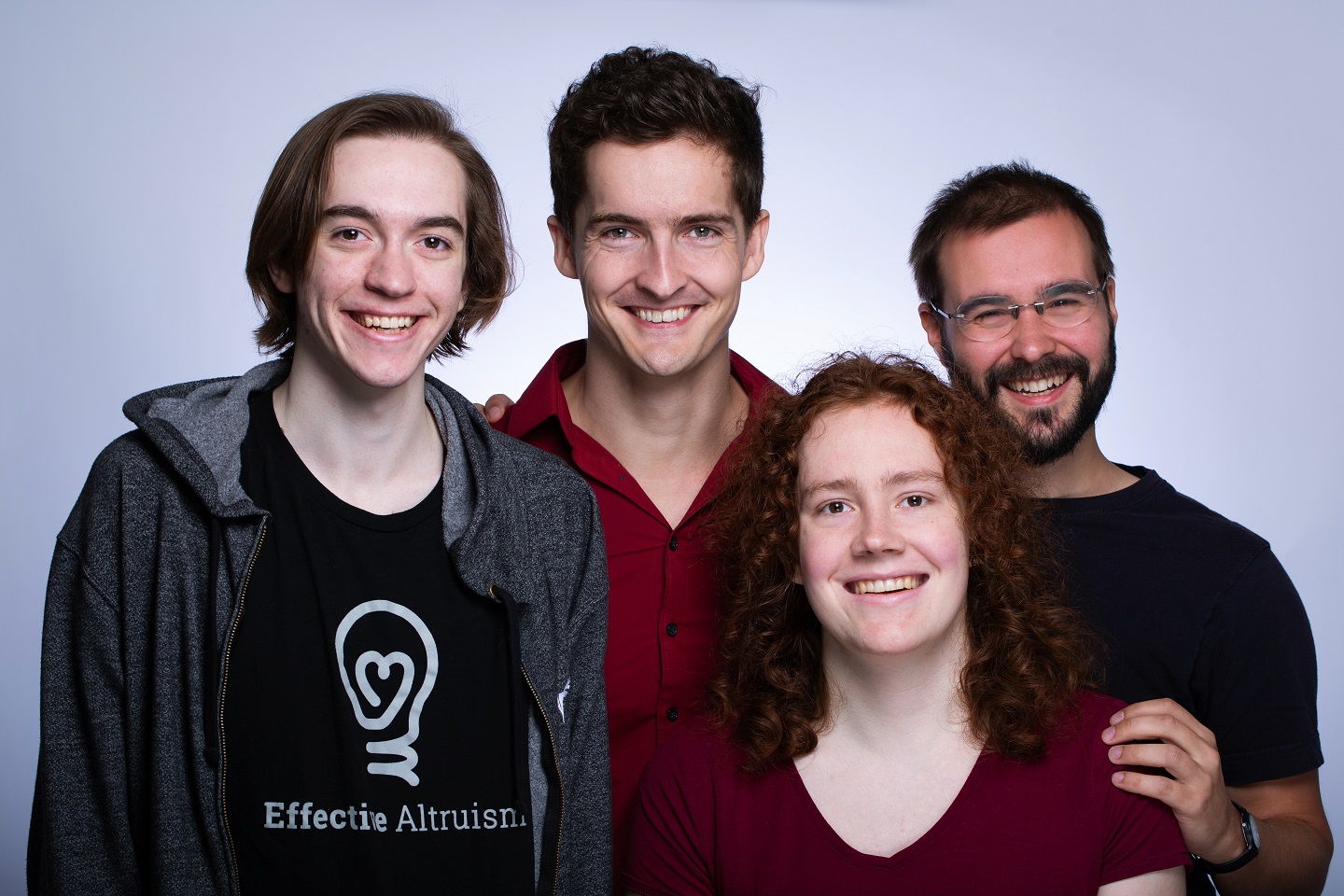

As noted above, our research team is growing fast. The latest additions to the MIRI team include:

Jeremy Schlatter, a software engineer who previously worked at Google and OpenAI. Some of the public projects Jeremy has contributed to include OpenAI’s Dota 2 bot and a debugger for the Go programming language.

Seraphina Nix, joining MIRI in February 2020. Seraphina graduates this month from Oberlin College with a major in mathematics and minors in computer science and physics. She has previously done research on ultra-lightweight dark matter candidates, deep reinforcement learning, and teaching neural networks to do high school mathematics.

Rafe Kennedy, who joins MIRI after working as an independent existential risk researcher at the Effective Altruism Hotel. Rafe previously worked at the data science startup NStack, and he holds an MPhysPhil from the University of Oxford in Physics & Philosophy.

Our workshop program is the best way we know of to bring promising, talented individuals onto what we think are useful trajectories towards being highly-contributing AI researchers and engineers. Having established an experience that participants love and that we believe to be highly valuable, we plan to continue experimenting with new versions of the workshop, and expect to run ten workshops over the course of 2020, up from eight this year.

These programs have led to a good number of new hires at MIRI as well as other AI safety organizations, and we find them valuable for everything from introducing talented outsiders to AI safety, to leveling up people who have been thinking about these issues for years.

If you’re interested in attending, apply here. If you have any questions, we highly encourage you to shoot Buck Shlegeris an email.

Our MIRI Summer Fellows Program plays a similar role for us, but is more targeted at mathematicians. We’re considering running MSFP in a shorter format in 2020. For any questions about MSFP, email Colm Ó Riain.

2. Research and write-ups

Our 2018 strategy update continues to be a great overview of where MIRI stands today, describing how we think about our research, laying out our case for working here, and explaining why most of our work currently isn’t public-facing.

Given the latter point, I’ll focus in this section on spotlighting what we’ve written up this past year, providing snapshots of some of the work individuals at MIRI are currently doing (without any intended implication that this is representative of the whole), and conveying some of our current broad impressions about how our research progress is going.

Some of our major write-ups and publications this year were:

“Risks from Learned Optimization in Advanced Machine Learning Systems,” by Evan Hubinger, Chris van Merwijk, Vladimir Mikulik, Joar Skalse, and Scott Garrabrant. The process of generating this paper significantly clarified our own thinking, and informed Scott and Abram’s discussion of subsystem alignment in “Embedded Agency.”

Scott views “Risks from Learned Optimization” as being of comparable importance to “Embedded Agency” as exposition of key alignment difficulties, and we’ve been extremely happy about the new conversations and research that the field at large has produced to date in dialogue with the ideas in “Risks from Learned Optimization.”

Thoughts on Human Models, by Scott Garrabrant and DeepMind-based MIRI Research Associate Ramana Kumar, argues that the AI alignment research community should begin prioritizing “approaches that work well in the absence of human models.”

The role of human models in alignment plans strikes us as one of the most important issues for MIRI and other research groups to wrestle with, and we’re generally interested in seeing what new approaches groups outside MIRI might come up with for leveraging AI for the common good in the absence of human models.

“Cheating Death in Damascus,” by Nate Soares and Ben Levinstein. We presented this decision theory paper at the Formal Epistemology Workshop in 2017, but a lightly edited version has now been accepted to The Journal of Philosophy, previously voted the second highest-quality academic journal in philosophy.

The Alignment Research Field Guide, a very accessible and broadly applicable resource both for individual researchers and for groups getting off the ground.

Our other recent public writing includes an Effective Altruism Forum AMA with Buck Shlegeris, Abram Demski’s The Parable of Predict-O-Matic, and the many interesting outputs of the AI Alignment Writing Day we hosted toward the end of this year’s MIRI Summer Fellows Program.

Turning to our research team, last year we announced that prolific Haskell programmer Edward Kmett had joined the MIRI team, freeing him up to do the thing he’s passionate about: improving the state of highly reliable (and simultaneously highly efficient) programming languages. MIRI Executive Director Nate Soares views this goal as very ambitious, though he would feel better about the world if there existed programming languages that were both efficient and amenable to strong formal guarantees about their properties.

This year Edward moved to Berkeley to work more closely with the rest of the MIRI team. We’ve found it very helpful to have him around to provide ideas and contributions to our more engineering-oriented projects, helping give some amount of practical grounding to our work. Edward has also continued to be a huge help with recruiting through his connections in the functional programming and type theory world.

Meanwhile, our newest addition, Evan Hubinger, plans to continue working on solving inner alignment for amplification. Evan has outlined his research plans on the AI Alignment Forum, noting that relaxed adversarial training is a fairly up-to-date statement of his research agenda. Scott and other researchers at MIRI consider Evan’s work quite exciting, both in the context of amplification and in the context of other alignment approaches it might prove useful for.

Abram Demski is another MIRI researcher who has written up a large number of his research thoughts over the last year. Abram reports (fuller thoughts here) that he has moved away from a traditional decision-theoretic approach this year, and is now spending more time on learning-theoretic approaches, similar to MIRI Research Associate Vanessa Kosoy. Quoting Abram:

Around December 2018, I had a big update against the “classical decision-theory” mindset (in which learning and decision-making are viewed as separate problems), and towards taking a learning-theoretic approach. [… I have] made some attempts to communicate my update against UDT and toward learning-theoretic approaches, including this write-up. I talked to Daniel Kokotajlo about it, and he wrote The Commitment Races Problem, which I think captures a good chunk of it.

For her part, Vanessa’s recent work includes the paper “Delegative Reinforcement Learning: Learning to Avoid Traps with a Little Help,” which she presented at the ICLR 2019 SafeML workshop.

I’ll note again that the above are all snapshots of particular research directions various researchers at MIRI are pursuing, and don’t necessarily represent other researchers’ views or focus. As Buck recently noted, MIRI has a pretty flat management structure. We pride ourselves on minimizing bureaucracy, and on respecting the ability of our research staff to form their own inside-view models of the alignment problem and of what’s needed next to make progress. Nate recently expressed similar thoughts about how we do nondisclosure-by-default.

As a consequence, MIRI’s more math-oriented research especially tends to be dictated by individual models and research taste, without the expectation that everyone will share the same view of the problem.

Regarding his overall (very high-level) sense of how MIRI’s new research directions are progressing, Nate Soares reports:

Progress in 2019 has been slower than expected, but I have a sense of steady progress. In particular, my experience is one of steadily feeling less confused each week than the week before—of me and other researchers having difficulties that were preventing us from doing a thing we wanted to do, staring at them for hours, and then realizing that we’d been thinking wrongly about this or that, and coming away feeling markedly more like we know what’s going on.

An example of the kind of thing that causes us to feel like we’re making progress is that we’ll notice, “Aha, the right tool for thinking about all three of these apparently-dissimilar problems was order theory,” or something along those lines; and disparate pieces of frameworks will all turn out to be the same, and the relevant frameworks will become simpler, and we’ll be a little better able to think about a problem that I care about. This description is extremely abstract, but represents the flavor of what I mean by “steady progress” here, in the same vein as my writing last year about “deconfusion.”

Our hope is that enough of this kind of progress gives us a platform from which we can generate particular exciting results on core AI alignment obstacles, and I expect to see such results reasonably soon. To date, however, I have been disappointed by the amount of time that’s instead been spent on deconfusing myself and shoring up my frameworks; I previously expected to have more exciting results sooner.

In research of the kind we’re working on, it’s not uncommon for there to be years between sizeable results, though we should also expect to sometimes see cascades of surprisingly rapid progress, if we are indeed pushing in the right directions. My inside view of our ongoing work currently predicts that we’re on a productive track and should expect to see results we are more excited about before too long.

Our research progress, then, is slower than we had hoped, but the rate and quality of progress continues to be such that we consider this work very worthwhile, and we remain optimistic about our ability to convert further research staff hours into faster progress. At the same time, we are also (of course) looking for where our research bottlenecks are and how we can make our work more efficient, and we’re continuing to look for tweaks we can make that might boost our output further.

If things go well over the next few years—which seems likely but far from guaranteed—we’ll continue to find new ways of making progress on research threads we care a lot about, and continue finding ways to hire people to help make that happen.

Research staff expansion is our biggest source of expense growth, and by encouraging us to move faster on exciting hiring opportunities, donor support plays a key role in how we execute on our research agenda. Though the huge support we’ve received to date has put us in a solid position even at our new size, further donor support is a big help for us in continuing to grow. If you want to play a part in that, thank you.


[1] This number includes a new staff member who is currently doing a 6-month trial with us.

[2] These estimates were generated using a model similar to the one I used last year. For more details see our 2018 fundraiser post.

[3] This falls outside the $4.4M–$5.5M range I estimated in our 2018 fundraiser post, but is in line with the higher end of revised estimates we made internally in Q1 2019.

Nice update!

Does MIRI know of any large, likely grants (from Open Phil or others) that are in the pipeline but aren’t reflected in the fundraising thermometer?

Thanks :)

All grants we know we will receive (or are very likely to receive) have already been factored into our reserves estimates, which together with our budget estimate for next year, is the basis for the $1M fundraising goal. We haven’t factored in any future grants where we’re uncertain if we’ll get the grant, uncertain of the size or structure of the grant, etc.