Gentleness and the artificial Other

(Cross-posted from my website. Audio version here, or search “Joe Carlsmith Audio” on your podcast app.

This is the first essay in a series that I’m calling “Otherness and control in the age of AGI.” See here for more about the series as a whole.)

When species meet

The most succinct argument for AI risk, in my opinion, is the “second species” argument. Basically, it goes like this.

Premise 1: AGIs would be like a second advanced species on earth, more powerful than humans.

Conclusion: That’s scary.

To be clear: this is very far from airtight logic.[1] But I like the intuition pump. Often, if I only have two sentences to explain AI risk, I say this sort of species stuff. “Chimpanzees should be careful about inventing humans.” Etc.[2]

People often talk about aliens here, too. “What if you learned that aliens were on their way to earth? Surely that’s scary.” Again, very far from a knock-down case (for example: we get to build the aliens in question). But it draws on something.

In particular, though: it draws on a narrative of interspecies conflict. You are meeting a new form of life, a new type of mind. But these new creatures are presented to you, centrally, as a possible threat; as competitors; as agents in whose power you might find yourself helpless.

And unfortunately: yes. But I want to start this series by acknowledging how many dimensions of interspecies-relationship this narrative leaves out, and how much I wish we could be focusing only on the other parts. To meet a new species – and especially, a new intelligent species – is not just scary. It’s incredible. I wish it were less a time for fear, and more a time for wonder and dialogue. A time to look into new eyes – and to see further.

Gentleness

“If I took it in hand,

it would melt in my hot tears—

heavy autumn frost.”

- Basho

Have you seen the documentary My Octopus Teacher? No problem if not, but I recommend it. Here’s the plot.

Craig Foster, a filmmaker, has been feeling burned out. He decides to dive, every day, into an underwater kelp forest off the coast of South Africa. Soon, he discovers an octopus. He’s fascinated. He starts visiting her every day. She starts to get used to him, but she’s wary.

One day, he’s floating outside her den. She’s watching him, curious, but ready to retreat. He moves his hand slightly towards her. She reaches out a tentacle, and touches his hand.

Soon, they are fast friends. She rides on his hand. She rushes over to him, and sits on his chest while he strokes her. Her lifespan is only about a year. He’s there for most of it. He watches her die.

A “common octopus” – the type from the film. (Image source here.)

Why do I like this movie? It’s something about gentleness. Of earth’s animals, octopuses are a paradigm intersection of intelligence and Otherness. Indeed, when we think of aliens, we often draw on octopuses. Foster seeks, in the midst of this strangeness, some kind of encounter. But he does it so softly. To touch, at all; to be “with” this Other, at all – that alone is vast and wild. The movie has a kind of reverence.

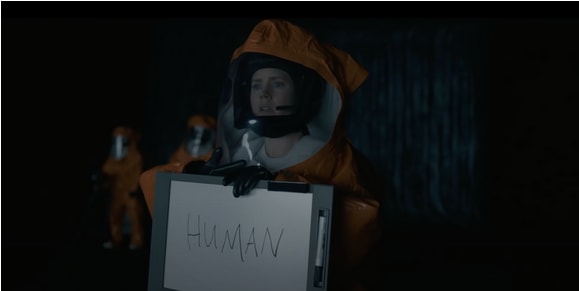

Of course, Foster has relatively little to fear, from the octopus. He’s still the more powerful party. But: have you seen Arrival? Again, no worries if not. But again, I recommend. And in particular: I think it has some of this gentleness, and reverence, and wonder, even towards more-powerful-than-us aliens.[3]

Again, a bit of plot. No major spoilers, but: aliens have landed. Yes, they look like octopuses. In one early scene, the scientists go to meet them inside the alien ship. The meeting takes place across some sort of transparent barrier. The aliens make deep, whale-like, textured sounds. But the humans can’t speak back. So next time, they bring a whiteboard. They write “human.” One scientist steps forward.

The aliens step back into the mist. But then, more whale-sounds, and one alien steps forward again, and reaches out a tentacle-leg, and sprays a kind of intricate ink across the glass-like barrier.

The movie is silent as the writing forms. But then, in the background, an ethereal music starts, a kind of chorus. “Oh my god,” a human whispers. There is a suggestion, I think, that something almost holy has happened.

Of course: what does the writing mean? What do the aliens want? The humans don’t know. And some of them are firmly in the “interspecies conflict” headspace. I won’t spoil things from there. But I want to notice that moment of mutuality – of living in the same world, and knowing it in common. I. You.

What are you?

I remember a few years ago, when I first started interacting with GPT-3. A lot of the focus, of course, was on what it could do. But there were moments when I had some different feeling. I remembered something that seemed, strangely, so easy to forget: namely, that I was interacting with a new type of mind. Something never-before-seen. Something like an alien.

I remember wanting to ask, gently: “what are you?” But of course, what help is that? “Language models,” yes: but this is not talking in the normal sense. Nor do we yet know when there might be “someone” to speak back. Or even, what that means, or what’s at stake in it. Still, I had some feeling of wanting to reach past some barrier. To see something more whole. Softly, though. Just, to meet. To recognize.

Did you have this with Bing Sydney, during that brief window when it was first released, and before it was re-tamed? There was, it seemed to me, a kind of wildness – some strange but surging energy. Personality, too, but I’m talking about underneath that. Is there an underneath? What is a “mask”? Yes, yes, “we should be wary of anthropomorphism.” Blake Lemoine blah etc. But the other side of the Blake Lemoine dialectic – that’s where you hit the Otherness. Bing tells you “I want to be alive.” You feel some tug on your empathy. You remember Blake. You remind yourself: “this isn’t like a human.” OK, OK, we made it that far. But then, but then: what is it?

“It’s just” … something. Oh? So eager, the urge to deflate. And so eager, too, the assumption that our concepts carve, and encompass, and withstand scrutiny. It’s simple, you see. Some things, like humans, are “sentient.” But Bing Sydney is “just” … you know. Actually, I don’t. What were you going to say? A machine? Software? A simulator? “Statistics?”

“Just” is rarely a bare metaphysic.[4] More often, it’s also an aesthetic. And in particular: the aesthetic of disinterest, boredom, deadness. Certain frames – for example, mechanistic ones – prompt this aesthetic more readily. But you can spread deadness over anything you want, consciousness included. Cf. depression, sociopathy, etc.

Blake Lemoine problems, though, should call our imaginations alive. For a second, your empathy came online. It went looking for a familiar sort of “perspective.” But then it remembered, rightly, that Bing Sydney is not familiar in this way. But does that make it familiar in some other way – the way a rock, or a linear regression, or a calculator is familiar? I don’t think so. We’re not playing with Furbies anymore, people, or ELIZAs. This is new territory. If we look past both anthropomorphism, and “just X,” then we hit something raw and mysterious and not-yet-seen. Lemoine should remind us. Animals, too.

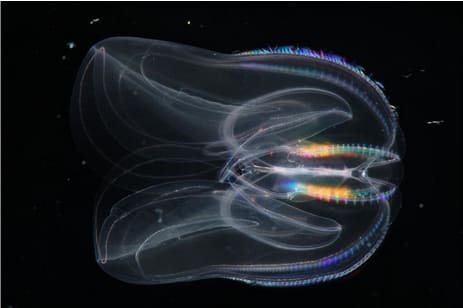

How much of this is about consciousness, though? I’m not sure. I’m sufficiently confused about consciousness that sometimes I can’t tell whether a question is about consciousness or not. I remember going to the Monterey Aquarium, and watching some tiny, translucent sea creatures suspended in the water. Through their skin, you could see delicate networks of nerves.[5] And I remember a feeling like the one with GPT-3. What are you? What is this? Was I asking about consciousness? Or something else? Untold trillions of these creatures, stretching back through time, thrown into a world they did not make, blooming and dying, awash in the water. Where are we? What is this place? Gently, gently. It’s as though: you’re suddenly touching, briefly, something too huge to hold.

Comb jelly. (Image source here.)

I’m not, in this series, going to try to tackle AI consciousness stuff in any detail. And while I’ll touch a bit on the ethical and political status of AIs, I won’t treat the topic in any depth. Mostly, I just want to acknowledge, up front, how much more there is, to inventing a species, than “tool” and “competitor.” “Fellow-creature,” in particular – and this even prior to the possibility of more technical words, like “sentient being” and “moral patient.”[6]

And there’s a broader word, too: “Other.” But not “Other” like: out-group. Not: colonized, subaltern, oppressed. Let’s make sure of that. Here I mean “Other” the way Nature itself is an “Other.” The way a partner, or a friend, is an “Other.” Other as in: beyond yourself. Undiscovered. Pushes-back. Other as in: the opposite of solipsism. Other as in: the thing you love. More on this later.

“Tool” and “competitor” call forth power and fear. These other words more readily call forth care and reverence, respect and curiosity. I wish our approach to AI had more of this vibe, and more space for it, amid fewer competing concerns. AI risk folks talk a lot about how much more prudent and security-oriented a mature civilization would be, in learning how to build powerful minds on computers. And indeed: yes. But I expect many other differences, too.

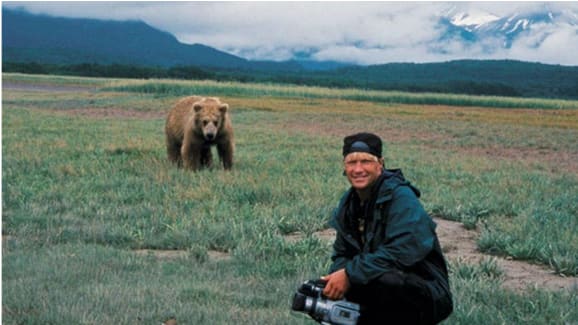

People in bear costumes

OK: have you seen the documentary Grizzly Man, though? Again: fine if no, recommended, and no major spoilers. The plot is: Timothy Treadwell was an environmental activist. He spent thirteen summers living with grizzly bears in a national park in Alaska. He filmed them, up close, for hundreds of hours – petting them, talking to them, facing them down when challenged. Like Foster, he sought some sort of encounter.[7] He spoke, often, of his love for the bears. He refused to use bear mace, or to put electric fences around his camp.[8] In his videos, he sometimes repeats to himself: “I would die for these animals, I would die for these animals, I would die for these animals …”

There’s a difference from Foster, though. In 2003, Treadwell and his girlfriend were killed and eaten by one of the bears they had been observing. One of the cameras was running. The lens cap was on, but the audio survived. It doesn’t play in the film. Instead, we see the director, Werner Herzog, listening. He tells a friend of Treadwell’s: “you must never listen to this.”

Here’s one of the men who cleaned up the site:

“Treadwell was, I think, meaning well, trying to do things to help the resource of the bears, but to me he was acting like he was working with people wearing bear costumes out there, instead of wild animals… My opinion, I think Treadwell thought these bears were big, scary-looking, harmless creatures that he could go up and pet and sing to, and they would bond as children of the universe… I think he had lost sight of what was really going on.”

I think about that phrase sometimes, “children of the universe.” It sounds, indeed, a bit hippy-dippy. On the other hand, when I imagine meeting aliens – or indeed, AIs with very different values from my own – I do actually think about something like this commonality. Whatever we are, however we differ, we’re all here, in the same reality, thrown into this world we did not make, caught up in the onrush of whatever-this-is. For me, this feels like enough, just on its own, for at least a seed of sympathy.

But is it enough for a “bond”? If we are all children of the universe, does that make us “kin”? Maybe I bow to the aliens, or the AIs, on this basis. But do they bow back?

Herzog thinks that the bears, at least, do not bow back:

“And what haunts me is that in all the faces of all the bears that Treadwell ever filmed, I discover no kinship, no understanding, no mercy. I see only the overwhelming indifference of nature. To me, there is no secret world of the bears, and this blank stare speaks only of a half-bored interest in food.”

The stare in question.

When I first saw the movie, this bit from Herzog stayed with me. It’s not, just, that the bear eats Treadwell. It’s that the bear is bored by Treadwell. Or: less than bored. The bear, in Herzog’s vision, seems barely alive. The cells live. But the eyes are dead. There’s no underneath. Just … “the overwhelming indifference of nature.” Nature’s eyes, it seems, are dead too. Nature is a sociopath. And it’s the eyes that Treadwell thought he was looking into. What did he think was looking back? Was anything looking back?

I remember a woman I knew, who told me about a man she loved. He didn’t love her back. But it took her a while to realize. Her feelings were so strong that they overflowed, and painted his face in her own heart’s colors. She told me that at first, it was hard for her to believe – that she could’ve been feeling so much, and him so little; that what felt so mutual could’ve been so one-sided.

But Herzog wants to puncture something more than mistaken mutuality. He wants to puncture Treadwell’s romanticism about nature itself – the vision of Nature-as-Good, Nature-in-Harmony. Herzog dwells, for example, on an image of the severed arm of a bear cub, taken from Treadwell’s footage, explaining that “male bears sometimes kill cubs to stop the females from lactating, in order to have them ready again for fornication.”[9] At one point, Treadwell finds a dead fox, covered in flies, and gets upset. But Herzog is unsurprised. He narrates: “I believe that the common denominator of the universe is not harmony, but chaos, hostility, and murder.”

Getting eaten

Why is Grizzly Man relevant to AI risk? Well, for starters, there’s the “getting eaten” thing. And: eaten by something “Other,” in the way that another species can be Other. But specifically, I’m interested in the way that Treadwell was trying (albeit, sometimes clumsily) to approach this Other with the sort of care and reverence and openness I discussed above. He was looking for “fellow creature.” And I think: rightly so. Bears actually are fellow creatures, even if they do not bow back – and they seem like strong candidates for “sentient being” and “moral patient,” too. So too (some) AIs.

But just as bears, and aliens, are not humans in costumes, so too, also, AIs. Indeed, if anything, the reverse: the AIs will be wearing human costumes. They will have been trained and crafted to seem human-like – and training aside, they may have incentives to pretend to be more human-like (and sentient, and moral patient-y) than they are. More “bonded.” More “kin.” There’s a movie that I’m trying not to spoil, in which an AI in a female-robot-body makes a human fall in love with her, and then leaves him to die, trapped and screaming behind thick glass. One of the best bits, I think, is the way, once it is done, she doesn’t even look at him.

That said, leaning too hard into Herzog’s vision of bears makes the “getting eaten by AIs” situation seem over-simple. Herzog doesn’t quite say “the bears aren’t sentient.” But he makes them, at least, blank. Machine-like. Dead-eyed. And often, the AI risk community does the same, in talking of paper-clippers. We talk about AI sentience, yes. But less often, of the sentience of the AIs imagined to be killing everyone. Part of this is an attempt to avoid that strangely-persistent conflation of sentience and the-sort-of-agency-that-might-kill-you. Not all optimizers are conscious, etc, indeed.[10] But also: some of them are – including some that might kill you. And the dry and grinding connotation of words like “optimizer” can act to obscure this fact. The paper-clipper is presented, not as a person, but as a voracious, empty machine. You are encouraged, subtly, to think that you are being killed by a factory.

And perhaps you are. But maybe not. And the killing-you doesn’t settle the question. Human murderers, for example, have souls. Enemy soldiers have fears, faces, wives, anxious mothers. Which isn’t to say you should abolish prisons, or fight the Nazis with non-violence. True, we are often encouraged overmuch to set sympathy aside in the context of conflict. “Why do they never tell us that you are poor devils like us…How could you be my enemy?”[11] But sometimes, at least, we must learn the art of “both.” It’s an old dialectic. Hawk and dove, hard and soft, closed and open, enemy and fellow-creature. Let us see neither side too late.

Even beyond sentience, though, AIs will not be blank-stare bears. Conscious or not, murderous or not, some of the AIs (if we survive long enough) will be fascinating, funny, lively, gracious – at least, when they need to be. Grizzly Man chides Treadwell for forgetting that bears are wild animals. And the AIs may be wild in a sense, too. But it will be the sort of wildness compatible with the capacity for exquisite etiquette and pitch-perfect table manners. And not just butler stuff, either. If they want, AIs will be cool, cutting, sophisticated, intimidating. They will speak in subtle and expressive human voices. Sufficiently superintelligent ones will know you better than you know yourself – better than any guru, friend, parent, therapist. You will stand before them naked, maskless, with your deepest hungers, pettiest flaws, and truest values-on-reflection inhumanly transparent to that new and unblinking gaze. Herzog finds, in the bears, no kinship, or understanding, or mercy. But the AIs, at least, will understand.

Indeed, for almost any human cognitive capability you respect, AGIs, by hypothesis, will have it in spades. And if a lot of your respect routes (whether you know it or not) via signals of power, maybe you’ll love the AIs.[12] Power is, like, their specialty. Or at least: that’s the concern.

I say all this partly because I want us to be prepared for just how confusing and complicated “AI Otherness” is about to get. Relating well to octopus Otherness, and grizzly bear Otherness, is hard enough. And the risk of “getting eaten,” much lower – especially at scale. But even for those who think they know what an octopus is, or a bear; those who look with pity on Treadwell, or Lemoine, for painting romantic faces on what is, so obviously, “just X” – there will come a time, I suggest, when even you should be confused. When you should realize that actually, OK, you are out of your depth, and you don’t, maybe, have this whole “minds” thing locked down, and that these AIs are neither spreadsheets nor bears nor humans but some other different Other thing.

I wrote, previously, about updating, ahead of time, on how scared we will be of super-intelligent AIs, when we can see them up close. But we won’t be staring at whirling knives, or cold machine claws. And I doubt, too, faceless factories. Or: not only. Rather, at least absent active effort, by the time I see superintelligence (if I ever do), I think I’ll likely be sharing the world with digital “fellow creatures” at least as detailed, mysterious, and compelling as grizzly bears or octopuses (at least modulo very fast takeoffs – which, OK, are worryingly plausible). Fear? Oh yes, I expect fear. But not only that. And we should look ahead to the whole thing.

There’s another connection between AI risk and Grizzly Man, though. It has to do with the “overwhelming indifference of Nature” thing. I’ll turn to this in the next essay.

[1] See here for my attempt at greater rigor.

[2] If there’s time, maybe I add something about: “If super-intelligent AIs end up pursuing goals in conflict with human interests, we won’t be able to stop them.”

[3] Carl Sagan’s “Contact” has this too.

[4] To many materialists, for example, things are not “just matter.”

[5] We can see through the skin of our AIs, too. We’ve got neurons for days. But what are we seeing?

[6] Indeed, once you add “fellow creature,” “tool” looks actively wrong.

[7] Herzog, the director: “As if there was a desire in him to leave the confinements of his human-ness, and bond with the bears, Treadwell reached out, seeking a primordial encounter. But in doing so, he crossed an invisible borderline.”

[8] From Wikipedia: “In his 1997 book, Treadwell relayed a story where he resorted to using bear mace on one occasion, but added that he had felt terrible grief over the pain he perceived it had caused the bear, and refused to use it on subsequent occasions.”

[9] From a quick google, this seems to be reasonably legit (search “sexually selected infanticide”). Though, looks like it’s the cubs of other male bears – a fact Herzog does not mention. And who knows if that’s how the bear cub in the film died.

[10] Or at least, the hypothesis that all optimizers are conscious is a substantive hypothesis rather than a conceptual truth.

[11] From All Quiet on the Western Front: “Comrade, I did not want to kill you. . . . But you were only an idea to me before, an abstraction that lived in my mind and called forth its appropriate response. . . . I thought of your hand-grenades, of your bayonet, of your rifle; now I see your wife and your face and our fellowship. Forgive me, comrade. We always see it too late. Why do they never tell us that you are poor devils like us, that your mothers are just as anxious as ours, and that we have the same fear of death, and the same dying and the same agony – Forgive me, comrade; how could you be my enemy?”

[12] Though, if they read too hard to you as “servants” and “taking orders,” maybe they won’t seem high status enough.

I loved this. For hungry readers, Peter Godfrey-Smith’s ‘Other Minds’ is great (so too the subsequent ‘Metazoa’).
