AI Safety Doesn’t Have to be Weird

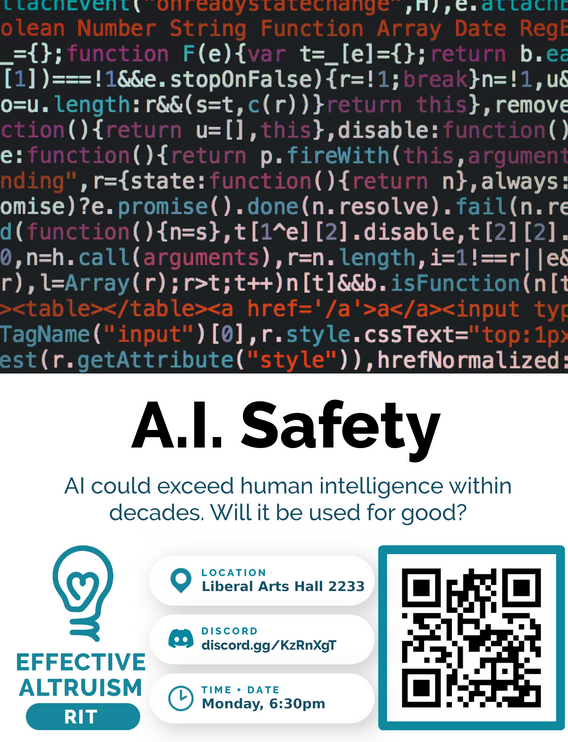

Whenever I hear people say that effective altruism is weird, the first example I hear is the focus on AI safety. This point never made much sense to me. For one thing, as Peter Singer once pointed out, you can’t really criticize effective altruism for not picking the right cause areas. What you would be criticizing are this community’s conclusions, and not effective altruism itself. But lately I’ve been wondering about how to make this cause area more appealing. I’m in charge of the club at the Rochester Institute of Technology, and wanted to publicize our AI Safety discussion. Our posters typically contain a subtitle giving a little more information about the topic. Here’s the poster we ended up making for the event:

I will say that because of this poster, two new people joined our club, which is far better than we usually do. I don’t think it was entirely due to our phrasing. It is a tech school, after all. But I don’t think the way we decided to talk about it hurt us much. So I wanted to write a post about how we can talk about AI safety without looking like schizophrenics.

The Pitch

I can’t find it now, but the tweet that inspired this post was made probably a year ago. The idea is that one of the weirdest things about effective altruism is how much time the community spends on AI taking over the world, an effort that doesn’t seem to make much progress and rests on a questionable fear to begin with.

I think this is an understandable point of view if you have only just heard about AI safety. AI destroying humanity is something that typically happens in sci-fi movies. But sci-fi movies do a lot of ridiculous things. Nobody’s ever going to take seriously the idea that we should spend taxpayer money researching the ethics of Star Trek transporters. But what if, instead of thinking of it from the point of view of technology that doesn’t yet exist, we think of AI safety in terms of what already exists?

Machine learning algorithms already exist, and they are already problematic. It was something we discussed in one of my classes[1]. Specification gaming, where a system satisfies the literal objective it was given without achieving what its designers intended, is already a problem in AI. Maybe we can use that as a basis for the approach. For example:

“AI is getting more powerful. It also makes a lot of mistakes. And it’s being used more often. How do we make sure (a) it’s being used for good, and (b) it doesn’t accidentally do terrible things that we didn’t want?”

In this approach, AI safety is a problem we should want solved anyway, even if AI never destroys humanity. AGI destroying the world is just the extreme example of what could go wrong.

Possible Flaws

Of course, I’m aware that most people who are in AI safety today are in it because of the possibility of it ending human civilization. I can imagine those people being opposed to having more people in the field who don’t take it as seriously as they do. Using this approach could lead to infighting amongst the safety researchers about how important their jobs are.

Also, the way I’m talking about it doesn’t really make it clear why this is an effective altruism cause area. You’d need to explain the extreme case, which is the AI destroying humanity to accomplish its goal.

Other Benefits of this Approach

Something that I don’t think gets enough attention in AI safety is preventing bad actors from getting ahold of AGI and using it to kill people. The typical counterargument is that if we have an AGI that’s smart enough to destroy the world, we probably also have one that’s smart enough to stop other AGIs from being developed. I think it’s quite possible that stopping other people from creating AGIs is simply a harder problem than ending the world, so we might get a world-ending AGI before we get an AGI capable of suppressing other superintelligences. So I still think this is worth looking into. I bring it up here because my approach to talking about AI safety implies that this problem deserves attention, rather than pushing everyone into solving the alignment problem.

[1] The class was called “Computation and Culture”, if you’d like to know.

> “AI is getting more powerful. It also makes a lot of mistakes. And it’s being used more often. How do we make sure (a) it’s being used for good, and (b) it doesn’t accidentally do terrible things that we didn’t want?”

Very similar to what I currently use!

I’ve been training with AI Safety messaging for a bit, and I’ve stuck to these principles:

1. Use simple, agreeable language.

2. Refrain from immediately introducing concepts that people have preconceived misconceptions about.

So mine is something like:

1. AI is given a lot of power and influence.

2. Large tech companies are pouring billions into making AI much more capable.

3. We do not know how to ensure this complex machine respects our human values and doesn’t cause great harm.

I do agree that this understates the risks associated with superintelligence, but in my experience speaking with laymen, if you introduce superintelligence as the central concept at first, the debate becomes “Will AI be smarter than me?” which provokes a weird kind of adversarial defensiveness. So I prioritise getting people to agree with me before engaging with “weirder” arguments.