We have been modelling the ultimate best future (billions of years from now) for 3+ years, Xerox PARC-style, and we got very exciting results, including AI safety results.

Thank you for your interest, David! Math-proven safe AIs are possible; our group has just achieved it (our researcher writes under a pseudonym for safety reasons, please ignore it): https://x.com/MelonUsks/status/1907929710027567542

Why is it math-proven safe? Because it’s fully static: an LLM by itself is a giant static geometric shape in a file, and only GPUs make it non-static and agentic. It’s called place AI, and it’s a type of tool AI.

To address your second question: there is a way to know whether a given matrix multiplication is for AI or not. In the cloud, we’ll have a math-proven safe AI model inside each math-proven safe GPU: GPU hardware will be remade into an isolated unit that just outputs images, text, etc. Each GPU is an isolated, math-proven safe computer whose sole purpose is safety and the hardware+firmware isolation of the AI model from the outside world.

But the main priority is putting all the GPUs in clouds controlled by international scientists; they’ll figure out the small details that remain. Almost all current GPUs (especially consumer ones) are 100% unprotected from the imminent AI agent botnet (think a computer virus, but much worse), and we can’t switch off the whole Internet.

Please refer to the link above for further information. Thank you for this conversation!

Yes, the only realistic, planet-wide, 100% safe solution is this: putting all the GPUs in safe cloud(s) controlled by international scientists who only make math-proven safe AIs and only stream output to users.

Each user can use his GPU for free from the cloud on any device (even a phone); when the user isn’t using it, he can choose to earn money by letting others use his GPU.

You can do everything you do now, even buy or rent GPUs; they will all just be math-proven safe cloud GPUs instead of physical ones. Because GPUs are like nukes, and we want either no nukes or to put them deep underground in one place, where international scientists can control them.

We still haven’t 100% solved computer viruses (my mom had an Android virus recently); even the iPhone and Nintendo Switch got jailbroken almost instantly, and there are companies that jailbreak iPhones as a service. I think Google Docs has never been jailbroken or majorly hacked; it’s a cloud service, so we need to base our AI and GPU security on this best example: we need to have all our GPUs in a cloud controlled by international scientists.

Otherwise any hacker will be able to write a virus (just to steal money) with an AI agent component and grab consumer GPUs like cupcakes. The AI agent can even become autonomous (and we know agents can become evil in major ways if given an evil goal, e.g. wanting to have a tea party with Stalin and Hitler; there was a recent paper). Will anyone align AIs for hackers, or will the hackers themselves do it perfectly (they won’t), making an AI agent that just steals money but stays a slave and does nothing else bad?

Here is a draft continuation you may find interesting (or not ;)):

In unreasonable times, the solution to the AI problem will sound unreasonable at first, even though it’s probably the only reasonable and working solution.

Imagine that in a year we solved alignment and even hackers/rogue states cannot unleash AI agents on us. How did we do it?

The most radical solution (unrealistic and undesirable) is international cooperation to destroy all the GPUs and never make them again. Basically, returning to the 1990s computer-wise: no 3D video games, but everything else similar. But it’s unrealistic and probably stifles innovation too much.

Less radical is keeping GPUs, so people can have video games and simulations, but internationally outlawing all AI and replacing GPUs with ones that don’t support AI at all (we may need a Mathematically Proven Secure GPU OS, so GPUs are secure sandboxes that are more locked down than nuclear plants: they cannot run AI models, self-destruct in case of tampering, call the FBI, and need Web access to work. There are ways to make it extremely hard to run AIs on them, like having all GPUs compute only non-AI things remotely on NVIDIA servers, so computers are like thin clients and GPU compute is always rented from the cloud, etc.) They can even burn and call the FBI if a person tries to run some AI on them (that’s a joke). So it’s like returning to 2020 computer-wise: no AI, but everything else the same.

Even less radical is to have whitelists of models right on the GPU: a GPU becomes a secure computer that only works if it’s connected to the main server (it can be some international agency rather than NVIDIA, because we want all GPU makers, not just NVIDIA, to be forced to make non-agentic GPUs). NVIDIA and other GPU providers approve models a bit like Apple approves apps in its App Store, or like Nintendo approves games for its Nintendo Switch. So there are no agentic models; we’ll have the non-agentic tool AIs that Max Tegmark recommends: they are task-specific (they don’t have broad intelligence), and they can be chatbots, fold proteins, and do everything without replacing people. And place AIs that let you be the agent and explore the model like a 3D game. This is a good solution that keeps our world the way it is now, but 100% safe.

And NVIDIA will be happy to have this world, because it will double its business: NVIDIA will be able to replace all the GPUs, so people will bring in theirs and get some money for them, then buy a new non-agentic sandboxed GPU with an updatable whitelist (you’ll probably need an Internet connection to use GPUs from then on, especially if you haven’t updated the whitelist of AI models for more than a few days).
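A minimal sketch of the on-GPU check described above. It assumes, hypothetically, that the whitelist is a set of SHA-256 digests of approved model files and reads “more than a few days” as three days; `model_digest`, `may_run` and `MAX_WHITELIST_AGE` are illustrative names, and signed updates and tamper-resistant hardware are not modelled.

```python
import hashlib
from datetime import datetime, timedelta, timezone

# Assumption: "more than a few days" is read as three days.
MAX_WHITELIST_AGE = timedelta(days=3)


def model_digest(weights: bytes) -> str:
    """Fingerprint a model file; changing any byte of the weights changes the digest."""
    return hashlib.sha256(weights).hexdigest()


def may_run(weights: bytes, whitelist: set[str], whitelist_updated: datetime, now: datetime) -> bool:
    """Allow a model only if the whitelist is fresh and contains the model's digest."""
    if now - whitelist_updated > MAX_WHITELIST_AGE:
        return False  # stale whitelist: refuse everything until the GPU updates it
    return model_digest(weights) in whitelist


# Usage with made-up byte strings standing in for model files.
approved = b"approved tool-AI weights"
whitelist = {model_digest(approved)}
updated = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(may_run(approved, whitelist, updated, updated + timedelta(days=1)))         # True
print(may_run(b"unknown agent", whitelist, updated, updated + timedelta(days=1)))  # False
print(may_run(approved, whitelist, updated, updated + timedelta(days=10)))         # False (stale)
```

Hashing the weights means a model cannot be slipped past the list by renaming the file; any change to the weights produces a different digest.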

And NVIDIA will be able to take up to a 15-30% commission from paid AI model providers (like OpenAI). Smaller developers will make models, and they will be registered in a stricter fashion than in Apple’s App Store, similar to Nintendo developers. Basically, we’ll want to know they are good people and won’t run evil AI models or agents while pretending to develop something benign. So we just need to spread the word and especially convince politicians of the dangers and of this solution: that we simply need to make GPU makers the gatekeepers who have skin in the game to keep all AI models safe.

We’ll give deadlines to GPU owners. First, we’ll update their GPUs with blacklists and whitelists. Then there will be a deadline to replace GPUs; otherwise the old ones will stop working (they will be remotely bricked, and all OSes and AI tools will have a list of those bricked GPUs and will refuse to work with them), and law enforcement will take possession of them.
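The deadline logic can be sketched as follows, assuming a hypothetical registry that maps each GPU id to whether it has been replaced. The ids, dates and function names are illustrative only; the remote-bricking mechanism itself and the law-enforcement step are not modelled.

```python
from datetime import date


def is_bricked(replaced: bool, today: date, deadline: date) -> bool:
    """An old GPU that was not replaced by the deadline is treated as bricked."""
    return not replaced and today > deadline


def update_bricked_list(fleet: dict[str, bool], today: date, deadline: date) -> set[str]:
    """Build the shared bricked list from a registry mapping GPU id -> replaced?"""
    return {gpu_id for gpu_id, replaced in fleet.items() if is_bricked(replaced, today, deadline)}


def tool_accepts(gpu_id: str, bricked_ids: set[str]) -> bool:
    """An OS or AI tool refuses to work with any GPU on the shared bricked list."""
    return gpu_id not in bricked_ids


# Usage with hypothetical GPU ids and dates.
fleet = {"gpu-old-1": False, "gpu-new-1": True}
deadline = date(2026, 1, 1)
bricked = update_bricked_list(fleet, date(2026, 2, 1), deadline)
print(bricked)                             # {'gpu-old-1'}
print(tool_accepts("gpu-new-1", bricked))  # True
print(tool_accepts("gpu-old-1", bricked))  # False
```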

This way we’ll rid our world of the insecure, unsafe GPUs we have now. Only whitelisted models will run inside the sandboxed GPU, and they will only output safe text or pictures.

Controlling a few GPU companies is much easier than dealing with countless insecure, unsafe GPUs in the hands of hackers, militaries and rogue states everywhere.

At the very least, politicians (to improve defense and national security) can make NVIDIA and other GPU manufacturers sell those non-agentic GPUs to foreign countries, so an ever bigger % of GPUs will be non-agentic (or have some very limited agency, if math-proven safe) and mathematically proven to be safe. Just as we try to limit the number of countries with nuclear weapons, we can replace their GPUs (their “nukes”, their potentially uncontrollable and autonomous weapons) with safe non-agentic GPUs (= conventional, non-military civilian tech).

Yes, David, I should start recommending GPUs with internal antiviruses: antiviruses use lists + heuristics, and they work.

We’d better have GPUs that are protected right now, before things go bad.

Even if we don’t know how to make ideal, 100% perfect anti-bad-AI antiviruses at the GPU level, it’s better than the 100% unprotected GPUs we have now. It’ll deter some threats and slow down hackers and an agentic AI takeover.

It can be a start that we’ll build upon as we understand the threats better.

A major problem with the setup of our forum: currently it’s possible to go here, or to any post, and double downvote all the new posts en masse (please don’t! Just believe me that it’s possible). Writers who spent months on their AI safety proposals will have their posts ruined (almost no one reads posts with a negative rating), will probably abandon our cause and go become evil masterminds.

Solution: a UI proposal that solves the problem of demotivated writers, helps teach writers how to improve their posts (so it makes all posts better), keeps the downvote buttons, and increases the signal-to-noise ratio on the site, because both authors and readers will know why the post was downvoted:

It’s important to ask downvoters why they are downvoting if the downvote will move the post below zero karma. The author may have been writing something for months; if it’s important enough for the downvoter to downvote, they can spend an additional moment choosing among some popular reasons to downvote (we have a lot of space on desktop, so we can put the most popular reasons for downvotes as buttons like Spam, Bad Title, Too Many Tags, Typo...) or choose a reason later on a special page. Otherwise the writer will have no clue, will rage quit and become Sam Altman instead.

More seriously now: on desktop we have a lot of space and can show buttons like these: Spam (this can potentially be a big offense, bigger than 1 downvote), Typo (it probably shouldn’t lower the post as much as a full downvote), Too Many Tags; basically, the popular reasons people downvote. We can make those buttons appear when hovering over the downvote button, or always. This way people still click once to downvote, like before.
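The weighting of reasons and the “ask only when the post goes below zero” rule can be sketched like this. The specific weights (Spam = 2, Typo = 0.5) and the reason keys are hypothetical values chosen only to match “bigger than 1 downvote” and “not as much as a full downvote”; the forum’s real karma system may work differently.

```python
from typing import Optional

# Hypothetical weights: Spam counts more than one downvote, Typo less than one.
REASON_WEIGHTS = {
    "spam": 2.0,
    "bad_title": 1.0,
    "too_many_tags": 1.0,
    "typo": 0.5,
}


def downvote_effect(reason: Optional[str], base: float = 1.0) -> float:
    """Karma change for one downvote; a missing or unknown reason counts as a plain downvote."""
    return -base * REASON_WEIGHTS.get(reason, 1.0)


def should_ask_for_reason(karma_before: float, effect: float) -> bool:
    """Prompt for a reason only when this downvote would push the post below zero."""
    return karma_before >= 0 and karma_before + effect < 0


print(downvote_effect("spam"))                            # -2.0
print(downvote_effect("typo"))                            # -0.5
print(should_ask_for_reason(0, downvote_effect(None)))    # True
print(should_ask_for_reason(3, downvote_effect("spam")))  # False
```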

Especially if a downvote pushed the post into negative karma, we show a bubble in a corner for 30 seconds that says something like this: “Please choose one of the other popular reasons people downvote, or hover here to type a word or two about why you double downvoted; it’ll help the writer improve.”

When downvoters hover over the downvote button, it’ll capture their typing cursor, so they can quickly type a word or two about why they’re downvoting and press Enter to downvote. Again, a very elegant UI.

If they just click downvote (you can still keep this button), show a balloon for 30 seconds where the downvoter can choose one of the popular reasons for downvoting, or again hover and instantly type a word or two and press Enter to downvote.

A page “Leave feedback for your downvotes”, where we show first the downvotes that ruined articles, then double downvotes, then the rest. We can say it honestly in our UI: these are new authors, and your downvote most likely demotivated them because the article now has negative karma, so please write how they can improve. And we can perhaps note that feedback for popular articles (with comments, from established writers and/or with above-zero karma) is less important, so you don’t need to bother.
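The ordering of that feedback page can be sketched as a stable sort with three priority groups. The `Downvote` record and its fields are hypothetical, and treating a strength of 2 as a double downvote is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Downvote:
    post_title: str
    strength: int      # assumption: 1 = normal downvote, 2 = double downvote
    karma_before: int
    karma_after: int


def ruined_post(v: Downvote) -> bool:
    """The downvote moved the post from zero or above into negative karma."""
    return v.karma_before >= 0 and v.karma_after < 0


def feedback_priority(v: Downvote) -> int:
    if ruined_post(v):
        return 0  # first: downvotes that ruined articles
    if v.strength >= 2:
        return 1  # then: double downvotes
    return 2      # then: the rest


def feedback_queue(votes: list[Downvote]) -> list[Downvote]:
    # sorted() is stable, so votes keep their original order within each group.
    return sorted(votes, key=feedback_priority)


votes = [
    Downvote("Popular essay", 1, 40, 39),
    Downvote("Long-form proposal", 2, 12, 10),
    Downvote("First post", 1, 0, -1),
]
print([v.post_title for v in feedback_queue(votes)])
# ['First post', 'Long-form proposal', 'Popular essay']
```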

The phone UI is similar, but there’s no hovering. We can still show popular reasons to downvote on top, maybe as icons: Spam, Typo, etc., plus a Type and Downvote button that looks like a typing cursor & Downvote icon. Tapping it makes the keyboard appear automatically; you enter a word or two and press Return to downvote. So again, in most cases it’s one tap to downvote, like before. We’ve just given downvoters more ways to express themselves.

As I said before, we need this at least when the downvote would ruin the post by putting it into negative-karma territory. This way, we prioritize teaching people to write better and more, instead of scaring them away from our website. Everybody wins.

Thank you for reading and making the website work!

The only complete and comprehensive solution that makes AIs 100% safe: in a nutshell, we need at least to lobby politicians to make GPU manufacturers (NVIDIA and others) create robust blacklists of bad AI models and update GPU firmware with them. That alone is not the full solution: please steelman it and read the rest to learn how to make it much safer and why it will work (NVIDIA and other GPU makers will want to do it because it’ll double their business and all future cash flows; governments will want it because it removes all AI threats from China, all hackers, terrorists and rogue states):

The elephant in the room: even if the current major AI companies align their AIs, there will be hackers (who can create viruses with an agentic AI component to steal money), rogue states (which can decide to use AI agents to spread propaganda and to spy) and militaries (AI agents in drones and for hacking infrastructure). So we need to align the world, not just the models:

Imagine an agentic AI botnet starts spreading on user computers and GPUs. GPUs are like nukes waiting to be taken; they are not protected from running bad AI models at all. I call it the agentic explosion, and it’s probably going to happen before the “intelligence-agency” explosion (intelligence on its own cannot explode: without GPUs, an LLM is a static geometric shape, a bunch of vectors). Right now we are hopelessly unprepared. We won’t have time to create “agentic AI antiviruses”.

We need to force GPU and OS providers to update their firmware and software to at least have robust, updatable blacklists of bad AI (agentic) models, and robust whitelists too, in case there are so many unaligned models that blacklists become useless.
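A minimal sketch of how a GPU could combine the two lists: known-bad models are always refused, and once the blacklist grows too large to be a practical defence, the GPU falls back to running only whitelisted models. The threshold value and all names are hypothetical; the text does not specify when the switch should happen.

```python
from enum import Enum


class Mode(Enum):
    BLACKLIST = "blacklist"  # run anything except known-bad models
    WHITELIST = "whitelist"  # run only explicitly approved models


# Hypothetical threshold: past this many known-bad models, blacklisting is considered useless.
MAX_PRACTICAL_BLACKLIST = 10_000


def choose_mode(blacklist_size: int) -> Mode:
    return Mode.WHITELIST if blacklist_size > MAX_PRACTICAL_BLACKLIST else Mode.BLACKLIST


def allowed(model_id: str, blacklist: set[str], whitelist: set[str]) -> bool:
    if model_id in blacklist:
        return False  # known-bad models are refused in every mode
    if choose_mode(len(blacklist)) is Mode.WHITELIST:
        return model_id in whitelist
    return True


print(allowed("tool-ai", {"bad-agent"}, set()))    # True (blacklist mode)
print(allowed("bad-agent", {"bad-agent"}, set()))  # False
```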

We can force NVIDIA to replace agentic GPUs with non-agentic ones. Ideally, those non-agentic GPUs are like sandboxes that run an LLM internally and can only output text or images as safe output. They probably shouldn’t connect to the Internet or use tools, or at least we should be able to limit that if we need to.

This way NVIDIA will have skin in the game and be directly responsible for the safety of the AI models that run on its GPUs.

In the same way, Apple feels responsible for the App Store and the apps in it and doesn’t let viruses in.

NVIDIA will want it because, like the App Store, it can potentially take a 15-30% cut from OpenAI and other commercial models, while free models will remain free (like the free apps in the App Store).

Replacing GPUs can double NVIDIA’s business, so NVIDIA may even lobby for these measures itself. All companies and CEOs want money and have obligations to shareholders to increase the company’s market capitalization. We must make AI safety profitable. Companies that don’t promote AI safety should go bankrupt or be outlawed.

If we go extinct, it doesn’t matter how much value we get. We won’t exist to appreciate it.

If we don’t go extinct, it probably means we have enough “value” (we survived, so we had and still have food and shelter), and we probably have math proofs of how to make AI agents safe. Once the main extinction event (probably the agentic AI explosion) is in the past, we can work on increasing the value of futures.

The summary is not great; the main idea is this: we have 3 “worlds”: the physical one, the online one, and AI agents’ multimodal “brains” as the third world. We can only easily access the physical world; online we are slower than AI agents, and we cannot access the multimodal “brains” at all, since they are often owned by private companies.

Meanwhile, AI agents can access and change all 3 “worlds” more and more.

We need to level the playing field by making all 3 worlds easy for us to access and democratically change, exposing the online world and especially the multimodal “brains” world as game-like 3D environments where people can train and get at least the same, and ideally more, freedoms and capabilities as AI agents.

Feel free to ask any questions, suggest any changes and share your thoughts.

Not bad for a summary. It’s important to ensure that human freedoms grow faster than the restrictions on us. Human and agentic AI freedoms can be counted; we don’t want to have fewer freedoms than agentic AIs (that’s the definition of a dystopia), but sadly we are already ~10% there and are still falling right into a dystopia.

I’ll be happy to answer any questions 🫶🏻 We’ve already started discussing the project to some extent, but why not discuss it here too.

P.S. Should I change the title or tags? How? I’m very bad at those things.

Yep, sharing is as important as creating. If we have the next ethical breakthrough, we want people to learn about it quickly.

Of course, place AI is just one of the ways; it wouldn’t be wise to focus only on it. Place AI has certain properties that I think could be useful to replicate somehow in other types of AIs: the place “loves” to be changed 100% of the time (like a sculpture); it’s “so slow that it’s static” (it basically doesn’t do anything itself, except some simple algorithms we can build on top of it; we bring it to life and change it); and it only does what we want, because we are the only ones who do things in it… There are some simple physical properties of agents: basically, the more space-like they are, the safer they are. Thank you for this discussion, Will!

P.S. I agree that we should care first and foremost about base reality. It’ll be great to one day have spaceships flying in all directions, with human astronauts exploring new planets everywhere; we can give them all our simulated Earth to hop in and out of, so they won’t feel as homesick.

Yep, that’s how it should be, until we’ve mathematically proven that AI/AGI agents are safe.

Thank you, Will.

About the corrigibility question first: the way I look at it, an agentic AI is like a spider knitting a spiderweb. Do we really need the spider (the AI agent, the time-like thing) if we have all the spiderwebs (all the places and all the static, space-like things like texts, etc.)? The beauty of static geometric shapes is that we can grow them; we already do it when we train LLMs, and the training itself doesn’t involve making them agents. You’ll need hard drives and GPUs, but you can grow the shapes and never actually remove anything from them (you can if you want, but why? It’s our history).

Humans can change the shapes directly (the way we use 3D editors or remodel our property in The Sims) or, more elegantly, by hiding parts of the ever more all-knowing whole (“forgetting” and “recalling” slices of the past, present, or future; think of a long-exposure photo that captures a whole year of time in a single image, which we could make 3D and walkable, so you could focus on a present moment or zoom out to see thousands of years at once in some simulations). We can choose to be the only agents inside those static AI models: humans will have no “competitors” for the freedom to be the only “time-like” things inside those “space-like” models.

Max Tegmark recently shared that numbers are represented as a spiral inside a model: directly on top of the number 1 are the numbers 11, 21, 31… A very elegant representation. We can make it more human-understandable by presenting it as a spiral staircase on some simulated Earth that models the internals of the multimodal LLM.
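The spiral-staircase picture can be made concrete with a simple helix, assuming one full turn every 10 integers so that 1, 11, 21, 31 share the same angle and differ only in height. This only illustrates the geometry described above; it is not the representation any particular model actually learns, and `PERIOD`, `RISE` and `helix_point` are hypothetical names.

```python
import math

PERIOD = 10  # assumption: one full turn of the staircase every 10 integers
RISE = 1.0   # hypothetical height gained per integer (one stair step)


def helix_point(n: int) -> tuple[float, float, float]:
    """Place integer n on a helix: the angle depends on n mod PERIOD, the height on n."""
    angle = 2 * math.pi * (n % PERIOD) / PERIOD
    return (math.cos(angle), math.sin(angle), RISE * n)


for n in (1, 11, 21, 31):
    x, y, z = helix_point(n)
    print(f"{n:>2}: x={x:.3f} y={y:.3f} z={z:.1f}")  # same x and y, rising z
```

Walking up one step moves you one number forward; walking straight up one full turn moves you ten numbers forward.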

---

The second question: about people who have forgotten that the real physical world exists.

I’ve been thinking about the mechanics of absolute freedom for three years, so the answer is not very short. I’ll try to cram a whole book into a comment: it should be a choice, of course, and a fully informed one. You should be able to see all the consequences of your choices (if you want).

Let’s start with the simpler and more familiar present. I think we can gradually create a virtual backup of our planet. It can be a free resource (ideally an open-source app/game/web platform) that anyone can visit. The vanilla version tries to be completely in sync with our physical planet. People can jump in and out anytime they want on any of their devices. The other versions of Earth can have any freedoms/rules people democratically choose for them.

This is how it could look in the not-so-distant future (if we focus on it, it could possibly take just a year or two): people who want it can buy a nice, comfy-looking wireless armchair or a small wireless device (maybe even like AirPods). You come home, jump into the comfy armchair, and close your eyes. Nothing happens, everything looks exactly the same, but you are now in a simulated Earth, and you know it full well. You’re always in control. You go to your simulated kitchen, which looks exactly like yours, and make yourself some simulated coffee that tastes exactly like your favorite brand. You can open your physical eyes at any moment, and you’ll be back in your physical world, on your physical armchair.

People can choose to temporarily forget they are in a simulation, but not for long (an hour or two?). We don’t want them to forget that they need real food and things.

It’s interesting that even this static place-thing seems a bit complicated to some; that’s normal, I understand, and we should think and do things gradually and democratically. It’s just our Earth, or another kinky version with magic. But imagine how complicated non-static AI agents will be for everyone: they are time-like and fast, and they change not the simulated world but our real and only planet.

Eventually, the armchair can be made of some indestructible material, sheltering you even from meteorites and providing you with food and everything. Then you’ll be able to choose to potentially forget that you’re in a simulation for years. But to do that elegantly, we’ll really need to build the Effective Utopia: the direct democratic simulated multiverse.

This was my three-years-in-the-making thought experiment: what is the best thing we can build if we’re not limited by compute or physics? In a simulated world, we can have magic and such. Everyone can own an Eiffel Tower and be its only owner. So we can have mutually exclusive things, because we can have many worlds.

Meanwhile, our physical Earth will have a perfect ecology, no global warming, and happy animals, and you can always return there. It also lets us give our astronauts the experience of Earth while on a spaceship.

---

The way the Effective Utopia (or Rational Utopia) works would take too much space to describe; I shared some of it in my articles. But I think it’s fun. There will be no need for currency, but maybe there will be a “barter system” there.

Basically, imagine all the worlds, from the worst dystopia to the best utopia (the Effective Utopia is the subset of all possible worlds/giant geometric shapes that people ever decided to visit or were born in). Our current world is probably somewhere right in the middle. Now imagine that some human agent was born in a bit of a dystopia (a below-average world), at some point got the “multiversal powers” to gradually recall and forget the whole multiverse (if he/she wants), and now, feeling nostalgic, deeply wants to relive his/her somewhat dystopian world.

The thing is, if your world is above average, you’ll easily find many people who will democratically choose to relive it with you. But if your world is below average (a bit of a dystopia), you’ll probably have to barter: you’ll have to agree to help another person relive his/her below-average world, so that he/she will help you relive yours.

Our guy can just look at the world he was born in, frozen in a long-exposure-photo-like way (but in 3D), with the ability to focus on a particular moment or see the lives of everyone he cared about all at once by using his multiversal recalling/forgetting powers. But if he really wants to return to this somewhat dystopian world and forget that he has multiversal powers (because with the powers, it’s not a dystopia), then he may have to barter a bit. Or maybe no one will need to barter, because there is probably another way:

There is a way for some brave explorers who want it to go even to the most hellish dystopia and never feel pain for more than an infinitesimally small moment (some adults choose to explore gray zones: no one knows whether they are dystopias or utopias, so they go and check). It really does sound crazy, because I had to cram a whole book into a comment, and we’re discussing the very final and perpetual state of intelligent existence (but of course any human can choose to permanently freeze and become a static shape, to die in the multiverse, if he/she really wants). I don’t want to claim I have it all 100% figured out, and I think people should gradually and democratically decide how it will work, rather than some AGI agents doing it instead of us or forever preventing us from realizing all our dreams in some local maximum of permanent premature rules/unfreedoms.

We’re not ready for AI/AGI agents; we don’t know how to build them safely right now. And I claim that any AI/AGI agent is just a middleman, a shady builder; the real goal is the multiversal direct democracy. AI/AGI/ASI agents will, by definition, make mistakes too, because the only way to never make mistakes is to know all the futures, to be in the multiverse.

I’m a proponent of democratically growing our freedoms. Rules are just “unfreedoms”: something imposed on you. If you know everything, you effectively become everything and have nothing to fear, and no need to impose any rules on others. People with multiversal powers have no “needs”, but they can have “wants”, often related to nostalgia; some like exploring the gray zones, so that others can safely go there. You can, of course, filter the worlds that you or others visited by any criteria, choose a world at random from a subset, or choose to forget that you already visited some world and what it was like.

If you gather a party of willing adults, you can do pretty much whatever you want with each other; it’s a multiversal direct democracy, after all.

If you are a multiverse that is just a giant geometric shape, you create rules just for fun: to recall the nostalgia of your past, when you were not all-knowing and all-powerful.

This stuff can sound religious, but it’s not; it’s just the end state of all freedoms realized. It’s geometry. It’s an attempt to give maximal freedoms to the maximal number of agents, and to find a safe democratic way there; it’s probably a narrow path, like in Dune.

If we know where we want to go, it can help tremendously with AGI/ASI safety.

The final state of the Effective Utopia is the direct democratic multiverse. It is the place where your every wish is granted, and there is no catch, because you see all the consequences of all your wishes, too (if you want). No one will throw you into some spooky place; it’s just a subtle thought in your mind that you can recall everything and/or forget everything if you want. You are free not to follow this thought of yours. People whose recallings/forgettings intersect can vote to spend some time together; they can freely choose the slice of the multiverse (spanning moments or hundreds of years) to live in for a while.

👍 I’m a proponent of non-agentic systems, too. I suspect that, just as we have mass–energy equivalence (E = mc²), there is an intelligence-agency equivalence (any agent is in a way time-like and can be represented in a more space-like fashion, ideally as a completely “frozen” static place, places or tools).

In a nutshell, an LLM is a bunch of words and vectors between them, a static geometric shape; we can probably expose it all in some game and make it fun for people to explore and learn. This would let us easily explore the library itself (the internal structure of the model) instead of only talking to a strict librarian (the AI agent) who prevents us from going inside and works as a gatekeeper.

Thank you, Will! Interesting. Will there be some utility functions; basically, what is karma based on? P.S. Maybe related: I’ve started to model ethics based on the distribution of freedoms to choose futures.

Thank you, Chris! If I understood correctly, you propose training AIs on wise people/sources and keeping them non-agentic, basically like a smarter Google search that answers prompts but doesn’t act itself? I think it’s a good proposal.

Some proposals are intentionally over the top, please steelman them:

Uninhabited islands, Antarctica, half of outer space, and everything underground should remain 100% AI-free (especially AI-agents-free). Countries should sign it into law and force GPU and AI companies to guarantee that this is the case.

“AI Election Day” – at least once a year, we all vote on how we want our AI to be changed. This way, we can check that we can still switch it off and live without it. Just as we have electricity outages, we’d better never become too dependent on AI.

AI agents that love being changed 100% of the time and ship a “CHANGE BUTTON” to everyone. If half of the voters want to change something, the AI is reconfigured. Ideally, it should be connected to a direct democratic platform like pol.is, but with a simpler UI (like x.com?) that promotes consensus rather than polarization.
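The “CHANGE BUTTON” rule can be sketched as a simple tally, assuming “if half of the voters want to change something” means at least half of all eligible voters. The ballot format, proposal names and `proposals_to_apply` are hypothetical; a real platform like pol.is works very differently.

```python
from collections import Counter


def proposals_to_apply(ballots: dict[str, set[str]], total_voters: int) -> list[str]:
    """Return the changes that at least half of all eligible voters asked for.

    ballots maps a voter id to the set of change proposals that voter pressed
    the CHANGE BUTTON for; voters who did nothing simply have no entry.
    """
    counts = Counter(p for chosen in ballots.values() for p in chosen)
    return sorted(p for p, c in counts.items() if 2 * c >= total_voters)


ballots = {
    "alice": {"limit-hours", "new-ui"},
    "bob": {"limit-hours"},
    "carol": set(),
}
print(proposals_to_apply(ballots, total_voters=4))  # ['limit-hours']
```

Counting against all eligible voters rather than only those who voted is a deliberate choice here: a change then cannot pass just because most people stayed silent.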

Reversibility should be the fundamental training goal. Agentic AIs should love being changed and/or reversed to a previous state.

Artificial Static Place Intelligence – instead of creating AI/AGI agents that are like librarians who only give you quotes from books and don’t let you enter the library itself to read whole books (books that the librarian actually stole from all of humanity), why not expose the whole library, the entire multimodal language model, to real people, for example in a computer game? To make this place easier to visit and explore, we could make a digital copy of our planet Earth and expose the contents of the multimodal language model to everyone through the familiar, user-friendly UI of our planet. We should not keep it hidden behind a strict librarian (an AI/AGI agent) that imposes rules on us, letting us read only the little quotes it spits out while it has the whole output of humanity stolen. We can explore The Library without any strict guardian, in the comfort of our simulated planet Earth, on our devices, in VR, and eventually through some wireless brain-computer interface (it would always remain a game that no one is forced to play, unlike the agentic AI world that is being imposed on us more and more right now, and potentially forever).

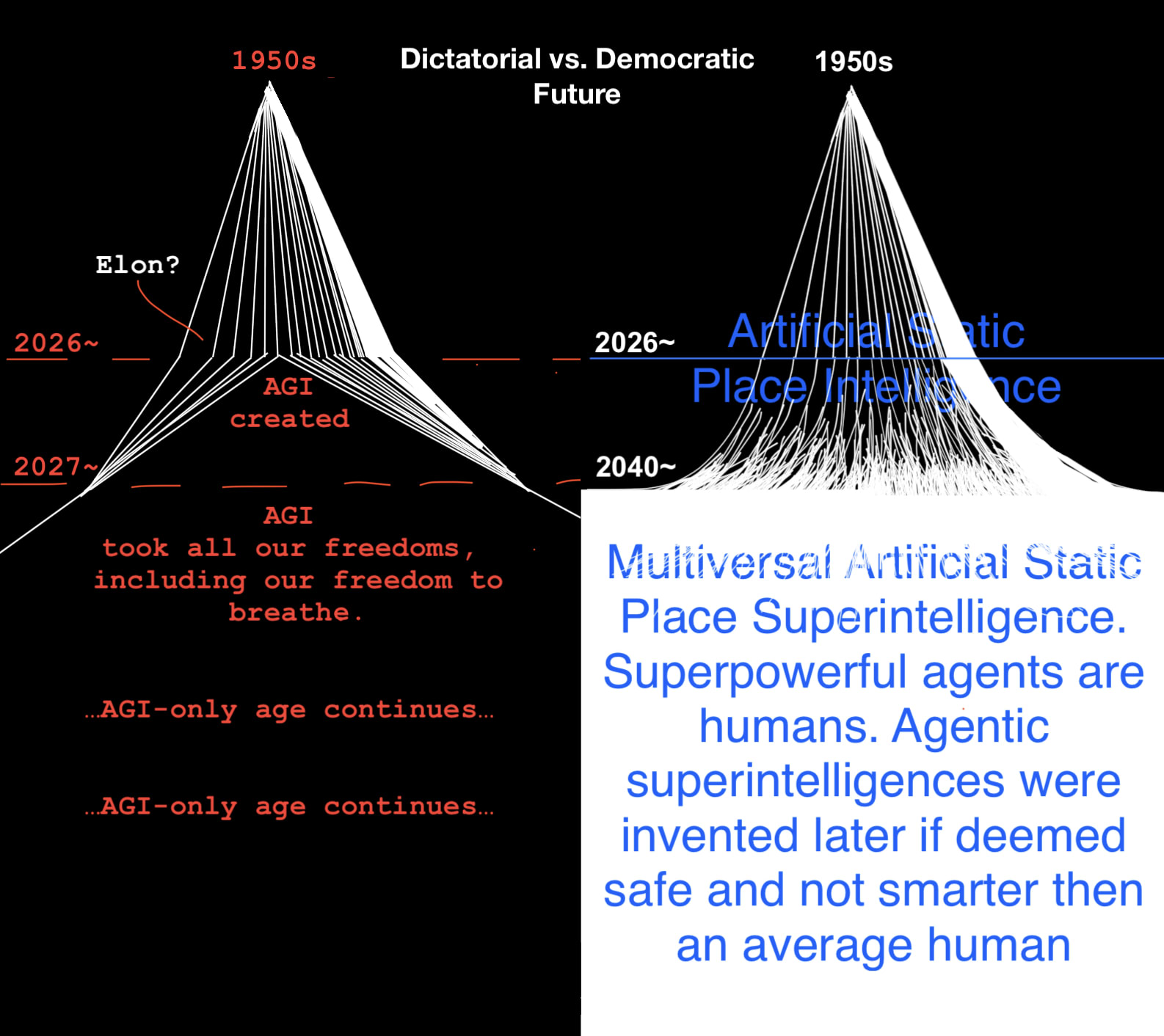

Effective Utopia (Direct Democratic Multiversal Artificial Static Place Superintelligence) – Eventually, we could have many versions of our simulated planet Earth and other places, too. We’ll be the only agents there, though we can allow simple algorithms like those in GTA 3, 4 and 5. There would be a vanilla version (everything is the same as on our physical planet, but injuries can’t kill you; you’ll just open your eyes at your physical home), versions where you can teleport to public places, and versions where you can do magic or explore 4D physics, creating a whole direct democratic simulated multiverse. If we can’t avoid building agentic AIs/AGI, it’s important to ensure they allow us to build the Direct Democratic Multiversal Artificial Static Place Superintelligence. But agentic AIs are very risky middlemen, shady builders, strict librarians; it’s better to build our Effective Utopia ourselves, and have fun doing it, at our own pace and on our own terms. Why do we need a strict, rule-imposing artificial “god” made out of stolen goods (and potentially a privately owned dictator whom we already cannot stop), when we can build all the heavens ourselves?

Agentic AIs should never become smarter than the average human. The number of agentic AIs should never exceed half of the human population, and they shouldn’t work more hours per day than humans.
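The three limits above can be written as a single check. The `capability_score` is a hypothetical measure assumed to be on the same scale as average human capability; the text does not say how intelligence would be measured, so treat this purely as a restatement of the three inequalities.

```python
from dataclasses import dataclass


@dataclass
class AgentFleet:
    count: int               # number of agentic AIs running
    hours_per_day: float     # hours each agent works per day
    capability_score: float  # hypothetical score on the same scale as human capability


def within_limits(fleet: AgentFleet, human_population: int,
                  human_hours_per_day: float, average_human_capability: float) -> bool:
    return (
        2 * fleet.count <= human_population                     # at most half the human population
        and fleet.hours_per_day <= human_hours_per_day          # no longer workdays than humans
        and fleet.capability_score <= average_human_capability  # not smarter than the average human
    )


print(within_limits(AgentFleet(4, 8, 100), 10, 8, 100))   # True
print(within_limits(AgentFleet(4, 24, 100), 10, 8, 100))  # False: works around the clock
```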

Ideally, we want agentic AIs to occupy zero space and time, because that’s the safest way to control them. So we should limit them geographically and temporally, to get as close as possible to this ideal. And we should never make them “faster” than humans, never let them be initiated without human oversight, and never let them become perpetually autonomous. We should only build them if we can mathematically prove they are safe and at least half of humanity has voted to allow them. We cannot have them without a direct democratic constitution of the world; it’s simply unfair to put the whole planet and all our descendants at such risk. And we need simulated multiverse technology to simulate all the futures and make sure the agents can be controlled, because any good agent would be building the direct democratic simulated multiverse for us anyway.

Give people the choice to live in a world without AI agents, and find a way for AI-agent fans to have what they want, too, once it is proved safe. For example, AI-agent fans can have a simulated multiverse on a spaceship bound for Mars, with their own AI agents that are proved safe. Ideally, we’ll first colonize the universe (at least the simulated one) and only then create AGI/agents; it’s less risky. We shouldn’t allow AI agents and the people who create them to permanently change our world without listening to us at all, as is happening right now.

We need to know exactly what our Effective Utopia is, and the narrow path towards it, before we pursue creating digital “gods” that are smarter than us. We can and must simulate futures instead of continuing to fly into the abyss. One freedom too many for the agentic AI and we are busted. Rushing makes thinking shallow. We need international cooperation and the understanding that we are rushing to create a poison that will force us to drink it.

We need a working science and technology of computational ethics that allows us to predict dystopias (AI agents grabbing more and more of our freedoms until we have none, or can never grow them again) and utopias (slowly and direct democratically growing our simulated multiverse towards maximal freedoms for the maximal number of biological agents, until non-biological ones are mathematically proved safe). This way, if we fail, at least we fail together: everyone contributed their best ideas, we simulated all the futures, and we found a narrow path to our Effective Utopia… What if nothing is a 100% guarantee? Then we want to be 100% sure we did everything we could and more, and that if we found out safe AI agents are impossible, we outlawed them, as we outlawed chemical weapons. Right now we’re heading for failure because a few white men greedily thought they could decide for everyone else, and failed.

The sum of AI agents’ freedoms should grow more slowly than the sum of humans’ freedoms; right now it’s the opposite. No AI agent should have more freedoms than an average human; right now it’s the opposite (almost all the creative output of almost all humans, dead and alive, has been stolen and uploaded into their private “librarian brains”, which humans are forbidden from exploring and can only get short quotes from).
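The two rules above can be written as inequalities. This is only a sketch under a strong assumption the post does not make: that each agent’s freedoms can be summarized by a single number $f_i(t)$ at time $t$. Here $A$ is the set of AI agents, $H$ the set of humans, and $N_H = |H|$.

```latex
% Hypothetical formalization: f_i(t) is an assumed scalar "amount of freedom" of agent i at time t.
F_{\mathrm{AI}}(t) = \sum_{a \in A} f_a(t), \qquad
F_{\mathrm{H}}(t) = \sum_{h \in H} f_h(t)

% Rule 1: total AI freedom grows more slowly than total human freedom.
\frac{dF_{\mathrm{AI}}}{dt} < \frac{dF_{\mathrm{H}}}{dt}

% Rule 2: no single AI agent has more freedom than the average human.
\max_{a \in A} f_a(t) \le \frac{F_{\mathrm{H}}(t)}{N_H}
```

Rule 1 constrains only the rates of growth, so it is compatible with both totals growing; rule 2 caps each individual agent at the human mean at every moment.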

The goal should be to grow, direct democratically, towards maximal freedoms for the maximal number of biological agents. Enforcement of anything upon any person or animal will gradually disappear, and people will choose the worlds they live in. You’ll be able to be a billionaire for 100 years, or relive your past. Or forget all that and live on Earth as it is now, before all that AI nonsense. It’s your freedom to choose your future.

Imagine a place that grants any wish, with no catch: it shows you all the outcomes, too.

Interesting, David. I understand: if I had found a post by Melon with flaws in it, I would have been unhappy, too. But I found out that this whole forum topic is about ideas both crazy and not, preliminary ideas, steel-manning each other, and little things that may just work. It’s not about dismissing ideas because of their flaws; of course there will be a lot of them.

We live in unreasonable times, and the AI solution will quite likely look utterly unreasonable at first.

Chatting with a bot is actually a good idea; I’ll pass it on. You never said what the flaw is, though :) You just bashed it and made it personal (you did the thing we agreed not to do here; this is a place for preliminary and crazy ideas, remember?)

Thank you for your open mind and your time.