AI Safety Europe Retreat 2023 Retrospective

This is a short impression of the AI Safety Europe Retreat (AISER) 2023 in Berlin.

Tl;dr: 67 people working on AI safety technical research, AI governance, and AI safety field building came together for three days to learn, connect, and make progress on AI safety.

Format

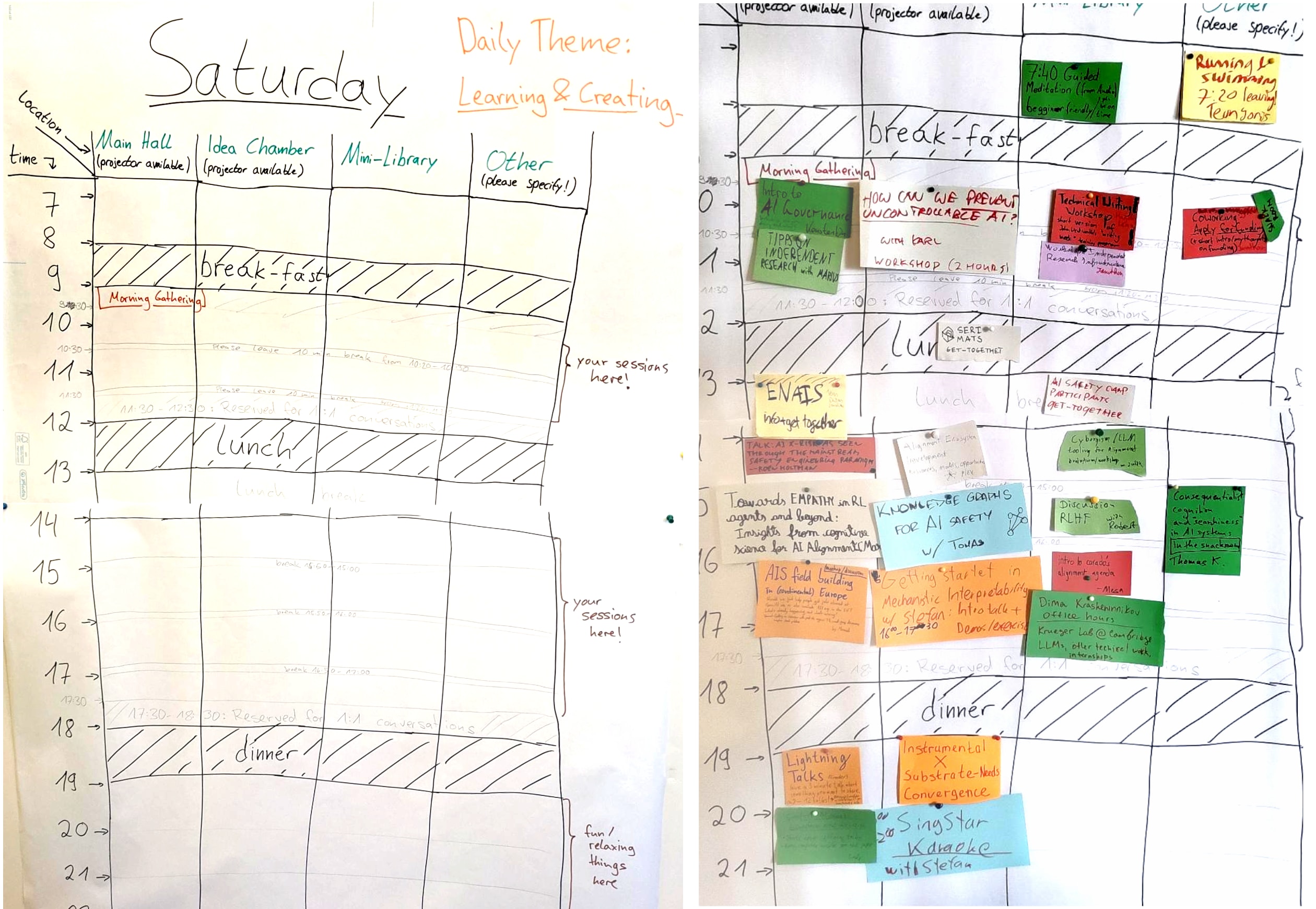

The retreat was an unconference: Participants prepared sessions in advance (presentations, discussions, workshops, …). At the event, we put up an empty schedule, and participants could add in their sessions at their preferred time and location.

Participants

Career Stage

About half of the participants were working, and the other half were students. Everyone was either already working on AI safety, or intending to transition to work on AI safety.

Focus areas

Most participants were focusing on technical research, but there were also many people working on field building and AI governance.

Research Programs

Many participants had previously participated in, or were currently participating in, AI safety research programs (SERI MATS, AI Safety Camp, SPAR, PIBBSS, MLSS, SERI SRF, Refine).

Countries

All but one participant were based in Europe, with most people from Germany, the Netherlands and the UK.

Who was behind the retreat?

The retreat was organized by Carolin Basilowski (now EAGx Berlin team lead) and me, Magdalena Wache (independent technical AI safety researcher and SERI MATS scholar). We got funding from the Long-Term Future Fund.

Takeaways

I got a feeling of “European AI safety community”.

Unlike in AI safety hubs like the Bay Area, continental Europe’s AI safety crowd is scattered across many locations.

Before the retreat I already personally knew many people working on AI safety in Europe, but that didn’t feel as community-like as it does now.

Other people noted a similar feeling of community.

Prioritizing 1:1s was helpful

We reserved a few time slots just for 1:1 conversations, and encouraged people to prioritize 1:1s over content-sessions.

Many people reported in the feedback form that 1:1 conversations were the most valuable part of the retreat for them.

There is a demand for more retreats like this.

Almost everyone would like to attend this kind of retreat at least yearly.

The retreat was relatively low-budget. It was free of charge, and most participants slept in dorm rooms. Next time, I would make the retreat ticketed and pay for a better venue with more single/double rooms.

I would make a future retreat larger.

We closed the applications early because we had limited space, and wanted to limit the cost that comes with rejecting people who are actually a good fit. I think we would have had ~50 more qualified applications if we had left them open for longer.

I would also do targeted outreach to AI safety institutions next time, in order to increase the share of senior people at the retreat.

I think the participant-driven “unconference” format is great for a few reasons:

Lots of content: As the work for preparing the content was distributed among many people, there was more content overall, and there could be multiple sessions in parallel.

Being able to choose the topics that are most interesting to me means I take away a lot more relevant content than if there were only one track of content.

Small groups (usually 10-20 people) made it possible to have very interactive sessions.

There was no “speaker-participant divide”.

I think this makes an important psychological difference: If there are “speakers” who get the label of “experienced person”, other people are a lot more likely to defer to their opinion, and won’t speak up if something seems wrong or confusing.

However, when everyone can contribute to the content, and everyone feels more “on the same level”, it becomes a lot easier to disagree and to ask questions.

I’m very excited about more retreats such as this one happening! If you are interested in organizing one, I am happy to support you—please reach out to me!

If you would like to be notified of further retreats, fill in this 1-minute form.

Update: We did a follow-up survey 4 months after the retreat, asking participants what impact the retreat had on them. Here is a summary of the responses:

36 people responded (~54% of participants)

Personal prioritization

7 people reported that the retreat influenced their prioritization of which area to work in

One person quit their previous job to do a career transition to AI safety

One person decided to keep working on their current topic rather than switch as they had considered before

One person started to work on a research topic they wouldn’t have focused on otherwise

Connections

26 people mentioned they made connections they found useful, e.g. for research discussions.

7 people started research collaborations with people from the retreat

Applying for training programs and funding

5 people applied to SERI MATS as a result of the retreat

4 people got accepted into the online training program

2 people got accepted into the in-person research program

4 people applied for funding (1 accepted, 1 rejected, 2 unclear)

One person became a teaching assistant for the ML safety course

Concrete projects

At least 2 people took the AGISF 201 course

4 people started field-building projects

3 people wrote a post, and one person created a YouTube channel

One is unsure whether their post was a result of the retreat

For one it was their first ever post

Other/Miscellaneous

3 people reported the retreat didn’t change much for them personally, but they think they were able to help others

All three were mentioned by others in the feedback as being particularly helpful

13 people said they got a better picture of the AI safety landscape in general

6 people reported they got a motivation boost from feeling part of a community of like-minded people

3 people mentioned they went to EAG London because of the retreat

One person shifted focus within their area of work

One person got useful experimental results they think they wouldn’t have gotten without the input from the retreat

(Note that the survey consisted of a free text field, and the categories I made up for summarizing the results are pretty subjective. Also note that some people mentioned that they started projects or applied for things, but would have done so even without the retreat. I did not include those in my count.)

I think these results are really helpful to improve the picture of how such a retreat is valuable for people!

The biggest surprise for me is the number of tangible results (research collaborations, getting into SERI MATS, concrete projects, career changes) that came out of the retreat. There were 9 people who said in the feedback form that they wanted to start a project as a result of the retreat (the form they filled out right at the end of the retreat). I would have guessed that maybe 5 would actually follow through once the initial motivation from the retreat had worn off. And I think that would have been a very good result already. Instead, it was more than 9 people who started projects after the retreat!

I still have the intuition that the more vague effects of the retreat (such as feeling part of a community) are really important, potentially more so than the tangible outcomes. And these vague things are probably not measured very well by a survey that asks “What impact did the retreat have on you?”, because it is just easier to remember concrete than vague things.

Overall, these survey results make me even more excited about this kind of AI safety retreat!

This is awesome—thanks for running this and sharing your experience!

This seems cool, thanks for running it!

What was the primary route to value of this retreat in your opinion? I’d be curious to know whether it was mainly about providing community and thus making participants more motivated, or if there were concrete new collaborations or significant individual updates derived from interactions at this retreat.

I think the value comes from:

connections made

participants made 6.7 new connections on average (Question in the feedback form was “How many additional people working on AI safety would you feel comfortable asking for a small professional favor after this retreat? E.g. having a call to discuss research ideas, giving feedback on a draft, or other tasks that take around 30 minutes...”).

18 people mentioned their plans to reach out to people from the retreat for feedback on posts or career plans, for research discussions or for collaborations.

motivation boost

projects/collaborations started

9 people mentioned projects (Writing posts, doing experiments, and field building projects) they would start as a result of the retreat.

participants getting a better map of the space

which people exist, what kinds of things they are good at, what they have worked on, what you can ask them about.

What projects and what research exist.

A general sense of “getting more pieces of the puzzle”.

I think this is hard to measure, but really valuable.

I find it hard to say which of these is most important, and they are also highly entangled with each other.

Question number 2: Did you have any processes in place to keep the epistemic standards/content quality high? If so, what were those? Or were these concerns implemented in the selection process itself (if there was any)?

I’ve heard from at least one AIS expert (researcher & field-builder) that they were concerned about a lot of new low-quality AIS research emerging in the field, creating more “noise”.

Even if that is not a concern, one can risk distracting people from pursuing high-impact research paths if persuasive lower-quality ones are presented (and there is an added social pressure/dynamics at play).*

From your results, it seems like this was not the case, so I’m very curious to hear the secret recipe to potentially adapt a similar structure :) In theory, I’m all for the unconference format (and other democratic initiatives), but I can’t help worrying about the risks related to giving up quality control.

*- I know it can be hard to assess promising vs dead-end directions in AIS.

Epistemic standards: We encouraged people to

notice confusion, ask dumb questions and encourage others when they ask questions (to decrease deferring of the type “This doesn’t make sense to me but the other person seems so confident so it must be true”)

regularly think about solving the whole problem (to keep the big picture in mind and stay on track)

reflect on their experience. We had a reflection time each morning with a sheet to fill out, which had prompts like “What are key confusions I would like to resolve?”, “What is my priority for today?”, etc.

Content Quality: It depends on what you mean by content quality, but I think having a high bar for session content can actually be a bit detrimental. For example one of the sessions I learned most from wasn’t “high quality”—the person wasn’t an expert and also hadn’t prepared a polished presentation. But they knew more than I did and because the group was small I could just ask them lots of questions and learn a lot that way.

We also encouraged people to leave sessions when they don’t find them the best use of their time in order to ensure that people only listen to content they find valuable. We got the feedback that people found that norm really helpful.

Those seem like great practices and I’m happy people actually applied them! (E.g. I’ve experienced people not leaving sessions despite encouragement when there were no role models doing so, because it goes so much against social norms. This was the case with high school and university students; maybe more senior people are better at this :) )

Such an interesting project! Thank you for organising it and sharing your findings.

The part that I was the most surprised about is that half of the participants were AIS researchers already working in the field. Do you know what their main motivation was behind joining the event? (Also happy to hear it directly from AIS researchers who attended the unconference and happen to see this comment!)

The reason I’m surprised is due to the following assumption about such researchers (simplified and exaggerated for the sake of clarity):

They already are connected to other researchers or can easily connect to them and exchange high-quality ideas e.g. in London (since we are talking about researchers in Europe) -- What motivates them to attend an unconference in Germany instead, especially with a lot of junior people (who might have a lot of terrible/obvious ideas)?

I think part of it is that networking is relatively hits-based: Most new connections don’t make much difference, but meeting someone who is a good fit for collaboration or who gives you access to an opportunity can be really valuable. I don’t think most researchers have hit diminishing returns on networking yet. Even if you already know 200 people in the field, getting to know 10 more, one of whom turns out to be an “impactful connection”, can make a big difference. For me it has also often made a big difference to meet someone I already knew again and get to know them better.

I think it’s actually (relatively) hard to connect to people even if you have a decent network. If you want to meet someone new you can get an intro and then have a call with them, but I think there is a lot of friction to that, and it feels like you need a reason to do so. Meeting people at an event has a lot less “social overhead”. If you want to talk to someone you just walk over and see how it goes, and if it’s not productive you walk off again.

I’m not sure if “finding it useless to talk to junior people who have terrible or obvious ideas” is a problem people have? I didn’t find anyone at the retreat “too junior” to be interesting to talk to (even though with different people the conversational dynamic would be more skewed towards me giving vs receiving advice depending on the other’s experience level, but that seems fine to me). And if someone does find it uninteresting to talk to someone with less experience, they can still just end the conversation. Actually we had an explicit norm that it is perfectly okay to end conversations or leave sessions if you don’t think they are the best use of your time at the moment.

Excellent considerations! One of the reasons I assumed more senior people would not want to talk with more junior ones is because you keep hearing that AIS is mentor-constrained. However, your description made me update more towards the potential high value of these low-friction, “small social overhead” networking opportunities. Thanks a lot for the insights!