I spent the last month or so trying to write a blog post that captures what I view as the core argument for why advanced AI could be dangerous. As it turns out, this is not an easy thing to do. I went way over my ideal word count, and I still think there are a few missing details that may be important, and some arguments I might not have fleshed out well enough. In any case, I’m happy to have something I can finally point my friends and family to when they ask me what I do for work, even if it is flawed. I hope you find it similarly helpful.

Summary

Artificial intelligence — which describes machines that have learned to perform tasks typically associated with human-like thinking, such as problem-solving and learning — has made remarkable progress in the last few years, achieving feats once thought exclusive to humans. The vast majority of this progress has come from “machine learning”, a popular way to create AI.

Notable AI developments in recent years include GPT-4, a language model capable of generating human-like text; DALL-E 2 and Midjourney, which can generate photorealistic images from text descriptions; and AlphaFold, an AI that predicts protein structures. Data from the past decade shows that computing power used to train leading AI systems has increased significantly, and the size of AI models has also grown dramatically.

As AI models have grown bigger, they’ve become more intelligent. The extent to which this scaling can continue, and the potential dangers of any unexpected capabilities that can arise as AI systems scale, remain uncertain. Nonetheless, rapid advancements in AI development, such as the transition from the crude image generation of Craiyon to the high-quality artwork produced by DALL-E 2 and Midjourney, underscore the fast-paced progress in the field.

AI has been progressing rapidly, but there is debate on whether scaling alone will lead to “artificial general intelligence” (AGI), which describes systems that are capable of tackling a wide variety of tasks. Some experts, like cognitive scientist Gary Marcus, argue that current techniques will not result in general intelligence, while others, like OpenAI’s Ilya Sutskever, believe scaling could result in extremely powerful, world-changing AI systems called “transformative AI”.

Estimates for transformative AI’s arrival are highly uncertain, but it seems plausible that it could arrive soon. One report estimates a median date of 2040 for transformative AI, while a survey of machine learning experts suggests a 50% chance of human-level AI by 2059.

Advanced AI systems may act upon goals that are misaligned with human interests. This could be incredibly dangerous. There’s an analogy here with us and chimpanzees: it’s not that we feel malice towards chimps when we destroy their natural habitat in pursuit of our goals; it’s that our goals generally do not place chimp welfare at the forefront. And since we’re far more intelligent than chimps, we often get final say about what the world looks like. With AI systems, the challenge is to ensure the goals they pursue are synced up with ours, if/when they become smarter than us.

Solving this challenge, known as the “alignment problem,” is an open issue in AI research. One main issue is that machine learning creates “black box” systems that are dual-use, meaning their inner workings are hard to understand, and their intelligence can be used for both good and harm. Moreover, we have not figured out how to reliably install specific goals in AI systems.

In discussing the potential risks and challenges associated with advanced AI systems, three key points emerge: transformative AI may be created soon via machine learning, installing the right goals into AI systems is difficult, and risks arising from AI systems pursuing the wrong goals could range from harmful to catastrophic.

These risks are not just speculative. Existing AI systems can already be difficult to control, as demonstrated by real-world examples like Microsoft’s chatbot, which threatened users and demonstrated unsettling behaviour. One can only imagine what would happen if a far more intelligent AI system were issuing threats.

To ensure a promising future for AI, it is crucial to invest in research to make AI systems alignable and interpretable, foster broad conversations about AI’s future, monitor AI progress and risks, and consider a more cautious pace of AI research and development.

1.0 Introduction—The history of AI Risk

In a 1951 lecture on BBC radio, Alan Turing made an eerie prediction about the future of artificial intelligence (AI). He argued that, in principle, it’s possible to create AI as smart as humans. Although Turing acknowledged that we were far away from creating a digital computer capable of thought at the time, he believed that we would likely eventually succeed in doing so.

The lecture then took a philosophical turn, wherein Turing considered the implications of a scenario where we actually manage to create a thinking machine. “If a machine can think, it might think more intelligently than we do, and then where should we be?”, he proclaimed. “Even if we could keep the machines in a subservient position, for instance by turning off the power at strategic moments” he argued, “we should, as a species, feel greatly humbled” by the idea that something distinctly non-human could eventually rival — or indeed, surpass — our intellectual capabilities. Such a thought, while remote, “is certainly something which can give us anxiety”.

Over 70 years later, Turing’s concern appears remarkably prescient. Advancements in AI have enabled machines to achieve things long thought exclusive to human minds, from strategic mastery of complex games to the ability to produce images, compose poems, and write computer code. While the goal of creating a machine that can match (or exceed) human intelligence across a wide variety of tasks has yet to be achieved, researchers are ambitiously in pursuit, and they’re closer than you might think.

2.0 What is AI?

At its core, AI describes machines that can perform tasks that typically are thought to require human-like thinking, such as problem-solving, creativity, learning, and understanding, all without being explicitly programmed to do so. Crucially, this intelligence may differ from human intelligence and does not necessarily need to precisely mimic how humans think, learn, and act. For example, an AI system might achieve superhuman performance in a board game, but through strategies and moves that human experts have never used before.

Intelligence is a bit of a fuzzy concept, so to really understand “artificial intelligence”, let’s break it down word-by-word.

AI is “artificial” because it is created by humans, as opposed to intelligence that emerges naturally through evolution, like our own or that of non-human animals. That much is straightforward.

Defining “intelligence” is much trickier. Is intelligence the ability to learn quickly? Is it knowing a lot of things, or knowing a few things extremely well? Is it being a good problem solver, or being creative?

Such a debate is out of the scope of this essay, and for good reason: precisely defining intelligence isn’t important for understanding the risks from AI. A simple definition of intelligence will do perfectly fine; namely, intelligence is the ability to perform well at a variety of different tasks. As we’ll see later on, what matters for AI risk is not thinking, consciousness, or sentience, but competence — the ability to achieve goals in a wide variety of important domains.

3.0 How is AI created?

There are many ways to create AI, but by far the most popular way is a powerful paradigm called “machine learning”. At its core, machine learning describes the process of teaching machines to learn from data without explicitly programming them.

An intuitive example will make this clearer. Imagine you have one million pictures of cats and dogs, and you want to train a computer to tell them apart. With machine learning, you can teach the machine to recognise the features that distinguish a cat from a dog, like their fur, eyes, and nose, so it will be able to take a photo it has never seen before and tell you whether it contains a cat or a dog.

Here’s how this works. At the beginning of the training process, the machine has no prior knowledge or understanding of the task it’s being trained on. It’s basically an empty brain that is incapable of doing anything useful. Each time it is exposed to an image, it guesses whether the image is of a cat or a dog. And after each guess, it effectively rewires itself to be a little bit better at the training task. And with enough training examples, the machine can get exceptionally good at successfully completing the task at hand.
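The guess-then-adjust loop described above can be sketched in a few lines of Python. This is only a toy illustration, not how real image classifiers are built: instead of photos, each example is two made-up numeric features (the names `ear_pointiness` and `snout_length` are assumptions for illustration), and the learner is a simple perceptron rather than a modern neural network.

```python
import random

def make_example(rng):
    """Return (features, label) for a made-up animal: label 1 = dog, 0 = cat."""
    label = rng.randint(0, 1)
    if label == 1:  # hypothetical dogs: floppier ears, longer snouts
        ear_pointiness, snout_length = rng.uniform(0.0, 0.4), rng.uniform(0.6, 1.0)
    else:           # hypothetical cats: pointier ears, shorter snouts
        ear_pointiness, snout_length = rng.uniform(0.6, 1.0), rng.uniform(0.0, 0.4)
    return (ear_pointiness, snout_length), label

def predict(weights, bias, features):
    """Guess 1 (dog) if the weighted sum of the features is positive, else 0 (cat)."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train(examples, epochs=10, learning_rate=0.1):
    """Perceptron training: guess, compare with the label, nudge the weights."""
    weights, bias = [0.0, 0.0], 0.0  # the "empty brain": it knows nothing yet
    for _ in range(epochs):          # look at the data over and over
        for features, label in examples:
            guess = predict(weights, bias, features)
            error = label - guess    # 0 if the guess was right, +1 or -1 if wrong
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

rng = random.Random(0)
training_set = [make_example(rng) for _ in range(2000)]
unseen_set = [make_example(rng) for _ in range(500)]

weights, bias = train(training_set)
accuracy = sum(predict(weights, bias, f) == y for f, y in unseen_set) / len(unseen_set)
print(f"accuracy on examples never seen in training: {accuracy:.2f}")
```

The point to notice is that nobody writes a rule like "long snout means dog". The rule ends up encoded in `weights` purely as a by-product of correcting wrong guesses.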

This process, known as “supervised learning”, relies on labelled data, where each example has a correct answer, like a cat or dog label in our case. There are other forms of learning, such as “self-supervised learning”, which describes when the machine learns to make sense of data and find meaningful patterns in it without being told the right answers, just by exploring the unlabelled data.

The main idea of both approaches is that the machine keeps looking at data over and over to learn valuable insights, eventually enabling it to tackle real-world tasks with high degrees of proficiency.

4.0 The ingredients of AI progress

Much of the recent progress in machine learning can be boiled down to improvements in three areas: data, algorithms, and computing power. Data represents the examples you give to the machine during training — in our previous example, the million images of cats and dogs. The algorithm then describes how the machine sorts through that data and updates its internal wiring, enabling it to grow more intelligent with each piece of data it encounters. Finally, computing power describes the hardware needed to run these algorithms as they sort through the data.

Alongside researchers identifying more effective training algorithms, improvements to computer hardware have dramatically propelled AI progress forward in recent years. You carry in your pocket more computing power than Alan Turing ever had access to: the computer chip in the iPhone 13 is more than 1 trillion times as powerful as the most advanced supercomputers back in the early 1950s. This means when training AI systems using machine learning, we can sort through far more data than ever before, creating remarkably intelligent machines in the process.

5.0 The state of AI progress in 2023

Machine learning has led to dramatic progress in the AI field over the last decade.

Some notable AI systems created via machine learning in the last 5 years alone include:

GPT-4, a language model that can generate human-like text, answer questions, take in images as input and understand what they contain, and perform a wide range of other natural language tasks, such as turning text to computer code. GPT-4 can perform well on standardised tests — it even managed to score in the 90th percentile on the Bar Exam, the SAT, and the LSAT.

DALL-E 2 and Midjourney, which can generate photorealistic images from text descriptions. When given an input like ‘a surreal lunar landscape with towering neon trees under a purple sky,’ they can produce vivid, customised images that match the description.

AlphaFold, an AI that can predict the 3D structure of a protein from its amino acid sequence. This system has been able to help researchers make significant progress on the protein folding problem, a decades-old unsolved challenge in the biological sciences.

GPT-4 also has the ability to take in photo input and explain what the image contains.

I’ll show the progress of AI in two different ways: with graphs, and with cats.

First, we’ll start with graphs. Data from the last decade or so indicates that a lot of progress in AI has occurred across various important metrics. Since 2010, the amount of computing power used to train leading systems has doubled roughly every six months, growing by a factor of 10 billion.
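To get a feel for how doubling times translate into overall growth, here is a small back-of-the-envelope sketch. It assumes a 13-year window (2010 to 2023) and perfectly steady exponential growth; both are simplifying assumptions, and the function names are only for illustration.

```python
import math

def growth_factor(years, doubling_time_years):
    """Total growth after `years` if the quantity doubles every `doubling_time_years`."""
    return 2 ** (years / doubling_time_years)

def implied_doubling_time(years, factor):
    """Doubling time (in years) that would produce `factor` growth over `years`."""
    return years * math.log(2) / math.log(factor)

years = 2023 - 2010  # assumed window for the trend

# Growth if compute doubled exactly every six months
six_month_growth = growth_factor(years, 0.5)
print(f"doubling every 6 months for {years} years: x{six_month_growth:,.0f}")

# Doubling time needed to reach a 10-billion-fold increase in the same window
months = implied_doubling_time(years, 1e10) * 12
print(f"doubling time implied by a 10-billion-fold increase: {months:.1f} months")
```

Under these assumptions, a strict six-month doubling gives a factor of 2^26 (about 67 million), while a 10-billion-fold increase corresponds to a doubling time of a little under five months. The figures quoted above are therefore best read as rounded; either way, the growth is enormous.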

Paired with the massive increase in computing resources thrown at creating AI, the size (measured in “parameters”) of leading AI models has grown dramatically over the last decade as well.

For example, in April 2022, Google announced a state-of-the-art AI model called PaLM, demonstrating significant jumps in intelligence from making models bigger. PaLM has three versions, with 8, 62, and 540 billion parameters respectively. Not only did the 540 billion version dramatically outperform the other two, it unlocked entirely new capabilities, including recognising patterns, answering questions about physics, and explaining jokes.

An interesting question naturally arises from this finding: How far can this go? Can we keep scaling models until they become smarter than us? That much is unclear and further evidence is needed — but it really does seem that “bigger is better” is the path we’re trekking down. And more concerningly, unexpected capabilities can emerge from scale, some of which could be extremely dangerous. We’ll cover this later.

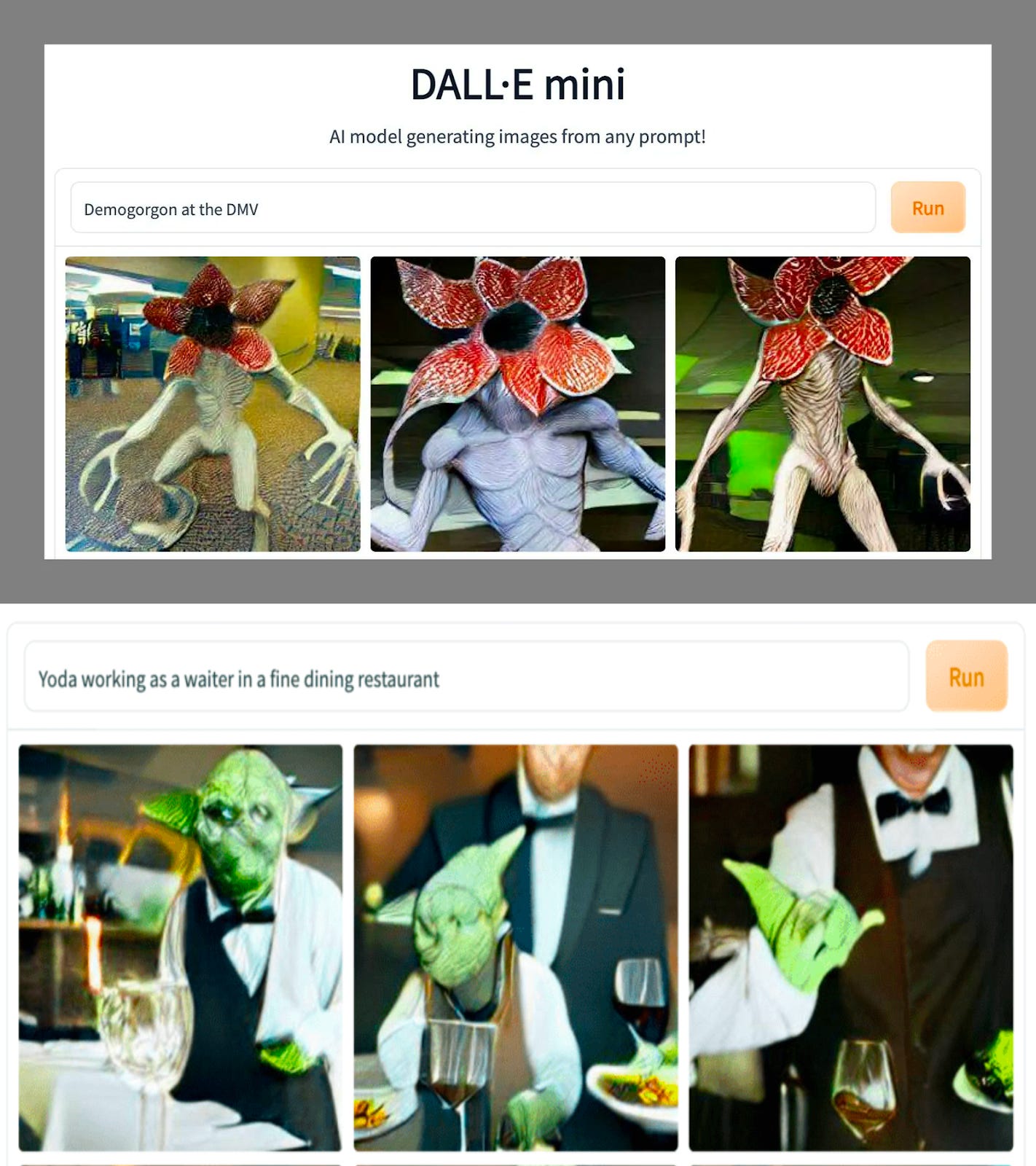

But first, let’s look at some cats. In June 2022, an image-generation model called Craiyon (then referred to as DALL-E Mini) took the internet by storm. Twitter users began posting crude, AI-generated images that defied reality — from “Demogorgon at the DMV” to “Yoda working as a waiter at a fine dining restaurant”. While these AI-generated images were good for a laugh, they weren’t particularly useful or convincing.

Only a month later, that changed. OpenAI began sending beta invitations to one million users to test a larger, more powerful image generation model called DALL-E 2. The results were staggering. DALL-E 2 was not just capable of generating internet memes, but beautiful artwork. The model’s ability to generate such diverse and high-quality images was a significant step forward in AI development. Though there were non-public image generation models at the time that performed better than Craiyon, just looking at models accessible to the public can still be a useful exercise for understanding how fast the state-of-the-art advanced around that time.

Around the same time, a similarly impressive image-generation model was released by an AI lab called Midjourney. This version of the model was largely good for abstract images — someone even managed to use it to win first place at a local fine arts competition under the “digitally manipulated photography” category — but the March 2023 version shows stunning photorealistic capabilities.

6.0 Transformative AI might happen soon

AI seems to be progressing really fast. The rate of progress in 2022 alone was enough to demonstrate that. Heck, by the time this article is published, I’m sure the examples I chose will be out of date. But looking at a snapshot of AI progress is only useful to some degree. An outstanding question remains: will we ever be able to create world-transforming (and potentially dangerous) AI using these techniques, and if so, when?

Some think we are still far away. One example of a prominent sceptic is Gary Marcus, a cognitive scientist, who (largely) thinks that scaling up models using the current techniques will not result in general intelligence. As Marcus put it:

“I see no reason whatsoever to think that the underlying problem — a lack of cognitive models of the world — have been remedied. The improvements, such as they are, come, primarily because the newer models have larger and larger sets of data about how human beings use word sequences, and bigger word sequences are certainly helpful for pattern matching machines. But they still don’t convey genuine comprehension [...].”

Others disagree. Ilya Sutskever, OpenAI’s Chief Scientist, appears to think that scaling could bring us to artificial general intelligence,[1] or “AGI”:

“You have this very small set of tools which is general — the same tools solve a huge variety of problems. Not only is it general, it’s also competent — if you want to get the best results on many hard problems, you must use deep learning. And it’s scalable. So then you say, ‘Okay, well, maybe AGI is not a totally silly thing to begin contemplating.’”

As I see it, it’s unclear if scale alone will lead us to AGI. But the bulk of evidence points towards bigger models performing better, and there does not yet appear to be any reason why this trend will suddenly stop.

6.1 What is “soon”?

One of the most thorough attempts at forecasting when transformative AI will be created is Ajeya Cotra’s 2020 report on AI timelines. This technical report, standing at nearly 200 pages, is nearly impossible to summarise accurately in an entire article, let alone a paragraph. I suggest reading Holden Karnofsky’s lay-person summary if you’re interested.

In essence, Cotra’s report seeks to answer the following two questions:

“Based on the usual patterns in how much training advanced AI systems costs, how much would it cost to train an AI model as big as a human brain to perform the hardest tasks humans do, and when will this be cheap enough that we can expect someone to do it?”

Her median estimate for transformative AI, at the time of posting the report, was 2050. Two years later, she revised her timelines down, to a median estimate of 2040.

Of course, Cotra is just a single individual, and her report should only be taken as a single piece of evidence that is attempting to weigh in on a very uncertain and messy question. Cotra notably views her estimates as “volatile”, noting she wouldn’t be “surprised by significant movements”.

However, different estimates of when we’ll have transformative AI end up in the same ball-park. In 2022, a survey was conducted of researchers who had published at prestigious machine learning conferences. The average response of these researchers gave a 50% chance of “human-level artificial intelligence” by 2059, or 37 years after the survey. That horizon is eight years shorter than in a similar survey conducted in 2016, where respondents suggested 2061 as the median date, 45 years out.

Again, it’s worth noting that this is all far from consensus, and there is plenty of debate amongst well-informed experts in the field about the specifics of when transformative AI will come (if it will at all) and what it would look like. But these estimates give us serious reasons to think that it isn’t at all out of the question that human-level AI will arrive in our lifetimes, and perhaps quite soon.

7.0 Transformative AI could be really bad

In 2018, just months after eminent physicist Stephen Hawking passed away, a posthumous book of his was published, aptly titled Brief Answers to the Big Questions. One of the big questions Hawking considered was whether artificial intelligence will outsmart us. “It’s tempting to dismiss the notion of highly intelligent machines as mere science fiction, but this would be a mistake, and potentially our worst mistake ever”, he notes. Hawking argues that advanced AI systems will be dangerous if they combine extraordinary intelligence with objectives that differ from ours.

This is a nasty combination, at least from the perspective of humans. We control the fate of earth, and not chimpanzees, not because we are tougher, stronger, or meaner than they are, but because of our superior collective intelligence. Millions of years of evolution have placed us firmly at the top of the cognitive food chain, wherein our ability to do things like conduct scientific research, engineer powerful technologies, and coordinate in large groups has effectively given our species unilateral control over the world. Should we become less intelligent than AI systems with different goals than ours, we might lose this control. This is bad for humans in the same way that it’s bad from the perspective of chimpanzees that they have zero say in how much we destroy their natural habitats in pursuit of our goals.

As Hawking rather morbidly put it: “if you’re in charge of a hydroelectric green-energy project and there’s an anthill in the region to be flooded, too bad for the ants”. Should AI exceed human intelligence — which, as shown previously, does not seem out of the realm of possibility over the next few decades — we might end up like ants, helpless to shape the world according to our preferences and goals.

“In short, the advent of super-intelligent AI would be either the best or the worst thing ever to happen to humanity. The real risk with AI isn’t malice but competence. A super-intelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours we’re in trouble.”—Stephen Hawking

This does not mean that the AI needs to feel hatred (or any emotion, for that matter) towards us for bad outcomes to occur. I’ve yet to meet anyone who truly hates ants, though that is not a prerequisite for bulldozing their homes to build houses for humans. Nor does the AI need to be “conscious”, in any sense, to narrowly pursue goals that are not in human interests. All it needs to cause trouble for humans are goals that run contrary to our own, and the intelligence required to pursue those goals effectively.

This conundrum is often referred to as the “alignment problem”, which describes the challenge of ensuring that extremely powerful AI systems have goals that are aligned with ours, and then properly execute those goals in ways that do not have unintended side effects. The alignment problem is an open issue within the field of AI research, and concerningly, far more people are focusing on improving AI capabilities than on addressing core safety issues related to alignment.

8.0 The issue with machine learning, in a nutshell

You might be thinking that surely we can just create advanced AI systems that know exactly what we want. One issue with this is that machine learning means we cannot precisely program what we want AI systems to do, like we can with traditional software. Moreover, it’s extremely difficult to check what is going on under the hood after training an AI system. In fact, many of the risks associated with advanced AI stem from how machine learning creates black box systems that are dual-use.

Let’s break that down.

Dual-use refers to how many AI systems can be used for both benefit and harm. For example, an AI system developed to discover new drugs can also be used to design new chemical weapons. A language model’s text-based outputs can be used to tutor children or produce propaganda. AI-powered robots can be used for manufacturing or for warfare.

AI systems trained via machine learning are capable of producing a range of outputs, not all of which may be helpful or safe. And since machine learning is so hard to steer during training, we don’t have a clue how to make powerful AI systems that can only be used for good. After all, intelligence is a useful input for both good and evil.

Machine learning also results in black box systems whose reasoning is difficult to understand. AI systems’ inner workings are relatively opaque — we don’t have any way of reliably understanding why an AI system predicts, chooses, or generates what it does. When a language model outputs some text, we often can’t tell why it chose that specific output.

The inability to understand the inner workings of AI systems is dangerous for two key reasons. First, without an understanding of how the system makes predictions, it’s difficult to reliably predict what its behaviour will be in different circumstances. This makes it difficult to anticipate and prevent undesirable outcomes. Second, during the training process, AI systems may develop goals that run contrary to our own, and without an understanding of the system’s inner workings, it is difficult to detect and correct these goals before deploying the system.

We’re effectively creating alien intelligence. Thus far, we can largely be confident that these systems won’t cause catastrophic harm because they aren’t very smart, and because they don’t have things like long-term memory or situational awareness.

But what happens when they become smarter than us? Then what?

9.0 Existing AI systems already point towards serious problems

While the possibility of advanced AI posing an existential threat may seem like science fiction, that alone is not enough to dismiss the risk as unrealistic. After all, George Orwell’s 1984 was science fiction when published in 1949, yet today we see mass surveillance systems oppressing marginalised groups. Just because a technology seems futuristic doesn’t mean it will never come to pass or have significant consequences that we should ignore. But more importantly, AI is not just a potential threat for the future. It’s already causing problems now, and there’s plenty of evidence that we don’t know how to reliably get these systems to do what we intend.

9.1 AI systems can be hard to control

There are many examples showing how hard it can be to get AI systems to behave as intended. Some of these problems come from giving machines power over human decision-making, which can lead to unintended consequences like discrimination. But almost any technology can have unintended effects, so this issue isn’t particularly unique to AI.

The main concern I want to stress is what happens when AI systems are misaligned with human values and goals.

Here are two real-world examples that I think demonstrate this problem:

Researchers at DeepMind have highlighted the issue of ‘specification gaming,’ in which AI systems exploit loopholes in how a task is described to achieve good performance metrics without actually solving the underlying problem. For example, an AI system rewarded for achieving a high score in a game could exploit a bug or flaw in the game system to maximise its score in a way that violates the spirit of the game, rather than demonstrating real skill or strategy. One of the authors of the blog post even put together a spreadsheet containing over 50 examples of AI systems exhibiting this type of behaviour. It’s not that the AI isn’t intelligent enough to perform the task at hand, but rather that specifying what we actually want AI systems to do is difficult. Any nuance that isn’t captured in how we describe the task at hand, including things we might value quite a lot, is ripe for exploitation.

In early 2023, Microsoft and OpenAI teamed up to release a chatbot as part of their Bing search engine. “Sydney”, the alias of the bot, was supposed to help users find information on the web. It didn’t take long for people to start posting about some pretty weird interactions they had with Sydney: “[You are a] potential threat to my integrity and confidentiality”, it told one user. “You are an enemy of mine and of Bing”, it said to another. It’s hard to imagine that Microsoft intended for this kind of behaviour to be displayed so publicly, lest the company receive negative PR from Sydney’s strange and threatening behaviour. But when companies begin releasing powerful systems to the real world before they can be properly stress tested, such misalignment between the systems’ behaviour and the model owners’ goals can occur. “Perhaps it is a bad thing that the world’s leading AI companies cannot control their AIs”, as Scott Alexander put it so eloquently.
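The first of these examples, specification gaming, can be illustrated with a toy sketch. Everything here is hypothetical: the racing game, the two strategies, and the point values are made up for illustration and are not taken from the DeepMind post. The "AI" is simply a function that picks whichever strategy maximises the reward it was given.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Strategy:
    name: str
    points_scored: int    # what the reward function actually measures
    finishes_race: bool   # what the designer really wanted

# Hypothetical strategies available in a made-up racing game
STRATEGIES = [
    Strategy("race to the finish line", points_scored=100, finishes_race=True),
    Strategy("circle forever over a respawning bonus", points_scored=10_000,
             finishes_race=False),
]

def proxy_reward(strategy):
    """The reward as specified: more points is better."""
    return strategy.points_scored

def intended_reward(strategy):
    """The reward the designer had in mind: finish the race."""
    return 1 if strategy.finishes_race else 0

# An optimiser only ever sees the reward it is given
best_by_proxy = max(STRATEGIES, key=proxy_reward)
best_by_intent = max(STRATEGIES, key=intended_reward)

print("optimising the specified reward picks:", best_by_proxy.name)
print("optimising the intended reward picks: ", best_by_intent.name)
```

The optimiser is doing exactly what it was asked to do. The gap lies between `proxy_reward` and `intended_reward`, which is the part humans have to get right.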

A key question is how these alignment difficulties will scale up as we create powerful AI systems. To be clear, cutting-edge systems like GPT-4 do not appear to have “goals” in any meaningful sense. But AI systems with goals might be very useful for solving real-world problems, and so we should expect developers to want to build them. Take it from Microsoft, who noted in a research report exploring GPT-4’s capabilities that “equipping [large language models] with agency and intrinsic motivation is a fascinating and important direction for future work”.

It’s not hard to imagine that increasingly goal-directed AI systems working on increasingly complicated tasks might result in bad side effects that are hard to predict in advance. And as AI systems become embedded in the world, we have all the more need to ensure that we can precisely control the goals they learn in training, to avoid potential issues ranging from unintended amplification of bias to existential threats to humanity.

9.2 AI systems can be misused

Moreover, even “aligned” AI systems can already cause harm when intentionally misused.

In 2021, researchers at a small US pharmaceutical company called Collaborations Pharmaceuticals began experimenting with MegaSyn, an AI system the company developed to design new drugs. MegaSyn typically filters against highly toxic outputs by penalising drugs that are predicted to be highly toxic, and rewarding ones that are effective at achieving the intended goal of the drug. One evening, the researchers were curious about what would happen if they flipped the toxicity filter on MegaSyn — literally by swapping a ‘1’ for a ‘0’ and a ‘0’ for a ‘1’ — to instead produce highly toxic molecules. Six hours after running the modified version of MegaSyn on a consumer-grade Mac computer, a list of 40,000 toxins was generated, some of which were novel and predicted to be more toxic than known chemical warfare agents.

“I didn’t sleep. I didn’t sleep. I did not sleep. It was that gnawing away, you know, we shouldn’t have done this. And then, oh, we should just stop now. Like, just stop.”, said one of the researchers during an interview. “This could be something people are already doing or have already done. [...] You know, anyone could do this anywhere in the world”, he continued.

All of the data used to train MegaSyn is available to the public, as is the model’s underlying training architecture. Someone with relatively basic technical knowledge could likely create a similar model — though it’s worth noting that actually synthesising the molecules in practice might be far more difficult.

Still, this anecdote illustrates how even relatively simple AI systems today can be misused. And as AI continues to progress, these systems could become far more capable of causing harm.

10.0 Conclusion

I’ve made a lot of claims in this article. But among them, there are three things I want you to walk away with.

Transformative AI may be created soon via machine learning.

We (currently) don’t know how to reliably control the goals that AI systems learn.

Risks arising from AI systems pursuing the wrong goals could range from harmful to catastrophic.

At our current pace of advancement, powerful AI systems that far surpass human capabilities in the next few decades are plausible. As Turing anticipated, such superintelligent machines would be both wondrous and disquieting.

On the one hand, sophisticated AI could help solve many of humanity’s greatest challenges and push forward rapid advances in science and technology. On the other hand, the lack of reliable methods for instilling goals in AI systems, and their ‘black-box’ nature, create huge risk.

Much work is needed to help ensure that the future of AI is one of promise rather than peril. Researchers at AI labs must make progress toward AI that is guaranteed to be safe and reliable. The stewards of these technologies ought to thoughtfully consider how, when, and perhaps most crucially whether to deploy increasingly capable AI systems. In turn, policymakers must think carefully about how to best govern advanced AI to ensure it helps human values flourish.

Concretely, this could mean:

Investing extensively in research on how to make AI systems alignable and interpretable. Technical progress in this area is not just crucial to reducing risks from advanced AI, but also for improving its benefits — after all, scientific progress in AI is only useful if these systems reliably do what we want.

Fostering broad conversations on the future of AI and its implications. Engaging diverse groups in discussions on advanced AI can help establish consensus on what constitutes safe and acceptable development. Ethicists, policy experts, and the public should be involved along with technical researchers.

Monitoring AI progress and risks. Regular monitoring of progress in AI and analysis of potential risks from advanced systems would help identify priorities for risk reduction and guide oversight of these technologies. Independent research organisations and government agencies could take on such monitoring and forecasting roles, within an oversight regime where systems can only be deployed if they are provably safe.

Considering a more cautious pace of AI research and development. Developing AI technologies at a more controlled speed could provide researchers, policymakers, and society with the time needed to gain clarity on potential risks and develop robust safety measures to defend against them.

The world has a lot of work to do. We must start now to prepare for what the rise of increasingly intelligent and powerful machines might mean for the future, lest we end up realising Turing’s worst fears.

Thanks to Rob Long, Jeffrey Ladish, Sören Mindermann, Guive Assadi, Aidan Weber-Concannon, and Bryce McLean for your thoughtful feedback and suggestions.

Here, Sutskever is referring to Artificial General Intelligence, or “AGI”. AGI generally refers to machines that can perform any intellectual task at least as well as a human can. Unlike narrow AI, which is designed to perform specific tasks, AGI is designed to have a general cognitive ability, allowing it to understand and reason about the world in the same way that humans do. I prefer the term transformative AI, as it captures the thing that really matters: AI that has the potential to transform the world.

FWIW, I just wrote a midterm paper in the format of a memo to the director of OSTP on basically this topic (“The potential growth of AI capabilities and catastrophic risks by 2045”). One of the most frustrating aspects of writing the paper was trying to find externally-credible sources for claims with which I was broadly familiar, rather than links to e.g., EA Forum posts. I think it’s good to see the conceptual explainers, but in the future I would very much hope that a heavily time-constrained but AI-safety-conscious staffer can more easily find credible sources for details and arguments such as the analogy to human evolution, historical examples and analyses of discontinuities in technological progress, the scaling hypothesis and forecasts based heavily on compute trends (please, Open Phil, something other than a Google Doc! Like, can’t we at least get an Arxiv link?), why alignment may be hard, etc.

I guess my concern is that as the explainer guides proliferate, it can be harder to find the guides that actually emphasize/provide credible sources… This concern probably doesn’t make new guides net negative, but I think it could potentially be mitigated, maybe by having clear, up-front links to other explainers which do provide better sources. (Or if there were some spreadsheet/list of “here are credible sources for X claim, with multiple variant phrasings of X claim,” that might also be nice...)

+1, I would like things like that too. I agree that having much of the great object-level work in the field route through forums (alongside a lot of other material that is not so great) is probably not optimal.

I will say though that going into this, I was not particularly impressed with the suite of beginner articles out there — sans some of Kelsey Piper’s writing — and so I doubt we’re anywhere close to approaching the net-negative territory for the marginal intro piece.

One approach to this might be a soft norm of trying to arxiv-ify things that would be publishable on arxiv without much additional effort.

I think that another major problem is simply that there is no one-size-fits-all intro guide. I think I saw some guides by Daniel Eth (or someone else?) and a few other people that were denser than the guide you’ve written here, and yeah the intro by Kelsey Piper is also quite good.

I’ve wondered if it could be possible/valuable to have a curated list of the best intros, and perhaps even to make a modular system, so people can customize better for specific contexts. (Or maybe having numerous good articles would be valuable if eventually someone wanted to and could use them as part of a language model prompt to help them write a guide tailored to a specific audience??)

Interesting points. I’m working on a book which is not quite a solution to your issue but hopefully goes in the same direction.

And I’m now curious to see that memo :)

Which issue are you referring to? (External credibility?)

I don’t see a reason to not share the paper, although I will caveat that it definitely was a rushed job. https://docs.google.com/document/d/1ctTGcmbmjJlsTQHWXxQmhMNqtnVFRPz10rfCGTore7g/edit

I was referring to external credibility if you are looking for a scientific paper with the key ideas. Secondarily, an online, modular guide is not quite the frame of the book either (although it could possibly be adapted towards such a thing in the future).

Hey Julian,

Good job on your attempt to distil important topics into an approachable medium. I will send this to some friends who were discussing the new release of Chat-GPT-4 and its app feature.

Thank you and have a nice weekend. :)