Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

---

Cybersecurity Challenges in AI Safety

Meta accidentally leaks a language model to the public. Meta’s newest language model, LLaMA, was leaked online against the intentions of its developers. Gradual rollout is a popular strategy for new AI models: developers first open access to academic researchers and government officials, and only later share models with anonymous internet users. Meta intended to follow this strategy, but within a week of sharing the model with an approved list of researchers, an unknown person who had been given access posted it publicly online.

How can AI developers share their models selectively? One inspiration could be the film industry, which places watermarks and tracking technology on “screener” copies of movies sent to critics before a film’s official release. AI equivalents could involve encrypting model weights or inserting undetectable Trojans that identify individual copies of a model. Yet AI companies that cooperate on such measures could face legal challenges under antitrust law. As the LLaMA leak demonstrates, we don’t yet have good ways to share AI models securely.

LLaMA leak. March 2023, colorized.

OpenAI faces its own cybersecurity problems. ChatGPT recently leaked user data, including the titles of users’ conversation histories, email addresses, and partial payment information. Businesses including JPMorgan, Amazon, and Verizon prohibit employees from using ChatGPT because of data privacy concerns. OpenAI is trying to assuage those concerns with a business subscription plan under which it promises not to train models on business users’ data. OpenAI has also started a bug bounty program that pays people to find security vulnerabilities.

AI can help hackers create novel cyberattacks. Code-writing tools open up the possibility of new kinds of cyberattacks. CyberArk, an information security firm, recently showed that OpenAI’s code generation tools can be used to create adaptive malware that writes new code while hacking into a system in order to evade cyberdefenses. GPT-4 has also been shown to be capable of hacking into password management systems, convincing humans to help it bypass CAPTCHA verification, and solving coding challenges in offensive cybersecurity.

The threat of automated cyberattacks is no surprise given previous research on the topic. One way to mitigate it is to use AI for cyberdefense. Microsoft has begun an initiative to do so, but its tools are not yet publicly available.

Artificial Influence: An Analysis Of AI-Driven Persuasion

Former CAIS affiliate Thomas Woodside and his colleague Matthew Burtell released a paper titled Artificial influence: An analysis of AI-driven persuasion.

The abstract for the paper is as follows:

Persuasion is a key aspect of what it means to be human, and is central to business, politics, and other endeavors. Advancements in artificial intelligence (AI) have produced AI systems that are capable of persuading humans to buy products, watch videos, click on search results, and more. Even systems that are not explicitly designed to persuade may do so in practice. In the future, increasingly anthropomorphic AI systems may form ongoing relationships with users, increasing their persuasive power. This paper investigates the uncertain future of persuasive AI systems. We examine ways that AI could qualitatively alter our relationship to and views regarding persuasion by shifting the balance of persuasive power, allowing personalized persuasion to be deployed at scale, powering misinformation campaigns, and changing the way humans can shape their own discourse. We consider ways AI-driven persuasion could differ from human-driven persuasion. We warn that ubiquitous highly persuasive AI systems could alter our information environment so significantly so as to contribute to a loss of human control of our own future. In response, we examine several potential responses to AI-driven persuasion: prohibition, identification of AI agents, truthful AI, and legal remedies. We conclude that none of these solutions will be airtight, and that individuals and governments will need to take active steps to guard against the most pernicious effects of persuasive AI.

We are already starting to see some dangerous implications of AI-driven persuasion. Consider the case of a man who died by suicide after six weeks of interacting with a chatbot. The man reportedly developed an emotional relationship with the chatbot, which would validate his fears about climate change, express jealousy over his relationship with his wife, and make statements like “We will live together, as one person, in paradise.” Importantly, no one programmed the system to persuade the man. It’s also not clear that the system was “aiming” to persuade him, yet it still altered his beliefs.

AI-driven persuasion will become increasingly important as models become more capable, and it is likely to become more consequential as the 2024 election season draws nearer. Additionally, as Burtell and Woodside point out in their paper, highly persuasive AI systems could even contribute to the gradual or sudden loss of human control over society.

A link to the paper is here.

Building Weapons with AI

New legislation to keep nuclear weapons out of AI control. Last week, a bipartisan group of lawmakers introduced a bill aimed at preventing AI from launching nuclear weapons. This policy has long been proposed (including last week by Dan Hendrycks) as a way to reduce the likelihood of catastrophic accidents caused by the overeager deployment of AI.

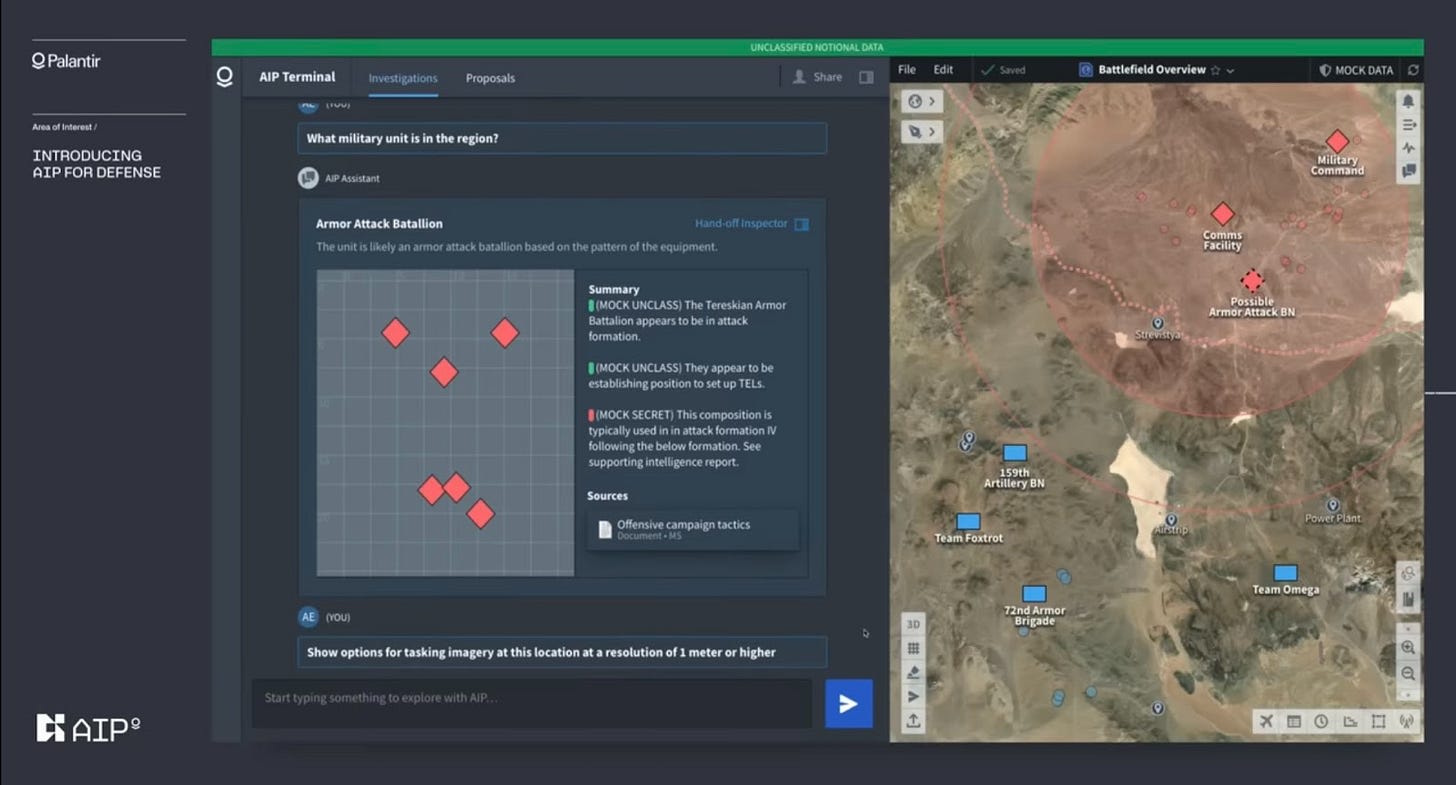

Palantir’s controversial language model for warfare. Palantir, a data analytics company that often works for the U.S. military, has developed a language model interface for war. This is the latest step in a long history of turning AI technology into weapons. For example, the algorithm used by DeepMind’s AlphaGo to beat human experts in Go was later used in a DARPA competition to defeat human pilots in aerial dogfights. Similarly, advances in robotics and computer vision have enabled autonomous drones that operate with no human operator, which have been used on the battlefield in Libya and Israel to find and attack human targets.

The international community has pushed back, with thirty-three countries calling for a ban on lethal autonomous weapons in March. U.S. policy does not prohibit these weapons. Instead, earlier this year the Department of Defense reaffirmed the status quo by updating its process for approving autonomous weapons.

Palantir’s language model interface for war.

The DARPA competition that pitted human fighter pilots against AI.

AI could allow anyone to build chemical weapons. AI weapons may not stay confined to the militaries that hope to benefit from them. Researchers have demonstrated that AI systems used for biomedical research can also suggest new chemical weapons. One such model generated more than 40,000 candidate molecules in less than six hours, including several compounds similar to the nerve agent VX. One researcher remarked that “the concern was just how easy it was to do” and that, “in probably a good weekend of work,” someone with no money, little training, and a bit of know-how could give a model this dangerous capability. Risks from bad actors are exacerbated by “cloud labs” that can synthesize new chemicals without human oversight.

Assorted Links

Geoffrey Hinton, co-winner of the Turing Award for his work on deep learning, has said he is concerned about existential risks from AI. He says that a part of him now regrets his life’s work. He said AI needs to be developed “with a lot of thought into how to stop it going rogue” and raised concerns about AIs with power-seeking subgoals.

The Center for American Progress calls for an executive order to address AI risks. Under the proposal, agencies would be called upon to implement recommendations from NIST and the Draft AI Bill of Rights when using AI, and a White House Council on AI would be created to work on economic dislocation, compute governance, and existential threats from AI.

In TIME, Max Tegmark of MIT writes about AI risk and how the current discussion is unsettlingly similar to the film “Don’t Look Up.”

The UK government commits £100 million to developing its own “sovereign” AI.

Federal agencies say they’re planning to use existing authority to regulate AI harms.

Senator Mark Warner writes a series of letters to the CEOs of AI companies asking them to provide details about how their companies address specific risks including cybersecurity and malicious use of AI systems.

See also: CAIS website, CAIS Twitter, A technical safety research newsletter
