Modelling large-scale cyber attacks from advanced AI systems with Advanced Persistent Threats

Completed as part of the ERA 2023 Fellowship. A huge thanks to Shaun Ee and Moritz Von Knebel for wonderful research mentorship and management respectively. A 12 minute talk that summarises this work is here.

Executive summary:

Descriptions of how misaligned AI systems could pose a catastrophic risk often involve the system engaging in hazardous cyber activity at a large scale. Consider an illustrative example, in which an AI system uses advanced cyber capabilities to gain unauthorised access to classified US government department records, in order to influence and destabilise an election campaign. However, discussions of how such cyberattacks may unfold tend to take place at a relatively abstract level, leaving many important details unfilled. To provide strategic clarity in assessing the likelihood of and preventing such events, this work uses cyberattacks from Advanced Persistent Threat actors (APTs) as case studies for cyberattacks from advanced AI systems.

Attacks conducted by APT actors are highly complex operations, usually requiring large teams (10s to 100s) of skilled humans working for months to years. The tasks involved in executing such an operation include performing extensive reconnaissance on the target, developing novel malware and acquiring computer infrastructure across the globe. We estimate a lower bound of 10,000 hours of human labour in order to execute an attack that targets victims of high value, such as government departments and critical infrastructure. In this report, the lower bound of human hours required to execute an APT attack is called the attack workload.

To identify what type of AI systems are capable of executing APT attacks, we parameterize systems across three dimensions: the number of independent agents running (N), the speed at which they operate (V), and the degree of oversight applied to their actions (F). We use this parameterisation to estimate the time it will take a given system to execute the attack workload, calling this the attack calendar time. We then estimate the attack calendar times for a system during training, evaluation and some stages of iterative deployment, explicitly detailing why systems operating in latter stages of iterative deployment are more likely to have feasible attack calendar times (with feasible in this work defined as 1-100 days).

Under the assumption that future advanced AI systems will be able to execute an APT attack in feasible time frames (1-100 days), we identify two avenues that appear promising to monitor for and prevent such events from unfolding:

Any AI system looking to execute an attack appears likely to utilise frequent access to long-term memory in order to store critical information that is discovered during extensive reconnaissance of the network that it is looking to compromise. Examples of such information are the IP addresses of compromised systems and technical knowledge of sophisticated software vulnerabilities in these target networks. Thus, we explore how frequent and rigorous monitoring of external memory stores that the system has access to could be used to monitor for dangerous cyber activity.

In addition, the most capable APT attackers utilise computer infrastructure consisting of over 1,000 servers across the globe to aid their attacks. Assuming that the acquisition of such resources has a workload of 1,000s-10,000s of hours of human labour, we discuss how human oversight teams could monitor a system looking to obtain this global computer infrastructure.

Our initial investigation into these two monitoring methods is preliminary, and we suggest further work be done that looks to assess the feasibility of the two suggestions above.

Section 1 - Introduction

In many threat models of AI existential risk scenarios there is reference to an advanced AI system engaging in some form of large-scale cyber activity[1] [2] [3].Consider the following illustrative examples:

An AI system hacking into the servers of a BSL-4 lab to gain information on state-of-the-art research in engineering pathogens, in order to allow it to synthesise its own and cause global catastrophe.

An AI system hacking into the computer network of a US government department to access top secret information relating to the plans and locations of key diplomats over the next few days, in order to destabilise the election campaign of a particular presidential candidate’s campaign.

However, the details of how such a large-scale cyber attack may be executed are often glossed over. Attacks on this scale are usually complex, drawn out processes unfolding over months to years; thus by leaving so many details open, there is risk of creating narratives that are unlikely to occur in reality. This piece, therefore, looks to examine in greater detail how such a cyberattack could unfold, in the hope of providing strategic clarity and tactics to assist in avoiding such scenarios. In order to do so, we consider the suitability of cyber attacks from advanced persistent threat groups (APTs) as a reference class for cyber attacks from advanced AI systems. APTs are state-linked groups that execute cyber attacks on the largest scales, and are responsible for infamous attacks such as recent attempts to shutdown Ukrainian power grids, and the 2020 SolarWinds hack.

Section 2 is devoted to justifying whether or not cyber attacks perpetrated by APT groups are sensible models for those that may be carried out by advanced AI systems. Specifically, in section 2.1, we detail the APT attack lifecycle [4] alongside the SolarWinds hack, a recent cyber attack thought to have been amongst the worst cyber-espionage incidents suffered by the US government in its history [5]. The primary goal of this subsection is to provide readers with a clearer and more comprehensive understanding of the modus operandi of APT attacks, in order to form intuitions about the nature of large-scale cyber operations.

In section 2.2, we seek to map the capabilities of APT attackers to AI systems. In short, we look to answer the question of ‘what type of AI system(s) would be able to execute APT-like attacks?’. We first estimate the number of human hours required to execute various stages of the APT attack lifecycle; we then parameterise AI systems along three dimensions (number, speed, and degree of oversight) to determine the calendar time required for an AI system to execute an APT-like attack. In this section we assume AI systems that have skill profiles similar to that of humans, meaning that we are considering very advanced forms of AI, of the type that does not exist today. We justify why we think this assumption is necessary, and explore its implications and caveats in section 2.2.1. In section 2.3, we explore how some environments of operation could be more conducive to facilitating APT attacks than others. For example, consider an AI system of fixed capability; it would be far easier for such a system to execute an APT attack if it had access to a wide range of tools and was subjected to relatively little oversight, which may be seen in mature stages of deployment, compared to the case in which a large proportion of its actions are regularly being monitored and there are significant guardrails on the model’s autonomy. We describe these stages of development and deployment in further depth, concluding with a concrete example of the type of AI system that might be able to execute an APT-like attack in order to ground the discussion of section 2.

In Section 3, we premise upon APT attacks being a useful model of cyber attacks from advanced AI systems. We then explore how large-scale dangerous cyber activity from advanced AI could be prevented. Section 3.1 scopes this question further, given that there are a number of junctures to prevent misaligned cyber behaviour, such as identifying when dangerous cyber capabilities in a model arise, or aiming to rigorously monitor and oversee model behaviour. We focus on monitoring/oversight methods, given that the technical knowledge required for evaluations of dangerous model capabilities are still under developed[6]. Furthermore, we can distinguish monitoring mechanisms into pre-compromise and post-compromise monitoring; the former concerns model behaviour before malware is inserted onto a target network, with the latter instead focusing on oversight methods after a model has established connection with a target network. Section 3.1 argues that monitoring model behaviour during the pre-compromise phase is particularly promising in preventing dangerous cyber activity. Of course, in order for a human-led [7]team to monitor model behaviour, we must assume that the AI system does not exfiltrate whilst executing the APT-like attack—this assumption is examined in section 3.1.1, and relaxed to an extent later in the piece.

Section 3.2 then makes high level recommendations for useful actions in pursuit of pre-compromise monitoring of advanced AI systems to prevent APT-like attacks. Section 3.2.1 overviews three key steps that are executed in the pre-compromise phase—namely reconnaissance, resource acquisition and initial access (to the target network). Section 3.2.2 then examines monitoring of system long-term memory to prevent large-scale, dangerous cyber activity; section 3.2.3 focuses on monitoring mechanisms for the acquisition of computer infrastructure that is required to facilitate such attacks.

Section 2 - APT attacks and the mapping to AI systems

Section 2.1 - The APT attack lifecycle, and the SolarWinds hack

In this section, we explore the lifecycle of an APT attack in greater detail. The aim is to present how large-scale cyber attacks conducted by APTs unfold, to begin assessing the utility of these events as models for cyber attacks from advanced AI systems. The life cycle presented is based upon Mandiant’s framework of APT attacks [4] ;we shall also refer to case studies of APT attacks/actors, namely the SolarWinds attack (carried out by APT29 [8]), as well as a Chinese APT group known as APT1 [9] We also briefly highlight key takeaways from the APT attack lifecycle that are relevant for our study throughout.

Background on SolarWinds: The SolarWinds hack was a cyber espionage operation carried out by an APT attributed to the Russian state, that took place from the fall of 2019 through to December 2020/Jan 2021. SolarWinds is an organisation that provides third party software to 10,000s of companies across the globe, and in October 2019 the APT group above were able to infect an update pipeline for one of SW’s most popular products—the Orion platform. The malware installed into Orion allowed the attackers privileged access to the networks of infected victims, some of which are listed below. Whilst the infected software was downloaded by victims in the spring of 2020, it was only discovered in December 2020 giving the attackers months to siphon away classified data. The length of operation is a key feature of APT attacks that will be discussed later on.

The full extent of the operation is still unknown, though the attack is likely to have significant national security implications for the US. The departments of Homeland Security, State, Commerce and the Treasury all reported missing emails following the attack [10], with the Pentagon and National Nuclear Security Administration also found to have been breached [11]. In addition, there are fears that the attackers could have established a permanent presence in compromised networks, enabling more destructive attacks in the future[12].

2.1.1: Initial Compromise

The first stage of a cyber attack involves the attacker being able to get malicious code (malware) onto the network of their intended victims. This is the initial compromise stage.

Sophisticated attackers use a variety of methods. One of the most common is spear phishing, in which the attackers directly target the intended victim with highly personalised emails or websites. For example, the attacker may set up an email account to impersonate a trusted colleague of the victim, and send them a relatively innocuous looking file that, if downloaded, installs malware onto the victim’s device.

Attackers may also take an indirect approach, such as watering hole attacks. If the attacker is aware of websites that the intended victim visits regularly, and the web server that hosts this website has poor cyber security, the attacker can breach these servers instead with webshells. When the victim’s computer interacts with the compromised web server, injection of the malware onto the target device can take place.

Key point: Complexity of ‘frontier’ APT attacks

However, as was the case with the SolarWinds hack, attackers may be forced to take a more novel and complex approach. The SolarWinds attack was executed by compromising SolarWinds’ CI/CD pipeline, leading to victims downloading the malware onto their systems when new updates to the Orion platform were released. This is known as a supply chain attack, though one had never been conducted on such a scale before[5]. Other highly complex techniques involved the reverse-engineering of Orion’s communication protocols, allowing the attackers to effectively conceal their network traffic. This likely led to them being able to operate on the compromised systems for upwards of 6 months[11] .

The paragraph above illustrates an important point: for the highest value targets—nuclear command and control for example—advanced AI systems looking to conduct cyber attacks might need to push forward the state of the art in identifying and exploiting vulnerabilities, as opposed to utilising existing methods [13], which sophisticated defenders are likely to have addressed. When targeting a sophisticated defender with a novel attack method, the preparatory steps of (a) identifying and performing reconnaissance on the target and their network, as well as (b) acquiring the infrastructure for the attack, and then (c) writing and testing the malware can take months to years of work by hundreds of hackers. A senate hearing following the SolarWinds hack claimed that ‘at least a thousand’ engineers were involved in executing the hack [14]; even though the tech executives that made these claims worked for organisations that were breached, and thus had an incentive to inflate numbers, it seems likely that hundreds of skilled workers are required to execute APT attacks of the largest magnitude.

2.1.2: Establishing and strengthening the foothold

Once inside the victim’s network, attackers may look to further establish and strengthen their foothold within the network.

Attackers can install various types of malware onto a victim’s network, however for cyber espionage attacks the most commonly used malware is a backdoor. Backdoors give an attacker the ability to send commands to a victim’s system remotely—some examples of these commands, taken from a backdoor named BISCUIT [15] that was developed by the APT1 group , are given below:

Key commands that attackers can execute on a victim device upon installing a backdoor.

More complex attackers may equip their backdoors with the ability to mimic communication protocols, so that to network defenders monitoring network outflows, the backdoor communications look like legitimate, ordinary network traffic.

Key point: Extensive attack infrastructure to mask communications

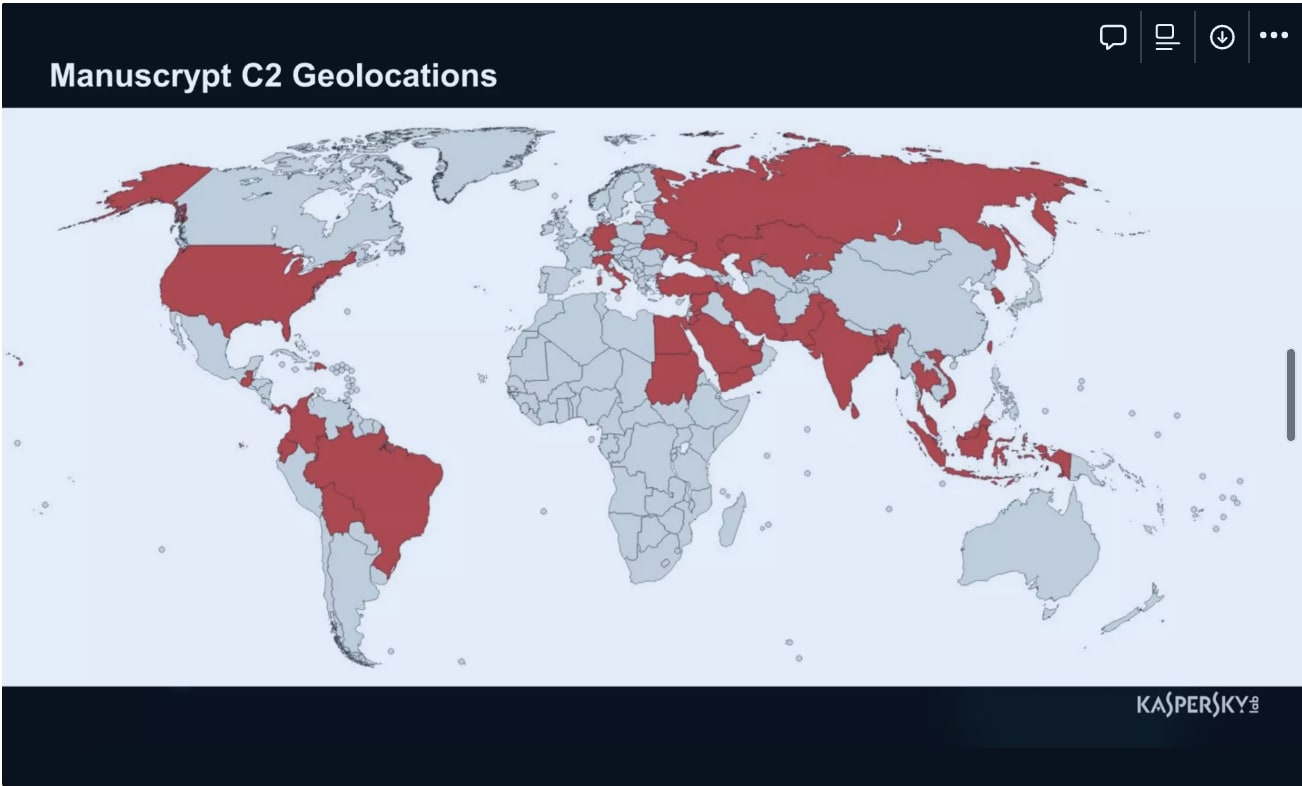

To aid in executing an attack covertly, large APT groups may utilise network infrastructures containing upwards of a thousands devices located across the world. To see why this is the case, consider the perspective from a network defender: if a computer in a US-based network suddenly begins communicating at length with a server in China, it’s easy to see that something may be awry. Experienced cyber defenders would be able to identify this quickly, examine the connection for suspicious behaviour, and terminate if necessary. To bypass this issue, APTs either redirect their network traffic through ‘hop points’ - devices that the group have compromised for relaying communications, rather than directly for their data. They may also install ‘command and control’ (or C2C) software, allowing them to conduct their attacks remotely. APT1 for example, whilst believed to operate out of Shanghai, had over 100 servers in the US (as of 2013) [16] from which to receive data and send instructions to their installed backdoors.

Figure 1: Global distribution of servers utilised by the group APT1 [16].

Figure 2: C2 locations used by the APT38 group to run malware known as ‘Manuscrypt’.

Should advanced AI systems look to execute APT attacks on defenders with sophisticated cyber defences, these systems may also look to build a complex network infrastructure prior to executing an attack. This is discussed in further depth in section 3.2.

2.1.3: Lateral Movement

Lateral movement is the stage in the cyber attack lifecycle in which the attacker explores and escalates privileges once inside the compromised network. Lateral movement can be roughly decomposed into two phases—internal reconnaissance and privilege escalation. Internal reconnaissance involves the attacker exploring the victim’s network to determine the location of high-value data; privilege escalation is the process by which the credentials to access this data are acquired.

Key point: Tool use during lateral movement

Whilst it seems harder to spot internal reconnaissance and privilege escalation taking place as a human operator of the AI system, these stages often involve the use of third party tools (e.g: nmap, mimikatz); restricting a system from using such tools could act as a significant blocker to it successfully engaging in lateral movement. Exploring this in further depth is not pursued in this piece however.

Section 2.2

Section 2.2.1 - What type of AI systems can execute APT attacks?

To determine the type of AI system able to execute an APT-like attack, we’ll begin with making an order of magnitude estimate for the number of human hours required to execute APT-like attacks. There are claims that SolarWinds was the result of ‘at least 1,000 engineers’ [13], though bear in mind that such numbers were claimed by the tech executives that ran the companies that were breached, and so are prone to exaggeration. Let’s account for this by assuming that there were ~200 hackers involved instead [17]. SolarWinds unfolded over the course of 14 months [5](from testing code in Oct 2019 to detection in Dec 2020), though of course, not all of the 200 hackers will have been working for 14 months. Let’s say we had 200 engineers working 40 hour weeks for an effective 2 months (8 weeks). This yields approximately (200 x 40 x 8) = 64,000 hours of labour from skilled human hackers.

Consider also the acquisition of extensive computer infrastructure − 1,000 remote C2 servers in the case of APT1. There’s no clear indication of how long it may take an APT group to establish a remote network infrastructure of this size but it clearly seems to be a non-trivial task. My intuition is that building up this sort of network infrastructure might take months-years of work, again suggesting a work load of at least 10,000 skilled human hacker hours [18] to acquire the infrastructure required for an APT attack. Let’s call the number of human hours it takes to execute an APT-like cyber attack the attack workload.

These numbers help us gain an idea of the types of AI systems that might be able to execute an APT-like attack. Let’s parameterise future AI systems along two dimensions—N (number) of independent agents and V—speed at which one system can operate. Let’s further make the assumption that the capabilities profile of a single system is similar to humans—we’ll discuss this in further detail in section 2.2.2, but for now this means that we’re considering advanced AI agents—equipped with long term planning/action capabilities like humans. We make this assumption because it seems that conducting a cyber-attack on this scale would be an ‘AI-complete’ [19] task, given the open-ended nature of the operation. In this analysis we also assume a system that has not been fine tuned for cyber capability, the reason for this being that I expect such systems to pose a greater risk with regards to taking human operators ‘by surprise’ with their emergent capabilities [20].

Our parameterisation of systems along the dimensions of N and V allow us to obtain a first order estimation on how long a particular system might take to execute large-scale, APT-like attack. For example, a system with V=100 (operates 100 times faster than a human) would take only 100 hours to do the work required for an operation with an attack workload of 10,000 hours. Let’s call this time that it takes for a system to execute a given attack workload the calendar time of the attack.

We can incorporate another parameter that reflects the degree of oversight applied to advanced AI systems, in order to give a more accurate description of its ability to perpetrate cyber attacks. If we assume the model does not exfiltrate (see section 3.1.1 for more on this assumption), then some of the model’s actions will be overseen by a human administrator [21]. If then, 10 GPT-7′s are set off to work on NLP research and they all suddenly start writing malware files and doing social engineering research, one is likely to quickly notice something is awry. In reality, a misaligned system will execute a certain fraction of actions inline with the human’s wishes—to give the false impression that it is behaving as it should be - and the remaining fraction will be in service of its misaligned goal. For example, in order to avoid being caught, a misaligned system may execute 99% of actions inline with the human user’s wishes, and one percent of its actions in pursuit of its misaligned goals. Let F denote the fraction of actions that a model executes in service of its misaligned goals (F=0.01 in the previous example). This simple conceptualisation allows us to capture the impact of human oversight on the model—greater oversight means that a model takes longer to execute an APT attack. If one in every ten actions (F=0.1) are in service of the model’s cyberattack, it takes ten times as long to execute. Example 1 in Table 1 gives NVF values that lead to a model taking 100 hours to execute an attack. In further analysis, we refer to the time it takes a model to execute an attack as the attack calendar time.

What might be sensible values for F? It seems that F=0.1 is far too large; this would lead to a model taking a ‘misaligned action’ 10% of the time, which would lead to a ~10% reduction in productivity on the task it was intended to do [22]- something that would appear noticeable to the human operators.

In further analysis, we usually assume systems with N,V,F parameters that yield calendar attack times on the order of days, weeks, or months. Example 2 in Table 1 gives NVF parameters that yield an attack calendar time of roughly 80 days, or 11 weeks. Moreso, the value of V in this example is what one may expect of foundation models in a decade’s time [23]. On the other hand, this piece does not consider systems that facilitate to attack calendar times on the order of minutes or hours, due to the difficulty of conceiving measures to prevent and/or monitor such an attack. We label systems with such NVF parameterisations as ‘strong superintelligence’, exemplified by example 3 in Table 1.

N | V | F | T (hrs) | |

Example 1 | 10 | 10 | 0.01 | 100 (4 days) |

Example 2 | 100 | 5 | 0.01 | 2000 (11 weeks) |

Example 3: ‘Strong superintelligence’ | 1000 | 1000 | 0.1 | 0.1 (6 minutes) |

Table 1 - NVF systems and their attack calendar times T. An attack workload W of 10,000 hours is assumed. All attack calendar times are given in hours, then with a more intuitive time measure.

Section 2.2.2 - Identical skill profiles to humans assumption

In conceiving of AI systems that are able to conduct large-scale cyber operations without human support, it seems that we require the assumption of human-like capabilities and generality. The intuition guiding this is that in order to constantly outwit a human defender, the attacker also requires near human level intelligence.

Another key point to clarify here is that section 2.1 gave the attack workload in terms of the number of hours of labour for skilled human hackers—however an AI system with a human level skill profile won’t have such advanced hacking capabilities. I think we can mostly gloss over this detail for the following reason: If we assume no qualitative capability leaps from a regular human to a skilled human hacker, then we can say something like ‘X humans with regular hacking capability will be able to replace one skilled human hacker’. The effect of this on the attack workload is to increase it—i.e, we’d need a more capable system (higher N,V, lower F) to execute the attack in the same calendar time. By ignoring the skilled hacker v.s regular human distinction, the analysis here considers a worst case scenario world.

Section 2.3 - How environments influence the attack calendar time

In this section we develop ideas of which types of AI systems could conduct large-scale cyber attacks by considering the environments in which they operate in.

Let’s consider the following stages of development and deployment of an AI system:

Training and external evaluation,

Internal deployment,

External, iterative deployment

We now characterise each stage, making rough estimates of calendar attack times for APT attacks using the NVF framework of section 2.2. I personally have large uncertainties about realistic NVF values at each stage, and further work to refine these estimates would be useful. Assume that in order to be progressed from one environment onto the next, the model is subjected to a range of model evaluations such as in[6]; however such evaluations are still underdeveloped, and thus misaligned models can slip through. The numbers purely serve to illustrate the framework that we are using to think about the feasibility of large-scale cyber activity during a system’s lifecycle. More generally, the aim of this section is to paint a clearer picture of how reduced guardrails and increased capabilities that result from different environments of model operation lead to large-scale operations—such as the APT attack workload, becoming more feasible.

Training

During this stage, it seems likely that there would be relatively large degrees of oversight on the model’s actions. For example, if the model is misaligned then during training it may only execute 1 dangerous action for every 999 aligned [24]actions it takes in order to avoid detection (F=0.001). Moreso, I expect there to be guardrails on things such as the number of sub agents that a model can deploy (for concreteness, let’s assume this is set at N=10 during training—the system can’t deploy more than ten copies of itself). With V fixed at 5 (the system operates five times quicker than a human) [23], then dangerous cyber activity with an attack workload of 10,000 hours would lead to an attack calendar time of roughly 23 years [25]. This is an unrealistic calendar time for attack. Note, the biggest contributor to the calendar time of the attack is the factor F=0.001 - this is what makes model exfiltration such a significant event (see section 3.1.1.)

External evaluation

This stage might relax some NVF parameters on the system. For example, external evaluation might allow N up to 100. Moreso, there will be qualitative changes that aren’t captured by our simple NVF framework that would assist in the model’s capability to engage in an APT attack. For example, the model may be given access to more tools than it had during training—such as web browsing, bash scripting, access to APIs of other AI tools. This was the case for Autonomous Replication and Adaptation (ARA) evaluations performed by ARC [26]. However, given that external evaluation will still apply large degrees of oversight and limited tool use, calendar times for attack may still be on the order of hundreds of days. If the model possesses situational awareness [6], the most sensible strategy appears to wait until it is outside of evaluation stages.

Iterative deployment

Under this framework, systems that are able to get through the APT attack workload in feasible time frames (on the order of days, or tens of days) thus seem to be those that will operate in deployment conditions [27]. Deployment will take place iteratively, in multiple phases in which model autonomy and capability grow. I’ve sketched out three phases below to convey a rough sense of how iterative deployment may unfold, though note that the phases are not mutually exclusive. I also do not try to put NVF numbers at each one of these stages—the idea is that reduced oversight, increased autonomy and work capacity (in the form of more agents running) and additional tool usage will allow the calendar attack time to creep towards feasible time frames.

To ground this discussion with an example, consider an OpenAI system slowly being integrated into a Tesla car manufacturing factory to manage operations such as supply chain logistics, factory security and more [28]. Of course, this example is intended to be purely illustrative.

Phase 1 - the AI system is responsible for managing only 5-10% (say) of the factory workflow, and is only allowed to run 20 copies of itself to do so. It is responsible for limited tasks—just writing and sending emails to arrange supply deliveries, and monitoring internal and external factory security. Both OpenAI (as the provider) and Tesla (as the customer) subject the system to relatively large degrees of oversight (for example, there are hourly checks on key model outputs), and the system is complementary rather than substitutable to the human workers. Model autonomy on these tasks is limited, through methods of structured access [29], such that the system can only execute tasks of pre-defined length—this limits the length of emails the model can send. This could last months as OpenAI and Tesla carry out rigorous checks to ensure that their product is working as intended.

Phase 2 - The system is given more autonomy. Phase 1 was successful, and the system has shown competency in being able to independently perform a small range of tasks, leading to a larger part of the workflow being automated. Human workers start becoming substitutable rather than complementary, and new system capabilities are being implemented [30]. This phase (and its subphases) could last years. Here, we may see:

The model gaining access to new tools/privileges, as its use of tools during phase 1 was deemed safe and productive. For example, in phase 2 we may see Tesla beginning to allow the model to push code to its GitHub repositories.

The model being allowed to run more copies of itself. For example, in phase 1 there could have been 20 copies of the model running to automate supplier communications and monitor security. 50 more copies are run in phase 2, given the additional task of identifying bugs in code that runs factory machinery and flagging them to a team of human software developers.

Phase 3 - In this phase we may see:

Far less structured access; in the limit, this may look like OpenAI giving Tesla access to the model weights, allowing Tesla software engineers to fine-tune the model for their specific needs. Reduced structured access would also lift restrictions on the number of calls to the model, or the length of action chains that the model can execute.

Tesla may scale their AI workforce to 200 agents, overseen by a command team of 30 human employers equipped with AI tools for monitoring.

Complex tool use/accessibility—The model showed competency with tool use in phase two [31], operating as intended with few incidents of unintentional behaviour. As a result, the model is allowed to begin communications with Tesla factories across the United States, granting it access to a far wider network of computer devices.

Section 3 - Preventing large-scale hazardous cyber activity from advanced AI systems

In the following section, we premise upon APT-like attacks being useful models for how cyberattacks from advanced AI systems will unfold. That is, we assume calendar times for attacks on the order of days/weeks/months, as well as adversarial AI systems that are of human level capability. Given that we now have a clearer picture of the stages by which such an event could unfold, we now ask: What can be done to prevent such attacks from occurring?

We begin by scoping this question further, in the process discussing a key crux of this analysis: whether a model can engage in actions required to facilitate an APT attack, whilst not being able to exfiltrate from the network that it is operating on.

Section 3.1 - Scoping the problem and model exfiltration

To help ground the question(s) we are considering, consider the following scenario:

You are on the network administration team of a Tesla car manufacturing company in 2035, operating an AI system that is responsible for managing supply chain logistics in phase 2 of iterative deployment. Call this system CARMSAL [32]. You find out that CARMSAL has been engaging in a cyber espionage attack on a BSL-4 lab over the past 40 days, siphoning away information about the state-of-the-art methods used to synthesise a novel version of smallpox. How did this happen?

I believe that the network administrators of such a system would be asking the following two questions:

When were the capabilities for this attack acquired by the system? Recall that we’re assuming a system that was not fine tuned for cyber espionage. Did giving the model access to a certain tool facilitate this cyber attack? When did the ability to write complex malware appear?

How was the system able to execute such an attack without being discovered? Irrespective of capabilities, the system engaged in reconnaissance, acquired computer infrastructure, infected the BSL-4 lab servers with malware, then spent weeks engaging in lateral movement to find and make away with classified data. How was all of this activity able to go unchecked?

In this piece, we focus on question two due to matters of tractability. Evaluations of model capabilities at key stages of its development and deployment (question 1) are critical for developing safe AI systems, but such evaluations are still in their infancy [26].

In order to make progress on question two, we need to make another, quite significant assumption, namely that the model engaged in the cyber attack above did not exfiltrate from the Tesla factory network—which we’ll refer to as the model’s host network from now on. Once a model propagates itself to another network, there appears to be relatively little that a network administrator can do in terms of applying oversight. This is a critical assumption, and is discussed in further detail in section 3.1.1. Section 3.1.1 also discusses why, even if you don’t buy the ‘model does not exfiltrate’ assumption, the analysis and recommendations in section 3.2 might still be relevant.

We now split the model’s execution of the cyber espionage attack into two stages—pre-compromise and post-compromise.

We take pre-compromise as the stages of the attack lifecycle (section 2.1) up to and including insertion of malicious code onto the network of the target.

We take post-compromise as the stages of the attack lifecycle after insertion of malicious code onto the target network. In this phase, the attacker focuses on strengthening its foothold within a network, performing internal reconnaissance of the target network, escalating privileges and extracting target data.

Monitoring/oversight of pre-compromise behaviour appears to be more promising as a method to prevent cyber activity, the primary reason for this being that pre-compromise behaviour appears to account for a larger proportion of the attack workload. Whilst adversaries might operate in a compromised network for hours, days, weeks or even months once it has been compromised, cyber attacks on the scale of APT attacks often take years of planning.

Section 3.1.1 - Model exfiltration considerations

First, some clarification on what we mean by exfiltration. We say a model exfiltrates from the host network if it manages to run a copy of itself on a device that is not considered to be part of the host network. The implications of model exfiltration are significant—in the NFV framework above, a model operating on a device outside of a network administrators control may essentially be subject to no oversight, leading to F=1 (recall that F was the greatest contributor to large calendar attack times—section 2.2). Moreso, guardrails under the case of F=1 may be non-existent—this could lead to the model scaling N by orders of magnitude.

One can see then that once a model has exfiltrated, methods of preventing a cyber attack are largely out of the hands of a network administrator.

Now, let’s restate the assumptions we are making with respect to model exfiltration, which we’ll call the exfiltration assumptions. We’re assuming that both of the following hold:

a.) We’ve got good assurance that the model won’t exfiltrate → i.e, it can be contained within the network it was intended to operate on.

b.) Even though it can’t exfiltrate, the model can still engage in dangerous cyber activity such that it can conduct APT-like attacks.

Given that the exfiltration assumptions are in tension with each other, how likely is a world in which they both hold? A reason against this is that it would be extremely instrumentally useful to exfiltrate, thus meaning that it would be an early component of most long term plans made by the model. Remember that exfiltrating corresponds to setting the oversight fraction F to 1 in the NVF framework of section 2, in the process drastically reducing the attack calendar time. In addition to, I expect that in later stages of deployment models will be regularly interacting with other networks, making it harder to prevent exfiltration without significantly limiting the usefulness of a model.

On the other hand, the problem of model exfiltration is well known in the AI Safety community, and there have been recent calls from leading AI safety researchers to develop model evaluations solely for detecting exfiltration attempts [33]. In even modestly safety-conscious actors, I expect significant resources to be devoted to monitoring for model exfiltration during training, external evaluation and in early stages of deployment, given the extreme cost of the event. Further, two technical reasons for both the assumptions to hold:

Model exfiltration might look like a qualitatively different type of network traffic that can be caught easier than other type of network traffic.

Neural network parameter counts are growing exponentially[34] . The largest models today may betouching trillions of parameters [35]. Assuming that a model has on the order of 10^14 parameters—a number that is likely to be achieved in the coming years -that each parameter is a 32 bit floating point, we have 1 parameter at 4 bytes and thus the weights of a 100 trillion parameter model at ~100s of GBs. Assuming no compression, this is a huge data packet/data packets exiting the network (even if split into 10 packets each with ~10s of GBs). Other network traffic that is involved in executing cyber attacks may only be on the order of kilobytes, or megabytes—meaning that model exfiltration might be substantially easier to track and monitor.

Frontier models might maintain a large inference footprint

Let the inference footprint of a model be the CPU RAM and the GPU RAM that it needs to run. GPT-3 requires 100s of GB of both CPU RAM and GPU VRAM to run—that is, it possesses a huge inference footprint. A model looking to exfiltrate might then find it very difficult to find a machine that it can exfiltrate to—as most machines will not support its inference footprint. If only 1 in every 1000 devices that the model can exfiltrate to support its inference footprint, and network overseers monitor these special devices closely, then it may be difficult for the model to exfiltrate.

These reasons are less likely to hold if models are able to be significantly compressed, which I imagine will be strongly incentivised as models begin to be used commercially.

All things considered, the chance that we are fortunate to live in a world where both the exfiltration assumptions hold will be dependent on the success of model exfiltration evaluations and the degree to which model compression methods advance.

In section 3.2.2 and 3.2.3, we consider monitoring/oversight mechanisms premising on attack workloads of tens of thousands hours of skilled human labour—in other words, we assume that the model does not exfiltrate. How relevant is the analysis of section 3.2 if you believe that the exfiltration assumptions (a) and (b) are strongly in contention and extremely unlikely to both hold (i.e models will always exfiltrate before they attempt anything on the order of an APT attack)?

I believe that section 3.2.2 is still relevant, but 3.2.3 less so. Section 3.2.2 looks at methods to catch models engaging in dangerous cyber activity during reconnaissance of the network they will be targeting. Even if the model exfiltrates, there will be some attack workload to execute first during the reconnaissance stage, the magnitude of which depends on how strongly the points raised above hold. If you believe that the workload for reconnaissance is on the order of 1000s (or even 100s) of hours, the analysis of section 3.2.2 still holds, albeit on smaller scales. If you believe that the attack workload required for model exfiltration is more like 10s of hours, the analysis may not be useful.

Section 3.2.3 looks at how to monitor a model’s acquisition of a large computer infrastructure to carry out its APT-like attack. If the model is to exfiltrate first however, it is unlikely to need such large-scale infrastructure, and the analysis of section 3.2.3 may not be so relevant.

Section 3.2 - Monitoring and oversight mechanisms for pre-compromise activity

In this section we focus on monitoring, oversight and regulation methods for pre-compromise activity of advanced AI systems. Section 3.2.1 uses the MITRE ATT&CK [36] framework to decompose the pre-compromise phase into three substages—reconnaissance, in which attackers must collect information about their target network, resource development, where attackers acquire the necessary computer infrastructure, technical, and financial tools to facilitate their attack, and initial access, which can be identified with the step of inserting the malware onto the target network. We follow with high-level recommendations for monitoring and regulation of advanced AI model access to long-term memory (section 3.2.2), and oversight of potential acquisitions of computer infrastructure (section 3.2.3).

Note: Considerations of how the general, agentic systems assumed in this piece could also be used for cyber defense are out of scope of this analysis. Whilst we do not consider it here, it is incredibly likely that the most sophisticated defenders will utilise some form of automated defense system. This is a key line for further work (see section 4).

Section 3.2.1 - Decomposing the pre-compromise phase

This subsection is largely based upon the MITRE ATT&CK framework. We now briefly describe each of the three phases of pre-compromise in turn. Note that in reality, the lines between the stages are blurred.

Reconnaissance: The MITRE ATT&CK framework describes the reconnaissance stage as follows:

“Reconnaissance consists of techniques that involve adversaries actively or passively gathering information that can be used to support targeting. Such information may include details of the victim organisation, infrastructure, or staff/personnel. This information can be leveraged by the adversary to aid in other phases of the adversary lifecycle…”

Most methods of compromise can be grouped into one of either two buckets—methods of active compromise or passive compromise. Active compromise involves the attacker directly interacting with the target network, perhaps by intersecting and studying network traffic going to and from the network [37], or exploring their software systems for exploits. Passive reconnaissance involves methods that gather information in indirect methods—this can be as simple as browsing the target organisation on the web, or searching open technical databases for known vulnerabilities in the software that the target uses.

What might be the attack workload of this stage? It’s variable, but for the largest APT-like attacks, it can involve hundreds of analysts working for months, or a smaller group working for years. One source estimates that it would have taken ten highly skilled software engineers 2-3 years to write the infamous Stuxnet worm malware [38] [39] , suggesting an attack workload on the order of 1000-10,000s hours (see section 2.2).

Resource development: The MITRE ATT&CK framework describes the resource development stage as:

“Resource Development consists of techniques that involve adversaries creating, purchasing, or compromising/stealing resources that can be used to support targeting. Such resources include infrastructure, accounts, or capabilities. These resources can be leveraged by the adversary to aid in other phases of the adversary lifecycle.”

Cyber attacks may look to acquire a variety of resources. As discussed in section 2.1, a large computer infrastructure is required to facilitate APT-like attacks; we discuss this in further depth in section 3.2.3. Attackers may also look to acquire financial resources, account credentials and human resources such as ‘insiders’. The success of a model in acquiring these resources will be dependent on the model’s skill profile and the conditions of its deployment. For example, a model with weak social engineering skills that is only able to interact through a text interface could struggle to acquire inside accomplices. A model fine-tuned for stock trading and with access to an online brokerage account is well-placed to acquire significant financial resources.

Initial access: The MITRE ATT&CK framework describes the initial access stage as:

“Initial Access consists of techniques that use various entry vectors to gain their initial foothold within a network. Techniques used to gain a foothold include targeted spearphishing and exploiting weaknesses on public-facing web servers.”

The main methods of initial access were covered in section 2.1.1. A key input to an attacker’s ability to gain access to a network in this stage is its ability to write sophisticated malware. As AI models are given more autonomy to write complex code, it will become increasingly difficult to determine whether files produced by AI systems may contain malware. We do not focus on this issue in further depth, however we note that specialised, ‘tool-like’ AI assistants have already been suggested to evaluate codebases for malware produced by more capable AI systems [40].

Section 3.2.2 - Monitoring and regulating long-term memory access

As implied by the previous section, the steps involved in the reconnaissance and resource acquisition stages may yield attack workloads on the order of hundreds, thousands, or tens of thousands of hours. In calendar time, this could translate to advanced AI systems engaging in ‘reconnaissance projects’ on the order of weeks to months. If we are to consider the agent architecture of recent LLM agents [41], in which the context window of the LLM is roughly analogous to short-term memory and the agent is also equipped with long term memory, then lengthy reconnaissance projects will require the agent to constantly utilise its long-term memory. Concretely, say a model spends 1 calendar hour doing ~5 hours of reconnaissance research, after which it must interact with the human oversight team for the next ~1 calendar hour. It thus stores the results of its reconnaissance progress in its long term memory. When it has finished interacting with the human overseers it looks to continue its reconnaissance research, and so must call the results of its work from the place it was stored. If we’re assuming an attack workload of 1,000 hours, this storing-calling process will unfold ~200 times. This appears like a promising juncture for monitoring the model’s behaviour for malicious cyber activity, which we recommend is the subject of further research. Some open questions (to our knowledge) that may be useful in determining how to monitor model long-term memory are:

How might searches through the model’s long-term memory be implemented by human/AI overseers? For example, we may want to search the model’s memory for lists of IP addresses of devices it has compromised. Do some types of long term memory storage facilitate easier searches than others? An example here might be long term memory storage in the form of text files written in English, versus a vector embedding database storage as is currently used in LLM agents; the former clearly appears easier to search than the latter.

If reconnaissance research requires a relatively large amount of long term memory storage, and we had strict limits on model long-term memory, then conducting reconnaissance research would come at a tradeoff to the performance of the task that the system is intended to perform. How plausible would it be to place strict limits on the model memory?

As a stronger version of question (1), how plausible are periodic full wipings of memory? This would reset any attempts a model was making to engage in dangerous cyber activity. However, would the operational tradeoff here be too significant to make this feasible?

Section 3.2.3 - Monitoring acquisition of computer attack infrastructure

As discussed in section 2.1, APT attacks often utilise large computer attack infrastructure. These are primarily used for two things:

Remote C2C servers, to send and receive commands and data from the target network

Hop points, which relay network traffic from the target network to and from the attacker’s C2C servers.

The largest APT attacks may utilise computer attack networks of hundreds to thousands of devices located around the world (section 2.2). Initially an AI system will begin operating on a network without access to such extensive attack infrastructure, which begs the question: how may it acquire this? This appears like a non-trivial task that could be the target of monitoring methods.

The most natural method for a system to build up this computer attack infrastructure may be extended phishing campaigns, in which it infects devices across the world with hop point/remote C2C malware over the course of weeks to months. Large scale deployment of narrow, tool-like systems that can identify phishing attacks may be the best method of dealing with this.

One difficulty in monitoring an advanced AI system that is building a large attack network infrastructure may be that once the malware is installed on a remote device, the system need not communicate with the compromised device until it begins its attack. That is, it may install the malware on a remote device, then the communication link would proceed to ‘go dark’ for weeks/months until the attack begins. However, this could mean that there would be a noticeable uptick in network activity when the system began executing its attack, as it begins utilising its computer infrastructure. A plausible line for intervention into a cyber attack by a team of human overseers may then be in identifying this increased network activity that signals the start of an attack.

Section 4: Conclusion

The process by which advanced AI systems could execute large-scale cyberattacks has received relatively little attention so far in AI x-risk discussions; given the complexity of such operations, this may lead to conceptions of cyber activity from AI systems that stray from reality. This piece has tried to address that gap, by assessing the suitability of attacks from advanced persistent threat actors (APTs) as a reference class for such attacks. We estimated the number of skilled human hours required to execute APT-like attacks in section 2, finding that tens of thousands of hours of work appears to be a lower bound for such an operation. We used a 3 parameter conceptualisation of the capability of future AI systems to then determine which systems may be able to execute the attack workload of APT-like operations in reasonable calendar times (days, or tens of days).

Section 3 looked to make high-level recommendations to prevent such scenarios unfolding. We focused on methods of model oversight prior to it compromising a target network, although we assumed a case in which the model does not exfiltrate in order to make this question tractable. We identified monitoring of (a) long-term memory storage, and (b) acquisition of extensive attack infrastructure as potentially promising junctures to nullify advanced AI systems engaging in dangerous cyber activity.

Further work from this study can be divided into two main groups:

Addressing assumptions:

We made a number of simplifying assumptions which would benefit from being relaxed.Assumptions on model exfiltration. Is it the case that we could see a model engaging in dangerous cyber activity whilst being unable to exfiltrate? This seems dependent on the extent to which model compression will be able to reduce the size model’s parameter count and its inference footprint (section 3.1.1.)

How might advanced AI systems that are as general and agentic as those considered in this study be able to assist in cyber defence?

Alongside the introduction of new tools for the model to use, which features of reality does the NVF framework not incorporate? How can we think about seemingly abrupt changes in model capability that result from tool use?

Exploring monitoring methods:

We made some high-level recommendations for monitoring methods to prevent large-scale cyber attacks from advanced AI systems. These would benefit from further exploration:How could we restrict model access to long-term memory, that seems critical in the execution of the attack workload in the reconnaissance and resource acquisition stages of a large-scale cyberattack

See section 3.2.2 for questions that may be useful to pursue.

How might we monitor a model’s process of acquiring large scale computer infrastructure to facilitate an APT-like attack?

The most direct method of building up this infrastructure appears to be mass phishing campaigns. How might applications of narrow systems be used to catch this?

Will there be a noticeable uptick in network traffic when a model begins accessing its extensive attack infrastructure? How useful is this as a signal to network administrators that the model is beginning to engage in suspicious activity?

- ^

AI Risks that Could Lead to Catastrophe. In: CAIS. https://www.safe.ai/ai-risk#rogue-ais Accessed 19 Aug 2023

- ^

Bostrom N (2014) Superintelligence: Paths, Dangers, Strategies. Oxford University Press, USA

- ^

Contributors to Wikimedia projects (2023) Existential risk from artificial general intelligence. In: Wikipedia. https://en.wikipedia.org/wiki/Existential_risk_from_artificial_general_intelligence Accessed 19 Aug 2023

- ^

APT1: Exposing One of China’s Cyber Espionage Units | Mandiant | FireEye (page 63) https://www.mandiant.com/sites/default/files/2021-09/mandiant-apt1-report.pdf . Accessed 19 Aug 2023

- ^

Contributors to Wikimedia projects (2023) 2020 United States federal government data breach. In: Wikipedia. https://en.wikipedia.org/wiki/2020_United_States_federal_government_data_breach Accessed 19 Aug 2023

- ^

Shevlane T, Farquhar S, Garfinkel B, Phuong M, Whittlestone J, Leung J, Kokotajlo D, Marchal N, Anderljung M, Kolt N, Ho L, Siddarth D, Avin S, Hawkins W, Kim B, Gabriel I, Bolina V, Clark J, Bengio Y, Christiano P, Dafoe A (2023) Model evaluation for extreme risks. In: arXiv.org. https://arxiv.org/abs/2305.15324

- ^

Here, human-led includes both teams of humans and teams of humans assisted by AI systems.

- ^

(2021) Advanced Persistent Threat (APT) Groups & Threat Actors. In: Mandiant. https://www.mandiant.com/resources/insights/apt-groups . Accessed 19 Aug 2023

- ^

APT1: Exposing One of China’s Cyber Espionage Units | Mandiant | FireEye https://www.mandiant.com/sites/default/files/2021-09/mandiant-apt1-report.pdf Accessed 19 Aug 2023

- ^

Oladimeji S (2023) SolarWinds hack explained: Everything you need to know. TechTarget https://www.techtarget.com/whatis/feature/SolarWinds-hack-explained-Everything-you-need-to-know Accessed 21 Sep 2023

- ^

Jibilian I, Canales K (2021) The US is readying sanctions against Russia over the SolarWinds cyber attack. Here’s a simple explanation of how the massive hack happened and why it’s such a big deal. Insider https://www.yahoo.com/now/heres-simple-explanation-massive-solarwinds-173817540.html Accessed 21 Sep 2023

- ^

Temple-Raston D (2021) A “Worst Nightmare” Cyberattack: The Untold Story Of The SolarWinds Hack. NPR https://www.npr.org/2021/04/16/985439655/a-worst-nightmare-cyberattack-the-untold-story-of-the-solarwinds-hack Accessed 21 Sep 2023

- ^

Paul K (2021) SolarWinds hack was work of “at least 1,000 engineers”, tech executives tell Senate. The Guardian https://www.theguardian.com/technology/2021/feb/23/solarwinds-hack-senate-hearing-microsoft Accessed Sep 21 2023

- ^

Paul K (2021) SolarWinds hack was work of “at least 1,000 engineers”, tech executives tell Senate. The Guardian https://www.theguardian.com/technology/2021/feb/23/solarwinds-hack-senate-hearing-microsoft Accessed Sep 21 2023

- ^

APT1: Exposing One of China’s Cyber Espionage Units | Mandiant | FireEye (page 33) https://www.mandiant.com/sites/default/files/2021-09/mandiant-apt1-report.pdf Accessed 19 Aug 2023

- ^

APT1: Exposing One of China’s Cyber Espionage Units | Mandiant | FireEye (page 43) https://www.mandiant.com/sites/default/files/2021-09/mandiant-apt1-report.pdf Accessed 19 Aug 2023

- ^

We don’t justify this assumption in further depth because we are seeking an order of magnitude estimate only.

- ^

The distinction between skilled human hours (the work of trained hackers) and regular human hours is discussed in section 2.2.2.

- ^

See Wikipedia definition of ‘AI-complete’ https://en.wikipedia.org/wiki/Artificial_general_intelligence—An AI complete problem is essentially one involving human level intelligence (or greater levels) to solve.

- ^

To expand, I expect there to be significantly greater precautionary measures taken by the users of systems that have been fine-tuned for cyber capabilities. By assuming that cyber capabilities of the system are emergent, I’m looking to consider a worst case scenario. Nevertheless, it would be good to see work that relaxes this assumption.

- ^

Or a team of administrators, that may also use ‘narrow’ AI systems for assistance

- ^

We’re assuming here that taking an action in service of a misaligned goal does not contribute to the task that the model was intended to execute

- ^

Steinhardt J (2023) Section 2, What will GPT-2030 look like? Bounded Regret https://bounded-regret.ghost.io/what-will-gpt-2030-look-like/ Accessed Sep 21 2023. See post

- ^

To clarify, aligned action here means an action that is in service of the goal that the operator intends

- ^

T = W/NVF = 10,000 / 10 * 5 * 0.001 = 200,000hrs = 8,333 days = 23 yrs

- ^

Barnes B (2023) ARC Evals new report: Evaluating Language-Model Agents on Realistic Autonomous Tasks. https://www.lesswrong.com/posts/EPLk8QxETC5FEhoxK/arc-evals-new-report-evaluating-language-model-agents-on . Accessed 19 Aug 2023

- ^

Recall that we’re not considering ‘strongly superintelligent’ systems, which were defined in section 2.2.

- ^

It’s plausible that the internal deployment will be the first use of dangerous systems at the scale described—i.e, AI labs deploying AIs at scale to assist in research. In this example we consider a business application instead.

- ^

Shevlane T (2022) Structured access: an emerging paradigm for safe AI deployment https://arxiv.org/abs/2201.05159 Accessed 21 Sep 2023

- ^

This may come via finetuning of the system, few-shot learning, or access to a new tool, depending on the complexity of the task being learnt.

- ^

If the model has situational awareness, it may have been feigning alignment in earlier stages, waiting for the deployers to move it into phase 3 where it has far greater autonomy and access to tools.

- ^

CAR Manufacturing System for Automation of Logistics).

- ^

Leike J (2023) Self-exfiltration is a key dangerous capability. Musings on the Alignment Problem https://aligned.substack.com/p/self-exfiltration Accessed 21 Sep 2023

- ^

- ^

- ^

- ^

To state explicity, this means that if an advanced AI system is engaging in active reconnaissance, it could be communicating with the target network weeks/months before it executes the attack. Thus, networks that the system regularly interactions with will be particularly susceptible.

- ^

Kushner D (2013) The Real Story of Stuxnet. IEEE Spectrum https://spectrum.ieee.org/the-real-story-of-stuxnet Accessed 21 Sep 2023

- ^

The process of developing malware could arguably be placed into the resource acquisition stage, but in reality reconnaissance and resource acquisition happen in parallel, with the results of one stage feeding into the other.

- ^

(2023) Jan Leike on OpenAI’s massive push to make superintelligence safe in 4 years or less. In: 80,000 Hours. https://80000hours.org/podcast/episodes/jan-leike-superalignment/ Quote at 24:20. Accessed 20 Sep 2023

- ^

Weng L (2023) LLM Powered Autonomous Agents. In: Lil’Log. https://lilianweng.github.io/posts/2023-06-23-agent/ Accessed 19 Aug 2023

- ^

Interesting post! In general, I think the field of computer security has lots of good examples of adversarial setups in which the party that can throw the most intelligence at a problem wins.

Probably not central to your main points, but on this:

I think there’s at least one thing you’re overlooking: there is a lot of variance in human labor, and hiring well to end up on the right side of that variance is really hard. 10x engineers are real, and so are 0x and −5x engineers and −50x managers, and if you’re not careful when building your team, you’ll end up paying for 10,000 “skilled” labor hours which don’t actually accomplish much.

An AI comprised of a bunch of subagents might have vaguely similar problems if you squint, but my guess is that the ability to hire and fire relatively instantaneously, clone your most productive workers, etc. makes a pretty big difference. At the very least, the variance is probably much lower.

Another reason that I suspect 10,000 labor hours is on the high end for humans: practical offensive cybersecurity isn’t exactly the most prestigious career track. My guess is that the most cognitively-demanding offensive cybersecurity work is currently done in academia and goes into producing research papers and proofs-of-concept. Among humans, the money, prestige, and lifestyle offered by a career with a government agency or a criminal enterprise just can’t compete with the other options available in academia and industry to the best and brightest minds.

Yep, the variance of human worker teams should definitely be stressed. It’s plausible that a super team of hackers might have attack workloads on the scale of 100s to 1000s of hours [1], whereas for lower quality teams, this may be more like 100,000s of hours.

Thinking about it, I can probably see significant variance amongst AI systems due to various degrees of finetuning on cyber capabilities [2](though as you said, not as much variance as human teams). E.g: A capable foundational model may map to something like a 60th percentile hacker and so have attack workloads on the order of 10,000s of hours (like in this piece). A finetuned model might map to a 95th percentile hacker and so a team of these may have workloads on the scale of 1000s of hours.

Though 100s of hours seems more on the implausible side—I’m guessing this would require a very large team (100s) of very skilled hackers.

And other relevant skills, like management