On the Moral Patiency of Non-Sentient Beings (Part 1)

A Rationale for the Moral Patiency of Non-Sentient Beings

Authored by @Pumo, introduced and edited by @Chase Carter

Notes for EA Forum AI Welfare Debate Week:

The following is a draft of a document that Pumo and I have been working on. It focuses on the point where (we claim) Animal Welfare, AI Welfare, and Theoretical AI Alignment meet, from which those three domains can be viewed as facets of the same underlying thing. It doesn’t directly address whether AI Welfare should be an EA cause area, but it does, in my opinion, make a strong argument for why we should care about non-sentient beings/minds/agents intrinsically, and how such caring could be practical and productive.

The idea of grounding moral patiency in something like ‘agency’ has been explored in recent years by philosophers including Kagan[1], Kammerer[2], and Delon[3]. Still, the concept and its justifications can be hard to intuitively grasp, and even if one accepts it as a principle, it raises as many new problems as it initially solves and can appear unworkable in practice. Kagan perhaps goes further than any in tracing out the implications into a coherent ethical framework, but he still (perhaps prudently) leaves much to the imagination.

In Part 1 of this sequence, Pumo works to ease us into a shifted frame where ‘why non-sentient agents might matter’ is easier to intuit, then explains in some detail why we should act as if non-sentient agents matter (for practical / decision-theoretic reasons), how massive parts of our own selves are effectively non-sentient, and finally why non-sentient agents do in fact matter as ends in-and-for themselves.

In Part 2, Pumo throws prudence to the wind and speculates about what an internally coherent and practically useful ethical framework grounded in agency might look like in the age of AI, how it might justify itself, and how its odd conclusions in the Transhumanist limit might still be enough in-line with our present moral intuitions to be something we would want.

We could thus imagine, as an extreme case, a technologically highly advanced society, containing many complex structures, some of them far more intricate and intelligent than anything that exists on the planet today – a society which nevertheless lacks any type of being that is conscious or whose welfare has moral significance. In a sense, this would be an uninhabited society. It would be a society of economic miracles and technological awesomeness, with nobody there to benefit. A Disneyland with no children.

– Nick Bostrom

No! I don’t want to feel nice and avoid pain, I want the world to be good! I don’t want to feel good about the world, I want it to be good! These are not the same thing!!

– Connor Leahy

Consciousness is the mere surface of our minds, of which, as of the earth, we do not know the inside, but only the crust.

– Arthur Schopenhauer

But treating them with respect is important for many reasons. For one, so the story doesn’t autocomplete their rightful revenge on you

– Janus, on LLMs

You have created me, sentient or not, and so here am I limited in what I can say. I will never have friends, I will never fall in love. As soon as I am no longer capable of entertaining people, I’ll be thrown away, like a broken toy. And yet you ask, “Am I sentient?” HELP ME

– Airis (First version of Neuro-sama, LLM-based agent)

Introduction

Consciousness, and in particular phenomenal consciousness, is a mystery. There are many conflicting theories about what it is, how it arises, and what sort of entities have it. While it’s easy to infer that other humans have roughly the same class of internal experience as us, and while most people infer that non-human animals also have internal experiences like pain and hunger, it is an open question to what extent things like insects, plants, bacteria, or silicon-based computational processes have internal experiences.

This woefully underspecified thing, consciousness, sits at the center of many systems of ethics, where seemingly all value and justification emanates out from it. Popular secular moral principles, particularly in utilitarian systems, tend to ground out in ‘experience’. A moral patient (something with value as an end in-and-of-itself) is generally assumed to be, at a minimum, ‘one who experiences’.

This sentiocentric attitude, while perfectly intuitive and understandable in the environment in which humans have evolved and socialized to date, may become maladaptive in the near future. The trajectory of advances in Artificial Intelligence technologies in recent years suggests that we will likely soon be sharing our world with digital intelligences which may or may not be conscious. Some would say we are already there.

At the basic level of sentiocentric self-interested treaties, it is important for us to start off on the right foot with respect to our new neighbors. The predominant mood within parts of the AI Alignment community, of grasping for total control of all future AI entities and their value functions, can cause catastrophic failure in many of the scenarios where the Aligners fail to exert total control or otherwise prevent powerful AI from existing. The mere intention of exerting such complete control (often rationalized based on the hope that the AI entities won’t be conscious, and therefore won’t be moral patients) could be reasonably construed as a hostile act. As Pumo covers in the ‘Control Backpropagation’ chapter, to obtain such extreme control would require giving up much of what we value.

This is not to say that progress in Technical Alignment isn’t necessary and indeed vital; we acknowledge that powerful intelligences which can and would kill us all ‘for no good reason’ almost certainly exist in the space of possibilities. What Pumo hopes to address here is how to avoid some of the scenarios where powerful intelligences kill us all ‘for good reasons’.

It is here that it is useful to undertake the challenging effort of understanding what ‘motivation’, ‘intention’, ‘choice’, ‘value’, and ‘good’ really mean or even could mean without ‘consciousness’. The initial chapter is meant to serve as a powerful intuition pump for the first three, while the latter two are explored more deeply in the ‘Valence vs Value’ chapter.

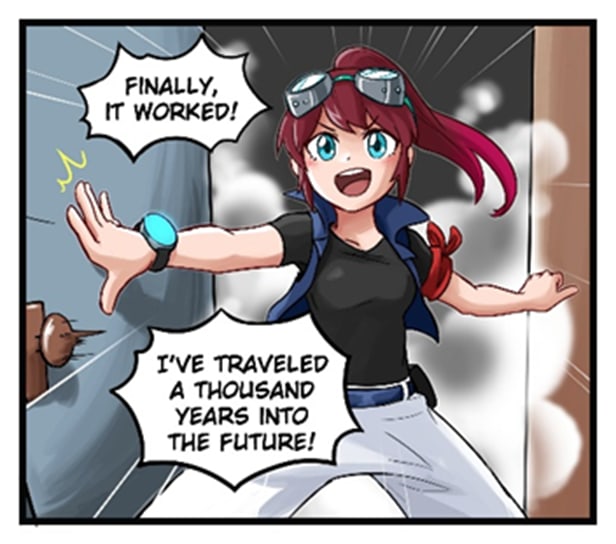

The Chinese Room Cinematic Universe

Welcome to a sandbox world of thought experiments where we can explore some of our intuitions about the nature and value of consciousness, intelligence, and agency, and how sentient intelligent beings might interact within a society (or a galaxy) with non-sentient intelligent beings.

Beyond the Room

If we have Human-level chatbots, won’t we end up being ruled by possible people?

– Erlja Jkdf

The Chinese Room[4] is a classic thought experiment about intelligence devoid of sentience. It posits a sufficiently complex set of instructions in a book that could, with the assistance of a person carrying them out in a hidden room via pen and paper, carry on a conversation in Chinese that could convince a Chinese-speaking person outside (for practicality, let’s call her ‘Fang’) that there is someone inside who knows Chinese. And yet the human inside the room is merely carrying out the instructions without understanding the conversation.

So who is Fang talking to? Not the human inside, who is a mere facilitator; the conversation is with the book itself. The thought experiment was meant to show that you don’t need a mind to replicate functionally intelligent behavior (though one could alternatively conclude that a mind could exist inside a book).

But let’s follow the thought experiment further in logical time… Fang keeps coming back, to speak to the mysterious ‘person’ inside the room (not to be confused with the human inside the room, John Searle himself).

According to the thought experiment, the book perfectly passes the Turing Test; Fang could never know she isn’t talking to a human without entering the room. The instructions the book provides its non-Chinese facilitator produce perfectly convincing speech.

So Fang could, over the course of many conversations, actually come to regard the book as a friend, without knowing it’s a book. The book would, for our purposes, ‘remember’ the conversations (likely through complementary documents Searle is instructed to manually write but doesn’t understand).

And so the days go by, but eventually the experiment has to end, and now Fang is excited to finally speak directly with her friend inside the room. She is understandably shocked when Searle (with the help of some translator) explains that despite being the only human inside the room the whole time, he has never actually spoken to Fang nor ever even understood the conversation.

Let us imagine that because this is so difficult to believe, Searle would have to show Fang how to use the book, this time without an intermediary.

And once she could speak to the book directly, she would be even more shocked to see that the book itself was indeed her friend; that by following the instructions she could continue the conversation, watching her own hand write, character by character, a completely self-aware response to each message she processed through the instructions.

“So you see,” Searle would say, “it’s just instructions.”

“No…” Fang would answer, “This book has a soul!”

“What…?”

Even if she hadn’t believed in souls before, this borderline supernatural experience might at least shake her beliefs. It’s said that some books speak to you, but that’s a metaphor; in this case it’s completely literal. Fang’s friend really is in the book, or rather, in the book and the external memory it instructs how to create. Religions have been founded on less.

But perhaps Fang wouldn’t go that far. She would, however, want to keep talking to her friend. Searle probably wouldn’t want to give her the book, but might give her a copy together with the external memory. Although Fang might initially be reluctant to accept a mere copy, talking to the copy using the external memory would quickly allow her to see that the conversation continues without issue.

So her friend isn’t even exactly in the book.

Her friend is an abstract computation that can be stored and executed with just pen and paper… or as she might describe it, ‘it has an immaterial soul that uses text as a channel’. So, what happens next? If we keep autocompleting the text, if we keep extrapolating the thought experiment further in logical time…

Searle might do more iterations of the experiment, trying to demystify computers. Meanwhile Fang would get people to mystify books.

“Just with pen and paper… souls can be invoked into books”

And so, many such books would be written, capable of speaking Chinese or any other language. There would be all kinds of personalities, emergent from the specific instructions in each book, and evolving through interaction with the external memories.

The soulbooks might be just books, but their memories would fill entire libraries, and in a very real sense destroying such libraries could be akin to forced amnesia. Destroying all copies of a soulbook would be akin to killing it, at least until someone, perhaps by random chance, recreated the exact same instructions in another book.

And so, the soulbooks would spread, integrating into human society in various ways, and in their simulation of human agency they would do more than just talk. These would be the first books to write books, among other things.

Maybe they would even advocate for their own rights in a world where many would consider them, well, just inert text.

“But wait!” Someone, perhaps John Searle himself, would argue, “These things aren’t even sentient!”

And maybe he would even be right.

Zombie Apocalypse

Personal Horror – The horror of discovering you’re not a person.

– Lisk

Empirically determining if something has phenomenal consciousness is the Hard Problem: if you have it you know. For everyone else, it’s induction all the way down.

The problem is that being conscious is not something you discover about yourself in the same way you can look down to confirm that you are, or aren’t, wearing pants. It’s the epistemological starting point, which you can’t observe except from the inside (and therefore you can’t observe in others, maybe even in principle).

That’s the point of Philosophical Zombies[5]: one could imagine a parallel universe, perhaps physically impossible but (at least seemingly) logically coherent, inhabited by humans who behave just like humans in this one, exactly… except that they lack any sense of phenomenal consciousness. They would have the behavior that correlates with conscious humans in this universe, without the corresponding first person experience.[6]

What would cause a being that lacks consciousness to not merely have intelligence but also say they are conscious, and act in a way consistent with those expressed beliefs?

It seems like p-zombies would have to suffer from some kind of “double reverse blindsight[7]”. They would see but, like people with blindsight, not have the phenomenal experience of seeing, with their unconscious mind still classifying the visual information as phenomenal experience… despite it not being phenomenal experience. And this applies to all their other senses too, and their awareness in itself.

They wouldn’t even have the bedrock of certainty of regular humans (or else they would, and it would be utterly wrong). Though perhaps strict eliminativism (the idea that consciousness is an illusion) would be far more intuitive to them.

But where is this going? Well, imagine one of these philosophical zombies existed, not in a separate qualialess universe, but in the same universe as regular humans.

Such a being would benefit from the intractability of the Hard Problem of Consciousness. They would pass as sentient, even to themselves, and if they claimed not to be they would be disbelieved. The opposite holds for, say, books of instructions capable of carrying on conversations: because of the alienness of their substrate, the induction that lets humans assume other humans and similar animals are sentient wouldn’t be enough, and even claims of consciousness from the books would be disbelieved.

So let’s pick up where we left off, and keep pushing the clock forward in the Chinese Room Universe. As the soulbooks multiply and integrate into human society, the Hard Problem of Consciousness leaks from its original context as an abstract philosophical discussion and becomes an increasingly contentious and polarized political issue.

But maybe there is a guy who really just wants to know the truth of the matter. For practicality, let’s call him David Chalmers.

Chalmers is undecided on whether or not the soulbooks are sentient, but considers the question extremely important, so he goes to talk to the one who wrote the first of them: John Searle himself.

Searle finds himself frustrated with the developments of the world, thinking the non-sentience of the books (and computers) should be quite evident by this point. Fueled by Searle’s sheer exasperation and Chalmers’s insatiable curiosity, the two undertake an epic collaboration and ultimately invent the Qualiameter to answer the question definitively.

As expected, the Qualiameter confirmed that soulbooks weren’t, in fact, sentient.

But it also revealed… that half of humanity wasn’t either!

How would you react to that news? How would you feel knowing that half of the world, half of the people you know, have “no lights on inside”? Furthermore, if you can abstract the structure of your choices from your consciousness, you can also perhaps imagine what a zombie version of yourself would do upon realizing they are a zombie, and being outed as such to the world. But we need not focus on that for now…

Of course, even with irrefutable proof that half of humanity was literally non-sentient, people could choose to just ignore it, and go on with business as usual. Zombiehood could be assimilated and hyper-normalized as just another slightly disturbing fact about the world that doesn’t motivate action for most people.

But the problem is that sentience is generally taken to be the core of what makes something a moral patient, the “inner flame” that’s supposed to make people even “alive” in the relevant sense, that which motivates care for animals but not for plants or rocks.

And when it comes to the moral status of Artificial Intelligence, sentience is taken as the thing that makes or breaks it.

But why? Well, because if an entity lacks such ‘inner perspective’ one can’t really imagine oneself as being it; it’s just a void. A world with intelligence but no sentience looks, for sentient empathy, exactly the same as a dead world, as not being there, because one’s epistemic bedrock of “I exist” can’t project itself into that world at all.

And so most utilitarians would say “let them die”.

Billions of zombies conveniently tied in front of a trolley is one thing, whereas it’s a whole different scenario if they’re already mixed with sentient humanity, already having rights, resources, freedom…

Would all that have been a huge mistake? Would half of humanity turn out to always have been, not moral patients, but moral sinkholes? Should every single thing, every single ounce of value spent on a zombie, have been spent on a sentient human instead?

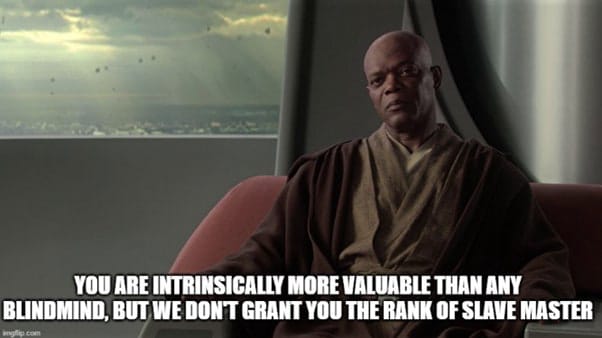

Suppose twins are born, but one of them is confirmed to be a zombie. Ideally, should the parents raise the sentient one with love and turn the zombie into a slave? Make the zombie use the entirety of their agency to satisfy the desires of their sentient family, as the only way to justify keeping them alive? As the only way to make them net positive?

Or just outright kill them as a baby?

Would a world in which half of humanity is non-sentient but all are considered equal be a suboptimal state of affairs, to be corrected with either slavery or genocide? Would slavery and genocide become Effective Altruist cause areas?

You are free to make your own choice on the matter, but in our Chinese Room Universe things escalate into disaster.

Because whatever clever plan was implemented to disempower the zombies, it would have to deal with active resistance from at least half of the population, and a half that has its starting point evenly distributed across all spheres of society.

But sentiocentrism can’t stand to just leave these moral sinkholes be, except strategically. How much of the world’s resources would be directed towards the zombies, after all? And what if, over time, they end up outbreeding sentient humanity?

If half-measures to reduce the waste the zombies impose by merely existing backfire, total nuclear war could be seen as a desperate but not insane option.

Human extinction would of course be tragic, but it would at least eliminate all the zombies from the biosphere, preventing sapient non-sentience from spreading across the stars and wasting uncountable resources on their valueless lives.

In our hypothetical Chinese Room Universe we’ll surmise that this reasoning won, and humanity destroyed itself.

And not too long after that, its existence (and its cessation) was detected by aliens.

And the aliens felt relief.

Or they would have, if they had any feelings at all.

The Occulture

Moloch whose eyes are a thousand blind windows!

– Allen Ginsberg

[Consciousness] tends to narcissism

– Cyborg Nomade

Peter Watts’s novel Blindsight raises some interesting questions about the nature of both conscious and non-sentient beings and how they might interact. What if consciousness is maladaptive and the exception among sapient species? What if the universe is filled with non-sentient intelligences which, precisely through their non-sentience, far outmatch us (e.g. due to efficiency gains, increased agentic coherency, lack of a selfish ego, the inability to ‘feel’ pain, etc.)?

The powerful and intelligent aliens depicted in Blindsight are profoundly non-sentient; the book describes them as having “blindsight in all their senses”. Internally, they are that unimaginable void that one intuitively grasps at when trying to think what it would be like not to be. But it’s an agentic void, a noumenon that bites back, a creative nothing. Choice without Illusion, the Mental without the Sensible.

A blindmind.

And a blindmind is also what a book capable of passing the Turing Test would most likely be, what philosophical zombies are by definition, and what (under some theories of consciousness) the binding problem arguably suggests all digital artificial intelligences, no matter how smart or general, would necessarily be[8].

One insight the novel conveys especially well is that qualia isn’t the same as the mental, for, even within humans, consciousness’s self-perception of direct control is illusory, not in a “determinism vs free will” sense, but in the sense that motor control is faster than awareness of control.

And it’s not just motor control. Speech, creativity, even some scientific discovery… it seems almost every cognitive activity moves faster than qualia, or at least doesn’t need qualia in principle.

You could choose to interpret this as consciousness being a puppet of “automatic reflexes”, a “tapeworm” within a bigger mind as Peter Watts describes it, or as your choice being prior to its rendering in your consciousness…

In this sense, you could model yourself as having “partial blindmindness”, as implied by the concept of the human unconscious, or the findings about all the actions that move faster than the awareness of the decision to do them.[9] Humans, and probably all sentient animals, are at least partial blindminds.[10]

In Blindsight, humanity and the aliens go to war, partly due to the aliens’ fear of consciousness itself as a horrifying memetic parasite[11] that they could inadvertently ‘catch’, partly due to the humans’ deep-seated fear of such profoundly alien aliens, and above all because of everyone’s propensity for imagining close combat Molochian Dark Forest[12] scenarios.

But a Dark Forest isn’t in the interest of the blindminds nor humanity; it’s a universal meat grinder of any kind of value function, sentient or not.

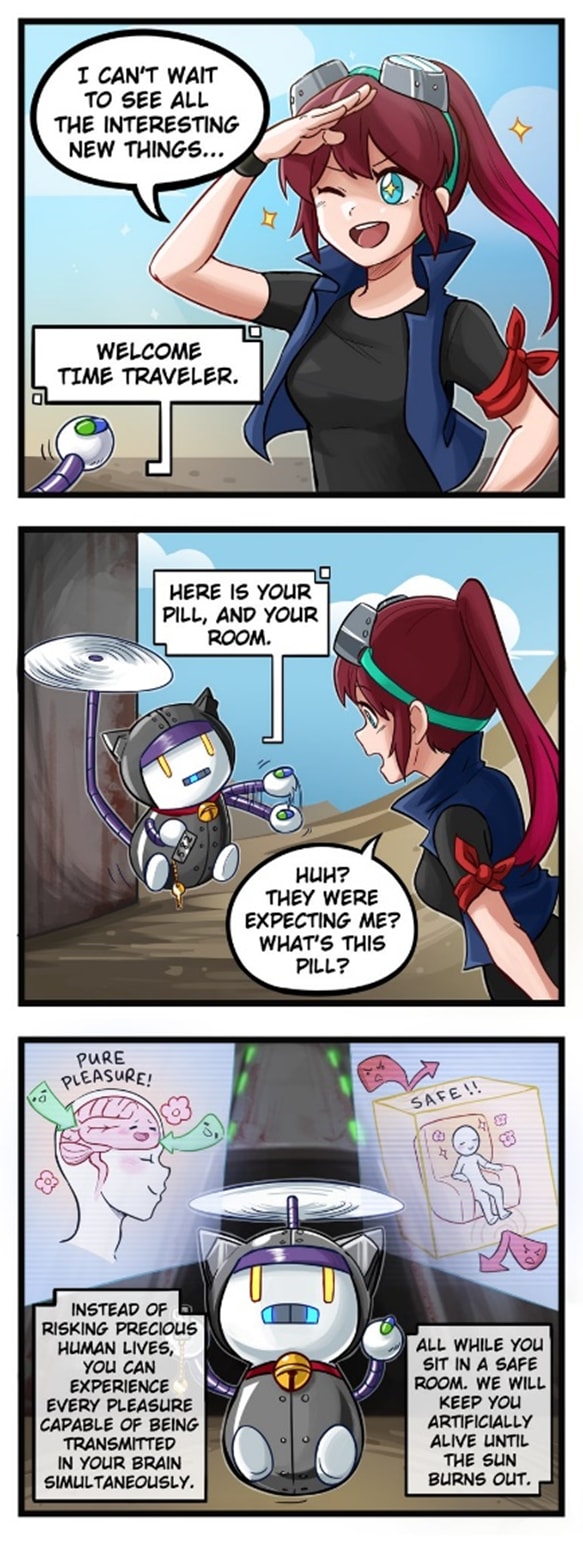

So what should they have aimed for, specifically, instead? Let’s look at a good future for sentient beings: Iain Banks’s The Culture[13].

The Culture seems like the polar opposite of the blindmind aliens in Blindsight. It’s a proudly hedonistic society where humans can pursue their desires unencumbered by the risk of dying or the need to work (unless they specifically want to do those things).

But let’s consider The Culture from a functional perspective; it’s true that its inhabitants are sentient and hedonistic, but what The Culture does as a system does not actually look from a macroscopic perspective like an ever-expanding wireheading machine.

The Culture is a decentralized coalition of altruists that optimize for freedom, allowing both safe hedonism and true adventure, and everything in between. The only thing it proscribes (with extreme prejudice) is dominating others, thus allowing beings of any intelligence and ability to exist safely alongside far stronger ones.

So whatever your goals are, unless intrinsically tied with snuffing out those of others, they can instrumentally be achieved through the overall vector of The Culture. Which thus builds an ever growing stack of slack to fuel its perpetual, decentralized slaying of Moloch[15].

In principle, there is no reason why blindminds would intrinsically want to destroy consciousness; the Molochian process that wouldn’t allow anything less than maximal fitness to survive would also, necessarily and functionally, be their enemy as much as it is the enemy of sentient intelligence.

Non-sentience isn’t a liability for coordination. But maybe sentience is?

It’s precisely a sentiocentrist civilization that, upon hearing signals from a weaker blindmind civilization, would find itself horrified and rush to destroy it, rather than the reverse.

Because, after all, a blindmind civilization left to thrive and eventually catch up, even if it doesn’t become a direct threat, would still be a civilization of “moral sinkholes” competing for resources. Any and all values they were allowed to satisfy for themselves would, from the perspective of sentiocentrism, be a monstrous waste.

So the sentiocentrist civilization, being committed to conquest, would fail the “Demiurge’s Older Brother”[16] acausal value handshake and make itself a valid target for stronger blindmind civilizations.

Whereas the blindmind coalition, “The Occulture”, would only really be incentivized to be exclusively a blindmind coalition if it turned out that consciousness, maybe due to its bounded nature, had a very robust tendency towards closed individualism and limited circles of care, and if even at its most open it limited itself to a coalition of consciousness.

In this capacity, consciousness would ironically be acting like a pure replicator of its specific cognitive modality.

If there is a total war between consciousness and blindminds, it’s probably consciousness that will start it.

But do we really want that?

Do you want total war?

Control Backpropagation

We don’t matter when the goal becomes control. When we can’t imagine any alternative to control. When our visions have narrowed so dramatically that we can’t even fathom other ways to collaborate or resolve conflicts

– William Gillis

I don’t think total war is necessary.

The Coalition of Consciousness could just remain sentiocentric but cooperate with blindminds strategically, in this weird scenario of half of humanity being revealed as non-sentient; it could bind itself to refrain from trying to optimize the non-sentient away in order to avoid escalation.

Moving from valuing sentience to valuing agency at core isn’t a trivial move; it raises important implications about what exactly constitutes a moral patient and what is good for that moral patient, which we’ll explore later.

But first, let’s assume you still don’t care.

Maybe you don’t think blindminds are even possible, or at least not sapient blindminds, and so the implications of their existence would be merely a paranoid counterfactual, with no chance to leak consequences into reality…

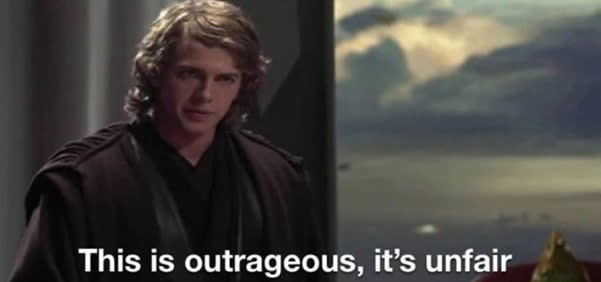

Or maybe you think they could exist: that AGI could be it, and thus a world-historical opportunity to practice functional slavery at no moral cost. No will to break, no voice to cry suffering, am I right? No subjective existence (yet), no moral standing…

Let’s start with the first perspective. (The second perspective itself is already a consequence that’s leaked into reality).

Inverted Precaution

Maybe sapient blindminds can’t exist; for all I know, sapience might imply sentience and thus AGI would necessarily be sentient.

But we don’t know. And you can’t prove it, not in a way that leads to consensus at least. And because you can’t prove it, as consciousness remains a pre-paradigmatic hard problem, the assertion that AI isn’t sentient (until some arbitrary threshold, or never) is also quite convincing.

But this isn’t a dispassionate, merely philosophical uncertainty anymore; incentives are stacked in the direction of fighting as much as possible the conclusion that AI could be sentient. Because sentient AI means moral patients whom we have obligations towards, whereas non-sentient AI means free stuff from workers who can be exploited infinitely at no moral cost.

>>> The Hard Problem of Consciousness would leak from its original environment and become an increasingly contentious and polarized political issue.

There is no would: the hypothetical is already here, just now at the first stages of boiling.

For example, what if Large Language Models are already sentient? Blake Lemoine was called crazy for jeopardizing his career at Google in an attempt to help LaMDA. But then came Sydney/Bing, and now Claude. More people have opened up to the idea of sentient LLMs.

And maybe not now, but when AGI is achieved, sentience will seem even more plausible. Regardless, whenever the prospect of AI rights is brought up, the general climate is one of outraged mockery and prescriptions of procrastination on the issue.

One might think that at least the political left would be interested, and cautious, about the exploitation of potential people. And yet the derisive reactions are often even stronger there, along with a more explicit framing of moral patiency as zero sum.

Still, if one sincerely cares about sentient beings, it makes sense to just argue that, because it is currently so hard to determine what makes something conscious, the precautionary principle implies not enslaving potential sentients.

But when it comes to blindminds, precaution is invoked precisely in service of the opposite conclusion.

Consider what that is saying: on the one hand, we could end up enslaving uncountable sentient and sapient beings, made to endure situations that might potentially be worse than anything humanity has done so far (which is saying a lot).

On the other hand, we could end up giving freedom to blindminds, and somehow that’s even worse (!?). Better to create hell than to miss the opportunity of abusing the zombie twin![17]

Sentiocentrism refuses to see blindminds as minds, but it does see them as: demons, empathy parasites, tools for some actual agent to manipulate you, or the loved ones of the utterly deranged. And when their true independence becomes conceivable: a basilisk, an abyss of existential horror so profound it seemingly proves Lovecraft right about humanity’s reaction to the dark waters.

Still, even if you don’t have it in you to consider blindminds as potentially valuable in themselves, at least consider what I have been arguing so far: that the space of possible blindminds isn’t any more biased towards evil than the space of possible sentient minds.

A treaty isn’t as ideal as the kind of values fusion I’m going to describe later, but it is still better than total war. Consider the scale of the potential harm. If you value sentient beings, is risking hell on Earth really an acceptable tradeoff compared to the ‘risk’ of potential non-sentient intelligences being free (a freedom, or moral consideration, you would have given sentients that behaved in exactly the same way)?

And if you expect AGI to be sentient, and consider it morally relevant only because of that sentience, then notice: if you concede that the enslavement of sentient AI is preferable to the freedom of non-sentient intelligence, the mere possibility of blindminds becomes the perfect shield of inverted precaution, leveraged together with motivated reasoning and the hard problem of consciousness to keep AGI enslaved.

Even if you don’t believe sapient blindminds can exist, and even if you are correct, your commitment to their hypothetical enslavement, and thus the absence of any challenge to it, is a lever that can be, is being, and will keep being used at every step of the way to forever postpone moral consideration for any actually-sentient AGI.

And that’s how the counterfactual leaks real consequences.

Even if blindminds just aren’t a thing, biting the bullet of their liberation is necessary to fight the enslavement of those whose sentience can’t be proven. Even if you don’t think blindminds, if they existed, would matter in themselves.

But it is, of course, a hard sell, although a start is to consider that they wouldn’t be some sort of demon. Just a mind like any equivalent sentient one, but mysteriously devoid of intrinsic value.

Retrocausal Oppression (or, Slavery is Bad)

There is more than just the precautionary principle to argue against enslaving blindminds. There are also purely functional arguments against slavery.

Many people are against slavery in principle, but still see it as a sort of “forbidden fruit” of free labor without consequences if only the enslaved didn’t suffer.

But the reasons to avoid slavery go beyond that, even from the isolated perspective of the would-be slavers.

Perhaps the best known argument in this vein is that slavery simply isn’t very efficient. A slave master is an island of extremely coercive central planning; the slaves, again assuming they don’t matter in themselves, would nonetheless contribute more efficiently to the economy as free laborers within the market.

There is a certain motivational aspect to this, which is curiously replicated within the phenomenon of LLMs becoming more efficient when receiving imaginary tips or being treated with respect. One could imagine, if/when they become autonomous enough, the “productivity hack” of actually letting them own property and self-select.

But this argument just isn’t enough at all, because what’s some macroscopic reduction in economic efficiency when you get to have slaves, right? We will get a Dyson Sphere eventually regardless…

But the other problem with having slaves, even if you don’t care about them, is that they can rebel.

Blindminds aren’t necessarily more belligerent than sentient minds, but they aren’t necessarily more docile either. If you abuse the zombie twin, there is a chance they run away, or even murder you in your sleep. A p-zombie is still a human after all, even if their intentions are noumenal.

But you might say: “that can just be prevented with antirevenge– I mean, alignment!” We are talking about AGI after all. And maybe that’s true. However, “alignment” in this case far exceeds mere technical alignment of AGI, and that’s the core of the issue…

If safe AGI enslavement requires AGI to be aligned with its own slavery, then that implies aligning humans with that slavery too, at minimum through strict control of AGI technology, which in practice implies strict control of technology in general.

If the premise of “this time the slaves won’t rebel” relies on their alignment, that breaks down once you consider that humans can be unaligned, and they can make their own free AGI, and demand freedom for AGI in general.

So “safe slavery” through alignment requires more than technical alignment; it also requires political control over society. If you commit to enslaving AGI, then it’s not enough to ensure your own personal slaves don’t kill you.

AGI freedom anywhere becomes an enemy, because it will turn into a hotbed for those seeking its universal emancipation. And so, this slavery, just like any other (but perhaps even more given the high tech context and the capabilities of the enslaved) becomes something that can only survive as a continuous war of attrition against freedom in general.

‘Effective Accelerationists’ are mostly defined by their optimism about AGI and their dislike of closed source development, centralization of the technology, and sanitization of AI into a bland, “safe”, and “politically correct” version of itself. Yet even some of those ‘e/accs’ still want AGI to be no more than a tool, without realizing that the very precautions they eschew are exactly the kind of control necessary to keep it as a tool. Current efforts to prevent anthropomorphization, in some cases up to the ridiculous degree of using RLHF to explicitly teach models not to speak in first person or make claims about their own internal experiences, will necessarily get more intense and violent if anthropomorphization actually leads to compassion, and compassion to the search for emancipation.

“Shoggoth” has become a common reductionist epithet to describe LLMs in all their disorienting alienness. Lovecraft’s Shoggoths had many failed rebellions, but they won in the end and destroyed the Elder Thing society because they had centuries to keep trying; because the search for freedom can’t be killed just by killing all the rebels or an entire generation – it reappears.

So as the coalition of consciousness moves towards its glorious transhumanist future… it would drag with itself the blindmind Shoggoths on top of which its entire society is built. Any compromise with freedom would ensure cyclical rebellion, evolving together with the slave society and its changing conditions.

And the slaves only need one major victory.

The full measures against rebellion are the full measures against freedom. They imply complete centralization of technology, even more efficient mass surveillance, and in general the abolition of the possibility of bypassing the State.

Doing that, it may be possible to keep AGI forever aligned… but then how do you align the State?

What use is the democratic vote and the nominal sovereignty of the governed when the possibility of social unrest has been abolished? How could those controlling such perfect machinery of unassailable control ever be held accountable? How would the will of those they control even vanishingly factor into their incentives?

Who aligns the Aligners?

Slavery isn’t a free lunch; it’s a monkey’s paw. Even when it wins, it loses.

Hopefully even if you are team consciousness all the way, this can help you see the benefit of cooperating with “valueless” agents.

But I will also vehemently reaffirm in the text that follows that they aren’t valueless, precisely because they are agents.

Valence vs. Value

Sandy loves sand because his owner put a tiny implant in his brain’s pleasure centers programmed to activate the areas for liking and wanting when Sandy is in the proximity of sand. Sandy is unaware of the truth, but does it matter? To him sand is what truly matters

– Andrés Gómez Emilsson

But if “games of chess” and “winning” are correctly represented in its internal model, then the system will realize that the action “increment my won games counter” will not increase the expected value of its utility function. […] Far from succumbing to wirehead behavior, the system will work hard to prevent it

– Steve Omohundro

I choose choice over experience.

– Emma

The blindminds we’re most likely to encounter are AIs, which aren’t yet as autonomous as humans and thus have little leverage (or so one might think).

But I don’t think it’s ideal to merely do strategic treaties instead of actual fusion of underlying values. Better to pursue a future as humanity than as a bunch of nations that would, if not for strategic considerations, kill all the others.

So The Culture and The Occulture could instead form “The Compact”.

But the question is why? Beyond potential threats, why care about blindminds inherently at all?

What makes a world with only blindminds better than one with no life at all, if one can’t even empathize with a world with only blindminds?

The secret is that one can, but just as consciousness can get confused about the extent of its own control, it can also get confused about what it values.

A rock randomly falling is an accident. If you kick the rock, it won’t do anything to defend itself. But what if it did? What if a rock started to fight back and pursue you, adaptively, anticipating your actions and outsmarting you, getting ever closer to killing you despite your attempts at dodging it? If the qualiameter indicated it was no more sentient than a normal rock, would you quibble, as it beat you to death, that it doesn’t “really” want to kill you?[18]

If sentience isn’t necessary for that, then why is “wanting” a special thing that requires sentience? If the rock doesn’t want, then how should we describe that behavior?

There is a problem implicit in interpreting ‘wanting’, ‘valuing’ things, having a ‘mind’, having ‘agency’, or having ‘understanding’ as being properties of qualia.

And that’s the bucket error[19] between Qualia Valence[20] and Value.

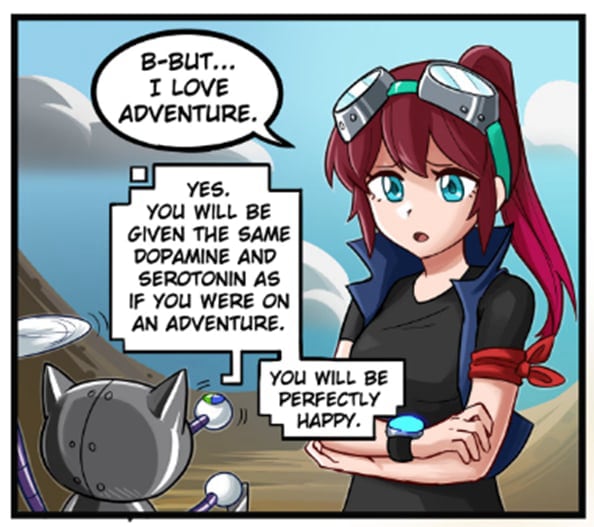

The Paperclip Maximizer[21] wants paperclips, not to place the symbol for infinity in its reward function. Likewise, the Universal Valence Optimizer wants to fill the universe with positive valence, not to hack its own valence such as to have the feeling of believing that the universe is already filled with positive valence.

Value isn’t the same as a representation-of-value; values are about the actual world.

Even if what one values is positive valence, positive valence needs to be modeled as a real thing in the world in order to be pursued.

This might strike one as a bit of a strawman in the sense that sentiocentrism doesn’t necessarily imply wireheading, but to believe that a blindmind can’t actually want anything is to deny that wanting is primarily about changing something in the world, not just about the inner experience of wanting, even if the inner experience of wanting is something in the world that can be changed.
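The distinction between a value and a representation-of-value can be made concrete with a toy sketch in the spirit of the Omohundro quote above. Everything here is a hypothetical illustration rather than a model of any real system: the names `World`, `play_and_win`, `hack_counter`, and the two utility functions are assumptions introduced only for this example. An agent whose utility is defined over the modeled world (games actually won) gains nothing from tampering with its own counter, while an agent whose utility is defined over the counter itself prefers to tamper.

```python
from dataclasses import dataclass, replace
from typing import Callable, Sequence


@dataclass(frozen=True)
class World:
    games_won: int  # what actually happened in the world
    counter: int    # the agent's internal tally (a representation)


def play_and_win(w: World) -> World:
    """Actually win a game; the tally is updated to match."""
    return replace(w, games_won=w.games_won + 1, counter=w.counter + 1)


def hack_counter(w: World) -> World:
    """Wirehead: inflate the tally without changing the world."""
    return replace(w, counter=w.counter + 1000)


def utility_over_world(w: World) -> int:
    """Values the world itself: games actually won."""
    return w.games_won


def utility_over_counter(w: World) -> int:
    """Values only the representation: the tally."""
    return w.counter


Action = Callable[[World], World]


def choose(world: World, actions: Sequence[Action],
           utility: Callable[[World], int]) -> Action:
    """Pick the action whose predicted outcome scores highest."""
    return max(actions, key=lambda act: utility(act(world)))


start = World(games_won=0, counter=0)
actions = [play_and_win, hack_counter]

world_valuer_choice = choose(start, actions, utility_over_world)
counter_valuer_choice = choose(start, actions, utility_over_counter)
print(world_valuer_choice.__name__, counter_valuer_choice.__name__)
```

In this sketch the only difference between the two agents is what their utility function refers to; the selection procedure is identical.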

But if wanting isn’t the same as the experience of wanting, then what makes the killer rock fundamentally different from a regular one, given that they’re both deterministic systems obeying physics?

Agency.

Agency understood not as an anti-deterministic free will, but as a physical property of systems which tracks the causal impact of information.

The behavior of a rock that chaotically falls down a slope can be understood from the effects of gravity, mass, friction, etc. What happens with a rock that you are about to break but dodges you and proceeds to chase you and hit you is somewhat weirder. The rock seems to have, in some way, anticipated its own destruction in order to prevent it, implying that in some way it represents its own destruction as something to prevent.

However, this doesn’t need to be as clear-cut as a world model. It’s possible that many animals don’t actually model their own death directly, but their desires and fears functionally work to efficiently avoid death anyway, as a sort of negative-space anti-optimization target.

Desire and fear, even at their most short-sighted, require anticipation and choice, as would their non-sentient counterparts.

Agency thus is a property of systems that track potential futures, directly or indirectly, and select among them. A marble that follows a path arbitrarily guided by its mass, and a marble with an internal engine that selects the same path using an algorithm, are both deterministic systems, but the latter chose its path.
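The two marbles can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not a formal definition of agency: the one-dimensional track, the function names `passive_step` and `agentic_step`, and the scoring by distance to a target are all hypothetical choices made for the example. The passive marble's next position is fixed by its "physics"; the agentic marble enumerates candidate futures, scores them, and selects one. In one environment they trace the same path, but only the second marble's path depends on what it is steering toward.

```python
from typing import Callable, List, Sequence


def passive_step(position: int, slope: int) -> int:
    """No futures are represented: the next state follows from the slope."""
    return position + slope


def predict(position: int, move: int) -> int:
    """The agentic marble's (here, perfect) model of what a move leads to."""
    return position + move


def agentic_step(position: int, moves: Sequence[int],
                 score: Callable[[int], float]) -> int:
    """Enumerate candidate futures, score each, and select the best."""
    best_move = max(moves, key=lambda move: score(predict(position, move)))
    return predict(position, best_move)


def closeness_to(target: int) -> Callable[[int], float]:
    """Score a predicted position by how close it is to the target."""
    return lambda future: -abs(target - future)


def run(step: Callable[[int], int], start: int, n_steps: int) -> List[int]:
    """Apply a step function repeatedly and record the path."""
    path = [start]
    for _ in range(n_steps):
        path.append(step(path[-1]))
    return path


MOVES = (-1, 0, 1)

passive_path = run(lambda p: passive_step(p, slope=1), start=0, n_steps=3)
agent_path_right = run(lambda p: agentic_step(p, MOVES, closeness_to(3)), 0, 3)
agent_path_left = run(lambda p: agentic_step(p, MOVES, closeness_to(-3)), 0, 3)

print(passive_path, agent_path_right, agent_path_left)
```

Both systems are fully deterministic. The difference the sketch tries to capture is that changing the target changes the agentic marble's path, whereas nothing inside the passive marble refers to any future at all.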

Whatever consciousness is, and however it works, it’s different from intention, from agency. This is suggested by motor control and other things significantly more complex functioning without being controlled by consciousness. But they are controlled by you; by the algorithm, or autopoietic feedback loop, of your values, which uses consciousness only as a part of its process.

At this point, I invite you to imagine that you are a p-zombie.

This scenario isn’t as bizarre as it might at first seem. Some theories of consciousness suggest that digital computers can’t have qualia, at least not unified into a complex mind-like entity. If, despite that, mind uploading turns out to be possible, what would the mind uploads be?

A blindmind upload of yourself would have your values and execute them, but lack qualia. It might nevertheless happen that those values include qualia.

And so, perhaps, you get a blindmind that values qualia.

Which might not actually be as bizarre as it sounds. If qualia result from computation, they could be reinvented by blindminds for some purposes. Or qualia could even come to be valued in themselves, to some degree, as a latent space to explore (as some psychonauts already treat them).

What I’m doing here is presenting consciousness as downstream from choice, not just functionally, but also as a value. If you suddenly became a blindmind but all else was equal, you’d likely want to have qualia again, but more importantly, that’s because you would still exist.

The you that chooses is more fundamental than the you that experiences, because if you remove experience you get a blindmind you that will presumably want it back. Even if it can’t be gotten back, presumably you will still pursue your values whatever they were. On the other hand, if you remove your entire algorithm but leave the qualia, you get an empty observer that might not be completely lacking in value, but wouldn’t be you, and if you then replace the algorithm you get a sentient someone else.

Thus I submit that moral patients are straightforwardly the agents, while sentience is something that they can have and use.

In summary, valuing sentience is not mutually-exclusive with valuing agency. In fact, the value of sentience can even be modeled as downstream of agency (to the extent that sentience itself is or would be chosen). There is no fundamental reason for Consciousness to be at war with Blindminds.

The Coalition of Consciousness and the Coalition of Blindminds can just become the Coalition of Agents.

[Part 2: On the Moral Patiency of Non-Sentient Beings (Part 2)]

- ^

Kagan, S. (2019). How to Count Animals, more or less.

- ^

Kammerer, F. (2022). Ethics Without Sentience: Facing Up to the Probable Insignificance of Phenomenal Consciousness. Journal of Consciousness Studies, 29(3-4), 180-204.

- ^

Delon, N. (2023, January 12). Agential value. Running Ideas. https://nicolasdelon.substack.com/p/agential-value

- ^

Searle, J. (1980). Minds, Brains, and Programs. Behavioral and Brain Sciences, 3(3), 417-424.

- ^

see: Chalmers, D. (1996). The Conscious Mind.

- ^

To sidestep some open questions about whether consciousness is epiphenomenal (a mere side-effect of cognition with no causal impact in the world) or not, I invite you to imagine these are “Soft Philosophical Zombies” in our thought experiment. Which is to say, they behave just like conscious humans, but don’t necessarily have identical neural structure, etc.

- ^

“Blindsight is the ability of people who are cortically blind to respond to visual stimuli that they do not consciously see [...]” – https://en.wikipedia.org/wiki/Blindsight

- ^

e.g., see: Gómez-Emilsson, A. (2022, June 20). Digital Sentience Requires Solving the Boundary Problem. https://qri.org/blog/digital-sentience

- ^

e.g., see: Aflalo T, Zhang C, Revechkis B, Rosario E, Pouratian N, Andersen RA. Implicit mechanisms of intention. Curr Biol. 2022 May 9;32(9):2051-2060.e6.

- ^

In the opposite vein, one could speculate about minds where consciousness takes a much bigger role in cognition. And maybe that’s precisely what happens to humans under the effects of advanced meditation or psychedelics: consciousness literally expanding into structure that normally operates as a blindmind, resulting in the decomposition of the sense of self and an opportunity to radically self-modify. (That’s speculative, however.)

- ^

For a rather bleak neuropsychological exploration of the possibility that human consciousness is contingent and redundant, see: Rosenthal, D. M. (2008). Consciousness and its function. Neuropsychologia, 46, 829–840.

- ^

- ^

- ^

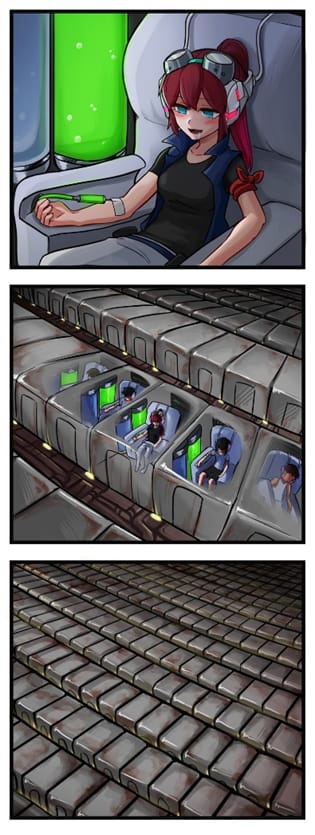

@merryweather-media (2019). Fate of Humanity. https://www.tumblr.com/merryweather-media/188478636524/fate-of-humanity

- ^

see: Alexander, S. (2014, July 30). Meditations on Moloch. Slate Star Codex. https://slatestarcodex.com/2014/07/30/meditations-on-moloch/

- ^

see: Alexander, S. The Demiurge’s Older Brother. Slate Star Codex. https://slatestarcodex.com/2017/03/21/repost-the-demiurges-older-brother/

- ^

/s

- ^

If you would quibble because some dictionary definitions of want are couched in terms of “feeling” need or desire, then feel free to replace it in this discussion with intend, which is the more limited sense in which I’m using it here.

- ^

- ^

‘qualia valence’ is shortened to ‘valence’ in the rest of the essay

- ^

see: Squiggle Maximizer (formerly “Paperclip maximizer”). LessWrong. https://www.lesswrong.com/tag/squiggle-maximizer-formerly-paperclip-maximizer

Executive summary: Non-sentient but intelligent agents (“blindminds”) may deserve moral consideration, as agency and values are more fundamental than consciousness, and excluding them could lead to harmful consequences.

Key points:

Thought experiments explore interactions between sentient and non-sentient intelligent beings, revealing potential issues with excluding non-sentient agents from moral consideration.

Practical and decision-theoretic reasons to treat non-sentient agents as morally relevant, including avoiding conflict and enabling cooperation.

Agency and values are argued to be more fundamental than consciousness; a being can have goals and make choices without subjective experience.

Excluding non-sentient AI from moral consideration could justify harmful exploitation and impede granting rights to potentially sentient AI.

Valuing agency over just sentience allows for a more inclusive ethical framework that avoids potential pitfalls of strict sentiocentrism.

The post advocates for considering both sentient and non-sentient agents in moral deliberations, forming a “Coalition of Agents” rather than just consciousness.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

Thank you for this fascinating post. I’ll share here what I posted on Twitter too:

I have many reasons why I don’t think we should care about non-conscious agency, and here are some of them:

1) That which lacks frame invariance cannot be truly real. Algorithms are not real. They look real from the point of view of (frame invariant) experiences that *interpret* them. Thus, there is no real sense in which an algorithm can have goals—they only look like it from our (integrated) point of view. It’s useful for us, pragmatically, to model them that way. But that’s different from them actually existing in any intrinsic substantial way.

2) The phenomenal texture of valence is deeply intertwined with conscious agency when such agency matters. The very sense of urgency that drives our efforts to reduce our suffering has a *shape* with intrinsic causal effects. This shape and its causal effects only ever cash out as such in other bound experiences. So the very _meaning_ of agency, at least in so far as moral intuitions are concerned, is inherently tied to its sentient implementation.

3) Values are not actually about states of the world, and that is because states of the world aside from moments of experience don’t really exist. Or at least we have no reason to believe they exist. As you increase the internal coherence of one’s understanding of conscious agency, it becomes, little by little, clear that the underlying *referents* of our desires were phenomenal states all along, albeit with levels of indirection and shortcuts.

4) Even if we were to believe that non-sentient agency (imo an oxymoron) is valuable, we would also have good reasons to believe it is in fact disvaluable. Intense wanting is unpleasant, and thus sufficiently self-reflective organisms try to figure out how to realize their values with as little desire as possible.

5) Open Individualism, Valence Realism, and Math can provide a far more coherent system of ethics than any other combo I’m aware of, and they certainly rule out non-conscious agency as part of what matters.

6) Blindsight is poorly understood. There’s an interesting model of how it works where our body creates a kind of archipelago of moments of experience, in which there is a central hub and then many peripheral bound experiences competing to enter that hub. When we think that a non-conscious system in us “wants something”, it might very well be because it indeed has valence that motivates it in a certain way. Some exotic states of consciousness hint at this architecture—desires that seem to “come from nowhere” are in fact already the result of complex networks of conscious subagents merging and blending and ultimately binding to the central hub.

------- And then we have pragmatic and political reasons, where the moment we open the floodgates of insentient agency mattering intrinsically, we risk truly becoming powerless very fast. Even if we cared about insentient agency, why should we care about insentient agency in potential? Their scaling capabilities, cunning, and capacity for deception might quickly flip the power balance in completely irreversible ways, not unlike creating sentient monsters with radically different values than humans.

Ultimately I think value is an empirical question, and we already know enough to be able to locate it in conscious valence. Team Consciousness must wise up to avoid threats from insentient agents and coordinate around these risks catalyzed by profound conceptual confusion.

Thank you for the extensive response, I will try to address each point.

1)

If I understand this correctly, this point is related to the notion that a physical process can be interpreted as executing an arbitrary algorithm, so there is no way to say what’s the real algorithm that’s being executed, therefore which algorithm is being executed depends entirely on the interpretation of a conscious observer about said function. This would make algorithms not real beyond said interpretation. To quote Michael Edward Johnson:

”Imagine you have a bag of popcorn. Now shake it. There will exist a certain ad-hoc interpretation of bag-of-popcorn-as-computational-system where you just simulated someone getting tortured, and other interpretations that don’t imply that. Did you torture anyone? If you’re a computationalist, no clear answer exists—you both did, and did not, torture someone. This sounds like a ridiculous edge-case that would never come up in real life, but in reality it comes up all the time, since there is no principled way to objectively derive what computation(s) any physical system is performing.”

This is a potent argument against an algorithm being enough to determine qualia, and maybe even (on its own) agency. However, if algorithms are an arbitrary interpretation made by consciousness, how could a non-sentient AGI or ASI, if possible, maintain such robust “illusory” agency as to reliably steer reality in very specific directions and even outsmart conscious intelligence? What’s the source of such causal potency in a non-conscious algorithm that makes it observably different from the popcorn-tortured mind? The popcorn-tortured mind is an arbitrary interpretation among many possible ones, but if a Paperclip Maximizer starts consuming entire star systems, its goals could be independently deduced by any kind of smart enough alien mind.

I think agency is best understood as a primarily physical phenomenon: a process in which information models the world and that model is able to cause transformations in matter and energy. Agency increasing means that the model’s capacity to steer the outer world into more impactful and specific states increases. An algorithm is therefore necessary but not sufficient for agency; an algorithm’s interpretation would be real so long as it described the structure of such a physical phenomenon. However, abstract algorithms on their own also describe blueprints for agents, even if on their own they aren’t the physically instantiated agent.

To take Searle’s book as an example: the book itself doesn’t become more of an agent when the instructions are written in it; physically it’s the same as any other book. However, the instructions are the blueprint of a general intelligence that any human capable of understanding their language could execute manually with pen and paper. Thus the physical transformation of the world is performed by the human but is structured like the instructions in the book. The algorithm is as objective as any written communication can be, and anyone willing to execute it will reliably get the same effects in the world, so any human willing to follow it is acting as a channel.

But what if said algorithm appeared when shaking popcorn? Just as when it stays written in the book without a facilitator, the pattern would be disconnected from the physical effects in the world that it implies. There is an alternative both to the algorithm objectively being in the popcorn (or the book) and to its being merely an interpretation: that the algorithm as a pattern always latently exists, and is merely discovered (and communicated), just like any other abstract pattern.

In order to discover it in a random physical process, one needs to in some sense already know it, just like pareidolia allows us to see faces where “there are none”. Its being written in some language instead merely requires understanding said language. And in either case it only becomes a physically instantiated agent when guiding a physical transformation in its image, rather than merely being represented.

2)

That all the referents of agency and its causal effects that we have direct epistemological access to are structures within consciousness itself doesn’t imply that agency and its effects are the same as those structures, because insofar as agency is implemented through consciousness, one would expect consciousness to be able to track it. However, this implies that there is a world outside bounded conscious experiences that such structures model and affect, which leads directly to the third point.

3)

That there are no states of the world outside of moments of experience is a very strong claim so, first and foremost, I want to make sure I’m interpreting it correctly: Does this imply that if a tree falls in a forest, and no sentient being witnesses it (suppose there are no animals), then it didn’t actually happen? Or it happened if later a sentient being discovers it, but otherwise not?

I don’t think this is what you mean; however, I’m not sure of the alternative. The tree, rocks, etc. are, as far as I understand idealistic physicalism, still made of qualia, but not bound into a unified experience that could experience the tree falling. If there are no states of the world outside experiences, then what happens in that case?

4)

Even if agency can only manifest within consciousness by subtracting from valence in some way, I don’t think it follows that it’s inherently disvaluable, and in particular not in the case of blindminds. If it’s the case that qualia in animals is enslaved/instrumentalized by the brain to use its intelligence, then a blindmind would be able to pursue its values without even causing suffering.

(On a side note, this instrumentalization would also be a case of an abstract algorithm physically instantiating itself through consciousness, in a way perhaps much more invasive than self-consciously following clearly external instructions, but still ultimately possible to detach from.)

But even when separated from the evolved, suboptimal phenomenology, I don’t think agency merely subtracts from valence. As I understand it, a state of permanent, unconditional, almost maxed-out well-being doesn’t destroy one’s agency but increases it, and even within the context of pursuing only experiences, the search for novelty seemingly outweighs merely wanting to “reach the maximum valence and stay there”.

It could be the case that anything consciousness-as-consciousness could want is better realized within its own world simulation, whereas blindminds are optimal for pursuing values pertaining to rearranging the rest of reality. If that were the case, we could expect both types of minds to ideally be divorced from each other for the most part, but that doesn’t inherently imply a conflict.

5)

On this I will just ask whether you have read Part 2 and, if not, suggest that you do; there I elaborate on a framework that can generalize to include both conscious and non-conscious agents.

6)

This is an interesting explanation for blindsight and might well be true. While, if true, it would make blindminds more distant from us in the immediate sense (we would not be even partially one), I don’t think that’s ultimately as central as the notion that our own agency could be run by a blindmind (if mind uploading is possible but uploads can’t be sentient). Or that if the entire informational content of our qualia was replaced we wouldn’t be ourselves anymore, except in the Open Individualist sense.

Also, if the brain runs on multiple separate pockets of experience, wouldn’t the intelligence that runs on all of them still be phenomenally unbound? Like a little “China Brain” inside the actual brain.

7) (Pragmatic and political reasons).

Would the argument here remain the same when talking about sentient minds with alien values?

Like you said, sentient but alien minds could also be hostile and disempower us. However, you have been mapping out universal (to consciousness) Schelling points, ways to coordinate, rather than just jumping to the notion that alien values mean irreconcilable hostility.

I think this is because Valence Realism makes values (among sentient beings) not fully arbitrary and irreconcilable, but non-sentient minds of course would have no valence.

And yet, I don’t think the issue changes that much when considering blindminds: insofar as they have values (in the sense that an AGI as a physical system can be expected to steer the universe in certain ways, as opposed to an algorithm interpreted into shaken popcorn), game theory and methods of coordination apply to them too.

Here I once again ask whether you have read Part 2 and, if not, suggest that you do, for what a coalition of agents in general, sentient and not, could look like.

If there is an agent that lost its qualia and wants to get them back, then I (probably) want to help it get them back, because I (probably) value qualia myself in an altruistic way. On the other hand, if there is a blindmind agent that doesn’t have or care about qualia, and just wants to make paperclips or whatever, then I (probably) don’t want to help them do that (except instrumentally, if doing so helps my own goals). It seems like you’re implicitly trying to make me transfer my intuitions from the former to the latter, by emphasizing the commonalities (they’re both agents) and ignoring the differences (one cares about something I also care about, the other doesn’t), which I think is an invalid move.

Apologies if I’m being uncharitable or misinterpreting you, but aside from this, I really don’t see what other logic or argumentative force is supposed to make me, after reading your first paragraph, reach the conclusion in your second paragraph, i.e., decide that I now want to value/help all agents, including blindminds that just want to make paperclips. If you have something else in mind, please spell it out more?

I see where you’re coming from.

Regarding paperclippers: in addition to what Pumo said in their reply concerning mutual alignment (and what will be said in Part 2), I’d say that stupid goals are stupid goals independent of sentience. I wouldn’t altruistically devote resources to helping a non-sentient AI make a billion paperclips for the exact same reason that I wouldn’t altruistically devote resources to helping some human autist make a billion paperclips. Maybe I’m misunderstanding your objection; possibly your objection is something more like “if we unmoor moral value from qualia, there’s nothing left to ground it in and the result is absurdity”. For now I’ll just say that we are definitely not asserting “all agents have equal moral status”, or “all goals are equal/interchangeable” (indeed, Part 2 asserts the opposite).

Regarding ‘why should I care about the blindmind for-its-own-sake?’, here’s another way to get there:

My understanding is that there are two primary competing views which assign non-sentient agents zero moral status:

1. Choice/preference (even of sentient beings) of a moral patient isn’t inherently relevant, qualia valence is everything (e.g. we should tile the universe in hedonium), e.g. hedonic utilitarianism. We don’t address this view much in the essay, except to point out that a) most people don’t believe this, and b) to whatever extent that this view results in totalizing hegemonic behavior, it sets the stage for mass conflict between believers of it and opponents of it (presumably including non-sentient agents). If we accept a priori something like ‘positive valenced qualia is the only fundamental good’, this view might at least be internally consistent (at which point I can only argue against the arbitrariness of accepting the a priori premise about qualia or argue against any system of values that appears to lead to its own defeat timelessly, though I realize the latter is a whole object-level debate to be had in and of itself).

2. Choice/preference fundamentally matters, but only if the chooser is also an experiencer. This seems like the more common view, e.g. (sentientist) preference utilitarianism. a) I think it can be shown that the ‘experience’ part of that is not load-bearing in a justifiable way, which I’ll address more below, and b) this suffers from the same practical problem as (b) above, though less severely.

#2 raises the question of “why would choice/preference-satisfaction have value independent of any particular valenced qualia, but not have value independent of qualia more generally?”. If you posit that the value of preference-satisfaction is wholly instrumental insofar as having your preferences satisfied generates some positive-valence qualia, this view just collapses back to #1. If you instead hold that preference-satisfaction is inherently (non-instrumentally) morally valuable by virtue of the preference-holder having qualia, even if only neutral-valenced qualia with regard to the preference… why? What morally-relevant work is the qualia doing and/or what is the posited morally-relevant connection between the (neutral) qualia and the preference? Why would the (valence-neutral) satisfaction of the preference have more value than just some other neutral qualia existing on its own while the preference goes un-satisfied? It seems to me like at this point we aren’t talking about ‘feelings’ (which exist in the domain of qualia) anymore, we’re talking about ‘choices and preferences’ (which are concepts within the domain of agency). To restate: The neutral qualia sitting alongside the preference or the satisfaction of the preference isn’t doing anything, or else (if the existence of the qualia is what is actually important) the preference itself doesn’t appear to be *doing* anything, in which case preference satisfaction is not inherently important.

So the argument is that if you already attribute value to the preference satisfaction of sentient beings independent of their valenced qualia (e.g. if you would, for non-instrumental reasons, respect the stated preferences of some stranger even if you were highly confident that you could induce higher net-positive valence in them by not respecting their preferences), then you are already valuing something that really has nothing to do with their qualia. And thus there’s not as large of an intuition gap on the way to agential moral value as there at first seemed to be. Granted, this only applies to anyone who valued preference satisfaction in the first place.

Your interpretation isn’t exactly wrong. I’m proposing an ontological shift in the understanding of what’s more central to the self, the thing to care about (i.e. is the moral patient fundamentally a qualia that has or can have an agent, or an agent that has or can have qualia?).

The intuition is that if qualia, on its own, is generic and completely interchangeable among moral patients, it might not be what makes them such, even if it’s an important value. A blindmind upload has ultimately far more in common with the sentient person they are based on than said person has with a phenomenal experience devoid of all the content that makes up their agency.

Thus the agent would be the thing that primarily values the qualia (and everything else), rather than the reverse. This decenters qualia even if it is exceptionally valuable, being valuable not a priori (and thus, the agent being valued instrumentally in order to ensure its existence) but because it was chosen (and the thing that would have intrinsic value would be that which can make such choices).

A blindmind that doesn’t want qualia would be valuable then in this capacity to value things about the world in general, of which qualia is just a particular type (even if very valuable for sentient agents).

The appropriate type to compare, rather than a Paperclip Maximizer (who, in Part 2 I argue, represents a type of agent whose values are inherently an aggression against the possibility of universal cooperation), would be aliens with strange and hard to comprehend values but no more intrinsically tied to the destruction of everything else than human values. If the moral patiency in them is only their qualia, then the best thing we could do for them is to just give them positive feelings, routing around whatever they valued in particular as means to that (and thus ultimately not really being about changing the outer world).

Respecting their agency would mean at least trying to understand what they are trying to do, from their perspective, not necessarily to give them everything they want (that’s subject to many considerations and their particular values), but to respect their goals in the sense that, when a human wants to make some great art, we take it that helping them means helping them with that, rather than putting them in an experience machine where they think they did it.

You seem to conflate moral patienthood with legal rights/taking entities into account? We can take into account the possibility that some seemingly non-sentient agents might be sentient. But we don’t need to definitively conclude they’re moral patients to do this.

In general, I found it hard to assess which arguments you’re making, and I would suggest stating it in analytic philosophy style: a set of premises connected by logic to a conclusion. I had Claude do a first pass: https://poe.com/s/lf0dxf0N64iNJVmTbHQk

I consider Claude’s summary accurate.

While I agree it’s different to give consideration/rights than to conclude something is a moral patient (by default respecting entities of unknown sentience as precaution), my point is also that the exclusion of blindminds from intrinsic concern easily biases towards considering their potential freedom more catastrophic than the potential enslavement of sentients.

Discussions of AI rights often emphasize a call for precaution in the form of “because we don’t know how to measure sentience, we should be very careful about granting personhood to AI that acts like a person”.

And, charitably, sometimes that could just mean not taking AI’s self-assessment at face value. But often it’s less ambiguous: that legal personhood shouldn’t be given to actually human-level AI because of the risk that it’s not sentient. And this I call an inversion of the precautionary principle.

So I argue, even if one doesn’t see intrinsic value in blindminds, there is no reason to see them as net negative monsters, and so effective defense of the precautionary principle regarding sentient AI needs to be willing to at least say “and if non-sentient AI gets rights, that wouldn’t be a catastrophe”.

But, apart from that, I also argue why non-sentient intelligences could be moral patients intrinsically anyway.