I live for a high disagree-to-upvote ratio

huw

I think it’s tractable, right? The rich had a far greater hold over American politics in the early 1900s, and after financial devastation coupled with the threat of communism, the U.S. got the New Deal and a 90% top marginal tax rate for 20 years following the war (well after the war effort had been fully paid off), during the most prosperous period in U.S. history. My sense of these changes is that widespread labour & political organisation threatened the government into a compromise to protect liberalism & capitalism from near-total overthrow. It can be done.

But equally, that story suggests that things will probably have to get much worse before there’s political will to harness. And there’s no guarantee that any money raised from taxation will be spent on the global poor!

My honest, loosely held opinion here is that EA/adjacent money could be used to build research & lobbying groups (rather than grassroots organising or direct political donations, which are too controversial and not EA’s strong suit) that would be ready for such a moment if/when it comes. They should be producing policy briefs, papers, and possibly public-facing outputs on the same level as the current YIMBY/abundance movement, which is far more developed than the redistributionists in these capabilities. When the backlash hits and taxes get raised, we should already have people well-placed to push for high redistribution on an international and non-speciesist level.

Surely it would be easier to just take the money from them, with taxes.

No, but seriously—the U.S. presently has an extremely clear example of the excesses of oligarchy and low taxation. The idea that billionaires need less tax in order to invest more in the economy is laughable when Elon has used his excess money to essentially just enrich himself. I think it would be pretty high leverage to put money, time, and connections into this movement (if you can legally do so); and if the enemy is properly demarcated as oligarchy, it should result in reducing wealth inequality once its proponents take power.

Excuse the naïve question, but could far-UVC also reduce the cost of running high-level labs? If so, this could have transformational effects on medical development and cultured meat, too.

Perhaps this is a bit tangential, but I wanted to ask since the 80k team seem to be reading this post. How have 80k historically approached the mental health effects of exposing younger (i.e. likely to be a bit more neurotic) people to ideas about existential risk? I’m thinking in the vein of the LessWrong post “Here’s the exit.” Do you/could you recommend alternate paths or career advice sites for people who might not be able to contribute to existential risk reduction due to, for lack of a better word, their temperament? (Perhaps a similar thing for factory farming, too?)

For example, I think I might make a decent enough AI Safety person and generally agree it could be a good idea, but I’ve explicitly chosen not to pursue it because (among other reasons) I’m pretty sure it would totally fry my nerves. The popularity of that LessWrong post suggests that I’m not alone, and also raises the interesting possibility that such people might end up actively detracting from the efforts of others, rather than just neutrally crashing out.

Here’s a much less intellectual podcast episode from TrueAnon on the Rationalists, the Zizians, and EA, coming more from the dirtbag-left side of things (for those interested in how others see EA).

Would also be interested to hear from the realists: Do they believe they have discovered any of these moral truths themselves, or just that these truths are out there somewhere?

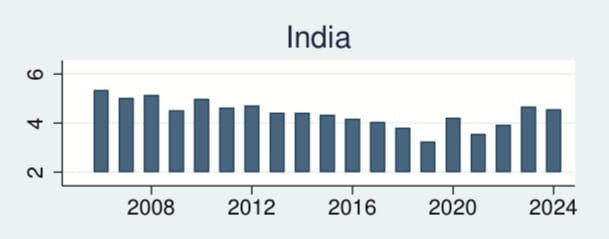

Here is their plot over time, from the Chapter 2 Appendix. I think these are the raw per-year scores, not the averages.

I find this really baffling. It’s probably not political; the Modi government took power in 2014 and only lost its absolute majority in mid-2024. The effects of COVID seem to be varied; India did relatively well in 2020 but got obliterated by the Delta variant in 2021. Equally, GDP per capita steadily increased over this time, barring a dip in 2020. Population has steadily increased, while population growth has steadily slowed.

India has long had a larger negative residual than other countries in the WHR’s happiness model; Indians are much less happy than the model would predict.

Without access to the raw data, it’s hard to say whether Gallup’s methodology has changed over this period. India is a huge and varied country, and we can’t easily check whether Gallup has kept a comparable sample over time.

AIM seems to be doing this quite well in the GHW/AW spaces, but lacks the literal openness of the EA community-as-idea (for better or worse).

The World Happiness Report 2025 is out!

Finland leads the world in happiness for the eighth year in a row, with Finns reporting an average score of 7.736 (out of 10) when asked to evaluate their lives.

Costa Rica (6th) and Mexico (10th) both enter the top 10 for the first time, while continued upward trends for countries such as Lithuania (16th), Slovenia (19th) and Czechia (20th) underline the convergence of happiness levels between Eastern, Central and Western Europe.

The United States (24th) falls to its lowest-ever position, with the United Kingdom (23rd) reporting its lowest average life evaluation since the 2017 report.

I bang this drum a lot, but it genuinely appears that once a country reaches the upper-middle income bracket, further GDP growth doesn’t matter much.

Also featured is a chapter from the Happier Lives Institute comparing the cost-effectiveness of improving wellbeing across multiple charities. They find that the top charities (including Pure Earth and Taimaka) might be 100x as cost-effective as others, especially charities operating in high-income countries.

Reposting this from Daniel Eth:

On the one hand, this doesn’t seem like much (shouldn’t AGIs be able to hit ‘escape velocity’ and operate autonomously forever?), but on the other, being able to do a month’s worth of work coherently would surely get us close to recursive self-improvement.

Some general thoughts about India specifically:

The EA community is slowly developing, but the biggest obstacle is the lack of a clear hub city. Government is in Delhi and tech is in Bengaluru, while many orgs (including my own) are in Pune or Mumbai.

The philanthropic sector isn’t tuned to EA ideas just yet, but we think finding local funding might become more feasible. Anecdotally, this seems to be easier in mental health, which the traditional philanthropic sector understands well. Further development of effective giving initiatives (EGIs) and the local community will help here.

Anecdotally, at EAGxIndia 2024 most younger attendees were interested in AI work, and far fewer in GHW/AW. There’s probably some bias here, since it was hosted in Bengaluru, which is heavier on tech. All of which is to say, I’m not convinced the talent pipeline for an India-based AIM-like org is quite there yet, although AIM could be nudged to incubate there more often.

On the other hand, legally operating in India is more complex than in almost any other country AIM incubates into, and having India-specific expertise and operational support, while expensive, would pay dividends.

Just wait until you see the PRs I wanna submit to the forum software 😛

FWIW, the point I was trying to make (however badly) was that the government clearly behaved in a way that had little regard for accuracy, and I don’t see incentives for them to behave any differently here.

The U.S. State Department will reportedly use AI tools to trawl social media accounts, in order to detect pro-Hamas sentiment to be used as grounds for visa revocations (per Axios).

Regardless of your views on the matter, and regardless of whether you trust the same government that at best had a 40% hit rate on ‘woke science’ to do this, they are clearly charging ahead on this stuff. The kind of thoughtful consideration of the risks that we’d like is not happening here. So why would we expect it to happen when it comes to existential risks, or a capability race with a foreign power?

Is the assumption here that they would lobby behind the scenes for carbon neutrality? Because this just sounds like capitulation without a strong line in the sand to me.

(Could you elaborate on ‘economics doesn’t have a very good track record on advanced AI so far’? I haven’t heard this before)

Nowhere in their RFP do they place restrictions on what kinds of energy capacity they want built. They are asking for a 4% increase in U.S. energy capacity, which would mean a serious amount of additional CO2 emissions if that capacity isn’t built renewably. But that’s just what they’re asking for now; if they’re serious about building & scaling AGI, they would be asking for much bigger increases, without a strong precedent of carbon neutrality to back them up. That seems really bad?

Also, to pre-empt one objection: the energy capacity has to come before you build an AI powerful enough to ‘solve climate change’. So if they fail to build such an AI, the downside is that they will have made the problem significantly worse. I think the environmental downsides of attempting to build AGI should be a meaningful part of one’s calculus.

Reading between the lines, the narrative that the UK government wants to push here is that due to Trump’s presumed defunding of NATO & the general U.S. nuclear umbrella, they have to increase defence spending and cut aid? So if you buy this narrative, this is a follow-on consequence of Trump’s election?

Thank you, MHFC! As with past grantees, I can attest that working with MHFC was extremely easy & pleasant. They take cost-effectiveness & future prospects seriously, but aren’t onerous in their requirements. If you’re in the mental health space, they’re an excellent partner to have!

Richest 1% wealth share, US (admittedly, this has been flat for the last 20 years, but you can see the trend since 1980):

Pre-tax income shares, US:

A 3–4% change for most income categories isn’t anything to sneeze at (even if this is pre-tax).

You can explore the WID data through OWID to see the effect for other countries; it’s less pronounced for many, but the broad trend in high-income neoliberalised countries is similar (as you’d expect with lower taxation).