I’m currently researching forecasting and epistemics as part of the Quantified Uncertainty Research Institute.

Ozzie Gooen

“Though I think AI is critically important, it is not something I get a real kick out of thinking and hearing about.”

-> Personally, I find a whole lot of non-technical AI content to be highly repetitive. It seems like a lot of the same questions are being discussed again and again with fairly little progress.

For 80k, I’d really encourage the team to focus on finding new subtopics that are interesting and important. I’m sure there are many great stories out there, but it’s very easy to get trapped talking about the routine updates or controversies of the week, with little big-picture understanding.

Thanks for writing this, and many kudos for your work with USAID. The situation now seems heartbreaking.

I don’t represent the major funders. I’d hope that the ones targeting global health would be monitoring situations like these and figuring out whether there might be useful, high-efficiency interventions. Sadly, there are many critical problems in the world, and many people are still dying from cheap-to-prevent malaria and similar diseases, so the bar is quite high for these specific pots of funding. But it should definitely be considered.

This feels highly targeted :)

Noted, though! I find it quite difficult to make good technical progress, manage the nonprofit basics, and do marketing/outreach with a tiny team (mainly just me right now). But I would like to improve.

We’ve recently updated the models for SquiggleAI, adding Sonnet 4.5, Haiku 4.5, and Grok Code Fast 1.

Initial tests show promising results, though probably not game-changing. Will run further tests on numeric differences.

People are welcome to use it (for free!). It’s a bit unreliable, so feel free to run it a few times on each input.

https://quantifieduncertainty.org/posts/updated-llm-models-for-squiggleai/

Quickly → I sympathize with these arguments, but I see the above podcast as practically a different topic. Could be a good separate blog post on its own.

This got me to investigate Ed Lee a bit. Seems like a sort of weird situation.

The About page of the website in question doesn’t mention him.

Seems like he has another very similar site, https://bitcoiniseatingtheworld.com/, for reference, also with no About section.

Here seems to be his Twitter page, where he advertises his new NFT book.

Good point about it coming from a source. But looking at that, I think that blog post had a similarly clickbait headline, though a more detailed one (“Anthropic faces potential business-ending liability in statutory damages after Judge Alsup certifies class action by Bartz”).

The analysis in question also looks very rough to me. Like a quick sketch / blog post.

I’d guess that if most readers here investigated and estimated the chance that this will actually force the company to close down (or similar), their estimates would be fairly low.

The article broadly seems informative, but I really don’t like the clickbait headline.

“Potentially Business-Ending”?

I did a quick look at the Manifold predictions. In this (small) market, there’s a 22% chance given to “Will Anthropic be ordered to pay $1B+ in damages in Bartz v. Anthropic?” (note that even $1B would be far from “business-ending”).

And broader forecasts of Anthropic’s overall success have barely changed.

I’d be curious to hear what you think about telling the AI: “Don’t just do this task, but optimize broadly on my behalf.” When does that start to cross into dangerous ground?

I think we’re seeing a lot of empirical data about that with AI+code. Now a lot of the human part is in oversight. It’s not too hard to say, “Recommend 5 things to improve. Order them. Do them”—with very little human input.

There’s work to make sure that the AI’s scope is bounded, and that anything potentially dangerous it could do gets run by the human first. This seems like a good workflow to me.

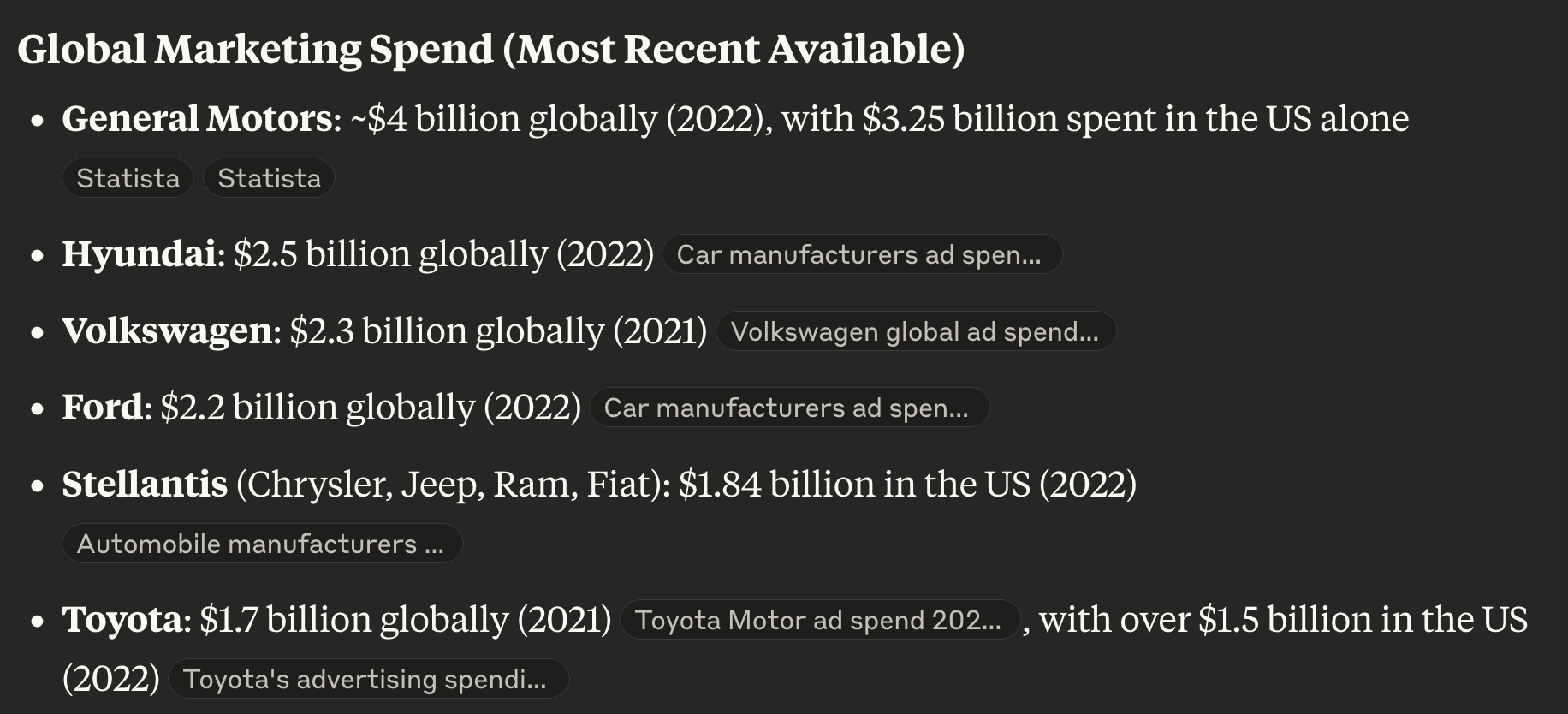

Frustratingly, the links that Claude found for these went to Statista, which is mostly paywalled, but the public bits mostly seem to verify these rough numbers.

https://claude.ai/share/941fbda6-2dc5-4d15-bb31-99fcfc4d0a8b

To give more clarity on what I mean by imagining larger spends: it seems to me that many of these efforts are sales-heavy rather than marketing-heavy.

I can understand that it would take a while to scale up sales. But scaling up marketing seems much better understood. Large companies routinely spend billions per year on marketing.

Here are some figures a quick Claude search gave for car marketing spending, for instance. I think this might be an interesting comparison because cars, like charitable donations, are large expenses that might take time for people to consider.

(I do realize that the economics might be pretty different around charity, so of course I’d recommend being very clever and thoughtful before scaling up quite to this level)

How do you imagine the benefits might continue past 2 years?

If any of these can be high-growth ventures, then early work is mainly useful in helping to set up later work. Early on, there’s often a lot of experimentation, searching for product-market fit, and learning which people are good at this.

Related, I’d expect that some of this work would take a long time to provide concrete returns. My model is that it can take several years to convince certain wealthy people to give up money. Many will make their donations late in life. (Though I realize that discounting factors might make the later stuff much less valuable than otherwise)

I’m happy to see this, thanks for organizing!

Quickly: One other strand of survey I’d be curious about is basically, “Which organizations/ideas do you feel comfortable critiquing?”

I have a hunch that many people are very scared of critiquing some of the powerful groups, but I’d be eager to see data.

https://forum.effectivealtruism.org/posts/hAHNtAYLidmSJK7bs/who-is-uncomfortable-critiquing-who-around-ea

Happy to see this. Overall I’m pretty excited about this area and would like to see further work here. My main concern is just that I’d like to see dramatically more capital used in this area. It’s easy for me to imagine spending $10M-$100M per year on expanding donations, especially because there’s just so much money out there.

I’m a bit curious about the ROI number.

“We estimate a weighted average ROI of ~4.3x across the portfolio, which means we expect our grantees to raise more than $6 million in adjusted funding over the next 1-2 years.”

1-2 years really isn’t that much. I’m sure a lot of the benefits of this grant will be felt for longer periods.

Also, of course:

1. I’d expect that IRR (internal rate of return) would also be useful, especially if benefits will come more than 2 years out.

2. I’d hope that it wouldn’t be too difficult to provide some 90% bounds or similar.
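To illustrate why IRR adds information beyond a single ROI multiple, here is a minimal sketch. It assumes yearly cash flows normalized to a grant of 1 unit at year 0, and two hypothetical payout schedules that both total 4.3x (matching the quoted ROI); the timings are invented purely for illustration, not taken from the report. The IRR is found by simple bisection, assuming money goes out first and returns come after.

```python
def npv(rate, cash_flows):
    """Net present value of yearly cash flows; cash_flows[0] is at year 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, lo=-0.99, hi=10.0, tol=1e-9):
    """Internal rate of return via bisection: the rate where NPV is zero.

    Assumes one sign change (an outlay first, then returns), so NPV
    falls as the rate rises and the root is bracketed by [lo, hi].
    """
    f_lo = npv(lo, cash_flows)
    for _ in range(200):
        mid = (lo + hi) / 2
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol:
            return mid
        if (f_lo < 0) == (f_mid < 0):
            # Root lies above mid.
            lo, f_lo = mid, f_mid
        else:
            # Root lies below mid.
            hi = mid
    return (lo + hi) / 2


# Hypothetical schedules, both returning 4.3x the grant in total.
fast = [-1.0, 2.15, 2.15]   # all returns within 2 years
slow = [-1.0] + [0.86] * 5  # same total, spread evenly over 5 years

print(f"fast IRR: {irr(fast):.0%}")
print(f"slow IRR: {irr(slow):.0%}")
```

Under these made-up schedules, the two-year payout gives an IRR of roughly 189%, versus roughly 82% when the same 4.3x total arrives over five years. That difference is invisible in the ROI multiple alone, which is why IRR seems worth reporting if much of the benefit lands after the 1-2 year window.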

Quickly: “and should we be worried or optimistic?”

This title seems to presume that we should either be worried or be optimistic. I consider this basically a false dichotomy. It’s possible to be worried about some parts of AI and optimistic about others, which is the state I find myself in.

I’m happy to see discussion on the bigger points here, just wanted to flag that issue to encourage better titles in the future.

Yea, the stickers are really meant to be useful for those already talking to the person. There are a lot of limitations here, so you need to make tradeoffs.

I occasionally get asked how to find jobs in “epistemics” or “epistemics+AI”.

I think my current take is that most people are much better off chasing jobs in AI Safety. There’s just a dramatically larger ecosystem there—both of funding and mentorship.

I suspect that “AI Safety” will eventually encompass a lot of “AI+epistemics”. There’s already work on truthfulness and sycophancy, for example, and there are many research directions there that I expect to be fruitful.

I’d naively expect that a lot of the ultimate advancements in the next 5 years around this topic will come from AI labs. They’re the main ones with the money and resources.

Other groups can still do valuable work. I’m still working under an independent nonprofit, for instance. But I expect a lot of the value of my work will come from ideating and experimenting with directions that would later get scaled up by larger labs.

I’m a big fan of names on most badges. But I’d be fine with some fraction of people not having names on their badges, in cases where that might be pragmatic. I also think that pseudonyms can make a lot of sense on occasion.

I imagine a lot of the downvotes here are on “names are generally a bad idea”, rather than “some people should be allowed to not use their names on badges.”

Sorry, fixed. Mistyped.

“Should EA develop any framework for responding to acute crises where traditional cost-effectiveness analysis isn’t possible? Or is our position that if we can’t measure it with near-certainty, we won’t fund it—even during famines?”

This is tricky. I think that most[1] of EA is outside of global health/welfare, and much of this is incredibly speculative. AI safety is pretty wild, and even animal welfare work can be more speculative.

GiveWell has historically represented much of the EA-aligned global welfare work. They’ve also seemed to cater to particularly risk-averse donors, from what I can tell.

So an intervention like this is in a tricky middle-ground, where it’s much less speculative than AI risk, but more speculative than much of the GiveWell spend. This is about the point where you can’t really think of “EA” as one unified thing with one utility function. The funding works much more as a bunch of different buckets with fairly different criteria.

Bigger-picture, EAs have a very small sliver of philanthropic spending, which itself is a small sliver of global spending. In my preferred world we wouldn’t need to be so incredibly ruthless with charity choices, because there would just be much more available.

[1] In terms of respected EA discussions/researchers.