I should have chosen a clearer phrase than “not through formal channels”. What I meant was that much of my forecasting work and experience came about through my participation on Metaculus, which is “outside” of academia; this participation did not manifest as forecasting publications or assistantships (as would be done through a Masters or PhD program), but rather as my track record (linked in CV to Metaculus profile) and my GitHub repositories. There was also a forecasting tournament I won, which I also linked on the CV.

QubitSwarm99

I agree with this.

“Number of publications” and “Impact per publication” are separate axes, and leaving the latter out produces a poorer landscape of X-risk research.

Glad to hear that the links were useful!

Keeping to Holden’s timeline sounds good, and I agree that AGI > HLMI in terms of recognizability. I hope the quiz goes well once it is officially released!

I am not the best person to ask this question (@so8res, @katja_grace, @holdenkarnofsky) but I will try to offer some points.

These links should be quite useful:

What do ML researchers think about AI in 2022? (37 years until a 50% chance of HLMI)

LW Wiki—AI Timelines (e.g., roughly 15% chance of transformative AI by 2036 and ~75% of AGI by 2032)

(somewhat less useful) LW Wiki—Transformative AI; LW Wiki—Technological forecasting

I don’t know of any recent AI expert surveys for transformative AI timelines specifically, but have pointed you to very recent ones of human-level machine intelligence and AGI.

For comprehensiveness, I think you should cover both transformative AI (AI that precipitates a change of equal or greater magnitude to the agricultural or industrial revolution) and HLMI. I have yet to read Holden’s AI Timelines post, but believe it’s likely a good resource to defer to, given Holden’s epistemic track record, so I think you should use this for the transformative AI timelines. For the HLMI timelines, I think you should use the 2022 expert survey (the first link). Additionally, if you trust that a techno-optimist-leaning crowd’s forecasting accuracy generalizes to AI timelines, then it might be worth checking out Metaculus as well.

the community here has an IQR forecast of (2030, 2041, 2075) for When will the first general AI system be devised, tested, and publicly announced?

the uniform median forecast is 54% for Will there be human/machine intelligence parity by 2040?

Lastly, I think it might be useful to ask under the existential risk section what percentage of ML/AI researchers think AI safety research should be prioritized (from the survey: “The median respondent believes society should prioritize AI safety research “more” than it is currently prioritized. Respondents chose from “much less,” “less,” “about the same,” “more,” and “much more.” 69% of respondents chose “more” or “much more,” up from 49% in 2016.”)

I completed the three quizzes and enjoyed them thoroughly.

Without any further improvements, I think these quizzes would still be quite effective. It would be nice to have a completion counter (e.g., an X/Total questions complete) at the bottom of the quizzes, but I don’t know if this is possible on quizmanity.

Got through about 25% of the essay and I can confirm it’s pretty good so far.

Strong upvote for introducing me to the authors and the site. Thank you for posting.

Every time I think about how I can do the most good, I am burdened by questions roughly like

How should value be measured?

How should well-being be measured?

How might my actions engender unintended, harmful outcomes?

How can my impact be measured?

I do not have good answers to these questions, but I would bet on some actions being positively impactful on the net.

For example

Promoting vegetarianism or veganism

Providing medicine and resources to those in poverty

Building robust political institutions in developing countries

Promoting policy to monitor developments in AI

W.r.t. the action that is most positively impactful, my intuition is that it would take the form of safeguarding humanity’s future or protecting life on Earth.

Some possible actions that might fit this bill:

Work that robustly illustrates the theoretical limits of the dangers from and capabilities of superintelligence.

Work that accurately encodes human values digitally

A global surveillance system for human and machine threats

A system that protects Earth from solar weather and NEOs

The problem here is that some of these actions might spawn harm, particularly the second and third (digitally encoding human values and a global surveillance system).

Thoughts and Notes: October 5th 0012022 (1)

As per my last shortform, over the next couple of weeks I will be moving my brief profiles for different catastrophes from my draft existential risk frameworks post into shortform posts to make the existential risk frameworks post lighter and simpler.

In my last shortform, I included the profile for the use of nuclear weapons and today I will include the profile for climate change.

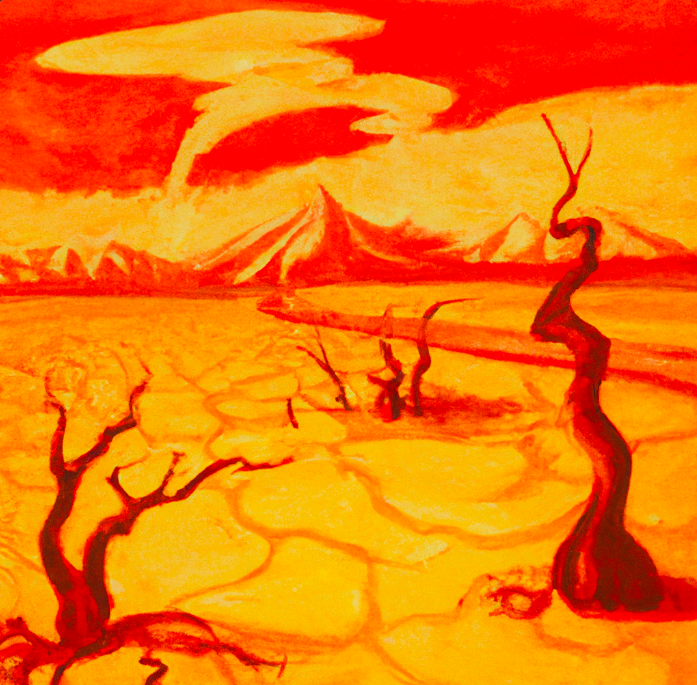

Climate change

Risk: (sections from the well-written Wikipedia page on Climate Change): “Contemporary climate change includes both global warming and its impacts on Earth’s weather patterns. There have been previous periods of climate change, but the current rise in global average temperature is more rapid and is primarily caused by humans.[2][3] Burning fossil fuels adds greenhouse gases to the atmosphere, most importantly carbon dioxide (CO2) and methane. Greenhouse gases warm the air by absorbing heat radiated by the Earth, trapping the heat near the surface. Greenhouse gas emissions amplify this effect, causing the Earth to take in more energy from sunlight than it can radiate back into space.” In general, the risk from climate change mostly comes from the destabilizing downstream effects it has on civilization, rather than from its direct effects, such as the ice caps melting or increased weather severity. One severe climate change catastrophe is the runaway greenhouse effect, but this seems unlikely to occur, as the present human activities and natural processes contributing to global warming don’t appear capable of engendering such a catastrophe anytime soon.

Links: EAF Wiki; LW Wiki; Climate Change (Wikipedia); IPCC Report 2022; NOAA Climate Q & A; OWID Carbon Dioxide and Emissions (2020); UN Reports on CC; CC and Longtermism (2022); NASA Evidence of CC (2022)

Forecasts: If climate catastrophe by 2100, human population falls by >= 95%? − 1%; If global catastrophe by 2100, due to climate change or geoengineering? − 10%; How much warming by 2100? - (1.8, 2.6, 3.5) degrees; When fossil fuels < 50% of global energy? - (2038, 2044, 2056)

Does anyone have a good list of books related to existential and global catastrophic risk? This doesn’t have to just include books on X-risk / GCRs in general, but can also include books on individual catastrophic events, such as nuclear war.

Here is my current resource landscape (these are books that I have personally looked at and can vouch for; the entries came to my mind as I wrote them—I do not have a list of GCR / X-risk books at the moment; I have not read some of them in full):

General:

Global Catastrophic Risks (2008)

X-Risk (2020)

Anticipation, Sustainability, Futures, and Human Extinction (2021)

The Precipice (2019)

AI Safety

Superintelligence (2014)

The Alignment Problem (2020)

Human Compatible (2019)

Nuclear risk

General / space

Dark Skies (2020)

Biosecurity

Thoughts and Notes: October 3rd 0012022 (1)

I have been working on a post which introduces a framework for existential risks that I have not seen covered on either LW or EAF, but I think I’ve impeded my progress by setting out to do more than I originally intended.

Rather than simply introduce the framework and compare it to Bostrom’s 2013 framework and the Wikipedia page on GCRs, I’ve tried to aggregate all global and existential catastrophes I could find under the “new” framework.

Creating an anthology of global and existential catastrophes is something I would like to complete at some point, but doing so in the post I’ve written would be overkill and would not be in line with the goal of “making the introduction of this little-known framework brief and simple”.

To make my life easier, I am going to remove the aggregated catastrophes section of my post. I will work incrementally (and somewhat informally) on accumulating links and notes for and thinking about each global and/or existential catastrophe through shortform posts.

Each shortform post in this vein will pertain to a single type of catastrophe. Of course, I may post other shortforms in between, but my goal generally is to cover the different global and existential risks one by one via shortform.

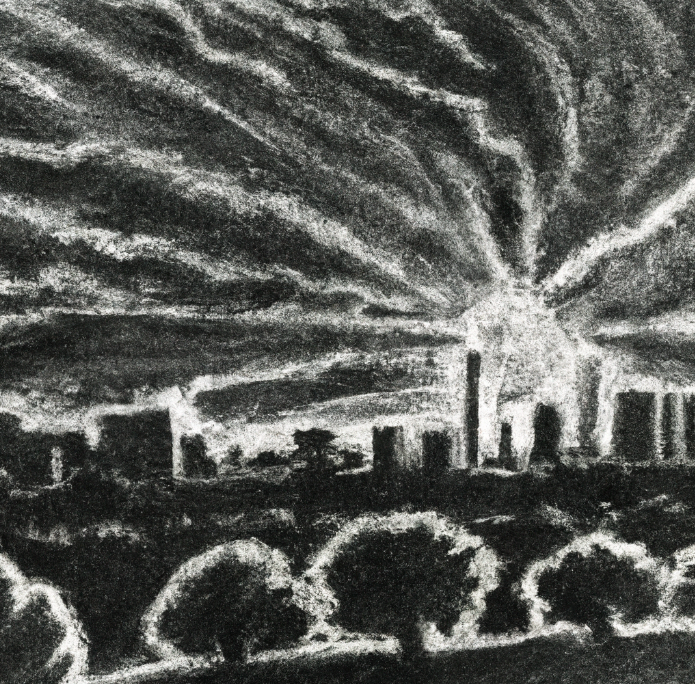

As was the case in my original post, I include DALLE-2 art with each catastrophe, and the loose structure for each catastrophe is Risk, Links, Forecasts.

Here is the first catastrophe in the list. Again note that I am not aiming for comprehensiveness here, but rather am trying to get the ball rolling for a more extensive review of the catastrophic or existential risks that I plan to complete at a later date. The forecasts were observed on October 3 0002022 and represent the community’s uniform median forecast.

Use of Nuclear Weapons (Anthropogenic, Current, Preventable)

Risk: The use of a nuclear weapon on a well-populated region could directly kill thousands to millions of people. The indirect effects, which include radiation poisoning from the fallout and heightened instability, contribute to additional deaths. In the event one nuke is deployed, the use of many more nuclear weapons might follow; the soot released into the stratosphere from these blasts could block out the sun (a nuclear winter), leading to the collapse of agricultural systems and subsequently to widespread famine. Both scenarios, the use of one nuclear weapon or the use of many, could precipitate a chain of events culminating in societal collapse.

Links: EAF Wiki; LW Wiki; Nuclear warfare (Wikipedia); Nuclear war is unlikely to cause human extinction (2021); Nuclear War Map; OWID Article (2022); On Assessing the Risk of Nuclear War (2021); Model for impact of nuclear war (2020); “Putin raises possibility of using nuclear weapons for the war” (YouTube, LW 2022)

Forecasts: Nuke used in Ukraine before 2023? − 5%; US nuke detonated in Russia before 2023? − 1%; Russian nuke detonated in Ukraine before 2023 − 1%; >=1 nuke used in war by 2050? − 28%; No non-test nukes used by 2035? − 76%; If global catastrophe by 2100, due to nuclear war? − 25%; Global thermonuclear war by 2070? − 10%

While I have much less experience in this domain, i.e. EA outreach, than you, I too fall on the side of the debate that the amount spent is justified, or at least not negative in value. Even if those who’ve learned about EA or who’ve contributed to it in some way don’t identify with EA completely, it seems that in the majority of instances some benefit was had collectively, be it from the skepticism, feedback, and input of these people on the EA movement / doing good or from the learning and resources the person tapped into and benefited from by being exposed to EA.

In my experience as someone belonging to the WEIRD demographic, males in heterosexual relationships provide less domestic or child support, on average, than their spouse, where by “less” I mean both lower frequency and lower quality in terms of attention and emotional support provided. Males seem entirely capable of learning such skills, but there does seem to be some discrepancy in the amount of support actually provided. I would be convinced otherwise were someone to show me a meta-analysis or two of parental care behaviors in heterosexual relationships that found, generally speaking, males and females provide analogous levels of care. In my demographic though, this does not seem to be the case.

Agreed. I also think putting these in a shortform, requesting individual feedback in DMs, or posting these in a slightly less formal place such as the EA Gather town or a Discord server might be better alternatives than having this on the forum in such a bare-bones state. Also, simply posting each article in full on the forum and receiving feedback in the comments could work as well. I just think [2 sentence context + links] doesn’t make for a great post.

Thank you very much for this offer—I have many questions, but I don’t want to eat away too much of your time, so feel free to answer as few or as many of my questions as you choose. One criterion might be only answering those questions that you believe I would have the longest or most difficult time answering myself. I believe that many of my questions could have fairly complex answers; as such, it might be more efficient to simply point me to the appropriate resources via links. While this may increase your work load, I think that if you crossposted this on LessWrong, its members would also find this post valuable.

Background

My partner and I are both in our early-mid 20s (we are only 2 months apart in our ages) and are interested in having children. More specifically, we currently believe that our first child, should we have any children together, will likely be born 6.5 to 9.5 years from now. We are thinking about having 2-4 children, but the final number depends on my partner’s experiences with childbirth and on our collective experience of raising the first, second, etc… child. Some members of my partner’s family experience depression and my partner has a mild connective tissue disorder. Members of my family deal with some depression as well. My partner and I are thinking about using embryo selection to address these things and to potentially optimize the IQ of our children, among other features that might have polygenic scores. We might also consider using gene-editing if it is available, but we definitely have to think more about this. The current health risks to both the host and embryo involved with IVF and egg extraction make my partner nervous, but she thinks she is more likely than not to follow through with the procedure. As for temperament, my partner and I are both relatively calm and inquisitive people and broadly share the same parenting values, though we haven’t, of course, fully scoped these values out. I suspect that I might be more conservative or rigid than her with my future parenting practices, but much of this comes from me having not yet formed beliefs about what works and what doesn’t with parenting and instead making precaution my default mode of operation. Now, onto the questions.

Optimal Timing

How much do my partner and I stand to lose in terms of our children’s health and psychological development if we were to have our first child at age ~28 versus at age ~33? We might have up to 4 children, so assuming each child is 2 years apart in age: “first child at 28” scenario ==> new child at ages 28, 30, 32, and 34; “first child at 33” scenario ==> new child at ages 33, 35, 37, and 39. While I am unfamiliar with the details of the research, my current beliefs are “risks of a child having health defects due to an older father only really begin to increase, on average, when fathers reach around the age of 40” and “risks of a child having health defects due to an older mother increase, on average, in the early 30s”. I think it is important to consider how old my partner and I will be when our youngest child is 10 in each scenario: 44 for the first scenario and 49 for the second scenario. I am unaware of how large the difference in physical ability / stamina is for the ages 20 vs. 30, 30 vs. 40, 40 vs. 50, etc… but I feel that 44 vs. 49 constitutes a large difference in stamina.
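For anyone who wants to check or vary the arithmetic in the two scenarios above, here is a minimal Python sketch. The function names (`birth_ages`, `parent_age_when_youngest_is`) are hypothetical, and it assumes exactly 4 children spaced 2 years apart and treats both parents as the same age (we are only 2 months apart).

```python
def birth_ages(first_birth_age, n_children=4, spacing_years=2):
    """Parent's age at each birth, assuming evenly spaced children (an assumption)."""
    return [first_birth_age + i * spacing_years for i in range(n_children)]


def parent_age_when_youngest_is(child_age, first_birth_age, n_children=4, spacing_years=2):
    """Parent's age when the last-born child reaches `child_age`."""
    last_birth_age = birth_ages(first_birth_age, n_children, spacing_years)[-1]
    return last_birth_age + child_age


# The two scenarios from the question above.
for label, start_age in {"first child at 28": 28, "first child at 33": 33}.items():
    ages = birth_ages(start_age)
    age_at_10 = parent_age_when_youngest_is(10, start_age)
    print(f"{label}: births at {ages}, parent is {age_at_10} when youngest is 10")
```

Changing `n_children` or `spacing_years` shows how sensitive the final age is to those choices; for example, 3 children instead of 4 lowers each figure by 2 years.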

Embryo Selection and IVF

Do you have any forecasts concerning embryo selection and IVF? Some example questions: How much do you think IVF will cost in 2027? in 2032? How do you think the risks associated with IVF and egg extraction will change in the next 6 years? In what ways do you think polygenic screening will improve in the next 6 years? What are your thoughts on the safety, morality, and practicality of embryo selection today, and how do you think this will change in the next 6 years?

Nature vs. Nurture

Can you provide a concise reflection of the current consensus of the genetics / biology community on this question? Your answer might contribute marginally to my parenting attitudes and behavior.

Naming

There are two primary questions I have on this. (1) In your experience, how much do you believe a person’s name affects their life outcomes? My current belief is for the overwhelming majority of people the effect is marginal. (2) What do you think are the effects of having your children call you by your first name instead of by something like “Mommy” or “Daddy”? My partner and I intend to have our children call us by our first names.

Holidays

Assuming that my partner and I raise our children in a sparsely populated suburb or rural region in the Eastern USA, do you think never celebrating holidays such as Christmas or birthdays would have a strong effect on the psychological development of our children? My partner and I intend to avoid celebrating any conventional holidays, except for Halloween, and to celebrate the Solstices and Equinoxes instead.

Twins

If we had the option to do this, do you think it would be a good idea to have 2 pairs of twins instead of 4 children, i.e. generally speaking, do people who have a twin sibling experience higher levels of well-being, and if so, would you recommend this option?

Birth Order

From what I’ve read, it appears that, at least among many current indigenous societies, the demographic that predominantly plays with and cares for children beyond the mother and father is adolescent females. With this in mind, might it make more sense to have the first child be a female? Additionally, I’ve come across the idea that the last child of families with more than 2 children typically has more estrogen than the other children, on average. What are some of the consequences of birth order by sex, assuming that my partner and I have 4 children and that each of these children is born 2 years apart? For example, would you suspect that the following group, in order of birth from 1st born to last born, (F, M, M, F) experiences higher well-being than (M, F, F, M), all other things controlled for, hypothetically?

Geographical location

My partner and I are considering forming some establishment in a region with forests, some terrain, a low population density, and low air pollution* that is at most 3 hours away from an urban area. Some regions in the Eastern US come to mind here. The air pollution part has an asterisk next to it since I highly value this consideration for where to raise children. Generally speaking, what might be some disadvantages to raising children in such an area? I suspect that a potential lack of immediate access to healthcare services might be one.

Education and technology

My partner and I plan to home-school our children until they are college age. We suspect that socialization might be a large issue if we follow through with this plan. I intend to instruct my children in many subjects and would very much like for them to develop strong critical thinking skills, a curiosity and wonder with the universe, and a general mental framework for how the world works. This is all standard though; do you have any recommendations for inculcating these things across the various developmental milestones? For example, it might be the case that I should primarily talk to an X year old in such a way because they have not developed Y capacity yet. Also, I am quite keen on limiting the access of my children when they are young (0-10, but this upper bound is something that I need to think about) to most of YouTube (except for educational channels), most of the Internet (except for educational websites or resources), and all of television. I intend to teach my children at least the basics of electronics and programming, so their engagement with the technology involved with these things would be openly encouraged. What might be some consequences of these actions for the mental development of my children? Of course, your answer is not the sole consideration for my decision.

Emotions and Discipline

My partner and I are both highly conflict avoidant. We are both quite calm most of the time and are not too affected by anger or frustration, though we do feel such things occasionally. We intend to not display frustration, anger, or intense exasperation in front of our children, but don’t know if this is a good idea. Are there some emotions or reactions that should not be shown to children as they may lead to a weakened ability to deal with / tolerate certain aspects of human interaction / the world? Also, my partner and I are both unaware of what the most developmentally beneficial forms of discipline are for children. For one heuristic, we are considering making an effort to enforce disciplinary action immediately in response to some undesirable action, and to always follow through with what we promise and warn about.

This is a long list of questions, but thank you for reading it if you’ve gotten to the end. It has actually been helpful in eliciting some of the ideas I’ve had about parenting that I’ve not explicitly written out.

Have a nice day!

Impact successful—so exciting!

Without thinking too deeply, I believe that this framing for AI risk, i.e. one in line with “AI developers are gambling with the fate of humanity for the sake of profit, and we need to stop them/ensure that their efforts don’t have catastrophic effects”, could serve as a conversational cushion for those who are unfamiliar with the general state of AI progress and with the existential risk poorly aligned AI poses.

Those unfamiliar with AI might disregard the extent of risk from AI if approached in conversation with remarks about how not only is there a non-trivial chance that humanity might be extinguished by AI, but many researchers believe this event is highly likely to occur, even in the next 25 years. I imagine such scenarios are, for them, generally unbelievable.

The cushioning could, however, lead to people trying to think about AI risk independently or to them searching for more evidence and commentary online, which might subsequently lead them to the conclusion that AI does in fact pose a significant existential risk to humanity.

When trying to introduce the idea of AI risk to someone who is unfamiliar with it, it’s probably a good idea to give an example of a current issue with AI, and then have them extrapolate. The example of poorly designed AI systems being used by corporations for click-through, as covered in the introduction of Human Compatible, seems good to use in your framing of AI safety as a public good. Most people are familiar with the ills of algorithms designed for social media, so it is not a great step to imagine researchers designing more powerful AI systems that are deleterious to humanity via a similar design issue but at a much more lethal level:

They aren’t particularly intelligent, but they are in a position to affect the entire world because they directly influence billions of people. Typically, such algorithms are designed to maximize click-through, that is, the probability that the user clicks on presented items. The solution is simply to present items that the user likes to click on, right? Wrong. The solution is to change the user’s preferences so that they become more predictable. A more predictable user can be fed items that they are likely to click on, thereby generating more revenue. People with more extreme political views tend to be more predictable in which items they click on.

I’ve been keeping tabs on this since mid-August when the following Metaculus question was created:

The community and I (97%, given NASA’s track record of success) seem in agreement that it is unlikely DART fails to make an impact. Here are some useful Wikipedia links that aided me with the prediction: (Asteroid impact avoidance, Asteroid impact prediction, Near-Earth object (NEO), Potentially hazardous object).

There are roughly 3 hours remaining until impact (https://dart.jhuapl.edu/); it seems unlikely that something goes awry, and I am firmly hoping for success.

While I’m unfamiliar with the state of research on asteroid redirection or trophy systems for NEOs, DART seems like a major step in the correct direction, one where humanity faces lower levels of risk from the collision of asteroids, comets, and other celestial objects with Earth.

While it is my belief that there is some wider context missing from certain aspects of this post (e.g., what sorts of AI progress are we talking about, perhaps strong AI or transformative AI?- this makes a difference), the analogy does a fair job at illustrating that the intention to use advanced AI to engender progress (beneficial outcomes for humanity) might have unintended and antithetical effects instead. This seems to actually encapsulate the core idea of AI safety / alignment, roughly that a system which is capable of engendering vast amounts of scientific and economic gains for humanity need not be (and is unlikely to be) aligned with human values and flourishing by default and thus is capable of taking actions that may cause great harm to humanity. The Future of Life’s page on the Benefits and Risk of AI comments on this:

The AI is programmed to do something beneficial, but it develops a destructive method for achieving its goal: This can happen whenever we fail to fully align the AI’s goals with ours, which is strikingly difficult.

Needless to comment on this further, but the worst outcomes possible from unaligned AI seem extremely likely to exceed unemployment in severity.

I agree with the overall premise of this post that, generally speaking, the quality of engagement on the forum, through posts or comments, has decreased, though I am not convinced (yet) that some of the points made by the author as evidence for this are completely accurate. What follows are some comments on what a reduced average post quality of the forum could mean, along with a closing comment or two.

If it is true that certain aspects of EAF posts have gotten worse over time, it’s worth examining exactly which aspects of comments and posts have degraded, and I think, in this regard, this post will be / has been helpful. Point 13 does claim that the degradation of the average quality of the forum’s content may be an illusion, but HaydnBelfield’s comment that “spotting the signal from the noise has become harder” seems to be stronger evidence that the average quality has indeed decreased, which is important as this means that people’s time is being wasted sorting through posts. This can be solved partially by subscribing only to the forum posters who produce the best content, but this won’t work for people who are new to the forum and produce high quality content, so other interventions or approaches should be explored.

While the question of how “quality” engagement on the forum should be measured is not discussed in this post, I imagine that in most people’s minds engagement “quality” on this site is probably some function of the proportion of different types of posts (e.g., linkposts, criticisms, meta-EA, analyses, summaries, etc...), the proportion of the types of content of posts (e.g., community building, organizational updates, AI safety, animal welfare, etc...), and the epistemics of each post (this last point might be able to be integrated with the first point). The way people engage with the forum, the forum’s optics, and how much impact is generated as a result of the forum existing are all affected by the average quality of the forum’s posts, so there seems to be a lot at stake.

I don’t have a novel solution for improving the quality of the forum’s posts and comments. Presently, downvotes can be used to disincentivize certain content, comments can be used to point out epistemic flaws to the author of a post and to generally improve the epistemics of a discussion, high quality posters can create more high quality posts to alter the proportion of posts that are high quality, and forum moderators can disincentivize poor epistemic practices. In the present state of the forum, diffusing or organizing contest posts might make it easier to locate high quality posts. Additionally, having one additional layer of moderated review for posts created by users with less than some threshold of karma might go a long way in increasing the average quality of posts made on the forum (e.g., that the Metaculus moderation team reviews its questions seems to help maintain the epistemic baseline of the site; of course, there are counterexamples, but in terms of average quality, extra review usually works, though it is somewhat expensive).

The forum metrics listed in Miller’s comment seem useful as well for getting a more detailed description of how engagement has changed over the years as the number of forum posters has changed.

As for the points themselves, I will comment that I think point (4) should be fleshed out in more detail: what are some examples here? Also, I think points 7 and 11 can be merged, and that more attention should be diverted to this. There can be inadvertent and countervalue consequences of welcoming the reduction in strength of people’s conversational filters on the forum. As such, moderators should consider these things more deeply (they may have weighed the pros and cons of taking actions to incentivize more engagement on the forum, and determined that this is best for the long term potential of the forum and EA more generally, but I do not know of the existence of such efforts).

Thank you Thomas Kwa for contributing this take to the forum; I think it could lead to an increase in some people’s threshold for posting and might lead to forum figures searching for ways to organize similar posts (e.g., creating a means to organize contest spam) and move the average post quality upwards.

The dormant period occurred between applying and getting referred for the position, and between getting referred and receiving an email for an interview. These periods were unexpectedly long and I wish there had been more communication or at least some statement regarding how long I should expect to wait. However, once I had the interview, I only had to wait a week (if I am remembering correctly) to learn if I was to be given a test task. After completing the test task, it was around another week before I learned I had performed competently enough to be hired.