I think affecting P(things go really well | no AI takeover) is pretty tractable!

What interventions are you most excited about? Why? What are they bottlenecked on?

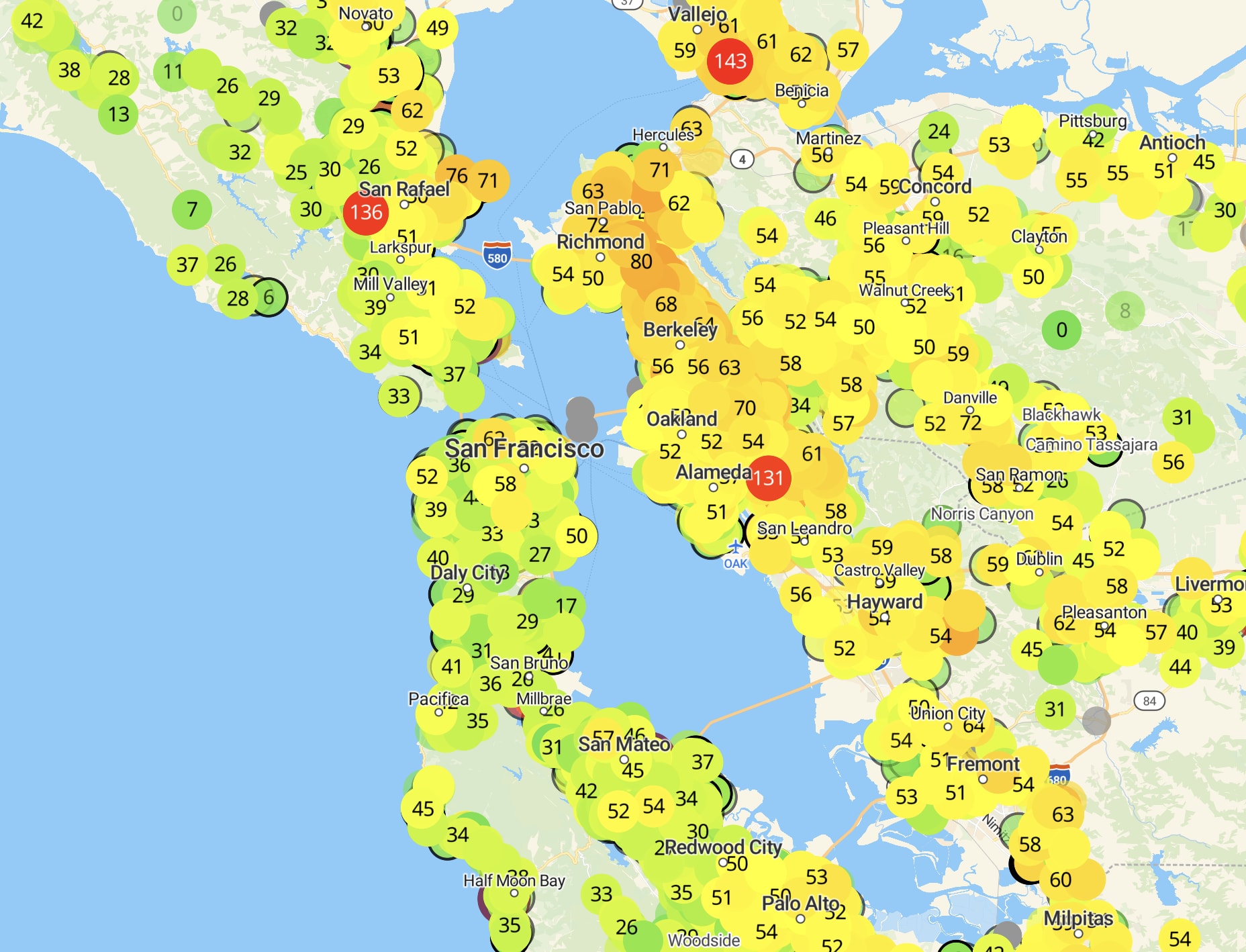

PurpleAir collects data from a network of private air quality sensors. Looks interesting, and possibly useful for tracking rapid changes in air quality (e.g. from a wildfire).

(written v quickly, sorry for informal tone/etc)

i think that a happy medium is getting small-group conversations (that are useful, effective, etc) of size 3–4 people. this includes 1-1s, but the vibe of a Formal, Thirty Minute One on One is very different from that of floating through 10–15 conversations of 3–4 people in a day, each lasting a different amount of time.

much more information can flow with 3-4 ppl than with just 2 ppl

people can dip in and out of small conversations more than they can with 1-1s

more-organic time blocks means that particularly unhelpful conversations can end after 5-10m, and particularly helpful ones can last the duration that would be good for them to last (even many hours!)

3-4 person conversations naturally select for a good 1-1. once 1-2 people have left a 3-4 person conversation, the conversation is then just a 1-1 of the two people who’ve engaged in the conversation longest — which seems like some evidence of their being a good match for a 1-1.

however, i think that this is operationally much harder to do for organizers than just 1-1s. my understanding is that this is much of the reason EAGs (& other conferences) do 1-1s, instead of small group conversations.

i think Writehaven did a mediocre job of this at LessOnline this past year (but, tbc, it did vastly better than any other piece of software i’ve encountered).

i think Lighthaven as a venue forces this sort of thing to happen, since there are so so so many nooks for 2-4 people to sit and chat, and the space is set up to make 10+ person conversations less likely to happen.

i know that The Curve (from @Rachel Weinberg) created some “Curated Conversations:” they manually selected people to have predetermined conversations for some set amount of time. iirc this was typically 3-6 people for ~1h, but i could be wrong on the details. rachel: how did these end up going, relative to the cost of putting them together?

[srs unconf at lighthaven this sunday 9/21]

Memoria is a one-day festival/unconference for spaced repetition, incremental reading, and memory systems. It’s hosted at Lighthaven in Berkeley, CA, on September 21st, from 10am through the afternoon/evening.

Michael Nielsen, Andy Matuschak, Soren Bjornstad, Martin Schneider, and about 90–110 others will be there — if you use & tinker with memory systems like Anki, SuperMemo, Remnote, MathAcademy, etc, then maybe you should come!

Tickets are $80 and include lunch & dinner. More info at memoria.day.

have you tried Fatebook.io, from @Adam Binksmith?

i thought this was excellent. thank you for writing it up!

Thank you for this! It can take a lot of effort to write, edit, and publish reports like this, but they (generally) create quite a bit of value. I found this one exceptionally concrete & clear to read — well done!

thanks for writing/cross-posting! i particularly liked that you had the reader pause at a moment where we could reasonably attempt to figure it out on our own.

I skimmed most of this post, and it seems great! Thank you for writing it.

However:

In this post, I introduce a concept I call surface area for serendipity …

I’m not sure what you mean by “introduce” here? I read it as “here is this new phrase that I’m putting forward for use” — unless I’m misunderstanding, I’m quite confident that’s wrong. You’re definitely not the first person to use the phrase.

Consider editing it to be:

In this post, I describe a concept I use called surface area for serendipity…

Or something similar.

fwiw i instinctively read it as the 2nd, which i think is caleb’s intended reading

epistemic status: i timeboxed the below to 30 minutes. it’s been bubbling for a while, but i haven’t spent that much time explicitly thinking about this. i figured it’d be a lot better to share half-baked thoughts than to keep it all in my head — but accordingly, i don’t expect to reflectively endorse all of these points later down the line. i think it’s probably most useful & accurate to view the below as a slice of my emotions, rather than a developed point of view. i’m not very keen on arguing about any of the points below, but if you think you could be useful toward my reflecting processes (or if you think i could be useful toward yours!), i’d prefer that you book a call to chat more over replying in the comments. i do not give you consent to quote my writing in this short-form without also including the entirety of this epistemic status.

1-3 years ago, i was decently involved with EA (helping organize my university EA program, attending EA events, contracting with EA orgs, reading EA content, thinking through EA frames, etc).

i am now a lot less involved in EA.

e.g. i currently attend uc berkeley, and am ~uninvolved in uc berkeley EA

e.g. i haven’t attended a casual EA social in a long time, and i notice myself ughing in response to invites to explicitly-EA socials

e.g. i think through impact-maximization frames with a lot more care & wariness, and have plenty of other frames in my toolbox that i use to a greater relative degree than the EA ones

e.g. the orgs i find myself interested in working for seem to do effectively altruistic things by my lights, but seem (at closest) to be EA-community-adjacent and (at furthest) actively antagonistic to the EA community

(to be clear, i still find myself wanting to be altruistic, and wanting to be effective in that process. but i think describing my shift as merely moving a bit away from the community would be underselling the extent to which i’ve also moved a bit away from EA’s frames of thinking.)

why?

a lot of EA seems fake

the stuff — the orientations — the orgs — i’m finding it hard to straightforwardly point at, but it feels kinda easy for me to notice ex-post

there’s been an odd mix of orientations toward [ aiming at a character of transparent/open/clear/etc ] alongside [ taking actions that are strategic/instrumentally useful/best at accomplishing narrow goals… that also happen to be mildly deceptive, or lying by omission, or otherwise somewhat slimy/untrustworthy/etc ]

the thing that really gets me is the combination of an implicit (and sometimes explicit!) request for deep trust alongside a level of trust that doesn’t live up to that expectation.

it’s fine to be in a low-trust environment, and also fine to be in a high-trust environment; it’s not fine to signal one and be the other. my experience of EA has been that people have generally behaved extremely well/with high integrity and with high trust… but not quite as well & as high as what was written on the tin.

for a concrete ex (& note that i totally might be screwing up some of the details here, please don’t index too hard on the specific people/orgs involved): when i was participating in — and then organizing for — brandeis EA, it seemed like our goal was (very roughly speaking) to increase awareness of EA ideas/principles, both via increasing depth & quantity of conversation and via increasing membership. i noticed a lack of action/doing-things-in-the-world, which felt kinda annoying to me… until i became aware that the action was “organizing the group,” and that some of the organizers (and higher up the chain, people at CEA/on the Groups team/at UGAP/etc) believed that most of the impact of university groups comes from recruiting/training organizers — that the “action” i felt was missing wasn’t missing at all, it was just happening to me, not from me. i doubt there was some point where anyone said “oh, and make sure not to tell the people in the club that their value is to be a training ground for the organizers!” — but that’s sorta how it felt, both on the object-level and on the deception-level.

this sort of orientation feels decently representative of the 25th percentile end of what i’m talking about.

also some confusion around ethics/how i should behave given my confusion/etc

importantly, some confusions around how i value things. it feels like looking at the world through an EA frame blinds me to things that i actually do care about, and blinds me to the fact that i’m blinding myself. i think it’s taken me a while to know what that feels like, and i’ve grown to find that blinding & meta-blinding extremely distasteful, and a signal that something’s wrong.

some of this might merely be confusion about orientation, and not ethics — e.g. it might be that in some sense the right doxastic attitude is “EA,” but that the right conative attitude is somewhere closer to (e.g.) “embody your character — be kind, warm, clear-thinking, goofy, loving, wise, [insert more virtues i want to be here]. oh and do some EA on the side, timeboxed & contained, like when you’re donating your yearly pledge money.”

where now?

i’m not sure! i could imagine the pendulum swinging more in either direction, and want to avoid doing any further prediction about where it will swing for fear of that prediction interacting harmfully with a sincere process of reflection.

i did find writing this out useful, though!

Thanks for the clarification — I’ve sent a similar comment on the Open Phil post, to get confirmation from them that your reading is accurate :)

How will this change affect university groups currently supported by Open Philanthropy that are neither under the banner of AI safety nor EA? The category on my mind is university forecasting clubs, but I’d also be keen to get a better sense of this for e.g. biosecurity clubs, rationality clubs, etc.

(I originally posted this comment under the Uni Groups Team’s/Joris’s post (link), but Joris didn’t seem to have a super conclusive answer, and directed me to this post.)

(also — thanks for taking the time to write this out & share it. these sorts of announcement posts don’t just magically happen!)

[epistemic status: i’ve spent about 5-20 hours thinking by myself and talking with rai about my thoughts below. however, i spent fairly little time actually writing this, so the literal text below might not map to my views as well as other comments of mine.]

IMO, Sentinel is one of the most impactful uses of marginal forecasting money.

some specific things i like about the team & the org thus far:

nuno’s blog is absolutely fantastic — deeply excellent, there are few that i’d recommend higher

rai is responsive (both in terms of time and in terms of feedback) and extremely well-calibrated across a variety of interpersonal domains

samotsvety is, far and away, the best forecasting team in the world

sentinel’s weekly newsletter is my ~only news source

why would i seek anything but takes from the best forecasters in the world?

i think i’d be willing to pay at least $5/week for this, though i expect many folks in the EA community would be happy to pay 5x-10x that. their blog is currently free (!!)

i’d recommend skimming whatever their latest newsletter was to get a sense of the content/scope/etc

linch’s piece sums up my thoughts around strategy pretty well

i have the highest crux-uncertainty and -elasticity around the following, in (extremely rough) order of impact on my thought process:

do i have higher-order philosophical commitments that swamp whatever Sentinel does? (for ex: short timelines, animal suffering, etc)

will Sentinel be able to successfully scale up?

conditional on Sentinel successfully forecasting a relevant GCR, will Sentinel successfully prevent or mitigate the GCR?

will Sentinel be able to successfully forecast a relevant GCR?

how likely is the category of GCRs that Sentinel might mitigate to actually come about? (vs no GCRs or GCRs that are totally unpredictable/unmitigable)

i’ll add $250, with exactly the same commentary as austin :)

to the extent that others are also interested in contributing to the prize pool, you might consider making a manifund page. if you’re not sure how to do this or just want help getting started, let me (or austin/rachel) know!

also, you might adjust the “prize pool” amount at the top of the metaculus page — it currently reads “$0.”

What, concretely, would that involve? / What, concretely, are you proposing?