I.

From Scott Alexander’s review of Joe Henrich’s The Secret of our Success:

In the Americas, where manioc was first domesticated, societies who have relied on bitter varieties for thousands of years show no evidence of chronic cyanide poisoning. In the Colombian Amazon, for example, indigenous Tukanoans use a multistep, multi-day processing technique that involves scraping, grating, and finally washing the roots in order to separate the fiber, starch, and liquid. Once separated, the liquid is boiled into a beverage, but the fiber and starch must then sit for two more days, when they can then be baked and eaten.

Such processing techniques are crucial for living in many parts of Amazonia, where other crops are difficult to cultivate and often unproductive. However, despite their utility, one person would have a difficult time figuring out the detoxification technique. Consider the situation from the point of view of the children and adolescents who are learning the techniques. They would have rarely, if ever, seen anyone get cyanide poisoning, because the techniques work. And even if the processing was ineffective, such that cases of goiter (swollen necks) or neurological problems were common, it would still be hard to recognize the link between these chronic health issues and eating manioc. Most people would have eaten manioc for years with no apparent effects. Low cyanogenic varieties are typically boiled, but boiling alone is insufficient to prevent the chronic conditions for bitter varieties. Boiling does, however, remove or reduce the bitter taste and prevent the acute symptoms (e.g., diarrhea, stomach troubles, and vomiting).

So, if one did the common-sense thing and just boiled the high-cyanogenic manioc, everything would seem fine. Since the multistep task of processing manioc is long, arduous, and boring, sticking with it is certainly non-intuitive. Tukanoan women spend about a quarter of their day detoxifying manioc, so this is a costly technique in the short term. Now consider what might result if a self-reliant Tukanoan mother decided to drop any seemingly unnecessary steps from the processing of her bitter manioc. She might critically examine the procedure handed down to her from earlier generations and conclude that the goal of the procedure is to remove the bitter taste. She might then experiment with alternative procedures by dropping some of the more labor-intensive or time-consuming steps. She’d find that with a shorter and much less labor-intensive process, she could remove the bitter taste. Adopting this easier protocol, she would have more time for other activities, like caring for her children. Of course, years or decades later her family would begin to develop the symptoms of chronic cyanide poisoning.

Thus, the unwillingness of this mother to take on faith the practices handed down to her from earlier generations would result in sickness and early death for members of her family. Individual learning does not pay here, and intuitions are misleading. The problem is that the steps in this procedure are causally opaque—an individual cannot readily infer their functions, interrelationships, or importance. The causal opacity of many cultural adaptations had a big impact on our psychology.

Scott continues:

Humans evolved to transmit culture with high fidelity. And one of the biggest threats to transmitting culture with high fidelity was Reason. Our ancestors lived in epistemic hell, where they had to constantly rely on causally opaque processes with justifications that couldn’t possibly be true, and if they ever questioned them then they might die. Historically, Reason has been the villain of the human narrative, a corrosive force that tempts people away from adaptive behavior towards choices that “sounded good at the time”.

II.

In their essay “Reality is Very Weird and You Need to be Prepared for That”, SlimeMoldTimeMold discuss an example of a particular epistemic hell that we managed to escape from through sheer dumb luck. SMTM review Maciej Cegłowski’s essay “Scott and Scurvy”, which tells the incredible true history of how we came to understand the titular disease. They (SMTM is two people) say that it is one of the most interesting things they’ve ever read and that they can’t do full justice to all the twists and turns of the story. I will try to briefly summarize their telling, so you should expect even less justice to be done to it, but here goes.

First, how terrible was scurvy?

Scurvy killed more than two million sailors between the time of Columbus’s transatlantic voyage and the rise of steam engines in the mid-19th century. The problem was so common that shipowners and governments assumed a 50% death rate from scurvy for their sailors on any major voyage. According to historian Stephen Bown scurvy was responsible for more deaths at sea than storms, shipwrecks, combat, and all other diseases combined. In fact, scurvy was so devastating that the search for a cure became what Bown describes as “a vital factor determining the destiny of nations.”

None of the potential fates awaiting sailors was pleasant, but scurvy exacted a particularly gruesome death. The earliest symptom—lethargy so intense that people once believed laziness was a cause of the disease—is debilitating. Your body feels weak. Your joints ache. Your arms and legs swell, and your skin bruises at the slightest touch. As the disease progresses, your gums become spongy and your breath fetid, your teeth loosen, and internal hemorrhaging makes splotches on your skin. Old wounds open; mucous membranes bleed. Left untreated, you will die, likely from a sudden hemorrhage near your heart or brain. (“The Age of Scurvy”)

Hellish indeed, but the epistemic horror of this story comes from the fact that a simple cure for a terrible disease (literally, eat anything with vitamin C, which is found in most foods except muscle meat, bread, eggs, and cheese) was repeatedly lost.

Scurvy was “cured” as early as 1497, when Vasco de Gama’s crew discovered the power of citrus...but this cure was repeatedly lost, forgotten, rediscovered, misconstrued, confused, and just generally messed around with for hundreds of years, despite being a leading killer of seafarers and other explorers. By the 1870s the “citrus cure” was discredited, and for nearly sixty years, scurvy— despite being cured, with scientific research to back it up—continued killing people, including men on Scott’s 1911 expedition to the South Pole. This went on until vitamin C was finally isolated in 1932 during research on guinea pigs.

The Wikipedia page does a good job of summarizing the history of cures; many people in the 1500s and 1600s knew that citrus fruits did the trick. The story you may have learned in school is that of Scottish physician James Lind, who supposedly found the cure for scurvy when he ran one of the first controlled experiments in medical history. The problem is that while Lind did run an experiment which provided evidence that lemons could cure scurvy, he didn’t recognize the significance of his own findings. Just in case you weren’t sure whether we should credit Lind with discovering the cure, there is an article in the Lancet titled “Treatment for scurvy not discovered by Lind”.

Throughout the 400-year history of scurvy, James Lind is systematically introduced as the man who discovered and promoted lemon juice as the best way to treat the condition. However, lemon juice was not distributed to English sailors until 40 years after the publication of his dissertation. Some say his findings did not convince the Admiralty. I wonder whether Lind himself was unconvinced.

In 1747, Lind did a trial on 12 sailors, the results of which showed the effectiveness of a mix of lemon and orange juices against scurvy. However, the experiment occupies only four out of 400 pages of his treatise on scurvy, in several chapters of which he disregards the observations. Scurvy, Lind writes, is provoked mainly by dampness. Adding, in the chapter on prevention, that those affected need dry air. Lind recommends lemon juice only to fight the effects of the dampness inevitable at sea. Furthermore, the chapter on treatment hardly mentions citrus fruit, and instead focuses on exercise and change of air. Lemons are mentioned, but Lind adds that they can be replaced by an infusion.

So it wasn’t Lind that popularized the citrus cure, but eventually the British Royal Navy did figure it out, basically just by accidentally noticing that sailors didn’t get scurvy when they ate lemons on long voyages. SlimeMoldTimeMold describe the comedy of errors that then led to the Royal Navy discrediting the citrus cure.

Originally, the Royal Navy was given lemon juice, which works well because it contains a lot of vitamin C. But at some point between 1799 and 1870, someone switched out lemons for limes, which contain a lot less vitamin C. Worse, the lime juice was pumped through copper tubing as part of its processing, which destroyed the little vitamin C that it had to begin with.

This ended up being fine, because ships were so much faster at this point that no one had time to develop scurvy. So everything was all right until 1875, when a British arctic expedition set out on an attempt to reach the North Pole. They had plenty of lime juice and thought they were prepared — but they all got scurvy.

The same thing happened a few more times on other polar voyages, and this was enough to convince everyone that citrus juice doesn’t cure scurvy. The bacterial theory of disease was the hot new thing at the time, so from the 1870s on, people played around with a theory that a bacteria-produced substance called “ptomaine” in preserved meat was the cause of scurvy instead.

This theory was wrong, so it didn’t work very well.

The road to rediscovering the citrus cure began with a stroke of dumb luck:

It was pure luck that led to the actual discovery of vitamin C. Axel Holst and Theodor Frolich had been studying beriberi (another deficiency disease) in pigeons, and when they decided to switch to a mammal model, they serendipitously chose guinea pigs, the one animal besides human beings and monkeys that requires vitamin C in its diet. Fed a diet of pure grain, the animals showed no signs of beriberi, but quickly sickened and died of something that closely resembled human scurvy.

No one had seen scurvy in animals before. With a simple animal model for the disease in hand, it became a matter of running the correct experiments, and it was quickly established that scurvy was a deficiency disease after all. Very quickly the compound that prevents the disease was identified as a small molecule present in cabbage, lemon juice, and many other foods, and in 1932 Szent-Györgyi definitively isolated ascorbic acid.

This dramatically undersells how hellish the whole situation was.

Holst and Frolich also ran a version of the study with dogs. But the dogs were fine. They never developed scurvy, because unlike humans and guinea pigs, they don’t need vitamin C in their diet. Almost any other animal would also have been fine — guinea pigs and a few species of primates just happen to be really weird about vitamin C. So what would this have looked like if Holst and Frolich just never got around to replicating their dog research on guinea pigs? What if the guinea pigs had gotten lost in the mail?

SlimeMoldTimeMold describe how devilishly bewildering the whole situation would have been if the guinea pigs had in fact gotten lost in the mail by imagining a hypothetical dialogue between the two researchers.

Frolich: You know Holst, I think old James Lind was right. I think scurvy really is a disease of deficiency, that there’s something in citrus fruits and cabbages that the human body needs, and that you can’t go too long without.

Holst: Frolich, what are you talking about? That doesn’t make any sense.

Frolich: No, I think it makes very good sense. People who have scurvy and eat citrus, or potatoes, or many other foods, are always cured.

Holst: Look, we know that can’t be right. George Nares had plenty of lime juice when he led his expedition to the North Pole, but they all got scurvy in a couple weeks. The same thing happened in the Expedition to Franz-Josef Land in 1894. They had high-quality lime juice, everyone took their doses, but everyone got scurvy. It can’t be citrus.

Frolich: Maybe some citrus fruits contain the antiscorbutic [scurvy-curing] property and others don’t. Maybe the British Royal Navy used one kind of lime back when Lind did his research but gave a different kind of lime to Nares and the others on their Arctic expeditions. Or maybe they did something to the lime juice that removed the antiscorbutic property. Maybe they boiled it, or ran it through copper piping or something, and that ruined it.

Holst: Two different kinds of limes? Frolich, you gotta get a hold of yourself. Besides, the polar explorers found that fresh meat also cures scurvy. They would kill a polar bear or some seals, have the meat for dinner, and then they would be fine. You expect me to believe that this antiscorbutic property is found in both polar bear meat AND some kinds of citrus fruits, but not in other kinds of citrus?

Frolich: You have to agree that it’s possible. Why can’t the property be in some foods and not others?

Holst: It’s possible, but it seems really unlikely. Different varieties of limes are way more similar to one another than they are to polar bear meat. I guess what you describe fits the evidence, but it really sounds like you made it up just to save your favorite theory.

Frolich: Look, it’s still consistent with what we know. It would also explain why Lind says that citrus cures scurvy, even though it clearly didn’t cure scurvy in the polar expeditions. All you need is different kinds of citrus, or something in the preparation that ruined it — or both!

Holst: What about our research? We fed those dogs nothing but grain for weeks. They didn’t like it, but they didn’t get scurvy. We know that grain isn’t enough to keep sailors from getting scurvy, so if scurvy is about not getting enough of something in your diet, those dogs should have gotten scurvy too.

Frolich: Maybe only a few kinds of animals need the antiscorbutic property in their food. Maybe humans need it, but dogs don’t. I bet if those guinea pigs hadn’t gotten lost in the mail, and we had run our study on guinea pigs instead of dogs, the guinea pigs would have developed scurvy.

Holst: Let me get this straight, you think there’s this magical ingredient, totally essential to human life, but other animals don’t need it at all? That we would have seen something entirely different if we had used guinea pigs or rats or squirrels or bats or beavers?

Frolich: Yeah basically. I bet most animals don’t need this “ingredient”, but humans do, and maybe a few others. So we won’t see scurvy in our studies unless we happen to choose the right animal, and we just picked the wrong animal when we decided to study dogs. If we had gotten those guinea pigs, things would have turned out different.

[cute scene]

…and Frolich is entirely right on every point. He also sounds totally insane.

Maybe there are different kinds of citrus. Maybe some animals need this mystery ingredient and others don’t. Maybe polar bear meat is, medically speaking, more like citrus fruit from Sicily than like citrus fruit from the West Indies. Really???

III.

One more example of an epistemic hell, from Gwern’s (fantastic) essay “The Origins of Innovation: Bakewell & Breeding”:

People, both ordinary and men of leisure, often intensely interested in agriculture, have been farming animals for millennia and presumably interfering in their reproduction, and had ample opportunity to informally observe many matings, long pedigrees, crosses between breeds, and comparisons with neighboring farmers, and they had great incentive to reach correct beliefs not just for the immediate & compounding returns but also from being able to sell their superior specimens for improving other herds. But with all of this incentive, we were horribly confused on even the most basic principles of heredity for millennia…

“It took humanity a remarkably long time to discover that there are consistent relations between parent and offspring, and to develop ways of studying those relations. The raw phenomena of heredity were sufficiently complex to be impervious to ‘common-sense’ reasoning, to the brilliant but stifling schemas that were developed by the Greek philosophers, and even to the stunning forays of the early scientists.”

(“Heredity before Genetics: a History”; Cobb, 2006)

We now know that there are an indefinitely long list of ways that development can go wrong with thousands of environmental insults or developmental error or distinct genetic diseases (each with many possible contributing mutations), and that for the most part, each case is its own special case; sometimes ‘monsters’ can shed light on key aspects of biology like metabolic pathways, but that requires biology centuries more advanced than was available, and that the search for universal principles was futile. There are universal principles but they pertain mostly to populations, must be investigated in the aggregate, statistically, and individual counterexamples can only be shrugged at, as the universal principles can be and often are overridden by many of those special cases.

So how did we escape?

What was required was not a novel piece of apparatus, nor even a new theory; the key thing that was needed was statistically extraordinary data sets. On the one hand, these were composed of many reliable human pedigrees of unusual or pathological characters; on the other, they were the large-scale experimental studies that were carried out consciously by Mendel, or as a by-product of the commercial activity of eighteenth-century livestock breeders.

IV.

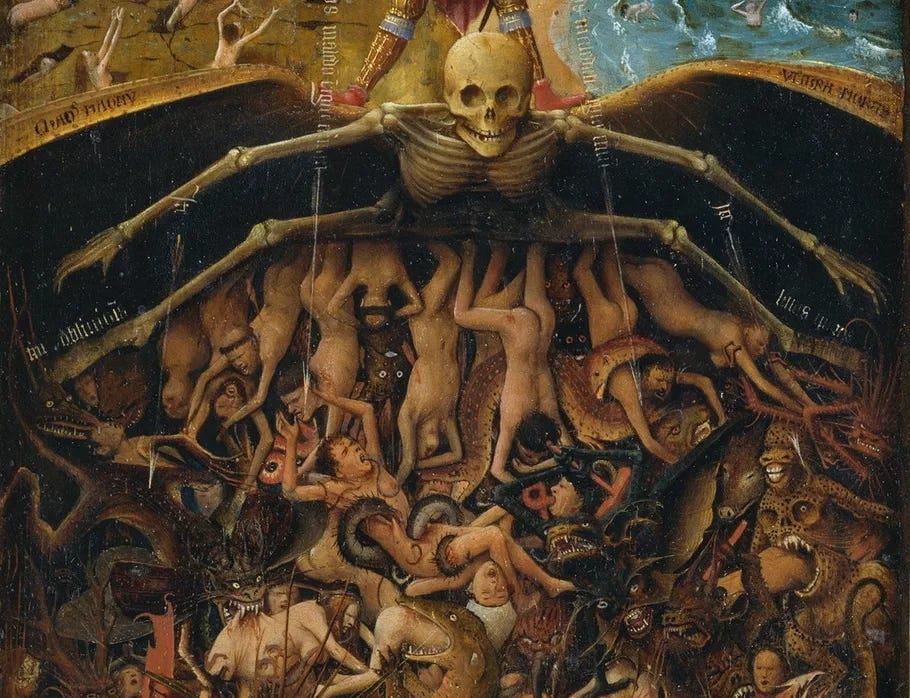

“Hell is empty and all the devils are here.” (Billy Shakes)

So what exactly is an epistemic hell?

Clearly these three examples are very different from one another, but they all describe a situation in which the dictates of logic and “proper” scientific investigation reliably lead us further from the truth. On some level, an epistemic hell is simply a case of bad luck: the world happened to be set up in a way where appearances, common sense, and obvious experiments/interventions are misleading with regard to some phenomenon. If we were just utterly lost and had no idea where to begin, that would be one thing, but the problem is that it doesn’t feel that way while you’re in the midst of the inferno. It feels like there are numerous promising hypotheses on offer, or that the phenomenon in question is simply not amenable to any straightforward theory or remedy. There may even be optimism that now, finally, we are on the right track, and a breakthrough is right around the corner if we just keep plugging away.

What current scientific unknowns may be devilishly difficult in ways we don’t comprehend?

Complex diseases/disorders on which little progress has been made seem like good candidates; from the aforementioned “Scott and Scurvy” essay:

…one of the simplest of diseases managed to utterly confound us for so long, at the cost of millions of lives, even after we had stumbled across an unequivocal cure. It makes you wonder how many incurable ailments of the modern world—depression, autism, hypertension, obesity—will turn out to have equally simple solutions, once we are able to see them in the correct light. What will we be slapping our foreheads about sixty years from now, wondering how we missed something so obvious?

It might seem like the diseases listed in the quote (I would add Alzheimer’s disease, Parkinson’s disease, bipolar disorder, and schizophrenia) are unlikely nominees, but that’s always what it feels like when you are trapped in Hades.

What about physics and cosmology? When you see a spate of articles with titles like “Why the foundations of physics have not progressed for 40 years”, “Escaping cosmology’s failing paradigm”, and “Physics is stuck — and needs another Einstein to revolutionize it, physicist Avi Loeb says”, that’s a good sign you might be in a fiery knowledge abyss.

Given the landscape of physics today, could an Einstein-like physicist exist again — someone who, say, works in a patent office, quietly pondering the nature of space-time, yet whose revelations cause much of the field to be completely rethought?

Loeb thought so. “There are some dark clouds in physics,” Loeb told me. “People will tell you, ‘we just need to figure out which particles makes the dark matter, it’s just another particle. It has some weak interaction, and that’s pretty much it.’ But I think there is a very good chance that we are missing some very important ingredients that a brilliant person might recognize in the coming years.” Loeb even said the potential for a revolutionary physics breakthrough today “is not smaller — it’s actually bigger right now” than it was in Einstein’s time.

And then there is the so-called Hard Problem of Consciousness, a prime candidate if there ever was one. Maybe the problem is just difficult in an ordinary manner, but as we continue research decade after decade without any real breakthroughs it becomes increasingly likely that we are deeply confused about something fundamental (e.g. the scientific hellfires of Matter and Mind are one and the same).

Are there any general strategies or methods that we can use to at least increase the odds of extricating ourselves from epistemic netherworlds?

Being stuck in a hell means that all of the short moves through idea-space are misleading, so what you need is something radical, a quantum leap to an entirely new region of thought. In the case of scurvy, it was dumb luck (the testing of guinea pigs) that got us out of the hole; this suggests that engineering serendipity by increasing the randomness of the scientific process may be helpful (see my essay “Randomness in Science” for further discussion).

With regard to heredity, I would point to two things. The first is the assembly of “extraordinary datasets” (multi-generation pedigrees, large-scale breeding experiments). In physics (e.g. the LHC) and medicine, there is already substantial precedent for this path out of the inferno.

Multiple sclerosis is a chronic demyelinating disease of the central nervous system. The underlying cause of this disease is not known, but Epstein-Barr virus is thought to be a possible culprit. However, most people infected with this common virus do not develop multiple sclerosis, and it is not feasible to directly demonstrate causation of this disease in humans. Using data from millions of US military recruits monitored over a 20-year period, Bjornevik et al. determined that Epstein-Barr virus infection greatly increased the risk of subsequent multiple sclerosis and that it preceded the development of disease, supporting its potential role in the pathogenesis of multiple sclerosis (Bjornevik et al., 2022)

However, what was extraordinary about Mendel wasn’t only his dataset, but the simplicity of his approach: he disregarded all prior thinking, much of which seemed quite reasonable, and started from first principles, reasoning only from self-collected data (there was also dumb luck, since pea plants and the simple traits he studied happened to be unusually good for the observation of hereditary patterns). It makes sense that this would be helpful; part of being epistemically damned means that you don’t know what you think you know, so a kind of “unlearning” is needed (I discuss this at length in Life on the Grid: Terra Incognita, which is basically a manifesto for how to make quantum leaps through idea-space).

When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong. (Clarke’s first law)

In the next 2 posts of this series (if you want to call it that), I’ll explore another approach for escaping epistemic hells which could be summarized as:

I know this is becoming a recurring question, but why have others downvoted this post so heavily?

I quite liked this post, though it felt a bit off-topic for the previous tags it had (building EA, community epistemic health; I’ve removed them and added new ones).