War between the world’s great powers sharply increases the risk of a global catastrophe: nuclear weapon use becomes more likely, as does the development of other unsafe technology. In this talk from EA Global 2018: London, Brian Tse explores the prevention of great power conflict as a potential cause area.

Below is a transcript of Brian’s talk, which CEA has lightly edited for clarity. You can also read this talk on effectivealtruism.org, or watch it on YouTube.

The Talk

Many people believe that we are living in the most peaceful period of human history. John Lewis Gaddis proclaimed that we live in a Long Peace period, beginning at the end of the Second World War.

Steven Pinker further popularized the idea of the Long Peace in his book, The Better Angels of Our Nature, and explained the period by pointing to the pacifying forces of trade, democracy, and international society.

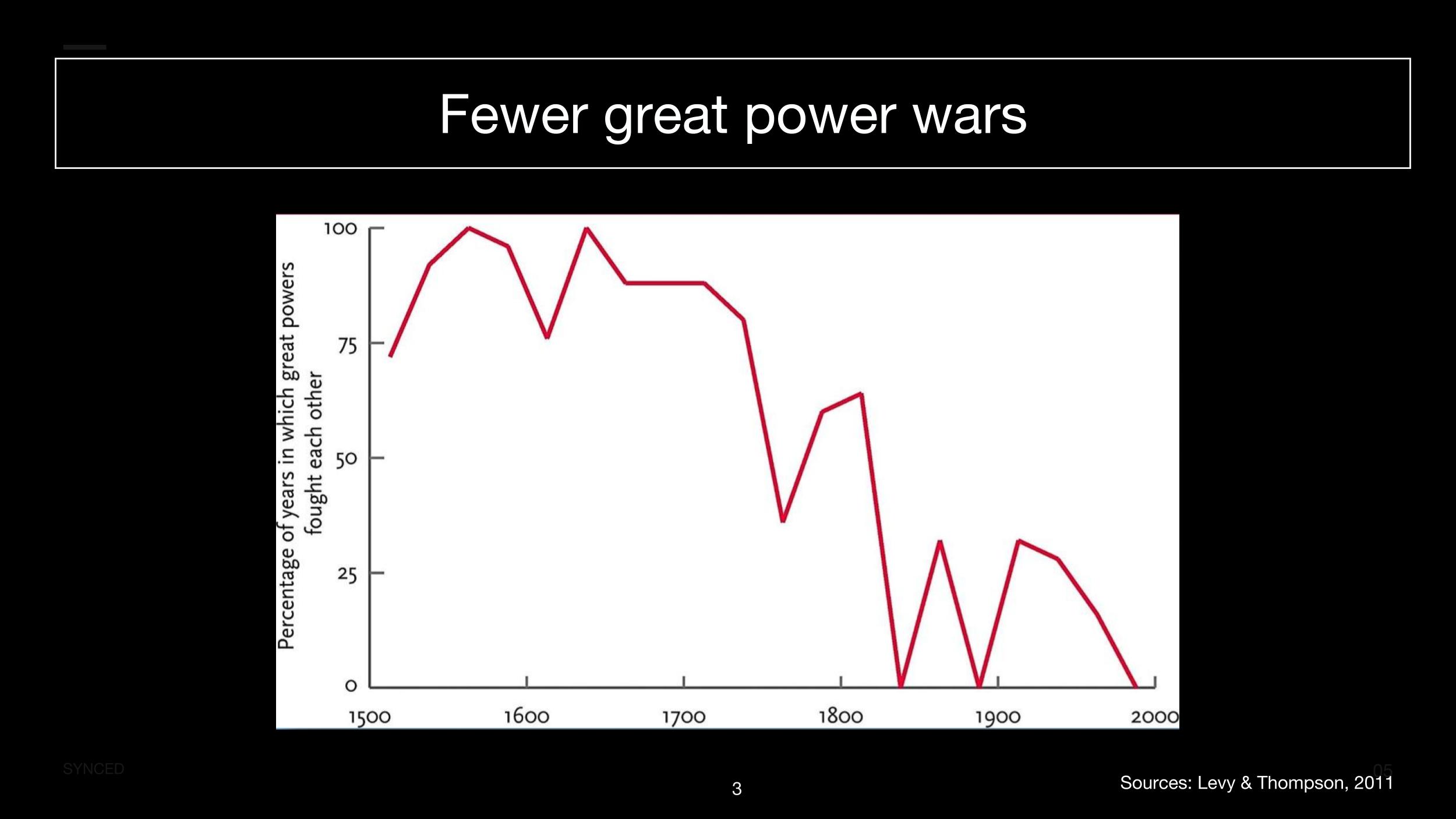

This graph shows the percentage of time when great powers have been at war with each other. 500 years ago, the great powers were almost always fighting each other. However, the frequency has declined steadily.

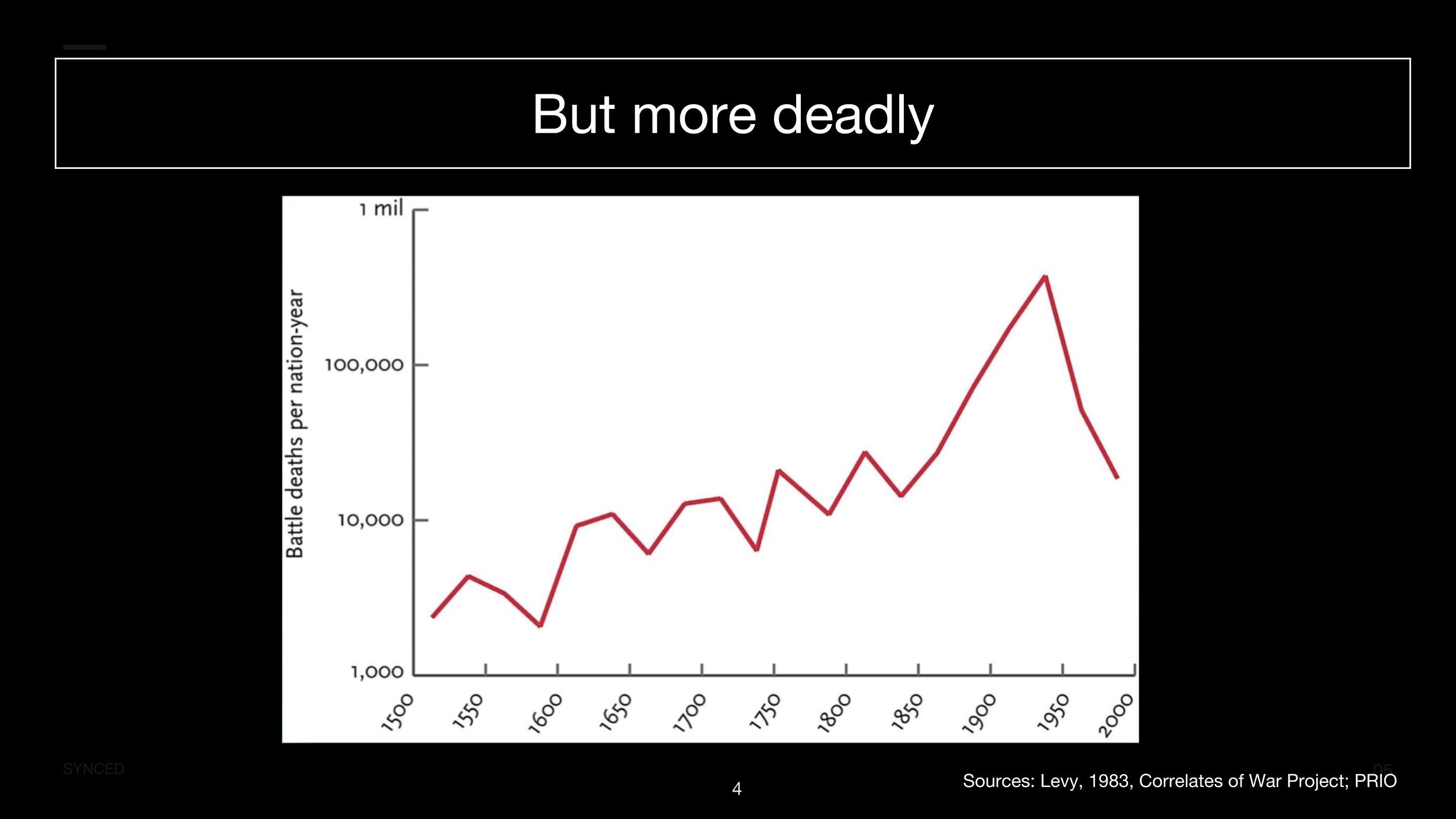

This graph, however, shows the deadliness of war, where the trend goes in the opposite direction. Although great powers go to war with each other less often, the wars that do happen are more damaging.

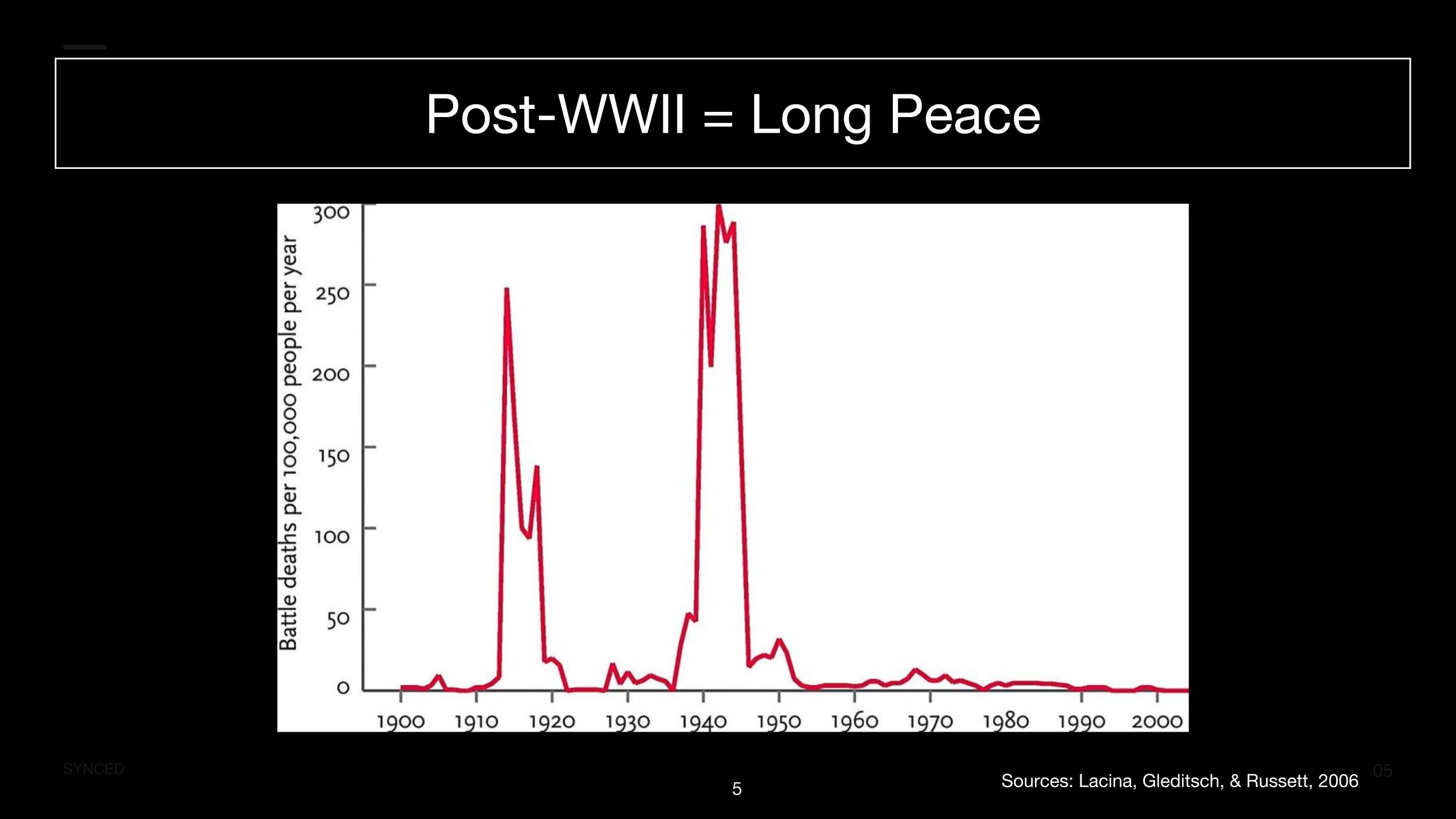

The deadliness trend did an about-face after the Second World War. For the first time in modern human history, great power conflicts were fewer in number, shorter in duration, and less deadly. Steven Pinker expects the trend to continue.

Not everyone agrees with this optimistic picture. Nassim Taleb believes that great power conflict on the scale of 10 million casualties only happens once every century. The Long Peace period only covers 70 years, so what appears to be a decline in violent conflict could merely be a gap between major wars. In his paper on the statistical properties and tail risk of violent conflict, Taleb concludes that no statistical trend can be asserted. The idea is that extrapolating on the basis of historical data assumes that there is no qualitative change to the nature of the system producing that data, whereas many people believe that nuclear weapons constitute a major change to the data-generating process.

Some other experts seem to share a more sober picture than Pinker. In 2015, a poll was conducted among 50 international relations experts from around the world. 60% of them believed that the risk had increased in the last decade. 52% believed that the risk of nuclear great power conflict would increase in the next 10 years. Overall, the experts gave a median 5% chance of a nuclear great power conflict killing at least 80 million people in the next 20 years. And then there are some international relations theories which suggest a lower bound of risk.

The Tragedy of Great Power Politics proposes the theory of offensive realism. This theory says that great powers always seek to achieve regional hegemony, maximize wealth, and achieve nuclear superiority. Through this process great power conflicts will never see an end. Another book, The Clash of Civilizations, suggests that the conflicts between ideologies during the Cold War era are now being replaced by conflicts between ancient civilizations.

In the 21st century, the rise of non-Western societies presents plausible scenarios of conflict. And then, there’s some emerging discourse on the Thucydides’ Trap, which points to the structural pattern of stress when a rising power challenges a ruling one. In analyzing the Peloponnesian War that devastated Ancient Greece, the historian Thucydides explained that it was the rise of Athens, and the fear that this instilled in Sparta, that made war inevitable.

In Graham Allison’s recent book, Destined for War, he points out that this lens is crucial for understanding China-US relations in the 21st century.

So, these perspectives suggest that we should be reasonably alert about potential risks for great power conflict, but how bad would these conflicts be?

For the purpose of my talk, I first define contemporary great powers. They are the US, the UK, France, Russia, and China. These are the five countries that have permanent seats and veto power on the UN Security Council. They are also the only five countries that are formally recognized as nuclear weapon states. Collectively, they account for more than half of global military spending.

We should expect conflict between great powers to be quite tragic. During the Second World War, 50 to 80 million people died. By some models, these wars cost on the order of national GDPs, and are likely to be several times more expensive. They also present a direct extinction risk.

At a Global Catastrophic Risk Conference hosted by the University of Oxford, academics predicted that there is a 1% chance of extinction from nuclear war in the 21st century. The climatic effects of nuclear wars are not very well understood, so nuclear winter presents a plausible scenario of extinction risk. It’s also important, though, to take model uncertainty into account in any risk analysis.

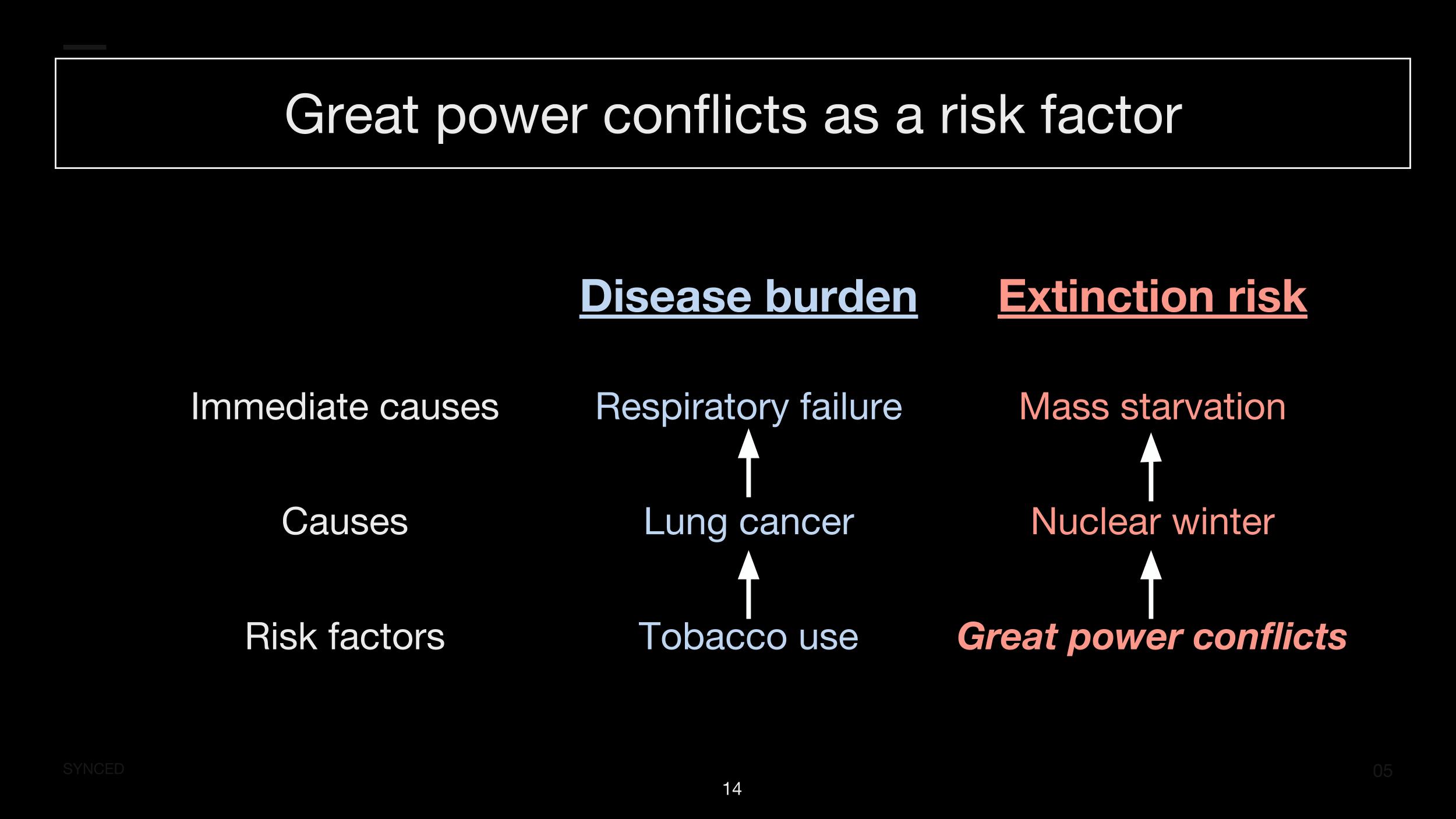

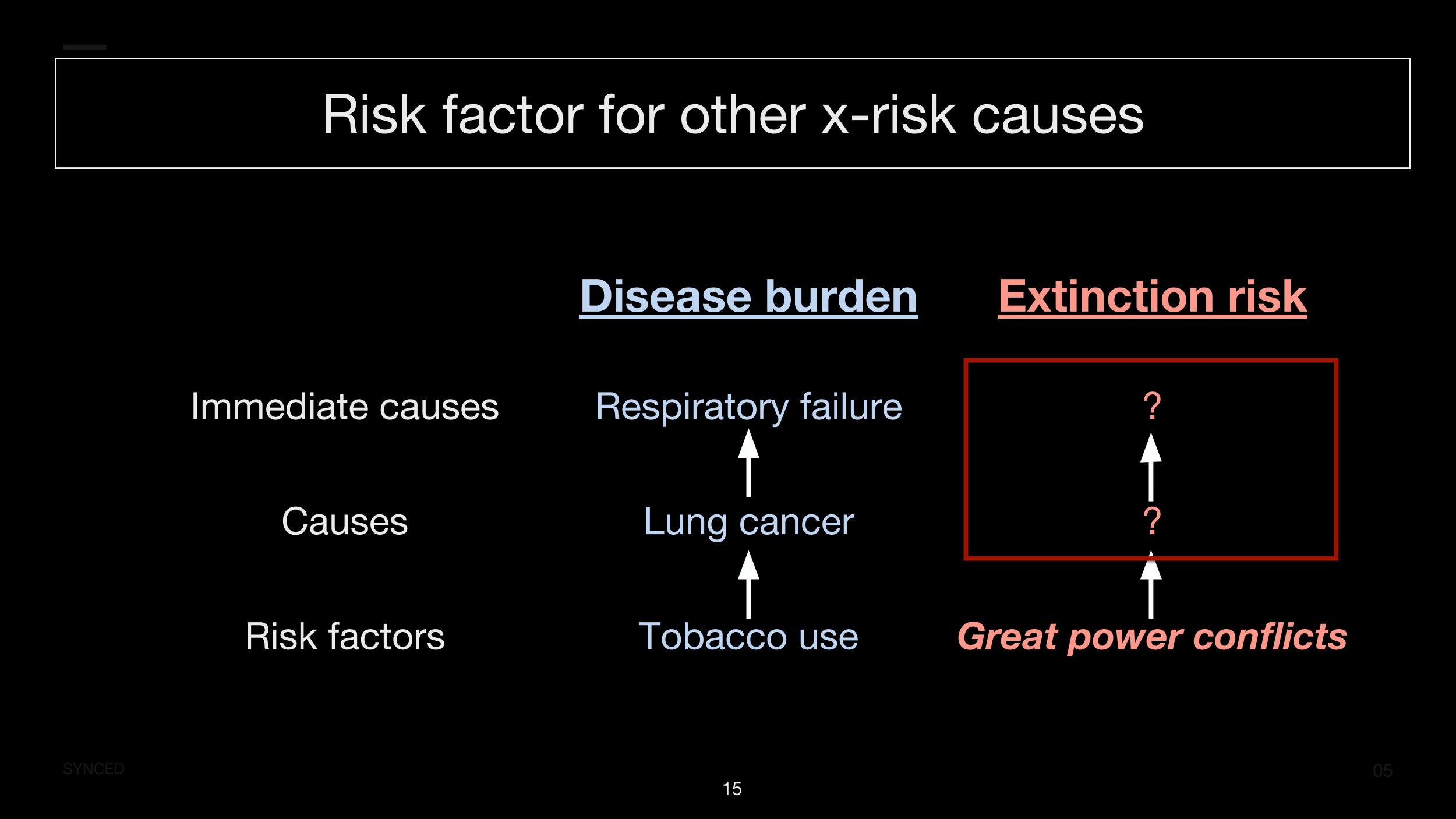

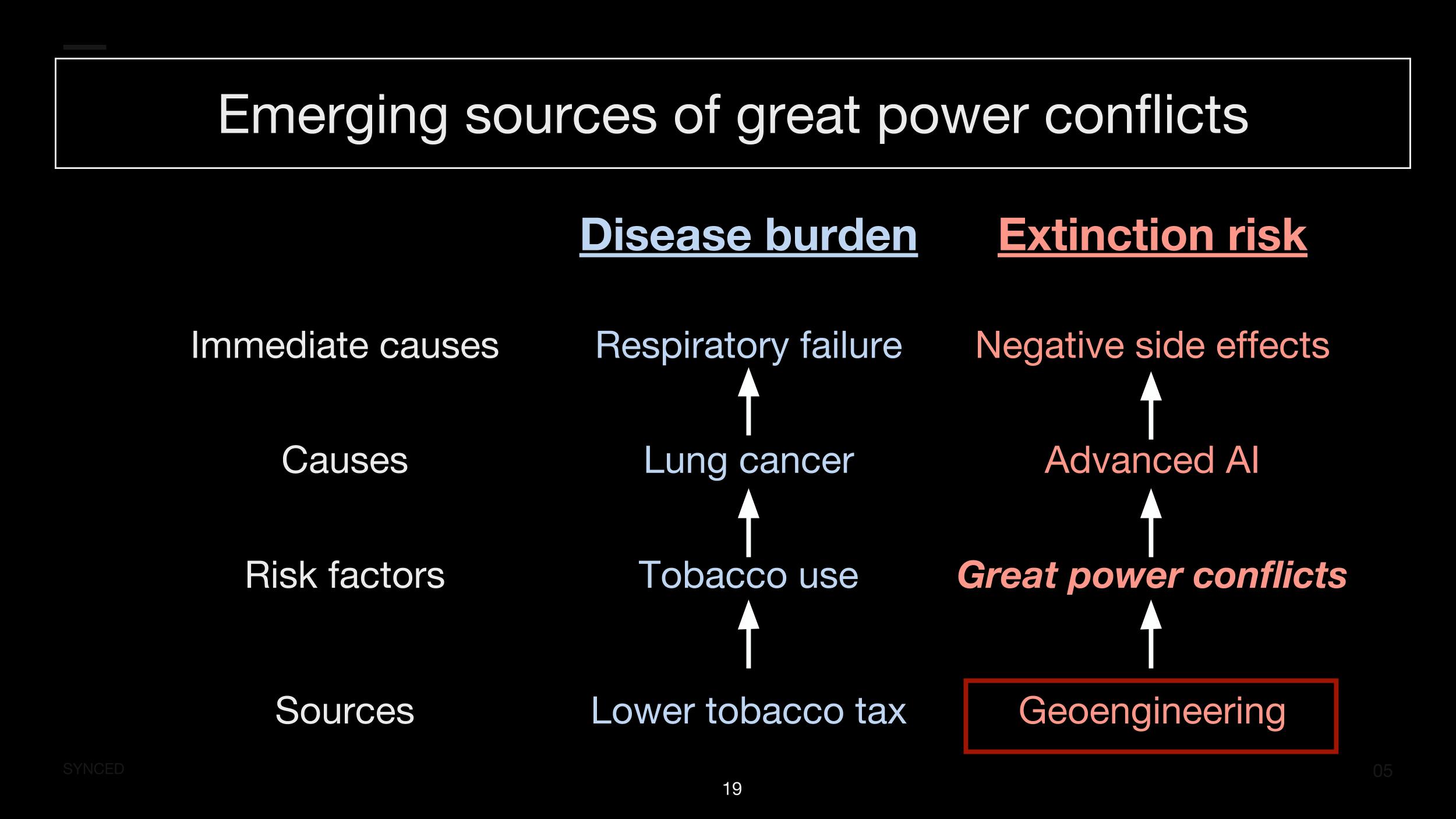

One way to think about great power conflict is as a risk factor, in the same way that tobacco use is a risk factor for the global burden of diseases. Tobacco use can lead to a wide range of scenarios of death, including lung cancer. Similarly, great power conflicts can lead to a wide range of different extinction scenarios. One example is nuclear winter, followed by mass starvation.

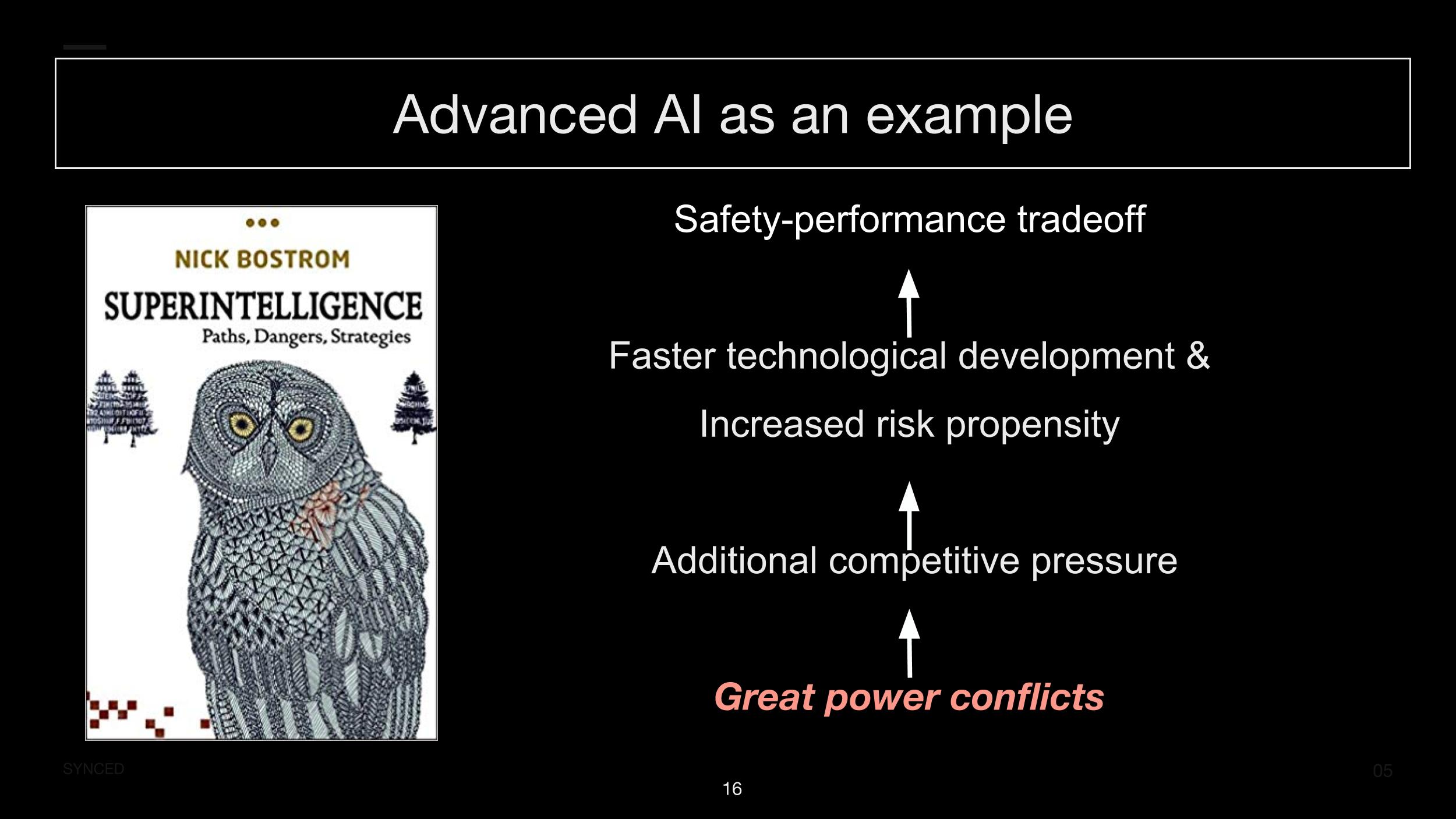

Others are less obvious, and could arise from failures of global coordination. Let’s consider the development of advanced AI as an example. Wars typically cause faster technological development, often enhanced by public investment. Countries become more willing to take risks in order to develop technology first. One example was the development of a nuclear weapons program by India after going to war with China in 1962.

Repeating the same competitive dynamic in the area of advanced AI is likely to be catastrophic. Actors may trade off safety research and implementation in the process, and that might present an extinction risk, as discussed in the book Superintelligence.

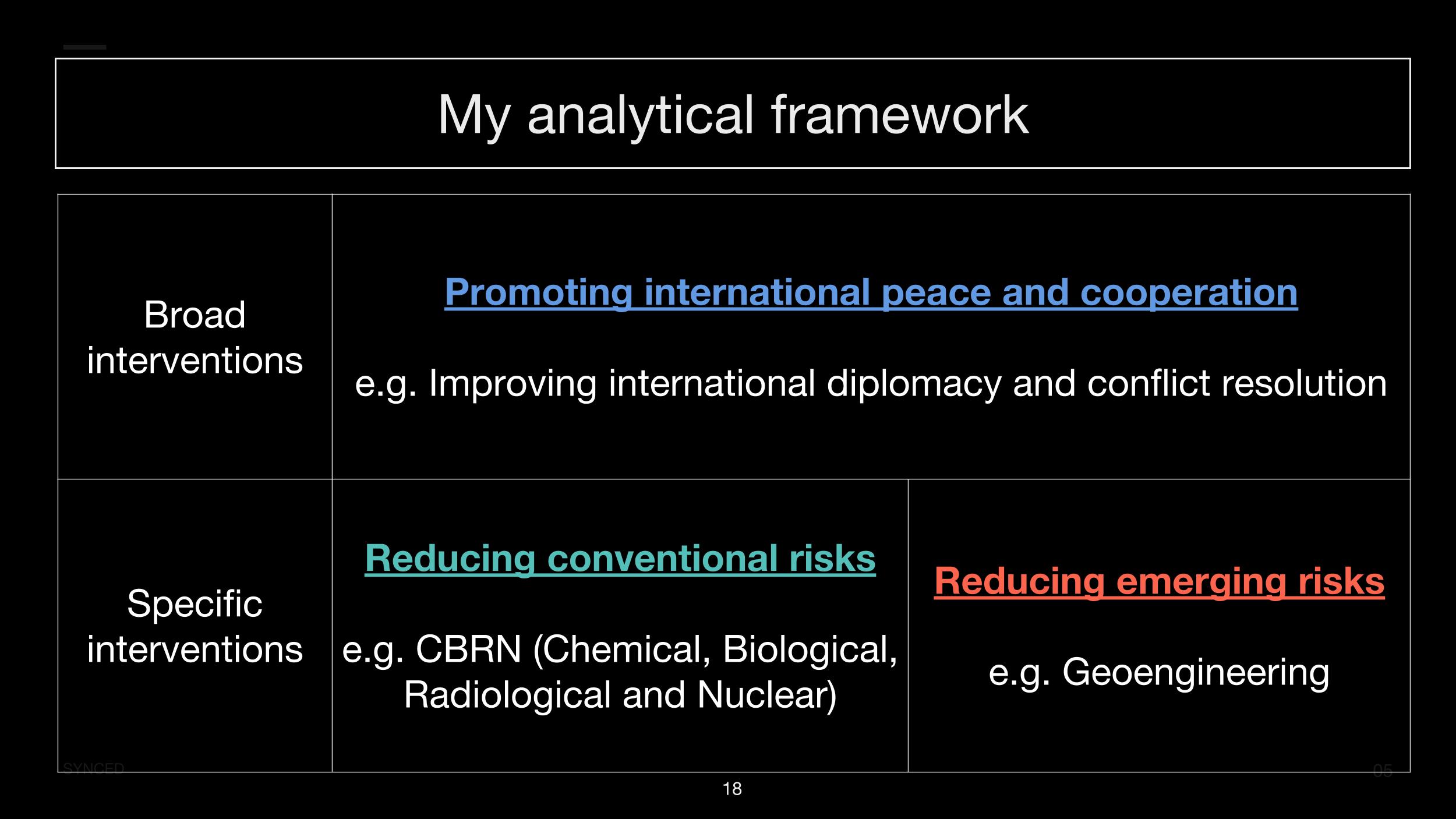

Now, how neglected is the problem? I developed a framework to help evaluate this question.

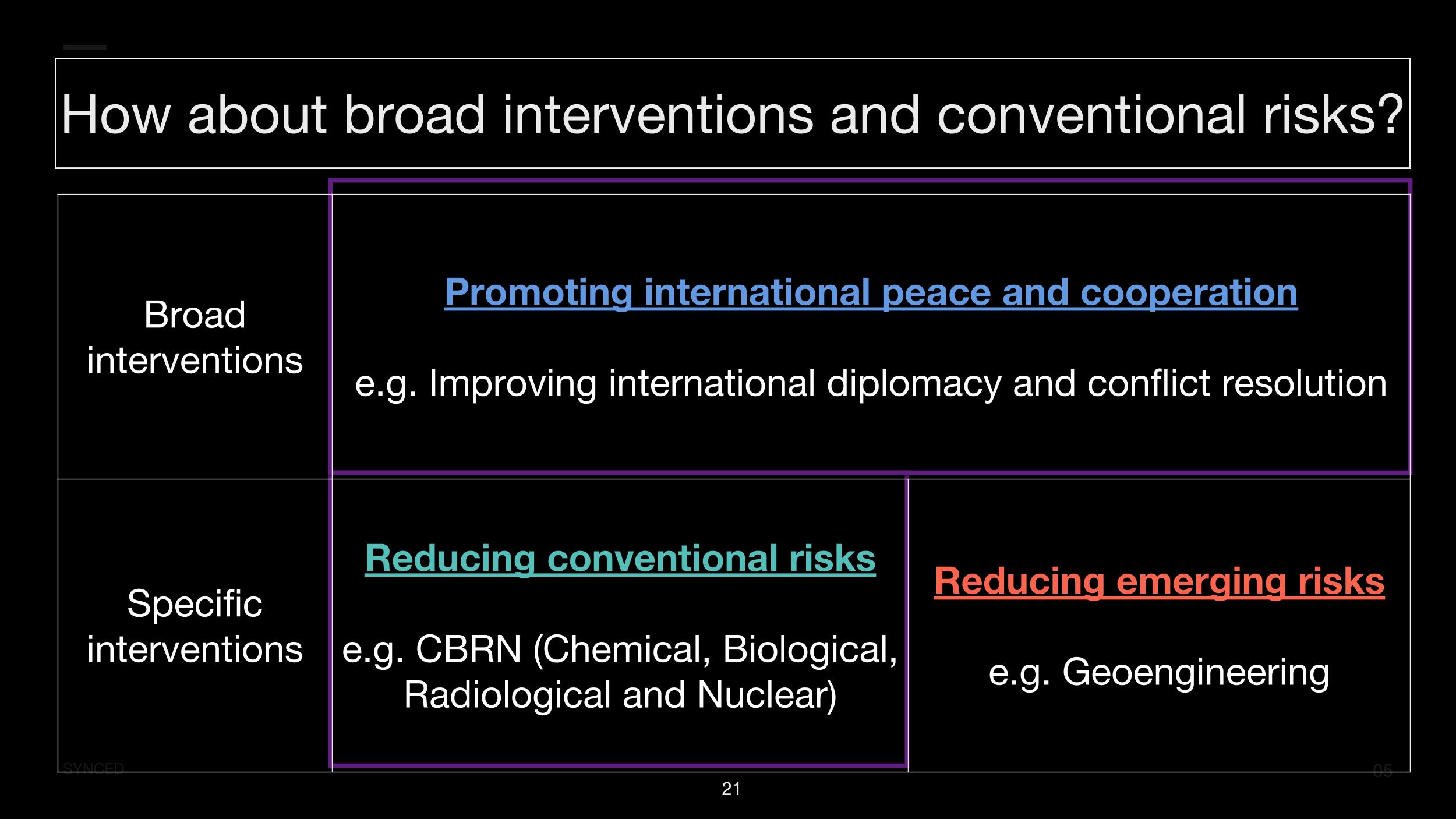

First, I make a distinction between broad and specific interventions. By broad interventions I roughly mean promoting international cooperation and peace, which could be done by improving diplomacy and conflict resolution. Within specific interventions, there are two categories: conventional risks and emerging risks. I define conventional risks as those that are studied by international relations experts and national security professionals: chemical, biological, radiological, and nuclear risks, collectively known as CBRN in the community.

And then there are some novel concerns arising from emerging technologies, such as the development and deployment of geoengineering. Now, let’s go back to the framework that I used to compare existential risk to the global burden of diseases. Lower tobacco tax can lead to an increased rate of smoking. Similarly, development of emerging technologies such as geoengineering can lead to greater conflict between great powers, or lead to wars in the first place. Now in the upcoming decades, I think that it’s plausible to see the following scenarios.

Private industry players are already setting their sights on space mining; major space-faring countries in the future may compete for the available resources on the moon and asteroids. Military applications of molecular nanotechnology could be even more destabilizing than nuclear weapons. Such technology will allow for targeted destruction during attack, and also allow for greater uncertainty about the capabilities of an adversary.

With geoengineering, every technologically advanced nation could change the temperature of the planet. Any unilateral action taken by countries could lead to disagreement and conflict between them. Gene-editing will allow for a large-scale eugenics program, which could lead to a bio-ethical panic in the rest of the world. Other countries might be worried about their national security interest, because of the uneven distribution of human capital and power. Now, it seems that these emerging sources of risk are likely to be quite neglected, but what about broad interventions and conventional risks?

It seems that political attention and resources have been devoted to the problem. There are anti-war and peace movements around the world advocating for diplomacy and supporting anti-war political candidates. There are also some academic disciplines, such as international relations and security studies, that are helpful for making progress on the issue. Governments also have an interest in maintaining peace.

The US government has tens of billions in the budget for nuclear security issues, and presumably a fraction of it is dedicated to the safety, control, and detection of nuclear risk. Then, there are also some inter-governmental organizations that put aside funding for improving nuclear security. One example is the International Atomic Energy Agency.

But it seems plausible to me that there are still some neglected niches. According to a report on nuclear weapons policy by the Open Philanthropy Project, some of the biggest gaps in the space are outside of the US and US-based advocacy. A report that comprehensively studies US-China relations and their charter diplomacy programs concludes that some relevant think tanks are actually constrained by the lack of a committed source of funding from foundations interested in the area. Since most research on nuclear weapons policy is done on behalf of governments, and thus could be tied to national interests, it seems more useful to focus on the public interest from a philanthropic and nonprofit perspective. One example is the Stockholm International Peace Research Institute. From that perspective, the space could be more neglected than it first appears.

Now, let’s turn to the assessment of solvability. This is the variable that I’m most uncertain about, so what I’m going to say is pretty speculative. From reviewing the literature, it seems that there are some levers that could be used to promote peace and reduce the risk of great power conflicts.

Let’s begin with broad interventions. First, you can promote international dialogue and conflict resolution. One case study is that during the Cold War, five great powers (Japan, France, Germany, the UK, and the US) decided that a state of peace was desirable. After the Cuban Missile Crisis, they basically resolved the dispute in the United Nations and other international forums for discussion. However, one could argue that promoting dialogue is unlikely to be useful if there is no pre-alignment of interest.

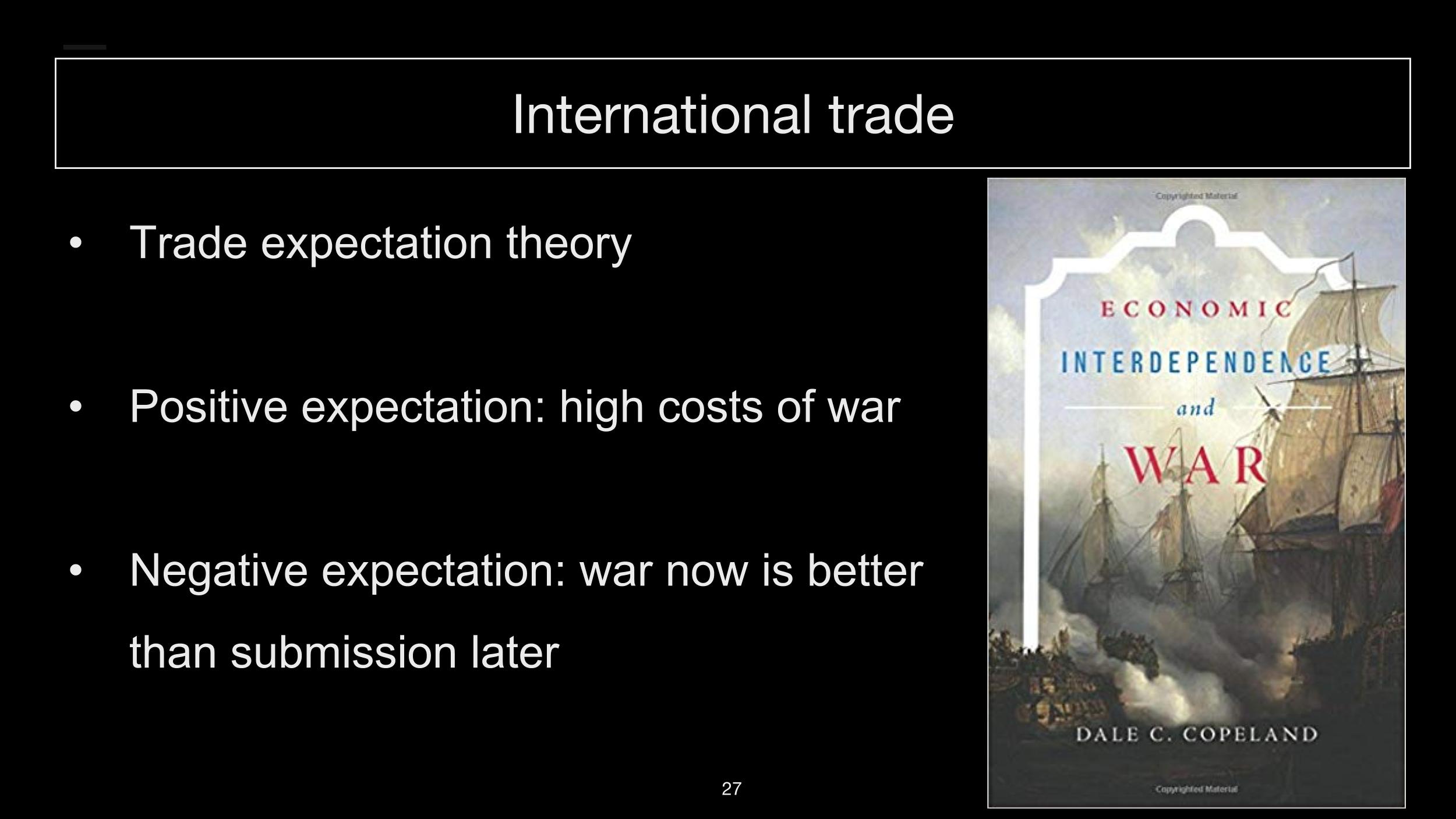

Another lever is promoting international trade. The book Economic Interdependence and War proposes the theory of trade expectations for predicting whether increased trade could reduce the risk of war. If state leaders have positive expectations about the future, then they will believe in the benefits of peace and see the high cost of war. However, if they fear economic decline and the potential loss of foreign trade and investment, then they might believe that war now is actually better than submission later. So it is probably a mistake to believe that promoting trade in general is robustly useful; it may only help under specific circumstances.

Within specific and conventional risk, it seems that work on international arms control may improve stability. Recently the nonprofit International Campaign to Abolish Nuclear Weapons brought about the Treaty on the Prohibition of Nuclear Weapons. They were awarded the Nobel Peace Prize in 2017.

Recently, there has also been a campaign to take nuclear weapons off hair-trigger alert. However, the campaign and the treaty have not been around for that long, so the impacts of these initiatives are yet to be seen. With the emerging sources of risk, it seems that the space is heavily bottlenecked by under-defined and entangled research questions. It’s possible to make progress on this issue just by finding out what the most important questions in the space are, and what the structure of the space is like.

Now, what are the implications for the effective altruism community?

Many people in the community believe that improving the long-term future of civilization is one of the best ways to make a huge, positive impact.

Both the Open Philanthropy Project and 80,000 Hours have expressed the view that reducing great power conflicts, and improving international peace, could be promising areas to look into.

Throughout the talk I expressed my view through the following arguments:

It seems that the idea of Long Peace is overly optimistic, as suggested by diverse perspectives of technical analysis, expert forecasting, and international relations theories.

Great power conflicts can be understood as a risk factor that could lead to human extinction either directly, such as through nuclear winter, or indirectly, through a wide range of scenarios.

It seems that there are some neglected niches that arise from the development of novel emerging technologies. I gave examples of molecular nanotechnology, gene-editing, and space mining.

I’ve expressed significant uncertainty about the solvability of the issue; however, my best guess is that doing some disentanglement research is likely to be somewhat useful.

Additionally, it seems that there is a comparative advantage for the EA community in working on this problem. A lot of people in the community share strong cosmopolitan values, which could be useful for fostering international collaboration rather than being attached to national interests and national identities. The community can also bring a culture of explicit prioritization and long-termist perspectives to the field. Some people in the community are also familiar with concepts such as the Unilateralist’s Curse, Information Hazards, and Differential Technological Progress, which could be useful for analyzing emerging technologies and their associated risks.

All things considered, it seems to me that risks from great power conflicts really could be the Cause X that William MacAskill talks about. In this case, it wouldn’t be a moral problem that we have not discovered. Instead, it would be something that we’re aware of today but which, for bad reasons, we have deprioritized. Now my main recommendation here is that a whole lot more research should be done, so this is a small list of potential research questions.

I hope this talk can serve as a starting point for more conversations and research on the topic. Thank you.

Questions

Nathan: Well, that’s scary! How much do you pay attention to current news, like 2018 versus the much zoomed out picture of the century timeline that you showed?

Brian: I don’t think I pay that much attention to current news, but I also don’t look at this problem just on a century timeline perspective. I guess from the presentation, it would be something that is possible in the next two to three decades. I think that more research should be done on emerging technologies, but it seems with space mining, with geoengineering, these are possible in the next 10 to 15 years, but I’m not sure whether paying attention to the everyday political trends would be the most effective use of the time of effective altruists in terms of analyzing long-term trends.

Nathan: Yeah. It seems also that a lot of the scenarios that you’re talking about remain risks even if the relationships between great powers are superficially quite good, because the majority of the risk is not even in direct hot conflict, but in other things going wrong via rivalry and escalation. Is that how you see it as well?

Brian: Yeah, I think so. I think the reason why I said that it seems like there is some neglected niche in the issue is that most of the international relations experts and scholars are not paying attention to these emerging technologies. And these technologies could really change the structure and the incentives of the countries, so even if China-US relations appear to be… well, that’s a pretty bad example because now it’s not going that well, but suppose in a few years some international relations appear to be pretty positive, the development of powerful technologies could still just change dynamics from that state.

Nathan: Have there been a lot of near misses? We know about a few of the nuclear near misses. Have there been other kinds of near misses where great powers nearly entered into conflict, but didn’t?

Brian: Yeah. I think one paper shows that there were almost 40 near misses, and I think that was put up by the Future of Life Institute, so some people can look up that paper. I think that in general it seems that experts agree some of the biggest risks from nuclear weapons would be accidental use, rather than deliberate and malicious use between countries. That might be something that people should look into, just on improving the detection systems and improving the technical robustness of the reporting, and so forth.

Nathan: It seems like one fairly obvious career path that might come out of this analysis would be to go into the civil service and try to be a good steward of the government apparatus. What do you think of that, and are there other career paths that you have identified that you think people should be considering as they worry about the same things you’re worrying about?

Brian: Yeah. I think apart from the civil service, working at think tanks also seems plausible, and if you are particularly interested in the development of emerging technologies like the examples I have given, then it seems that there are some relevant EA organizations that would be interested. FHI would be one example, and I think doing some independent research could also be somewhat useful, especially if we are still in a stage of disentangling the space. It would be good to find out what some of the most promising topics are to focus on.

Question: What effect do you think climate change has on the risk of great power conflicts?

Brian: I think that one scenario that I’m worried about would be geoengineering. Geoengineering is like a plan B for dealing with climate change, and I think that there is a decent chance that the world won’t be able to deal with climate change in time otherwise. In that case, we would need to figure out a mechanism by which countries can cooperate and govern the deployment of geoengineering. One example would be China and India: they are geographically very close, and if one of them decided to deploy geoengineering technologies, that would also affect the climatic interests of the other. So, disagreement and conflict between these two countries could be quite catastrophic.

Nathan: What do you think the role in the future will be for international organizations like the UN? Are they too slow to be effective, or do you think they have an important role to play?

Brian: I am a little bit skeptical about the roles of these international organizations, for two reasons. One is that these emerging technologies are being developed very quickly, and so if you look at AI, I think that nonprofits and civil society initiatives and firms will be able to respond to these changes much more quickly, instead of going through all the bureaucracy of the UN. Also, it seems that historically nuclear weapons and bio-weapons were mostly driven by the development of countries, but with AI, and possibly with space mining, perhaps with gene-editing, private firms are going to play a significant role. I think I would be keen to explore other models, such as multi-stakeholder models, firm-to-firm, or lab-to-lab collaboration. And also possibly the role of epistemic communities between researchers in different countries, and just get them to be in the same room and agree on a set of principles. The Asilomar Principles regulated biotechnology 30 years ago, and now we have a converging discourse and consensus around an Asilomar Conference on AI, so I think people should export these conference models in the future as well.

Nathan: A seemingly important factor in the European peace since World War II has been a sense of European identity, and a shared commitment to that. Do you think that it is possible or desirable to create a global sense of identity that everyone can belong to?

Brian: Yeah, this is quite complicated. I think that there are two pieces to it. First, the creation of a global governance model may exacerbate the risk of global permanent totalitarianism, so that’s a downside that people should be aware of. But at the same time, there are benefits of global governance in terms of better cooperation and security that seem to be really necessary for regulating the development of synthetic biology. So, a more widespread use of surveillance might be necessary in the future, and people should not disregard this possibility. I’m pretty uncertain about what the trade-off is there, but people should be aware of this trade-off and keep doing research on this.

Nathan: What is your vision for success? That is to say, what’s the most likely scenario in which global great power conflict is avoided? Is the hope just to manage the current status quo effectively, or do we really need a sort of new paradigm or a new world order to take shape?

Brian: I guess I am hopeful for cooperation based on a consensus on the future as a world of abundance. I think that a lot of the framework that went into my presentation was around regulating and minimizing the downside risk, but I think it’s possible to foster international cooperation around the positive future. Just look at how much good we can create with safe and beneficial AI. We can potentially have universal basic income. If we cooperate on space mining, then we can go to space and just have amazing resources in the cosmos. I think that if people have an emerging view on the huge benefits of cooperation, and the irrationality of conflict, then it’s possible to see a pretty bright future.

Possibly you are thinking of the Global Catastrophic Risk Institute, and Baum et al.’s A Model for the Probability of Nuclear War?

Interesting talk. I agree with the core model of great power conflict being a significant catastrophic risk, including via leading to nuclear war. I also agree that emerging tech is a risk factor, and emerging tech governance a potential cause area, albeit one with uncertain tractability.

I would have guessed AI and bioweapons were far more dangerous than space mining and gene editing in particular; I’d have guessed those two were many decades off from having a significant effect, and preventing China from gene editing seems low-tractability. Geoengineering seems low-scale, but might be particularly tractable since we already have significant knowledge about how it could take place and what the results might be. Nanotech seems like another low-probability high-impact uncertain-tractability emerging tech, though my sense is it doesn’t have as obvious a path to large-scale application as AI or biotech.

--

The Taleb paper mentioned is here: http://www.fooledbyrandomness.com/longpeace.pdf

I don’t understand all the statistical analysis, but the table on page 7 is pretty useful for summarizing the historical mean and spread of time-gaps between conflicts of a given casualty scale. As a rule of thumb, average waiting time for a conflict with >= X million casualties is about 14 years * sqrt(X), and the mean absolute deviation is about equal to the average. (This is using the ‘rescaled’ data, which buckets historical events based on casualties as a proportion of population; this feels to me like a better way to generalize than by considering raw casualty numbers.)
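
The rule of thumb above can be written as a tiny function. This is only a sketch of the approximation quoted in this comment, not a reproduction of the paper’s table: the function name `rule_of_thumb_wait_years` and the `scale_years` parameter are my own labels, and the 14-year constant is the rough figure given above.

```python
import math


def rule_of_thumb_wait_years(casualties_millions: float,
                             scale_years: float = 14.0) -> float:
    """Approximate average waiting time (in years) between conflicts with
    at least `casualties_millions` million casualties.

    Implements the rough approximation wait ~= scale_years * sqrt(X).
    The default of 14 years is the rule-of-thumb constant quoted in the
    comment above, not an exact value from the paper.
    """
    if casualties_millions <= 0:
        raise ValueError("casualties_millions must be positive")
    return scale_years * math.sqrt(casualties_millions)


# Illustrative casualty thresholds, in millions (chosen arbitrarily).
for x in (1, 4, 25, 100):
    print(f">= {x} million casualties: ~{rule_of_thumb_wait_years(x):.0f} years")
```

Because the mean absolute deviation is about as large as the average, these numbers are best read as very rough central estimates.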

They later mention that the data are consistent with homogeneous Poisson distributions, especially for larger casualty scales. That is, the waiting time between conflicts of a given scale can be modeled as exponentially distributed, with a mean waiting time that doesn’t change over time. So looking at that table should in theory give you a sense of the likelihood of future conflicts.
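
To make the exponential waiting-time model concrete, here is a minimal sketch. The function names are hypothetical, and the 140-year mean in the usage lines is just the rule-of-thumb value for X = 100 from above, used as an illustrative assumption rather than a figure taken from the paper.

```python
import math


def prob_at_least_one(mean_wait_years: float, horizon_years: float) -> float:
    """Probability of at least one conflict within `horizon_years`, assuming
    waiting times are exponentially distributed with the given mean
    (i.e. a homogeneous Poisson process)."""
    if mean_wait_years <= 0 or horizon_years < 0:
        raise ValueError("mean must be positive and horizon non-negative")
    return 1.0 - math.exp(-horizon_years / mean_wait_years)


def prob_after_quiet_period(mean_wait_years: float,
                            quiet_years: float,
                            horizon_years: float) -> float:
    """Same probability, conditional on `quiet_years` having already passed
    with no conflict. Computed directly from the survival function so that
    the memoryless property is visible in the result."""
    survive_quiet = math.exp(-quiet_years / mean_wait_years)
    survive_both = math.exp(-(quiet_years + horizon_years) / mean_wait_years)
    return (survive_quiet - survive_both) / survive_quiet


# Assumed mean waiting time of 140 years (rule of thumb for X = 100).
p_fresh = prob_at_least_one(140.0, 70.0)
p_after_peace = prob_after_quiet_period(140.0, 70.0, 70.0)
print(f"P(conflict in next 70 years):                      {p_fresh:.3f}")
print(f"P(conflict in next 70 years | 70 quiet years past): {p_after_peace:.3f}")
```

Under these assumptions the chance of at least one such conflict in a 70-year window is about 0.39, and it is the same whether or not the previous 70 years were peaceful. That is the sense in which a long gap between major wars is not, by itself, evidence of a trend under this model.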

But I think it’s very likely that, as Brian notes in the talk, nuclear weapons have qualitatively changed the distribution of war casualties, reducing the likelihood of say 1-20 million casualty wars but increasing the proportion of wars with casualties in the hundreds of millions or billions. I suspect that when forecasting future conflicts it’s more useful to consider specific scenarios and perhaps especially-relevant historical analogues, though the Taleb analysis is useful for forming a very broad outside-view prior.