Thanks for the response, and to be honest it’s something I’d agree with too. I’ve edited my initial comment to better reflect what’s actually true. I wouldn’t call the EA Global I’ve been to an “AI Safety Conference,” but if the Bay Area one is truly different, that wouldn’t surprise me. “Well-funded” is also subjective, and I think I was likely letting my reflexive defensiveness get in the way of engaging directly. That said, I think the broader points still stand: the video exposes a weakness in EA comms, and the comments reflect broadly low-trust attitudes toward ideas like EA. I hope people continue to engage with them.

Charlie G 🔹

That’s the thing that gets me here: the TikTok itself is not primarily mean-spirited. (I would recommend watching it; it’s 3 minutes, and while it did make me cringe, there was definitely a decent amount of thought put into it!) Some of the commenters are a bit mean-spirited, I won’t deny, but some are also just jaded. The problem, as I see it, is that the “thoughtful media” idea of EA, which this person embodies, says that EA has interesting philosophical grounding and also has a lot of weird Silicon Valley stuff going on. Content like this is exactly what we should be hoping to influence.

Hey y’all,

My TikTok algorithm recently presented me with this video about effective altruism, with over 100k likes and (TikTok claims) almost 1 million views. This isn’t a ridiculous amount, but it’s a pretty broad audience to reach with one video, and it’s not a particularly kind framing of EA. As far as criticisms go, it’s not the worst: it starts with Peter Singer’s thought experiment and takes the moral imperative seriously as a concept, but it also frames several EA and EA-adjacent activities negatively, saying EA, quote, “has an enormously well funded branch … that is spending millions on hosting AI safety conferences.”

I think there are a few things to take from it. The first relates to @Bella’s recent argument that EA should be doing more to actively define itself. This is what happens when it doesn’t. EA is legitimately interesting to learn about because it asks an interesting question; I assume that’s what drew many of us here to begin with. It’s interesting enough that when outsiders make videos like this, even when they’re not the picture we’d prefer,[1] they will capture the attention of many. This video is a significant impression, but it’s not the end-all-be-all, and we should seek to define ourselves lest we be defined by videos like it.

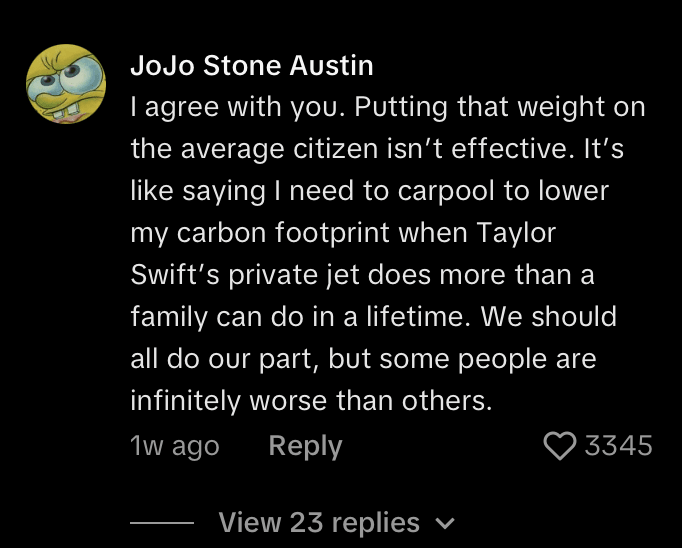

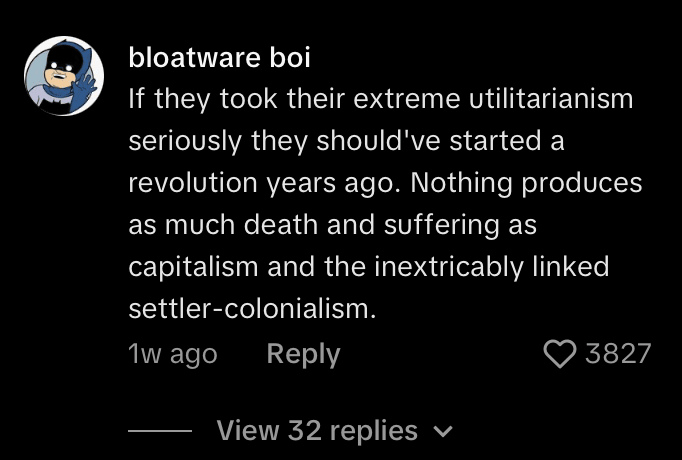

The second is about zero-sum attitudes and leftism’s relation to EA. In the comments, many views like this were presented:

@LennoxJohnson grappled with this really thoughtfully a few months ago, describing his journey from a zero-sum form of leftism focused on the need for structural change toward greater sympathy for the orthodox EA approach. But I don’t think we can necessarily depend on similar reckonings happening to everyone, all at the same time. Here the solution is much less clear than for the PR problem: on the one hand, I think EA sometimes doesn’t grapple enough with systemic change; on the other, I think society would be dramatically better if more people took an EA outlook toward alleviating suffering.

Personally, I’m partial to demonstrating virtue as one of the primary ways of showing that it’s possible to create improvement without systemic change. If EAs are directly helping people with whatever they have, it’s harder for anyone to position themselves above people who are doing that. In particular, I keep hearing about GiveDirectly as a way of doing this. When you’re directly giving money to people much poorer than yourself, there’s something to that which really can’t be ignored. Money is agency in today’s society, and directly giving someone money is a form of charity that is much harder to read as paternalistic or short-sighted; it’s just altruistic.

GiveDirectly is already the benchmark against which GiveWell evaluates charities; I think it’s worth emphasizing that even more within the movement and in our outreach efforts. That isn’t to say I think it should supplant x-risk reduction and AI safety work; I think those are still extremely important and neglected in society at large. But if EA wants to be a mass movement, it has a fundamental problem with what it is.

A few months ago, I ran into a service worker who could not be regarded as an EA by any stretch. But he was telling me about this new charity he’d heard about, GiveDirectly, and how giving to it felt like going around the charity industry and helping without working through existing power structures. In my opinion, people like this should form the core of a broader EA movement. I think it’s possible to have a movement based primarily on the idea of doing good, where many members donate 1-10% of their income to charity, engage with EA ideas roughly weekly, and can be activated when they see something clearly dangerous to our long-term future. To some extent, I think that’s what EA as a movement should strive for. EA and 80k should be distinct, and right now there is no distinction. @Mjreard a few months ago expressed this as EA needing fans (donors) rather than players (direct workers). We can and should work towards that world.

[1] The speaker says EA spends “millions on AI safety conferences,” which is fairly inaccurate though not 100% wrong: that is EA Global’s budget, and AI safety is a major topic of discussion there, though not the only focus. She also says AI safety is “particularly well-funded,” which is basically untrue right now in the broader world, but isn’t pants-on-fire wrong strictly within the EA world. I’ve retracted this section following @NickLaing’s comment.

The OpenAI Governance Transition: The History, What It Is, and What It Means

Running EA Oxford Socials: What Worked (and What Didn’t)

After someone reached out to ask about my experience running socials for the EA Oxford group, I sent him a summary and was encouraged to share it more widely. So here’s a brief summary of what I found from a few terms of hosting EA Oxford socials.

The Power of Consistency

We hosted an event every week at the same time. I strongly recommend this, or some other kind of consistent schedule, as it lets people form a routine around your events and can help create EA-aligned friend groups. Regardless of the event we were hosting, we had a solid core of about five people who were there basically every week, which was very helpful. We tended to have 15 to 20 people per event, with fewer at the end of term as people got busy finishing tutorials.

Board Game Socials

Of the types of socials I tried, board game socials tended to work best. No real structure was necessary: just have a few committed EAs there to set the tone, so it really feels like “EA board games,” and then let people play. The games act as a natural conversation starter. I especially recommend casual games; Codenames and Coup were particular favorites at my socials, but I can imagine many others working too. Deeper games have a place as well, but they generally weren’t the focus. In the first two terms, we just held one of these every week. They gave people a way to talk about EA stuff in a more casual environment than the discussion groups or fellowships.

“Lightning Talks”

We also ran “Lightning Talks” fairly effectively: basically EA PowerPoint nights. Since this was Oxford, we could typically get at least one EA-aligned researcher or professional there every week we did it (which was every other week), and the rest of the time was filled with community member presentations (typically 5 to 10 minutes each). These seemed best at re-engaging people who had signed up once but lost contact with EA. My guess is that this is primarily because EA-inclined people often joined partly because of that lecture-appreciating personality. In the third term, we ended up alternating weeks between lightning talks and board game socials.

Other Formats

Other formats, including pub socials and one-off games (like the estimation game, or speed updating) seemed less effective, possibly just due to lower name recognition. Everyone knows what they’re getting with board games, and they can figure out lightning talks, but getting too creative seemed to result in lower turnout.

Execution Above All

Probably more important than which event we ran was running it well. We found that having (vegan) pizza and drinks ready before the social, and arriving 20 minutes early to set things up, dramatically improved retention. People really like well-run events: it helps them relax and enjoy themselves rather than wondering when the pizza will arrive, and I think that’s especially true of student clubs, where organizational competence is never guaranteed.

I’ve been considering writing something similar for a while, so I’m really glad you posted this (I honestly lacked the courage to do it myself).

My own experience aligns with your altruism-first approach. I got involved with EA Oxford through that route, and when I took over organizing our socials (initially just because I was willing and because of my college’s booking policy), our primary organizer noted how surprisingly effective they were at engaging people.

I’d been planning to bring this social-first model back to my home university, but I’ve been hesitant to buck the conventional wisdom about what works for EA groups. Despite some current issues with my school’s activities council, I was defaulting to running an intro fellowship. Your post makes me reconsider—having evidence from different EA group models could be really valuable.

On pitching EA: I completely agree about reorienting our pitches. At this year’s activities fair, I essentially A/B tested different approaches. The most effective ones focused on “helping people do as much good as possible, whatever that ends up meaning to them,” then describing areas others have found effective through their own frameworks.

On “fellowships”: The term itself reinforces the exclusivity and hierarchy issues you mention. It positions itself as the path into EA, but I don’t think it’s particularly good at that role. We should be introducing ideas and encouraging exploration and sharing, not gatekeeping.

On philosophical grounding: EA often gets bogged down in philosophical prerequisites when our core appeal is simple: people want to do good effectively. We don’t need everyone to choose a philosophical framework first. The desire to save lives can come from virtue ethics, deontology, or just basic human compassion.

This reminds me of a conversation I had with a food service worker at Mission Burrito last year. He was drawn to GiveDirectly as an alternative to what he saw as a corrupt charity world. His entry point was completely different from the typical EA pathway, and it made me realize how many people we might be missing by not meeting them where they are.

I agree that the timing is to some extent a coincidence, especially considering that the TIME piece followed an Anthropic board appointment that must have been months in the making, but I’m also fairly confident that your piece shaped at least part of the TIME article. As far as I can tell, you were the first person to raise the concern that large shareholders, potentially Amazon and Google in particular, could end up overruling the LTBT and annulling it. The TIME piece addressed that concern quite directly, saying:

The Amazon and Google question

According to Anthropic’s incorporation documents, there is a caveat to the agreement governing the Long Term Benefit Trust. If a supermajority of shareholders votes to do so, they can rewrite the rules that govern the LTBT without the consent of its five members. This mechanism was designed as a “failsafe” to account for the possibility of the structure being flawed in unexpected ways, Anthropic says. But it also raises the specter that Google and Amazon could force a change to Anthropic’s corporate governance.

But according to Israel, this would be impossible. Amazon and Google, he says, do not own voting shares in Anthropic, meaning they cannot elect board members and their votes would not be counted in any supermajority required to rewrite the rules governing the LTBT. (Holders of Anthropic’s Series B stock, much of which was initially bought by the defunct cryptocurrency exchange FTX, also do not have voting rights, Israel says.)

To me, it would be surprising if this section were added without your post in mind. Again, as far as I can tell, your post is the only place this concern was raised before the article was published.

Charlie G’s Quick takes

On May 27, 2024, Zach Stein-Perlman argued on here that Anthropic’s Long-Term Benefit Trust (LTBT) might be toothless, pointing to unclear voting thresholds and the potential for large shareholder dominance, such as from Amazon and Google.

On May 30, 2024, TIME ran a deeply reported piece confirming key governance details, e.g., that a shareholder supermajority can rewrite LTBT rules but (per Anthropic’s GC) Amazon and Google don’t hold voting shares, speaking directly to the concerns raised three days earlier. TIME also reviewed the incorporation documents with Anthropic’s permission and interviewed experts about them, confirming some details about when exactly the LTBT would gain control of board seats.

I don’t claim that this is causal, but the article’s engagement with specific points from Stein-Perlman’s post that hadn’t previously been widely examined, together with the timeline of the two pieces, suggests to me some degree of conversation between them. It points toward this being an example of how EA Forum posts can shape discourse around AI safety. It also supports the idea that if you see addressable concerns about Anthropic in particular, or AI safety companies in general, posting them here could be a way of influencing the conversation.

We should slow AI down

I think anywhere between 9 (8?) and 15 is acceptable. To me, AI seems to have tremendous potential to alleviate suffering if used properly. At the same time, basically every sign you could possibly look at tells us we’re dealing with something potentially immensely dangerous. If you buy into longtermist philosophy at all, as I do, that implies a certain duty to ensure safety. I kinda don’t like going above 11, as it starts to feel authoritarian, which I have a very strong aversion to, but on non-deontological grounds it seems correct.

If you’re an EA in California or Delaware and believe OpenAI has a significant chance of achieving AGI first and of there being a takeoff, it’s probably a good use of time to write a letter to your state Attorney General encouraging them to pursue action against OpenAI. OpenAI’s nonprofit structure isn’t perfect, but it’s infinitely better than a purely private company would be.

Absolutely agreed that factor 2, as I mentioned it, might be insubstantial, but I felt the need to include it in case it turned out to be larger than I expected. My intuition on this issue is somewhat different from yours: my guess is that the two largest factors (remittances, and the direct effects on Nigeria of having fewer nurses) will roughly balance out, and that the answer will depend on the other issues I placed under factor 4.

You mention two of the indirect (factor 4) impacts I was thinking of, but there’s definitely a lot of that kind of impact which is difficult to measure.

On its own terms as you discuss it, I absolutely agree that the original article is flawed. The issue is certainly nowhere near as straightforward as the paper’s authors would have you believe. However, the question of the overall cost-benefit is also important and worth examining. I’m going to start working on a basic model, and I’ll post here (and maybe in a separate post as well) once I’ve completed it to a level I’m content with. The underlying tradeoff of brain drain versus remittances seems to me a very important issue in global development, and this is an example where the costs of brain drain appear higher than usual. If the effect of remittances is powerful enough to outweigh brain drain even in this case, that would strengthen the broader case for immigration as a net QALY increase.

There already does appear to be some evidence towards an effect of “brain gain” where even medical immigration appears to improve net wellbeing through remittances, as seen in the example of the Philippines here, but it’s a complex issue, and the medical situation in the Philippines is different from the situation in Nigeria.

I believe this is a good point about the CGD paper, though to some extent it depends on whether to look at overall flows rather than flows specifically to the UK. I think you make a strong case that it’s best to look at overall flows, and you show issues with the WHO per capita data. However, I don’t think this comes anywhere near settling whether this can be a “win-win” for both countries. It’s entirely possible that nurse emigration, despite lowering the supply of nurses in Nigeria, could still be a win-win due to remittances. There is abundant evidence that remittances promote financial development and long-term economic growth. To determine whether this emigration is a net benefit for both countries, one would have to take into account:

The benefit that the nurse gives in Nigeria.

The benefit the nurse gives in the UK.

The benefit to Nigeria from remittances the nurse sends back.

Any long-term effects that might come from having talented Nigerians leave for the UK, and long-term effects a nurse shortage could have in Nigeria.
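One way to make this accounting explicit is the rough sketch below. It assumes the four effects can be measured in a common unit (say, QALYs) and simply added. The symbols are my own shorthand, not anything from the paper:

- $B_{\text{NG}}$ and $B_{\text{UK}}$ are the benefit the nurse provides in Nigeria and in the UK.
- $R$ is the benefit to Nigeria from remittances.
- $L$ is the net long-term effect on Nigeria, which is negative if the talent loss and shortage dominate.

$$
\Delta_{\text{Nigeria}} = R + L - B_{\text{NG}}, \qquad \Delta_{\text{UK}} = B_{\text{UK}}
$$

Under these assumptions, a “win-win” requires both terms to be positive. Since $B_{\text{UK}}$ is presumably positive, the question mostly reduces to whether $R + L > B_{\text{NG}}$.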

To me, this seems like a complicated question that would take many hours of research to answer satisfactorily.

How do you square this with the experience of J.C. Penney, which, after eliminating sales in favor of “everyday low prices,” experienced a 20% drop in sales? It’s certainly tempting to believe that discounts and other such unnecessary promotions don’t work, but it seems that sales do to some extent stimulate, well, sales.

See https://hbr.org/2012/05/can-there-ever-be-a-fair-price.

I actually share a lot of your read here. I think the video is a very strong explanation of Singer’s argument (the shoes-for-suit swap is a nice touch), and the observation about the motivation for AI safety warrants engagement rather than dismissal.

My one quibble with the video’s content is the “extreme utilitarians” framing; as I’m one of maybe five EA virtue ethicists, I bristle a bit at the implication that EA requires utilitarianism, and in this context it reads as dismissive. It’s a pretty minor issue though.

Still, I think the video is worth providing a counter-narrative to, and that’s actually going to be my primary disagreement. For me, that counter-narrative isn’t that EA is perfect, but that taking a principled EA mindset toward problems actually leads to better solutions, and has already led to a lot of good being done in the world.

The issue with the video, which I should’ve been more explicit about in my original comment, is that in the context of TikTok, it reinforces the view of people who think trying to make the world better is futile. She presents a vision of EA that initially tried to do good (without mentioning any of the good it actually did, only the sacrifices people made for it), was then corrupted by people with impure intentions, and no longer does good.

Regardless of what you or I think of the AI safety movement, I think the people who believe in it do so seriously, and got there primarily by reasoning from EA principles. It isn’t a corruption of EA ideas about doing good, just a different way of pursuing them, though we can (and should) debate how these factors ought to be weighted. And for the most part, it hasn’t supplanted the other ways people within the movement are doing good; it has supplemented them.

When someone’s first exposure to EA ideas leads them toward the “things can’t be better” meme, I think that’s worth combatting. I don’t think EA is perfect, but I think that thinking about and acting on EA principles really can help make the world better, and to me, that’s what an ideal, simple EA counter-narrative would emphasize.