I’m a doctor working towards the dream that every human will have access to high-quality healthcare. I’m a medic and director of OneDay Health, which has launched 53 simple but comprehensive nurse-led health centers in remote rural Ugandan villages. A huge thanks to the EA Cambridge student community in 2018 for helping me realise that I could do more good by focusing on providing healthcare in remote places.

NickLaing

Thanks for this reply—I agree with most of what you have written here.

I think, though, that you’ve missed some of the biggest problems with this campaign.

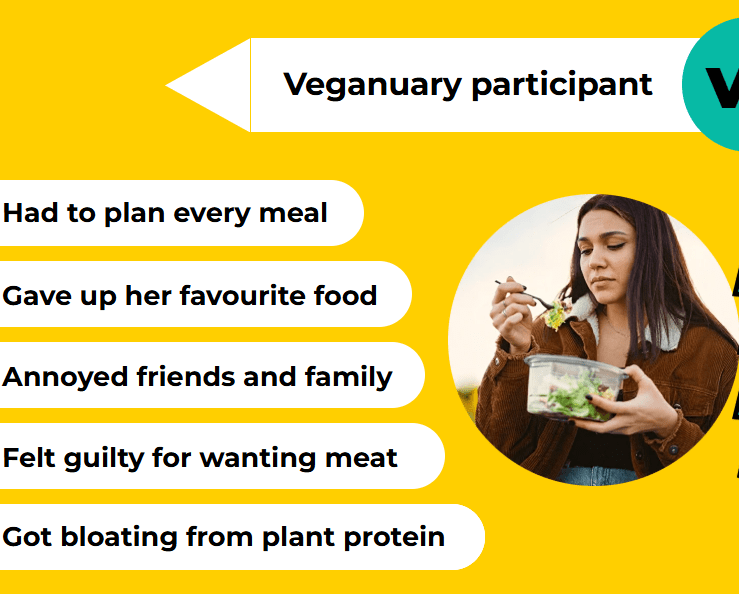

1. This seems to undermine vegans and vegetarians (see image above) and their efforts to help animals. It seems straightforwardly fair to interpret this as anti-Veganuary and anti-vegan, especially at a glance.

2. What matters in media is how you are portrayed, not what the truth is. Your initial campaign poster is ambiguous enough that it’s easy to interpret as a pro-meat-eating and anti-vegan campaign. I could have interpreted it that way myself; I don’t think the media were grossly wrong to report it like that.

The Telegraph article is actually pretty good overall and makes points that could be good for animal welfare, although the “clickbaity” title and first paragraph are unfortunate (see above).

Media coverage lasts for a day. Correcting it is the right thing to do but doesn’t have much impact.

I can see what you are trying to do here, and it’s quite clever. I love most of your stuff, but this campaign seems like a mistake to me.

You’re right that we aren’t the target audience, but I take this as evidence, if anything, in the other direction. If EAs on the Forum feel uncomfortable about this, the general public is likely to take it even worse than us.

I agree that it’s a light-hearted, clever campaign with good intentions. I just think it’s a mistake and might well do more harm than good. That’s OK; this is just one campaign among many great ones from FarmKind.

After having a quick look at this campaign, it seems pretty straightforwardly misguided and confusing. FarmKind’s efforts to appeal to regular people to donate rather than go vegan seem good and make sense, but this adversarial campaign looks and feels awful. Two reasons immediately jumped out as to why it feels off:

1. It undermines and even goads vegans and vegetarians who are doing their bit for animals.

2. Glorifying people who eat lots of meat feels bad in a guttural, almost “Kantian” way, regardless of the utilitarian calculation.

In general, I think complex utilitarian arguments are hard to communicate well in pithy campaigns.

I’m surprised the FarmKind people have made what seems like such a straightforward mistake, as I’ve been super impressed by all the other material they have put out.

I get the good intentions here, but it looks to have backfired badly. Obviously I’m not deep in this, but I hope that withdrawing the campaign and issuing a quick apology are at least on the table for you guys. All the best figuring it out!

Why does it take years to recruit a small number of patients if sufferers are so enthusiastic about this as a potential treatment?

First: “If it took an FDA approved RCT to get a family member free from torture I would not wait. And if it was illegal or broke FDA norms that would not stop me.”

I agree with this. Even if it might be a placebo effect, if a family member were this convinced that something worked, I would probably get it for them illegally. By my lights, though, this isn’t related to the question of following proper process.

Second: “suffering exists on an exponential scale, and these truly represent the end of this spectrum. This is an affliction worse than torture. Survivors of both have said cluster headaches were worse.”

This is a tragedy for sure, but I think we have to be careful not to argue as if this is the only situation where delay causes lots of suffering.

Recently a malaria vaccine rollout sat waiting a while for FDA approval. Every day of delay might have cost tens of lives (just a guess). I’m not saying waiting for FDA approval is the best situation, but this is not a special case; tragic delays happen all the time.

I do see that as a strong argument, but I still think drugs should only go to market legally once both safety and efficacy have been proven in adequately powered RCTs. I think the risk to the medical profession, and to the reputation of the drug industry, is just too high to allow anything less. There’s no reason a fast-tracked RCT couldn’t get this sorted in a year, and that seems like a reasonable way forward.

The RCT should also be against triptans, not placebo. I’m not sure why the previous small trials weren’t run against standard best practice; that seems odd to me.

If I were at the FDA reviewing this, I would still be pretty nervous about making an exception.

Interesting take, though very America-centric. China has about as many self-driving cars on the road as the US, more companies in the game, and faster scale-up plans. With less extreme regulation, why wouldn’t Chinese makers accelerate the takeoff here, and maybe even take over as they are doing with electric cars?

Point 2 is actually a really good point. Filling a 20 L jerry can at a borehole takes at least 2 minutes, including transfer time between jerry cans. Assuming 12 hours of continuous pumping from 6am to 6pm (unrealistic), that would mean 30 × 12 = 360 jerry cans, or about 7,200 litres, a day. People often do use under 20 litres a day on average despite WHO recommendations (even we do, lol), but I would say 500 people might be the absolute limit that a borehole could realistically fully serve, and even that feels quite unrealistic.
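The back-of-envelope borehole calculation can be written out as a short Python sketch. The 2 minutes per 20 L can and 12 hours of pumping come from the comment above; the 15 L per person per day is an illustrative assumption, not a measured figure.

```python
# Back-of-envelope capacity of a single hand-pump borehole.
# Figures from the comment: >= 2 minutes per 20 L jerry can, 12 hours of pumping.
# ASSUMPTION: 15 L/person/day is only an illustrative level of use below 20 L.

MINUTES_PER_JERRY_CAN = 2      # includes changeover time between cans
JERRY_CAN_LITRES = 20
PUMPING_HOURS_PER_DAY = 12     # 6am to 6pm, continuous (optimistic)


def jerry_cans_per_day(minutes_per_can: float, hours: float) -> float:
    """Number of jerry cans one pump can fill in a day."""
    return hours * 60 / minutes_per_can


def people_served(litres_per_day: float, litres_per_person: float) -> float:
    """Upper bound on people fully served if all pumped water is shared out."""
    return litres_per_day / litres_per_person


cans = jerry_cans_per_day(MINUTES_PER_JERRY_CAN, PUMPING_HOURS_PER_DAY)
litres = cans * JERRY_CAN_LITRES
print(f"{cans:.0f} jerry cans/day = {litres:.0f} L/day")
for per_person in (20, 15):
    print(f"at {per_person} L/person/day: ~{people_served(litres, per_person):.0f} people")
```

Even at 15 L per person per day, a pump running flat out for 12 hours tops out at about 480 people, which fits the rough 500-person ceiling.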

I’ve also NEVER seen a borehole that heavily used here in Uganda, but it might be possible in other places?

My math could be a bit off, but I’d never thought about it like that before. Thanks @Mihkel Viires 🔹!

EDIT: on googling, it seems that standard boreholes are usually designed to serve communities of 200 to 300 people, with up to 500 at a stretch in some cases. That stacks up with our thinking here!

Comments like this one from @Alfredo Parra 🔸, “There’s no doubt in my mind that psychedelics help many patients enormously, and at low doses for most patients,” and this one from @Curran Janssens, “This is not a placebo effect,” have updated me a little against the clusterfree initiative and this treatment specifically. This is not a good scientific approach, and it mirrors language I’ve seen from patient lobby groups that often turns out to be incorrect, or at least grossly overstated.

This could still be a placebo effect. After many years as a practising doctor, I’ve seen the power of the brain so many times. Here in Uganda I’ve seen conversion disorder multiple times that was so extreme that when someone was poked with a sharp needle (and bled) in multiple places, there was zero pain reaction. Obviously we thought there was something serious going on in these cases, but every time it turned out to be a strong psychological effect.

I agree with @Henry Howard🔸: this probably shouldn’t be legal yet. I wouldn’t call the evidence “strong” until we get a well-powered RCT against the best pain relief options currently available. “Trial or it didn’t happen,” as Henry says, is super important here, and this needs to happen before any treatment becomes an encouraged, widespread norm.

If the effect is really as strong as claimed, you wouldn’t even need 100 patients for an RCT. Perhaps clusterfree could even help make this happen faster.
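To show why a very large effect needs few patients, here is a minimal Python sketch of the standard normal-approximation sample-size formula for comparing two response proportions. The response rates below, the 5% two-sided significance level and the 80% power are hypothetical assumptions for illustration only, not figures from any cluster headache trial.

```python
import math
from statistics import NormalDist


def n_per_group(p_treatment: float, p_control: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Patients needed per arm to detect a difference between two response
    proportions (two-sided test, normal approximation, equal arm sizes)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_treatment + p_control) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p_treatment * (1 - p_treatment)
                                      + p_control * (1 - p_control))) ** 2
    return math.ceil(numerator / (p_treatment - p_control) ** 2)


# HYPOTHETICAL response rates (new treatment vs comparator), illustration only.
for p_new, p_old in [(0.80, 0.30), (0.60, 0.45)]:
    n = n_per_group(p_new, p_old)
    print(f"{p_new:.0%} vs {p_old:.0%}: {n} per arm, {2 * n} total")
```

Under these assumptions, a dramatic difference (80% vs 30%) needs only a few dozen patients in total, while a modest one (60% vs 45%) needs several hundred.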

Thanks, I’ve updated my post; I think I was wrong here. I think you’re a little optimistic with your “7 figures” comment (earning a salary of over a million dollars is no joke), but your point stands!

After @RobertM’s comment below, it seems I was wrong here, and yes, the GiveWell top team is pretty darn powerful. Thanks Robert (I just disagree-voted myself, which was fun!). I stand by the second paragraph 😊.

I would guess this might be true for a small proportion of these people, but I would personally guess that most of the top 10 GiveWell employees would not be able to earn $200k+ very easily in the private sector. We’re not “hassling anyone for making too much money” here; I don’t think anyone in this discussion begrudges any GiveWell employee their salary. We’re just discussing whether it’s actually the best idea to have salaries this high in jobs like this, which is a complicated question.

Wow, those GiveWell salaries seem high from the perspective of someone running a nonprofit. It’s hard to wrap my head around, having run an org that, for its first 5 years of operation, raised less in yearly donations than any one of those individual salaries.

Of course, this doesn’t mean those high salaries are necessarily bad, but when you’re sitting here in northern Uganda it feels like another world, that’s for sure. I might comment more on this later.

I agree in principle, @Vasco Grilo🔸, although I really doubt this is a strong consideration for very many workers, even at aligned places like GiveWell. I think few give away large proportions of their salary.

Nice one, love it; it all sounds very reasonable. With CEAs there are always so many tricky decisions to make. Keep in mind this isn’t my specific area of expertise, so don’t over-index on my suggestions too much 😊.

I’m not sure why there hasn’t been more high-quality research on wells, given how common an intervention it is. The one big RCT in Ghana I could find showed a reduction in diarrhea of about 15 percent, similar to other water-cleaning interventions.

Thanks so much for this. I love that you did this initial CEA and then revised it. For what it’s worth, even after my comments below, I think it’s entirely possible that an efficient well-drilling organisation could be more cost-effective than in-line chlorination, especially in a place like Niger with the experience this org has. Wells4Wellness seem impressive in their efficiency and transparency.

First, @GiveWell does a lot of research and I think (could be wrong) has basically decided that, for the moment, it is doubling down on in-line chlorination as a cost-effective, scalable clean water option. Part of this might be that the research on wells is pretty poor; there just isn’t the bank of RCTs we have for chlorination. Also, scaling wells cost-effectively and quickly isn’t easy.

One thing I’m super impressed with about Wells4Wellness is that they include all their expenses in their “cost of a well drilled” calculation. It’s simply “Total org money spent this year / No. of wells drilled”. Even many GiveWell orgs fudge around the edges here and don’t include management, marketing costs, etc. in their cost of operations.
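The all-in cost-per-well metric is simple enough to state as a small Python sketch. All budget figures and the well count below are made up for illustration (they are NOT Wells4Wellness numbers); the point is only how leaving out management and marketing makes the cost per well look lower.

```python
from typing import Iterable, Mapping, Optional

# HYPOTHETICAL annual spending and well count, for illustration only.
spending = {
    "drilling_and_materials": 150_000,
    "field_staff": 30_000,
    "management": 15_000,
    "marketing_and_fundraising": 5_000,
}
wells_drilled = 40


def cost_per_well(costs: Mapping[str, float], wells: int,
                  include: Optional[Iterable[str]] = None) -> float:
    """Spend divided by wells drilled; by default every category is counted."""
    keys = costs.keys() if include is None else include
    return sum(costs[k] for k in keys) / wells


all_in = cost_per_well(spending, wells_drilled)  # total org spend / wells
direct_only = cost_per_well(spending, wells_drilled,
                            include=["drilling_and_materials", "field_staff"])
print(f"All-in: ${all_in:,.0f} per well")
print(f"Direct costs only: ${direct_only:,.0f} per well")
```

With these made-up numbers, dropping management and marketing lowers the reported figure by 10%, which is the kind of gap the all-in metric avoids.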

I think you’ve done a good job on your CEA, but I still feel you’re a bit optimistic in your DALY calculation, mainly due to unrealistic time horizons and a lack of discounting. Here are a few reasons why…

(My biggest issue) Your 50-year estimate of well lifespan seems unrealistic and doesn’t match the time horizons used in other cost-effectiveness analyses. Here in Northern Uganda I haven’t seen boreholes last more than 30ish years (many don’t last that long). When I did our initial CEA for our OneDay Health centers, I capped it at 5 years even though they will likely persist much longer. I think 10 to 15 years might make more sense as a time horizon; more than that is tough to justify given how hard the future is to predict. A lot of things could change in the meantime: political upheaval can make wells redundant, urbanisation could pull populations away from the borehole (likely), piped water might reach more places and make them redundant (see India), and wells might break faster than predicted…
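To show how much the time horizon and discounting matter, here is a small Python sketch. The 50-, 15- and 5-year horizons come from the discussion; the 1 DALY averted per well per year and the 4% annual discount rate are illustrative assumptions, not figures from this CEA.

```python
def discounted_dalys(annual_dalys: float, years: int, rate: float) -> float:
    """Present value of a constant stream of DALYs averted, discounting
    year t's benefit by (1 + rate) ** t (benefits counted at year end)."""
    return sum(annual_dalys / (1 + rate) ** t for t in range(1, years + 1))


ANNUAL_DALYS_PER_WELL = 1.0   # ASSUMPTION: placeholder, scales results linearly
DISCOUNT_RATE = 0.04          # ASSUMPTION: illustrative annual discount rate

for years in (50, 15, 5):
    raw = discounted_dalys(ANNUAL_DALYS_PER_WELL, years, 0.0)
    disc = discounted_dalys(ANNUAL_DALYS_PER_WELL, years, DISCOUNT_RATE)
    print(f"{years:>2} years: {raw:5.1f} undiscounted, {disc:5.1f} discounted")
```

Under these assumptions, a 50-year undiscounted horizon credits a well with roughly 4.5 times the benefit of a 15-year discounted one (50 vs about 11.1).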

Costs of repair and replacement parts might skyrocket once wells are more than 10 years old, and the boreholes drilled by Wells4Wellness are still very new. I can’t see only $200 a year being realistic for more than 5 years or so. I’d be interested to know how much is being spent on maintaining their oldest boreholes.

Diarrhoea morbidity has improved over time, and that will likely continue. I don’t think it’s reasonable to take a 2018 DALY estimate, project it into the future (especially not 50 years), and then attribute it all to wells. The 2023 Global Burden of Disease estimate was 330 DALYs per 1,000, down from 360ish in 2018 (already a bit lower than your estimate).
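The falling burden can also be folded into a projection. This Python sketch derives an implied constant annual change from the two Global Burden of Disease figures quoted (about 360 in 2018, 330 in 2023) and assumes, purely for illustration, that the same trend continues.

```python
BURDEN_2018 = 360.0   # DALYs per 1,000, as quoted ("360ish")
BURDEN_2023 = 330.0   # DALYs per 1,000, as quoted


def annual_change(start: float, end: float, years: int) -> float:
    """Constant annual rate of change implied by two observations."""
    return (end / start) ** (1 / years) - 1


def cumulative_burden(start: float, rate: float, years: int) -> float:
    """Sum of yearly burden over `years`, starting at `start` and
    changing by `rate` each year (rate = 0.0 gives a flat projection)."""
    return sum(start * (1 + rate) ** t for t in range(years))


# ASSUMPTION: the 2018 to 2023 trend holds for the whole horizon.
rate = annual_change(BURDEN_2018, BURDEN_2023, 5)
print(f"Implied annual change: {rate:.1%}")
for horizon in (15, 50):
    flat = cumulative_burden(BURDEN_2023, 0.0, horizon)
    falling = cumulative_burden(BURDEN_2023, rate, horizon)
    print(f"{horizon} years: declining burden is {falling / flat:.0%} of a flat projection")
```

If that trend held, the cumulative burden over 50 years would be roughly two-thirds of a flat projection (nearly 90% over 15 years), before any discounting.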

(Small) I got 18%, not 20%, for the share of Niger’s population under 5, via the World Bank/ChatGPT, but no big deal.

The estimate of each well serving 1,200 people seems on the high end to me (but I’m uncertain); population density would need to be fairly high for that to be realistic, and most of Niger has pretty low population density. Unfortunately, people will usually opt for the closest water source, even if the clean one is only a little further away. You wouldn’t believe how many people use the dirty pond near my house when there are clean options a bit further away.

(On the positive side) I think you are pretty conservative with your mortality reduction figures, which is nice, as you don’t include the Mills-Reincke effect here.

As a side note (not so important), I don’t think the studies you cited add much to the discussion, as their quality isn’t great. They are broad cross-sectional studies, which can’t (and don’t claim to) even hint much at causality. The first study reflects poorly on BMC Global Health and its review system. How does a peer-reviewed study get away with a first sentence that says…

“Diarrhea, the second leading cause of child morbidity and mortality worldwide, is responsible for more than 90% of deaths in children under 5 years of age in low and middle-income countries (LMICs).”

I would love to know what percentage the leading cause of mortality makes up, then. I mean, I can’t even… I hope it’s just a typo…

I’m not sure if this is a general question or for me in particular?

I’m happy to take a little responsibility, although I don’t know what that really means in practice. Generally in organisations and movements, the leadership (for better or worse) bears the brunt of the responsibility for failings. I’m not in any EA leadership position, so in practice I don’t bear much responsibility, just a bit of backlash from anti-EA people.

On a personal note, I mostly jumped on board after FTX too, and have nothing to do with AI shenanigans. When the next big scandal happens I’ll likely take it on the chin too; I’m not one to run quickly from something I have decided is good.

Hey, I’m wondering what you mean by “leave EA” here. First, it’s not clear to me what “leave” means in practice. Second, FWIW, I call myself an Effective Altruist, and I don’t feel like I need to sign up to the extent/standards you do to carry that label.

I call myself an EA because I’m committed to “Finding the best way to help others” and “Turning good intentions into impact” (love these from the CEA website). In addition, I’ve been impressed by the character and heart of the EAs I have met who do Global Development work, and I appreciate the development discourse on the Forum (although there is less material year on year).

I feel like people will have diverse reasons for identifying as an “EA” from your nice list, whether that’s community, the mindset, the online discourse or a combination of them all. Some might have vaguer reasons which is all good too.

Also, I suspect I’m just in far less deep than you were, so it’s harder for me to identify with your experience. I can also imagine the AI/GCR community and the disagreements within it are more fraught than within GHD.

I think orgs are responsible for weighing other factors besides just this. The positive and negative effects of high or low salaries are many and varied; I think “leave it to the strange NGO marketplace” doesn’t tell the whole story.

Love this comment so, so much! My only minor disagreement is that I think the Forum isn’t a bad place to have a bit of a “vibes-based” conversation about a campaign like this. Then we can move on to great analysis like yours right here.