pre-doc at Data Innovation & AI Lab

previously worked in options HFT and tried building a social media startup

founder of Northwestern EA club

Charlie_Guthmann

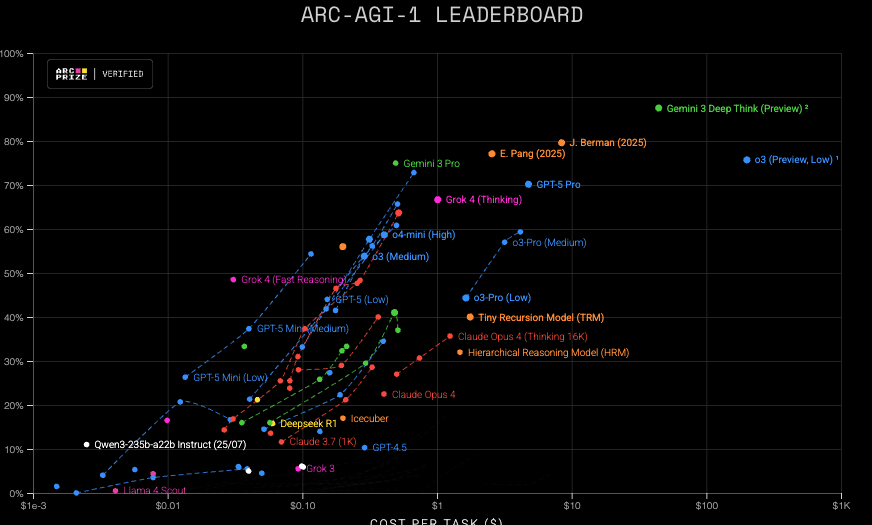

Yeah, doesn’t the ARC leaderboard show somewhat opposing trends? https://arcprize.org/leaderboard

You might want to check this out (only indirectly related, but maybe useful).

Personally, I don’t mind “o-risk” and think it has some utility, but “s-risk” still seems to (somewhat) work here. Isn’t an o-risk just a smaller-scale s-risk?

What do people think of the idea of pushing for a constitutional convention/amendment? The coalition platform would be ending presidential immunity + reducing the pardon power + banning stock trading for elected officials. Probably politically impossible, but if there were ever a time, it might be now.

tl;dr: I wrote some responses to individual sections; I don’t think I have an overall point. I think this line of argument deserves to be taken seriously, but this post may be trying to do too much at once. The main argument is simply cluelessness + short-term positive EV.

In virtually every other area of human decision-making, people generally accept without much argument that the very-long-term consequences of our actions are extremely difficult to predict.

I’m a little confused about what your argumentative technique is here. Is the fact that most humans do something the core component? Wouldn’t this immediately disqualify much of what EAs work on? Or is this just a persuasive technique, and you mean roughly: “Most humans think this for reason x. I also think this for reason x, though the fact that most humans think it matters little to me”?

For me, “most humans do x” is not an especially convincing argument for anything.

I don’t want to get bogged down on cluelessness, because there are many lengthy discussions elsewhere, but I’ll say that cluelessness depends on the question. If you told me what the rainforest looked like and then asked me to guess the animals, I wouldn’t have a chance. If you asked me to guess whether they ate food and drank water, I think I would do decently. Or a more on-the-nose example: if you took me back 5 million years and asked me to guess what would happen to the chimps if humans came to exist, I wouldn’t be able to predict many specifics, but I might be able to predict that (1) humans would become top dog, with less certainty that (2) the chimp population would go down, and with even less certainty that (3) chimps would go extinct. That’s why the horse model gets so much play: people have some level of belief that certain outcomes might be less chaotic if modeled correctly.

To wrap up, I think your first four paragraphs could be shortened to your unique views on cluelessness (specifically w.r.t. AI?) + discount rates/whatever other unique philosophical axioms you might hold.

Understood in this way, AI does not actually pose a risk of astronomical catastrophe in Bostrom’s sense.

To be clear, neither does the asteroid. Aliens might exist, and our survival similarly presents a risk of replacement for all the alien civilizations that won’t have time to biologically evolve as humans (or AI from Earth) speed through the light cone. Also, even if there are no aliens, we have no idea whether, conditional on humans being grabby, utility is net positive or negative. There isn’t even agreement on this forum, or in the world, on whether there is such a thing as a negative life. I don’t think I’m arguing against you here, but it feels like you are being a little loose (I don’t want to be too pedantic, as I can totally understand if you are writing for a more general audience).

Now, you might still reasonably be very concerned about such a replacement catastrophe. I myself share that concern and take the possibility seriously. But it is crucial to keep the structure of the original argument clearly in mind. … Even if you accept that killing eight billion people would be an extraordinarily terrible outcome, it does not automatically follow that this harm carries the same moral weight as a catastrophe that permanently eliminates the possibility of 10^23 future lives.

Well, I have my own “values”. Just because I die doesn’t mean these disappear. I’d prefer that those 10^23 lives aren’t horrifically tortured, for instance.

Though I say this with extremely weak confidence, I feel like in the case where a “single agent/hivemind” misaligned AI immediately wipes us all out, it probably is not going to convert resources into utility as efficiently as I would (by my current values), and thus this might be viewed as an s-risk. I’m guessing you might say that we can’t possibly predict that, but then can we even predict whether those 10^23 lives will be positive or negative? If not, I’m not sure why you brought any of this up anyway. Bostrom’s whole argument is predicated on the assumption that Earth-descended life is +EV, which in turn is predicated on either not being clueless or having a very kumbaya pronatal moral philosophy.

So, I guess even better for you: from my POV, you don’t even need to counter-argue this.

Virtually every proposed mechanism by which AI systems might cause human extinction relies on the assumption that these AI systems would be extraordinarily capable, productive, or technologically sophisticated.

I might not be especially up to date here. Can’t it, e.g., cause nuclear fallout? Totalitarian lock-in? The Matrix? Extreme wealth and power disparity? Is there agreement that the only scenarios in which our potential is permanently curtailed are the Terminator flavors?

The reason is that a decade of delayed progress would mean that nearly a billion people will die from diseases and age-related decline who might otherwise have been saved by the rapid medical advances that AI could enable. Those billion people would have gone on to live much longer, healthier, and more prosperous lives.

You might need to flesh this out a bit more for me, because I don’t think it’s as clearly true as you suggest. Is the claim here that AI will (1) invent new medicine, (2) replace doctors, or (3) improve US healthcare policy?

(1) Drug development pipelines are excruciatingly long, and mostly not because of a lack of hypotheses. For instance (https://pmc.ncbi.nlm.nih.gov/articles/PMC10786682/), GLP-1 drugs have been in the pipeline for half a century (though arguably, with better AI, some of the nausea issues could have been figured out quicker). The IL-23 connection to IBD/Crohn’s was basically known by the mid-2000s, as IL23R was one of the first and most significant single-nucleotide polymorphism hits picked up by GWAS genotype/phenotype studies. Yet Skyrizi only hit the market a few years ago. Even assuming AI could instantly invent the drugs, IIRC it’s a minimum of about 7 years to get approval. That’s the absolute minimum. And even superintelligent AI is likely going to need physical labs, iteration, mistakes, etc.

Assuming AGI sufficient for this threshold arrives in 2030, we are looking at the early 2040s before we start to see significant impact on the drugs we use, although it’s possible AI will usher in a new era of repurposed drug cocktails via extremely good literature review (IMO the current tools might already be enough to see huge benefits here!).

(2) Doctors, while overpaid, still only make up like 10-15% of healthcare costs in the US. I do think AI will end up being better than them, although I don’t know whether people will quickly accept this. So you can get some nice savings there, but again, that’s assuming you break the massive lobbying power they have. And beyond costs, tons of the most important health stuff is already widely known among the public: don’t smoke cigarettes, don’t drink alcohol, don’t be fat, don’t be lonely. People still fail to do this stuff. It’s not an information problem. Further, doctors often know when they are overprescribing useless stuff; that’s often just an incentives problem. There’s no good reason to think AI will break this trend unless you are envisioning a completely decentralized or single-payer system that uses all-AI doctors, and both of those are at least partially political issues, not intelligence issues. And if we are talking solid basic primary care for the developing world, I just question how smart the AI needs to be. I’d assume a 130-IQ LLM with perfect vision and full knowledge of the medical literature would be more than sufficient, and that seems like it will be the next major Gemini release?

(3) I’ll leave this for now.

I kind of got sidetracked and will leave this comment here for now because it’s so long, but I guess the takeaway from this section is: you can’t claim cluelessness about the harms and then assume the benefits are guaranteed.

Two thoughts here, just thinking about persuasiveness. I’m not quite sure what you mean by “normal people”, or whether you still want your arguments to be actual arguments or just persuasion-maxing.

Show, don’t tell, for 1-3

For anyone who hasn’t used frontier models intimately but is willing to with an open mind, I’d guess you should just push them to use the models and actually engage mentally with them and their thought traces; even better if you can convince them to use something agentic like CC (Claude Code).

Ask questions and/or tell stories for 4

What can history tell us about what happens when a significantly more tech-savvy/powerful nation finds another one?

No “right” answer here, though the general arc of history is that significantly more powerful nations capture/kill/etc.

What would it be like to be a native during various European conquests in the New World (especially ignoring the effects of smallpox/disease to the extent you can)?

Incan perspective? Mayan?

I especially like Orellana’s first expedition down the Amazon. As far as I can tell, Orellana was not especially bloodthirsty and had some interest in and respect for the natives, though he was certainly misaligned with them.

Even if Orellana is “less bloodthirsty,” you still don’t want to be a native on that river. You hear fragmented rumors—trade, disease, violence—with no shared narrative; you don’t know what these outsiders want or what their weapons do; you don’t know whether letting them land changes the local equilibrium by enabling alliances with your enemies; and you don’t know whether the boat carries Orellana or someone worse.

Do you trade? Attack? Flee? Coordinate? Any move could be fatal, and the entire situation destabilizes before anyone has to decide “we should exterminate them.”

And for all of these situations, you can actually see (approximately) what happened, and usually it doesn’t end well.

Why is AI different?

Not rhetorical: it gives them space to think in a smaller, more structured way that doesn’t force an answer.

Just finding out about this & the crux website. So cool. Would love to see something like this for charity rankings (if it isn’t already somewhere on the site).

Don’t you need a layer of philosophical axioms between outputs and outcomes? The existential catastrophe definitions seem to assume a lot.

I’d also need to think harder about why/in what context I’m using this, but “governance” being a subcomponent, when it’s arguably more important and can control literally everything else at the top level, seems wrong.

Thanks for the post. There is definitely a certain fuzziness at times about value claims in the movement, and I have been critical of similar things before. Also, ChatGPT edited this, but (nearly) all thoughts are my own; hope that’s ok!

I see a few threads here that are easy to blur:

1) Metaethics (realism vs anti-realism) is mostly orthogonal to Ideal Reflection.

You can be a realist or anti-realist and still endorse (or reject) a norm like “defer to what an idealized version of you would believe, holding evidence fixed.” Ideal Reflection doesn’t have to claim there’s a stance-independent EV “out there”; it can be a procedural claim about which internal standpoint is authoritative (idealized deliberation vs current snap judgment), and about how to talk when you’re trying to approximate that standpoint. I’m not saying you claimed the opposite exactly, but the language was a bit confusing to me at times.

2) Ideal Reflection is a metanormative framework; EA is basically a practical operationalization of it.

Ideal Reflection by itself is extremely broad. On its own, it doesn’t tell you what you value, and it doesn’t even guarantee that you can map possible world-histories to an ordinal ranking. It might seem less hand-wavy, but its lack of assumptions makes it hard to see what non-trivial claims can follow. Once you add enough axioms/structure to make action-guiding comparisons possible (some consequentialist-ish evaluative ranking, plus willingness to act under uncertainty), then you can start building “upward” from reflection to action.

It also seems to me (and is part of what makes EA distinctive) that the EA ecosystem was built by unusually self-reflective people (sometimes to a fault) who tried hard to notice when they were rationalizing, to systematize their uncertainty, and to actually let arguments change their minds.

On that picture, EA is a specific operationalization/instance of Ideal Reflection for agents who (a) accept some ranking over world-states/world-histories, and (b) want scalable, uncertainty-aware guidance about what to do next.

3) But this mainly helps with the “upward” direction; it doesn’t make the “downward” direction easier.

I think of philosophy as stacked layers: at the bottom are the rules of the game; at the top is “what should I do next.” EA (and the surrounding thought infrastructure) clarifies many paths upward once you’ve committed to enough structure to compare outcomes. But it’s not obvious why building effective machinery for action gives us privileged access to bedrock foundations. People have been trying to “go down” for a long time. So in practice a lot of EAs seem to do something like: “axiomatize enough to move, then keep climbing,” with occasional hops between layers when the cracks become salient.

4) At the community level, there’s a coordination story that explains the quasi-objective EV rhetoric and the sensitivity to hidden axioms.

Even among “utilitarians,” the shape of the value function can differ a lot, and the best next action can be extremely sensitive to those details (population ethics, welfare weights across species, s-risk vs x-risk prioritization, etc.). Full transparency about deep disagreements can threaten cohesion, so the community ends up facilitating a kind of moral trade: we coordinate around shared methods and mid-level abstractions, and we get the benefits of specialization and shared infrastructure, even without deep convergence.

It’s true that, institutionally, founder effects + decentralization + concentrated resources (in a world with billionaires) create path dependence: once people find a lane and drive (building an org, a research agenda, a funding pipeline), they implicitly assume a set of rules and commit resources accordingly. As the work becomes more specific, certain foundational assumptions become increasingly salient, and it’s easy for implicit axioms to harden and complexify over time. To some extent you can say that is what happened, although on the object level it feels like we have picked pretty good stuff to work on, in my view. And charitably, when 80k writes fuzzy definitions of the good, it isn’t necessarily that the employees and org don’t have more specific values; it’s that they think it’s better to leave it at that level of abstraction to build the best coalition right now. They are also trying to help you build up from what you have to making a decision.

I don’t see this strain of argument as particularly action-relevant. I feel like you are getting way too caught up in the abstractions of what “AGI” is and such. This is obviously a big deal, it is obviously going to happen “soon” and/or is already “happening”, and it’s obviously time to take this very seriously and act like responsible adults.

Ok, so you think “AGI” is likely 5+ years away. Are you not worried about Anthropic having a fiduciary responsibility to its shareholders to maximize profits? Reading between the lines, I guess you see very little value in slowing down or regulating AI? While leaving room for the chance that our whole disagreement does revolve around our object-level timeline differences, I think you are probably missing the forest for the trees here in your quest to prove people with shorter timelines wrong.

I am not a doom maximalist, but I do think this technology is already profoundly world-bending and scary today. I am worried about my cousin becoming a short-form-addicted goonbot with an AI best friend right now, whether or not robot bees are about to gouge my eyes out.

I think there is a reasonably long list of sensible regulations around this stuff (both x-risk related and more minor stuff) that would probably result in a large drawdown in these companies’ valuations, and really in the stock market at large. For example (but not limited to): AI companionship, romance, and porn should probably be on pause right now while the government performs large-scale A/B testing. That’s the same thing we should have done with social media and cellphone use, especially in children, which our government horribly failed to do because of its inability to utilize RCTs and the absolutely horrifying average age of our president and both houses of Congress.

It’s quite easy; I actually already did it with Printful + Shopify. I stalled out because (1) I realized it’s much more confusing to deal with all the copyright stuff and stepping on toes (I don’t want to be competing with EA itself or EA orgs, and didn’t feel like coordinating with a bunch of people), and (2) you kind of get raked using an easy, fully automated stack. Not a big deal, but with shipping, hoodies end up being like 35-40 and T-shirts almost 20. Given the size of EA, I felt like we should probably just buy a heat press or embroidery machine, since we probably want to produce 100s+.

Anyway, feel free to reach out and we can chat!

Here is the example site I spun up (again, I’m not actually trying to sell those products; I was just testing whether I could do it): https://tp403r-fy.myshopify.com/

Thank you for writing this. To be honest, I’m pretty shocked that the main discussions around the Anthropic IPO have been about “patient philanthropy” concerns and not the massive, earth-shattering conflicts of interest (both for us as non-Anthropic members of the EA community and for Anthropic itself, which will now have a “fiduciary responsibility”). I think this shortform does a pretty good job summarizing my concern. The missing mood is big. I also just have a sense that way too many of us are living in group houses and attending the same parties together, that AI lab employees are included in this, and that if you actually hear those conversations at parties, they are less like “man, I am so scared” and more like “holy shit, that new proto-memory paper is sick”. Conflicts of interest, nepotism, etc. are not taken seriously enough by the community, and this isn’t a new problem or something I have confidence in us fixing.

Without trying to heavily engage in a timelines debate, I’ll just say it’s pretty obvious we are in go time. I don’t think anyone should be able to confidently say that we are more than a single 10x or breakthrough away from machines being smarter than us. I’m not personally huge on beating the drum for pause AI; I think there are probably better ways to regulate than that. That being said, I genuinely think it might be time for people to start disclosing their investments. I’m paranoid about everyone’s motives (including my own).

You are talking about the movement-scale issues, with the awareness that crashing Anthropic stock could crash EA wealth. That’s charitable, but let’s be less charitable: way too many people here have YOLO’d significant parts of their net worth on AI stocks, low-delta S&P/AI calls, etc., and are still holding the bag. Assuming many of you are anything like me, you feel in your brain that you want the world to go well, but I personally feel happier when my brokerage account goes up 3% than when I hear news that AI timelines are way longer than we thought because of xyz.

Again, kind of just repeating you here, but I think it’s important and under-discussed.

I think this is sort of coherent extrapolated volition? https://www.lesswrong.com/w/coherent-extrapolated-volition

Curious why METR. This is less METR-specific and more about capabilities benchmarks: doesn’t frontier capabilities benchmarking help accelerate AI development? I know they also do pure safety work, but in practice I feel like they have done more to push forward the agentic automation race.

Tangential to the main post, but I just read your shortform comment on LessWrong and really agree. From a variety of different anecdotal experiences, I’m getting increasingly paranoid about trusting anyone here regarding AI opinions. How can I trust someone if 50% of their money is in Nvidia and S&P 5-delta calls? If we pause AI, they stand to lose so much. I don’t think this is a small portion of the community either.

(I have most of my money in S&P stock and ~15% specifically in tech stocks, so I’m still quite exposed to AI progress, but significantly less than a lot of people here, and I still sometimes find myself rooting for an AI bull market because I’m selfish.)

Benchmarking model behavior seems increasingly hard to keep track of.

I think there are a bunch of separate layers being analyzed, and the degree to which they are actually separate is increasingly complicated. E.g.:

level 1 → pre-trained only

level 2 → post-trained

level 3 → with ____ amount of inference (pro, high, low, thinking, etc.)

level 4 → with agentic scaffolding (Claude Code, SWE-agent, Codex)

level 5 → context engineering setups inside of your agentic repo (ACE, GEPA, AI scientists)

level 6 → The built digital environment (arguably partially includable in level 4: stuff like APIs being crafted to be better for LLMs, workflows being rewritten to accomplish the same goal in a more verifiable way, UIs that are more readable by LLMs).

In some sense you can boil all of this down to cost per run, like ARC, but you will miss important differences in model behavior on a fixed frontier.

https://jeremyberman.substack.com/p/how-i-got-the-highest-score-on-arc-agi-again

If you read J. Berman’s Substack, you will see he uses existing LLMs to get his scores with evolutionary scaffolding (hard to place this as being level 4/5). While I’m decently bitter-lesson-pilled, it seems plausible we will see proto-AGIs popping up that are heavily scaffolded before raw models reach that level (though also, plausibly, we might just see the really useful, generalizable scaffolds get consumed by the model soon thereafter). The behavior of J. Berman’s system is going to be different from that of the first raw model to hit that score with no scaffolding, and it will pose different threats at the same level of intelligence.

How politicized will AI get in the next 1, 2, and 5 years, and what will those trends look like?

I think we as a community are investing more in policy/advocacy/research, but the value of these things might be heavily a function of the politicization/toxicity of AI. Not a strong prior, but I’d assume that, e.g., OMB/think tanks get to write a really large % of the policy for boring, non-electorally-dominant issues, but have much less hard power when the issue at hand is something like healthcare, crime, or immigration.

Hi Wyatt, there is some work on number 1 (although, agreed, it needs a lot more). I keep posting this stack, so maybe I should curate it into a sequence.

https://forum.effectivealtruism.org/s/wmqLbtMMraAv5Gyqn

https://forum.effectivealtruism.org/posts/W4vuHbj7Enzdg5g8y/two-tools-for-rethinking-existential-risk-2

https://forum.effectivealtruism.org/posts/zuQeTaqrjveSiSMYo/a-proposed-hierarchy-of-longtermist-concepts

https://forum.effectivealtruism.org/posts/fi3Abht55xHGQ4Pha/longtermist-especially-x-risk-terminology-has-biasing

https://forum.effectivealtruism.org/posts/wqmY98m3yNs6TiKeL/parfit-singer-aliens

https://forum.effectivealtruism.org/posts/zLi3MbMCTtCv9ttyz/formalizing-extinction-risk-reduction-vs-longtermism

https://static1.squarespace.com/static/58e2a71bf7e0ab3ba886cea3/t/5a8c5ddc24a6947bb63a9bc9/1519148520220/Todd+Miller.evpsych+of+ETI.BioTheory.2017.pdf

https://forum.effectivealtruism.org/posts/mzT2ZQGNce8AywAx3

Very nice, thank you for writing.

It seems plausible that p(annual collapse risk) is in part a function of the N friction as well? I think you may cover some of this, but I can’t really remember.

E.g., a society with fewer non-renewables will have to be more sustainable to survive/grow → the resulting population is somehow more value-aligned → reduced annual collapse risk.

or on the other side

Nukes still exist and we can still launch them → the next society has higher N friction in its pre-nuclear age → increased annual collapse risk.
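To make the compounding concrete, here is a toy sketch (my framing, not the original post’s model; the function name and numbers are hypothetical): if annual collapse risk is a constant p and years are independent, the chance of getting through T years without collapse is (1 - p)^T, so even small shifts in p from something like N friction add up over long horizons.

```python
def survival_probability(annual_risk: float, years: int) -> float:
    """Chance of no collapse over `years`, assuming a constant, independent annual risk.

    Toy assumption: the risk is identical every year and years are independent,
    so survival compounds as (1 - p) ** T.
    """
    if not 0.0 <= annual_risk <= 1.0:
        raise ValueError("annual_risk must be in [0, 1]")
    return (1.0 - annual_risk) ** years


# A hypothetical 0.1 percentage-point change in annual risk, compounded over 1,000 years.
for p in (0.001, 0.002):
    print(f"p={p}: P(no collapse in 1000 years) = {survival_probability(p, 1000):.3f}")
```

With these made-up numbers, going from 0.1% to 0.2% annual risk takes thousand-year survival from roughly 37% to roughly 14%. A real version would let p vary with things like N friction instead of holding it fixed.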

(I have a bad habit of just adding slop onto models, and I don’t think this at all needs to be in the scope of the original post; just a curiosity.)

I think monied prediction markets are negative EV. The original reasons the CFTC was not allowing binary event contracts on most things are/were actually good reasons. It’s quite clear that our elected officials can get away with insider trading (and probably, to a certain extent, market manipulation). I expect that under the current administration this behavior will increasingly go unpunished, and maybe even be actively encouraged. Importantly, insider trading on the existing financial instruments doesn’t really work. My take here is just that the marginal value of a piece of information is pretty low for traditional financial markets, so while it might allow a high-tech research shop to beat the market, it doesn’t even cover slippage (or the lack of complex modeling) in the hands of an unsophisticated congressperson. This is not the case for most/all binary event markets, where a single marginal piece of information can credibly flip probabilities from <1% to >99%.

Insider trading, which I expect to be much more likely to happen at scale on these markets, is not that bad. It degrades the integrity of the prediction markets themselves and might lower volume, but it’s probably not that relevant for EA. However, market manipulation could get really, really bad in terms of EV (though I think significant market manipulation is quite unlikely at the current levels of liquidity on these markets). In particular, the less likely something is to happen, the more incentive a prediction market provides for manipulating the market. And as with insider trading, I think market manipulation is going to be much more accessible and effective for generating alpha than in traditional markets.
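A rough way to see why lower-probability events give a manipulator more incentive: a YES contract bought at price p pays $1 if the event happens, so someone who can force the outcome nets (1 - p) per contract, or (1 - p)/p per dollar staked. This is a toy sketch under loudly stated assumptions: a flat fill price (no slippage), no fees, and market depth modeled as a hypothetical `liquidity` cap on how many contracts are available at that price. It doesn’t describe any specific exchange.

```python
def manipulation_profit(price: float, stake: float, liquidity: float = float("inf")) -> float:
    """Profit from buying YES at `price` and then forcing the event to resolve YES.

    Toy assumptions (not how any specific exchange works):
    - each contract pays $1 if the event resolves YES;
    - every contract fills at the same `price` (no slippage) and there are no fees;
    - `liquidity` is a hypothetical cap on the number of contracts available at that price.
    """
    if not 0.0 < price < 1.0:
        raise ValueError("price must be strictly between 0 and 1")
    contracts = min(stake / price, liquidity)
    # Each contract costs `price` and pays out 1.0, so it nets (1 - price).
    return contracts * (1.0 - price)


# Same $10,000 budget, unlimited depth: the cheaper the contract, the bigger the payoff.
for p in (0.50, 0.10, 0.01):
    print(f"price={p:.2f}: profit = ${manipulation_profit(p, 10_000):,.0f}")

# When depth is the binding constraint, 100x the depth means 100x the payoff.
thin = manipulation_profit(0.01, 10_000_000, liquidity=50_000)
deep = manipulation_profit(0.01, 10_000_000, liquidity=5_000_000)
print(f"thin book: ${thin:,.0f}; 100x deeper book: ${deep:,.0f}")
```

The numbers are made up; the shape is the point. Payoff scales with (1 - p)/p and with whatever depth the market offers, which is why today’s thin liquidity is doing most of the work in keeping the bigger examples below from being worth anyone’s while.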

Low-stakes example: Trump’s speechwriter inserts a random phrase (“Lock x up”, “I hate x country”, “Sperm”) into a speech.

Medium stakes: every election market is an assassination market, and a bounty for either candidate to drop out.

High stakes: “will x leader/country bomb y”

Of course, with the medium- and high-stakes examples, we are far from the liquidity on these markets needed to make the incentives worth seriously considering. But what if we 100x? What if, e.g., Mamdani could make 50 million dollars by dropping out instead of 500k? What if Trump could make 20m by delaying a ceasefire a few days?

To some extent we will just have to see how the enforcement of this stuff plays out, as that will shape the incentives. It might seem obvious that the stuff above will be easily sorted out by the relevant federal bodies. Again, my intuition is that (1) I don’t actually expect this to be a huge priority right now, and (2) the regulatory burden to properly regulate this stuff is ridiculously high (both in terms of just tracking everything that is happening and actually trying to apply the law to decide whether various things constitute “securities fraud”).

It’s already hard for the SEC and CFTC to police behavior on the NYSE or CME, and that’s with mostly big institutional players who cooperate, record all their communications, etc. I’d have to imagine that, especially in the short term, the vast majority of questionable or illegal behavior on prediction markets will slip through the cracks. The space of potential “securities fraud” is just so ridiculously big and confusing when you have 1000s of random binary event contracts.

Combine that with the encouragement of gambling and the incentive to spread fake news, and I just think we should be very, very skeptical of going headfirst into this.

And the alternatives are pretty good! Non-monied prediction markets like Manifold and Metaculus are huge improvements over previous epistemics, and to be fair, the monied prediction markets are quite literally crowding them out. It’s not really fair to compare Polymarket’s accuracy to Manifold’s when a bunch of people who are on Poly would probably use Manifold if Poly were made illegal (that being said, I’m pretty sure Manifold doesn’t stack up too poorly, but I don’t have up-to-date stats). And some of the most important binary event contracts were already being traded on CME because they didn’t suffer from the issues I listed above.

Given the public-good nature of prediction markets, the government should be quite willing to front $10s-100s of millions to improve their quality. And there are lots of ways to improve the markets without directly providing personal monetary incentives: improving the site UI/UX, creating a public leaderboard with some sort of recognition/award, improving the question statements/resolution, or even providing prizes that can be donated to a charity of the winner’s choice.

Yeah, my original framing was a little confused w.r.t. the “vs” dichotomy you present in paragraph one; good shout. I guess I actually meant a little bit of each, though. My interpretation of the post is basically: (1) insofar as we need to defeat powerful people or thought patterns, we (EA or humans) haven’t proven we can, and (2) it’s somewhat likely we will need to do this to create the world we want.

I.e., given that future s-risk efforts are probably not going to be successful, current extinction-risk efforts are therefore also less useful.

I am saying aligning AI is in the best interests of AI companies

If you define it in a specifically narrow, AI-takeover way, yes. Making sure it doesn’t allow a dictator to take power, or avoiding gradual disempowerment scenarios, not really. Nor to the extent that ensuring alignment requires slowing down progress.

Anyway, I’m mostly in agreement with your points/world. I definitely think we should be focusing on AI right now, and I think our goals and those of the AI companies/US government are sufficiently aligned at the moment that we aren’t swimming upstream, but I resonate with OP that it would alleviate some concerns if we actually racked up some hard-fought, politically unpopular battles before trying to steer the whole future.

It certainly seems possible (>1%) that in the next two US administrations (current plus next) AI safety becomes so toxic that all the EA-adjacent AI safety people in government get purged and the government stops listening to most AI safety researchers. If this co-occurs with some sort of AI nationalization, most of our ToC (theory of change) is cooked.

Thank you for doing this, love to see some data.

I don’t have high familiarity with METR, but I think it is probably not great data for this type of analysis. A few issues would need clarifying (and anyone who understands METR better, bear with me or correct my mistakes, please).

1. How does METR handle context windows? Are we doing a rolling window? Compact? something else?

How much of this inverse quadratic relationship is just caused by longer tasks having a larger context window in use for the back half of the run? How much is caused by the lack of a default, persistent information management system?

2. What is the exact harness(es) METR is using?

Harness/environment engineering/information management might control more of the cost of long-running SWE projects than IQ (past a point).

3. Does METR allow repo forking? routing?

In the future, no 180-IQ AI will be building the ORM and buttons for a CRUD app. It will either fork a boilerplate or route the work to a cheaper model.

etc.

It is said that the current iteration of models suffers from anterograde amnesia. Whether or not this will get bitter-lesson-pilled is a separate question, but for this class of Memento models, version control, information management, context management, and the meta-process of improving and routing through the best versions of these combos for a specific task are not some side quest but in fact the main route to making long tasks cheaper. Even as we enter the next paradigm of models that don’t have such profound short-term memory loss, a huge part of cost reduction will come from the orchestrator meta-planning how much to explore the space of options/build out the software factory vs. actually starting the work.
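A toy model of the context-window point in (1): if an agent re-reads its whole context at every step and each step appends roughly the same number of tokens, total input tokens grow roughly quadratically with the number of steps, while compaction keeps growth closer to linear. This is a sketch, not how METR or any particular harness actually works; `tokens_per_step`, `window_cap`, and `summary_tokens` are hypothetical parameters, and prompt caching discounts are ignored.

```python
from typing import Optional


def total_input_tokens(
    steps: int,
    tokens_per_step: int,
    window_cap: Optional[int] = None,
    summary_tokens: int = 0,
) -> int:
    """Total input tokens processed over a hypothetical agent run.

    Toy assumptions: every step re-reads the full current context, each step
    appends `tokens_per_step` tokens, and there is no prompt caching. If
    `window_cap` is set, the context is compacted whenever it exceeds the cap:
    it is replaced by a `summary_tokens`-sized summary plus the newest step.
    """
    context = 0
    total = 0
    for _ in range(steps):
        context += tokens_per_step
        if window_cap is not None and context > window_cap:
            context = summary_tokens + tokens_per_step  # compaction
        total += context  # this step pays to read the whole context
    return total


if __name__ == "__main__":
    for n in (100, 200, 400):
        naive = total_input_tokens(n, 1_000)
        compacted = total_input_tokens(n, 1_000, window_cap=50_000, summary_tokens=5_000)
        print(f"{n} steps: no compaction {naive:,} tokens; with compaction {compacted:,} tokens")
```

Without compaction, total input is tokens_per_step × n(n + 1)/2, so doubling the run length roughly quadruples it; with compaction, per-step cost stays bounded and the total grows roughly linearly. That is one way harness choices, rather than the model itself, could shape a cost-vs-task-length curve.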

I’m not denying the core question OP is raising — costs could plausibly be rising and could matter a lot. I’m just not convinced this specific curve cleanly isolates “AI economics” from “how expensive a particular scaffold/set of arbitrary constraints makes long-context work.”