Flowers are selective about the pollinators they attract. Diurnal flowers must compete with each other for visual attention, so they use colours to crowd out their neighbours. But flowers with nocturnal anthesis are generally white, as they aim only to outshine the night.

rime

In addition to the title being somewhat misleading, I think it reinforces a very “either in or out” narrative re effective altruism. Very good object-level news, though!

Edit: The title was updated, and I have no issue. : )

I support this. I recently got a monitor, and here are my takeaways:

I was really surprised to see how CO2 levels could leave the comfortable range even with the window open (and no wind).

I will close the doors to my study and bedroom when I cook food (especially if frying anything), otherwise PM2.5 can go too high. Frying food seems potentially like a bad idea generally, given the PM2.5.

PM2.5 deposits into the thinnest blood vessels in the lung, and increases blood viscosity (I seem to not have noted down my sources in my knowledge-net, but feel free to google). If viscosity gets too high, it may decrease efficiency of microcirculation (e.g. efficiency of nutrient transport in microvasculature, plausibly including the brain). Totally underinformed hypothesis, however; do not defer to me on this—I mention it in case you (the reader) may benefit from having a hypothesis.

Just the arguments in the summary are really solid.[1] And while I wasn’t considering supporting sustainability in fishing anyway, I now believe it’s more urgent to culturally/semiotically/associatively separate between animal welfare and some strands of “environmentalism”. Thanks!

Alas, I don’t predict I will work anywhere where this update becomes pivotal to my actions, but my practically relevant takeaway is: I will reproduce the arguments from this post (and/or link it) in contexts where people are discussing conjunctions/disjunctions between environmental concerns and animal welfare.

Hmm, I notice that (what I perceive as) the core argument generalizes to all efforts to make something terrible more “sustainable”. We sometimes want there to be a high price of anarchy (long-run) wrt competing agents/companies trying to profit from doing something terrible. If they’re competitively “forced” to act myopically and collectively profit less over the long-run, this is good insofar as their profit correlates straightforwardly with disutility for others.[2]

It doesn’t hold in cases where what we care about isn’t straightforwardly correlated with their profit, however. E.g. ecosystems/species are disproportionately imperiled by race-to-the-bottom-type incentives, because they have an absorbing state at 0.

(Tagging @niplav, because interesting patterns and related to large-scale suffering.)

Paying people for what they do works great if most of their potential impact comes from activities you can verify. But if their most effective activities are things they have a hard time explaining to others (yet have intrinsic motivation to do), you could miss out on a lot of impact by requiring them instead to work on what’s verifiable.

Perhaps funders should consider granting motivated altruists multi-year basic income. Now they don’t have to compromise[1] between what’s explainable/verifiable vs what they think is most effective—they now have independence to purely pursue the latter.

Bonus point: People who are much more competent than you at X[2] will probably behave in ways you don’t recognise as more competent. If you could, they wouldn’t be much more competent. Your “deference limit” is the level of competence above which you stop being able to reliably judge the difference between experts.

If good research is heavy-tailed & in a positive selection-regime, then cautiousness actively selects against features with the highest expected value.

- ^

Consider how the cost of compromising between optimisation criteria interacts with what part of the impact distribution you’re aiming for. If you’re searching for a project with top p% impact and top p% explainability-to-funders, you can expect only p^2 of projects to fit both criteria—assuming independence.

But I think it’s an open question how & when the distributions correlate. One reason to think they could sometimes be anticorrelated is that the projects with the highest explainability-to-funders are also more likely to receive adequate attention from profit-incentives alone.

If you’re doing conjunctive search over projects/ideas for ones that score above a threshold for multiple criteria, it matters a lot which criteria you prioritise most of your parallel attention on to identify candidates for further serial examination. Try out various examples here & here.
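A minimal sketch of the p^2 point above, assuming each project's impact and explainability are independent percentile ranks drawn uniformly from [0, 1]. The function name, sample size, and seed are illustrative choices and not from the original comment.

```python
import random


def fraction_passing_both(p, n_projects=100_000, seed=0):
    """Estimate the share of projects in the top p of BOTH criteria.

    Assumption: impact and explainability are independent and uniform,
    so the analytic answer is p * p.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_projects):
        impact = rng.random()
        explainability = rng.random()
        # "Top p" means the percentile rank lies above 1 - p.
        if impact > 1 - p and explainability > 1 - p:
            hits += 1
    return hits / n_projects


if __name__ == "__main__":
    p = 0.10
    print("analytic :", p * p)
    print("simulated:", fraction_passing_both(p))
```

If the two criteria were anticorrelated, as speculated above, the simulated share would fall below p^2. The sketch does not model that case.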

- ^

At least for hard-to-measure activities where most of the competence derives from knowing what to do in the first place. I reckon this includes most fields of altruistic work.

- ^

I think there are good arguments for thinking that personal consumption choices have relatively insignificant impact when compared to the impact you can have with more targeted work.

However, I also think there’s likely to be some counter-countersignalling going on. If you mostly hang out with people who don’t care about the world, you can’t signal uniqueness by living high. But when your peers are already very considerate, choosing to refrain from refraining makes you look like you grok the arguments in the previous paragraph—you’re not one of those naive altruists who don’t seem to care about 2nd, 3rd, and nth-level arguments.

Fwiw, I just personally want the EA movement to (continue to) embrace a frugal aesthetic, irrespective of the arguments re effectiveness. It doesn’t have to be viewed as a tragic sacrifice and hinder your productivity. And I do think it has significant positives on culture & mindset.

Good point I hadn’t thought of before!

…[1]

- ^

BTW I notice you linked to the sensitivity and specificity article. Did you get that from me or is it a positive coincidence? I link it everywhere I go, and it’s one of the most referenced concepts in my note-network.

- ^

Any other points worth highlighting from the 10-page long rules? I find it confusing. Is this normal for legalspeak? The requirements include, and I quote:

All information provided in the Entry must be true, accurate, and correct in all respects. [oops, excludes nearly all possible utterances I could say]

The Contest is open to any natural person who meets all of the following eligibility requirements:

[Resides in a place where the Contest is not prohibited by law]

The entrant is at least eighteen (18) years old at the time of entry.

The entrant has access to the internet. [What?]

Notes on cultivating good incentives

I actually like a lot of these. I wish the forum had collapsible sections or bullet points so that curious readers could expand sections we wish to learn more about.

Extremify

I do some version of this a lot and call it “limiting-case analysis”. The idea is that you want to find the most generalisable aspects of the pattern you’re analysing, and that often means setting some variables to their extreme values or even removing them altogether.

“The art of doing mathematics consists in finding that special case which contains all the germs of generality.”—David Hilbert

Notice which aspects are parameters. If they’re set to some specific value, generalise them by turning them into variables instead.

Play the generalised game of whatever you’re trying to do. Chess boards with an infinite number of squares, or with the number of squares left as an unconstrained variable, are more general than 8x8.

Social proof

This is so crucial for motivation. If we’re worried about how our ideas will be received, our brains will refuse to innovate.

However, I would caution against the need for social validation. If you rely on others to check your ideas for you, you’ll have less incentive to try to check them yourself. At the start, it may just be true that others are better at judging your ideas than you are, but I’d still recommend trusting your own judgment because it won’t get practice otherwise.

“I was used to just having everybody else being wrong and obviously wrong, and I think that’s important. I think you need the faith in science to be willing to work on stuff just because it’s obviously right even though everybody else says it’s nonsense.”—Geoffrey Hinton

Plus, you need to be able to use your ideas to reach more distant nodes on the search tree without slowing down for validation at every step. It’s better to have the option to internalise the entire process. This gives you a much shorter feedback loop that gets better over time.

YourBias.Is

You advise me to “familiarise myself with common cognitive biases” so that I can learn to avoid them. I agree of course, but I think there’s important nuance. If you defer to empirical experiments and solid statistics to form your beliefs about how you’re biased, you may learn to be statistically correct without understanding why you’re correct.

Imo, the reason you should consult literature and statistics about biases is mostly just so that you can learn to recognise how they work internally via introspection. I think that’s the only realistic way to learn to mitigate them.

Yes! The latter. I’m definitely a fan of using causal diagrams in sentences. It should just be native to the English language.

And I wasn’t really critiquing you. Just highlighting the benefits of not having to be formal. : )

Quirks: I say “we” because writing “I” in formal writing makes me feel weird.

Good to say so! But I encourage people to use “I” precisely in order to push back against formalspeak norms. Ostentation is bad for the mind, and it’s hard to do good research while feeling pressure to be formal.

Regardless though, I trust your abstract. Thanks. : )

I think the notion of ‘marginal charity’ misses the idea that it’s easier to hit a target if you aim purely at it, rather than having to compromise between several different optimisation criteria. Aiming purely to do good will have a much better chance of hitting the target compared to marginally veering your aim away from your selfish purposes. There are costs of compromise.

I would be interested in following this bot if it were made. Thanks for trying!

Second-best theories & Nash equilibria

A general frame I often find handy while analysing systems is to look for equilibria, figure out the key variables sustaining them (e.g., strategic complements, balancing selection, latency or asymmetrical information in commons-tragedies), and well, that’s it. Those are the leverage points of the system. If you understand them, you’re in a much better position to evaluate whether a suggested change might work, is guaranteed to fail, or suffers from a lack of imagination.

Suggestions that fail to consider the relevant system variables are often what I call “second-best theories”. Though they might be locally correct, they’re also blind to the broader implications or underappreciative of the full space of possibilities.

(A) If it is infeasible to remove a particular market distortion, introducing one or more additional market distortions in an interdependent market may partially counteract the first, and lead to a more efficient outcome.

(B) In an economy with some uncorrectable market failure in one sector, actions to correct market failures in another related sector with the intent of increasing economic efficiency may actually decrease overall economic efficiency.

Examples

The allele that causes sickle-cell anaemia is good because it confers resistance against malaria. (A)

Just cure malaria, and sickle-cell disease ceases to be a problem as well.

Sexual liberalism is bad because people need predictable rules to avoid getting hurt. (B)

Imo, allow people to figure out how to deal with the complexities of human relationships and you eventually remove the need for excessive rules as well.

We should encourage profit-maximising behaviour because the market efficiently balances prices according to demand. (A/B)

Everyone being motivated by altruism is better because market prices only correlate with actual human need insofar as wealth is equally distributed. The more inequality there is, the less you can rely on willingness-to-pay to signal urgency of need. Modern capitalism is far from the global-optimal equilibrium in market design.

If I have a limp in one leg, I should start limping with my other leg to balance it out. (A)

Maybe the immediate effect is that you’ll walk more efficiently on the margin, but don’t forget to focus on healing whatever’s causing you to limp in the first place.

Effective altruists seem to have a bias in favour of pursuing what’s intellectually interesting & high status over pursuing the boringly effective. Thus, we should apply an equal and opposite skepticism of high-status stuff and pay more attention to what might be boringly effective. (A)

Imo, rather than introducing another distortion in your motivational system, just try to figure out why you have that bias in the first place and solve it at its root. Don’t do the equivalent of limping on both your legs.

I might edit in more examples later if I can think of them, but I hope the above gets the point across.

rime’s Quick takes

Think about it like this: Both sickle-cell anaemia & malaria are bad when considered separately, but they’re also in a frequency-dependent equilibrium because the allele (HbS) that causes anaemia for a minority also confers resistance against malaria for the majority. Thus, a “second-best theory” would be to say that the HbS allele is good because it improves the situation relative to nobody having resistance against malaria at all. While it’s true, it’s also myopic.

When we cure malaria, there will no longer be any selection-pressure for HbS, so we cure sickle-cell disease as well.
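A rough sketch of the equilibrium described above, using the textbook heterozygote-advantage model as a stand-in. The fitness costs `s` (malaria cost to non-carriers) and `t` (anaemia cost to people with two HbS copies) are made-up illustrative numbers, not estimates from the comment or from data.

```python
def next_freq(q, s, t):
    """One generation of selection on the HbS allele frequency q.

    Assumed relative fitnesses: no HbS copies = 1 - s, one copy = 1,
    two copies = 1 - t.
    """
    p = 1.0 - q
    mean_fitness = p * p * (1 - s) + 2 * p * q + q * q * (1 - t)
    return (p * q + q * q * (1 - t)) / mean_fitness


def simulate(q0, s, t, generations):
    """Iterate the selection step and return the final allele frequency."""
    q = q0
    for _ in range(generations):
        q = next_freq(q, s, t)
    return q


if __name__ == "__main__":
    # With malaria present, HbS settles near s / (s + t).
    print(simulate(0.01, s=0.1, t=0.8, generations=2000))
    # With malaria cured (s = 0), HbS is slowly selected out.
    print(simulate(0.11, s=0.0, t=0.8, generations=2000))
```

Under these assumptions the allele persists only while `s` is positive. Once `s` is set to zero, its frequency declines towards zero, which is the sense in which curing malaria also removes the selection pressure for HbS.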

To unpack the metaphor: I think many traditional & strict norms (HbS) around sex & relationships can be net good on the margin, but only because they enforce rigid rules in an area where humans haven’t learned to deal with the complexities (malaria) in a healthy manner. “Sexual liberalism” encompasses imo an attempt to deal with them directly and eventually learn better norms that are more likely to work long-term.

I did skim this,[1] but still thought it was an excellent post. The main value I gained from it was the question re what aspects of a debate/question to treat as “exogenous” or not. Being inconsistent about this is what motte-and-bailey is about.

“We need to be clear about the scope of what we’re arguing about, I think XYZ is exogenous.”

“I both agree and disagree about things within what you’re arguing for, so I think we should decouple concerns (narrow the scope) and talk about them individually.”

Related words: argument scope, decoupling, domain, codomain, image, preimage

- ^

I think skimming is underappreciated, underutilised, unfairly maligned. If you read a paragraph and effortlessly understand everything without pause, you wasted an entire paragraph’s worth of reading-time.

I mused about this yesterday and scribbled some thoughts on it on Twitter here.

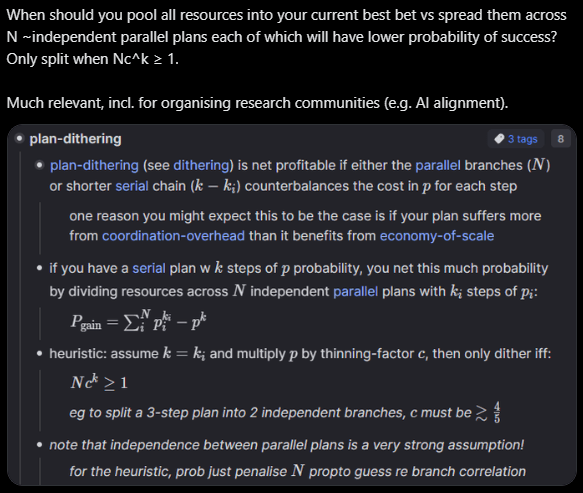

“When should you pool all resources into your current best bet vs spread them across N ~independent parallel plans each of which will have lower probability of success?”

Investing marginal resources (workers, in this case) into your single most promising approach might have diminishing returns due to A) limited low-hanging fruits for that approach, B) making it harder to coordinate, and C) making it harder to think original thoughts due to rapid internal communication & Zollman effects. But marginal investment may also have increasing returns due to D) various scale-economicsy effects.

There are many more factors here, including stuff you mention. The math below doesn’t try to capture any of this, however. It’s supposed to work as a conceptual thinking-aid, not something you’d use to calculate anything important with.

A toy-model heuristic is to split into separate approaches iff the extra independent chances counterbalance the reduced probability of success for your top approach.
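One way to write that heuristic down, as a sketch only: assume the N parallel plans succeed independently and each has the same success probability. The names `p_pooled`, `p_each`, and `n_plans` are introduced here for illustration and are not from the original post.

```python
def p_any_success(p_each, n_plans):
    """Chance that at least one of n independent plans succeeds."""
    return 1.0 - (1.0 - p_each) ** n_plans


def should_split(p_pooled, p_each, n_plans):
    """Split iff the parallel plans beat the single pooled bet."""
    return p_any_success(p_each, n_plans) > p_pooled


if __name__ == "__main__":
    # Pooled bet at 50% vs four independent plans at 20% each (about 59%).
    print(should_split(p_pooled=0.5, p_each=0.2, n_plans=4))
    # The same split with plans at 10% each (about 34%) loses to pooling.
    print(should_split(p_pooled=0.5, p_each=0.1, n_plans=4))
```

Like the rest of the toy model, this ignores coordination costs, correlated failures, and everything else listed above.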

One observation is that the more dependent/serial steps your plan has, the more it matters to maximise general efficiencies internally, since that gets exponentially amplified by the number of steps.[1]
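A small sketch of the serial-steps observation, assuming every step has the same per-step efficiency and the steps multiply together. Both assumptions, and the function names, are illustrative.

```python
def plan_success(per_step, n_steps):
    """Overall success when n serial steps each succeed at rate per_step."""
    return per_step ** n_steps


def gain_from_improvement(old, new, n_steps):
    """Factor by which overall success improves if per-step efficiency rises."""
    return plan_success(new, n_steps) / plan_success(old, n_steps)


if __name__ == "__main__":
    # The same per-step improvement matters more the longer the chain is.
    for n in (1, 5, 10, 20):
        print(n, round(gain_from_improvement(0.90, 0.95, n), 3))
```

Raising per-step efficiency from 0.90 to 0.95 is worth a bit under 6% for a one-step plan, and the gain compounds with every additional serial step.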

- ^

You can view this as a special case of Amdahl’s argument. If you want. Because nobody can stop you, and all you need to worry about is whether it works profitably in your own head.

- ^

The problem is that if you select people cautiously, you miss out on hiring people significantly more competent than you. People with much higher competence will behave in ways you don’t recognise as more competent. If you were able to tell what the right things to do are, you would just do those things and be at their level. Innovation on the frontier is anti-inductive.

If good research is heavy-tailed & in a positive selection-regime, then cautiousness actively selects against features with the highest expected value.[1]

That said, “30k/year” was just an arbitrary example, not something I’ve calculated or thought deeply about. I think that sum works for a lot of people, but I wouldn’t set it as a hard limit.

- ^

Based on data sampled from looking at stuff. :P Only supposed to demonstrate the conceptual point.

Your “deference limit” is the level of competence above your own at which you stop being able to tell the difference between competences above that point. For games with legible performance metrics like chess, you get a very high deference limit merely by looking at Elo ratings. In altruistic research, however...

- ^

Um, I did not know about “came in fluffer” until I googled it now, inspired by your post. I’m not English, so I thought “fluffer” meant some type of costume, and that some high-status person showed up somewhere in it. My innocence didn’t last long.

I’m not against sexual activities, per se, but do you really want to highlight and reinforce that as a salient example of “Rationality culture”?