“In the day I would be reminded of those men and women,

Brave, setting up signals across vast distances,

Considering a nameless way of living, of almost unimagined values.”

Emrik

Oh, this is excellent! I do a version of this, but I haven’t paid enough attention to what I do to give it a name. “Blurting” is perfect.

I try to make sure to always notice my immediate reaction to something, so I can more reliably tell what my more sophisticated reasoning modules transform that reaction into. Almost all the search-process imbalances (eg. filtered recollections, motivated stopping, etc.) come into play during the sophistication, so it’s inherently risky. But refusing to reason past the blurt is equally inadvisable.

This is interesting from a predictive-processing perspective.[1] The first thing I do when I hear someone I respect tell me their opinion, is to compare that statement to my prior mental model of the world. That’s the fast check. If it conflicts, I aspire to mentally blurt out that reaction to myself.

It takes longer to generate an alternative mental model (ie. sophistication) that is able to predict the world described by the other person’s statement, and there’s a lot more room for bias to enter via the mental equivalent of multiple comparisons. Thus, if I’m overly prone to conform, that bias will show itself after I’ve already blurted out “huh!” and made note of my prior. The blurt helps me avoid the failure mode of conforming and feeling like that’s what I believed all along.

Blurting is a faster and more usefwl variation on writing down your predictions in advance.

- ^

Speculation. I’m not very familiar with predictive processing, but the claim seems plausible to me on alternative models as well.

If Will thought SBF was a “bad egg”, then it could be more important to establish influence with him, because you don’t need to establish influence (as in ‘willingness to cooperate’) with someone who is entirely value-aligned with you.

Yes! That should work fine. That’s 21:00 CET for me. See you then!

My email is emrik.asheim@gmail.com btw.

I think it’d be easy to come up with highly impactfwl things to do with free rein over Twitter? Like, even before I’ve thought about it, there should be a high prior on usefwl patterns. Brainstorming:

Experiment with giving users control over recommender algorithms, and/or designing them to be in the long-term interests of the users themselves (because you’re ok with foregoing some profit in order to not aggressively hijack people’s attention)

Optimising the algorithms for showing users what they reflectively prefer (eg. what do I want to want to see on my Twitter feed?)[1]

Optimising algorithms for making people kinder (eg. downweighting views that come from bandwagony effects and toxoplasma), but still allowing users to opt-out or opt-in, and clearly guiding them on how to do so.

Trust networks

Liquid democracy-like transitive trust systems (eg. here, here)

I can see several potential benefits to this, but most of the considerations are unknown to me, which just means that there could still be massive value that I haven’t seen yet.

This could be used to overcome Vingean deference limits and allow for hiring more competent people more reliably than academic credentials (I realise I’m not explaining this, I’m just pointing to the existence of ideas enabled with Twitter)

This could also be a way to “vote” for political candidates or decision-makers in general too, or be used as a trust metric to find out whether you want to vote for particular candidates in the first place.

Platform to arrange vote swapping and similar, allow for better compromises and reduce hostile zero-sum voting tendencies.

Platform for highly visible public assurance contracts (eg. here), could potentially be great for cooperation between powerfwl actors or large groups of people.

This also enables more visibility for views that are held back by pluralistic ignorance. This could be both good and bad, depending on the view (eg. both “it’s ok to be gay” and “it’s not ok to be gay” can be held back by pluralistic ignorance).

Could also be used to coordinate actions in a crisis

eg. the next pandemic is about to hit, and it’s a thousand times more dangerous than covid, and no one realises because it’s still early on the exponential curve. Now you utilise your power to influence people to take it seriously. You stop caring about whether this will be called “propaganda” because what matters isn’t how nice you’ll look to the newspapers, what matters is saving people’s lives.

Something-something nudging idk.

Mostly, even if I thought Sam was in the wrong for considering a deal with Elon, I find it strange to cast a negative light on Will for putting them in touch. That seems awfwly transitive. I think judgments for transitive associations are dangerous, especially given incomplete information. Sam/Will probably thought much longer on this than I have, so I don’t think I can justifiably fault their judgment even if I had no ideas on how to use twitter myself.

- ^

This idea was originally from a post by Paul Christiano some years ago where he urged FB to adopt an algorithm like this, but I can’t seem to find it rn.

Hmm, I suspect that anyone who had the potential to be bumped over the threshold for interest in EA would be likely to view the EA Wikipedia article positively despite clicking through to it via SBF. Though I suspect there are only a small number of people with the potential to be bumped over that threshold. I put around 10% probability on the negative news having been positive for the movement, primarily because it gained exposure. Unlikely, but not beyond the realm of possibility. Oo

Mh, I had in mind both, and wanted to leave it up for interpretation. A public debate about something could be cool because I’ve never done that, but we’d need to know what we’re supposed to disagree about first. Though, I primarily just wish to learn from you, since you have a different perspective, so a private call would be my second offer.

I’m confused. You asked whether I had in mind a public or private call, and I said I’d be fine with either. Which question are you referring to?

Happy to do either, but I’ll stay off-camera if it’s going to be recorded. Up to you, if you wish to prioritise it. : )

This is excellent. I hadn’t even thought to check. Though I think I disagree with the strength of your conclusion.

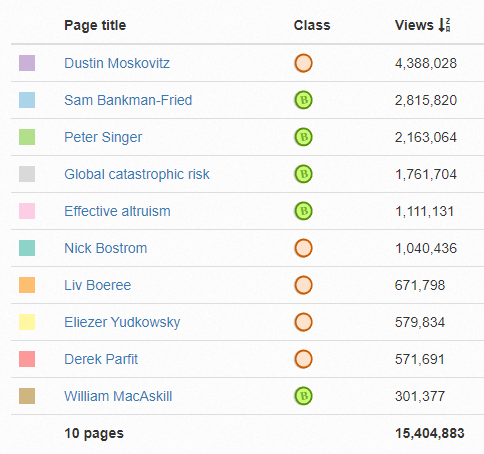

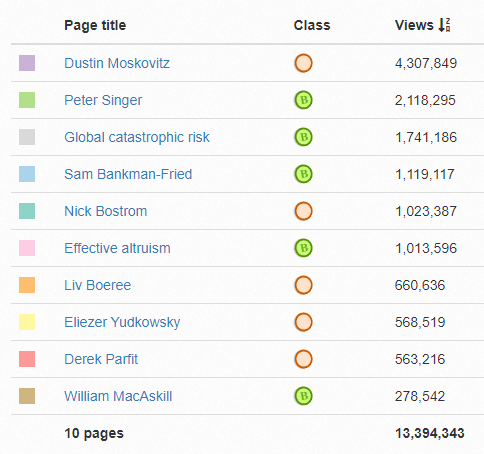

If you look back since 2015 (“all time”), it looks like this.[1] Keep in mind that Sam has 1.1M pageviews since before November[2] (before anyone could know anything about anything). Additionally, if you browse his Wikipedia page, EA is mentioned under “Careers”, and is not something you read about unless particularly interested. On the graph, roughly 5% click through to EA. (Plus, most of the news-readers are likely to be in the US, so the damage is localised?)

A point of uncertainty for me is to what extent news outlets will drag out the story. Might be that most of the negative-association-pageviews are yet to come, but I suspect not? Idk.

Not sure how to make the prediction bettable but I think I’m significantly less pessimistic about the brand value than you seem to be.

Feel free to grab these if you want to make a post of it. Seems very usefwly calibrating.

Hmm, not sure how that job would work, but if someone could be paid to fix all of EA’s coordination problems, that’d probably be worth the money. It is the responsibility of anyone who wishes to assume that responsibility. And if they can, I really hope they do.

I’m a very slow reader, but do you wish to discuss (or debate!) it over a video call sometime? I’m eager to learn things from someone who’s likely to have a different background on the questions I’m interested in. : )

I also made a suggestion on Sasha’s post related to nudging people’s reading habits by separating out FTX posts by default. I don’t endorse the design, but it could look something like this.[1] Alternatively, could introduce ‘tag profiles’ or something, where you can select a profile, and define your filters within each profile.[2]

(P.S. Sorry for the ceaseless suggestions, haha! Brain goes all sparkly with an idea and doesn’t shut up until I make a comment about it. ^^’)

I have an unusual perspective on this. I skim nearly everything, and rarely see the benefit of completionism in reading. And I don’t benefit from the author being nuanced or giving different views a balanced take. I don’t care about how epistemically virtuous the author is being! I just care about learning usefwl patterns from the stuff I’m reading, and for that, there’s no value to me knowing whether the author is obviously biased in favour of a view or not. There are more things to say here, but I say some of them here.

Btw, I appreciate your contributions to the forum, at least for what I’ve seen of it. : )

I feel like quite a few people are working on things related to this, with approaches I have different independent impressions about, but I’m very happy there’s a portfolio.

Manifold Markets, Impact Markets, Assurance Contracts, Trust Networks, and probably very obvious stuff I’m forgetting right now but I thought I’d quickly throw these in here. I’m also kinda working on this, but it’s in parallel with other things and it mostly consists of a long path of learning and trying to build up understanding of things.

I don’t know of standardised methods that I think are likely to more reliably generate that, and I am worried about rushing standardisation prematurely. But this worry is far from insurmountable, and I’d be happy to hear suggestions for things that should be standardised. You have any? : )

There are both positives and negatives to being addicted to the EA Forum, so I’m not sure I’m against it entirely. Also, I think the current gatekeeper(s) (mainly Lizka atm, I think) have better judgment than the aggregated wisdom of accumulated karma, so I really appreciate the curation. Weighted upvotes are controversial, and I could change my mind, but for now I suspect the benefits outweigh the costs because I trust the judgment of high-karma-power users more on average. For an alternative way to filter posts, I think Zoe Williams’s weekly summaries are excellent.

Ideally, it would be cool if EA karma could be replaced with a distributed trust network of sorts, such as one I alluded to on LW.

Big agree! I call it the “frontpage time window”. To mitigate the downsides, I suggested resurfacing curated posts on a spaced-repetition cycle to encourage long-term engagement, and/or breaking out a subset of topics into “tabs” so the forum effectively becomes something like a hybrid model of the current system and the more traditional format.

Huh! I literally browsed this paper of his earlier today, and now I find it on the forum. Weird!

Anyway, Michael Nielsen is great. I especially enjoy his insights on spaced repetition.

“What is the most important question in science or meta science we should be seeking to understand at the moment?”

Imo, the question is not “how can we marginally shift academic norms and incentives so the global academic workforce is marginally more effective,” but instead “how can we build an entirely independent system with the correct meta-norms at the outset, allowing knowledge workers to coordinate around more effective norms and incentive structures as they’re discovered, while remaining scalable and attractive[1] enough to compete with academia for talent?”

Even if we manage to marginally reform academia, the distance between “marginal” and “adequate” reform seems insurmountable. And I think returns from precisely aimed research are exponential, such that a minority of precisely aimed researchers achieve more good than a global workforce mostly compelled to do elaborate rituals of paper pushing. To be clear, this isn’t their fault. It’s the result of a tightly regulated bureaucratic process that’s built up too much design debt to refactor. It’s high time to jump ship.

- ^

in profit and prestige

I’m in favour of this!

While you can click “hide” on a topic, I had forgotten about it, and it wasn’t obvious how to do it before I read the comments. Besides, I agree that it would be healthy for the community if the Frontpage wasn’t inundated with FTX posts. (After I hid the topic, the forum suddenly felt less angry somehow. Oo)

Here’s a suggestion for how it could look.[1]

- ^

When you’re on the Frontpage, you see fewer Community posts. With a separate tab for Community, I think it would be fine if FTX crisis posts were hidden by default or something, or hot-topic posts were given a default negative modifier. (We don’t want the forum to waste time.)

I suppose it would also be nice to have an option to collapse the tags so they take up less space.[2]

Not sure I agree, but then again, there’s no clear nailed-down target to disagree with :p

For particular people’s behaviour in a social environment, there’s a high prior that the true explanation is complex. That doesn’t nail down which complex story we should update towards, so there’s still more probability mass in any individual simpler story than in individual complex stories. But what it does mean is that if someone gives you a complex story, you shouldn’t be surprised that the story is complex and therefore reduce your trust in them—at least not by much.

(Actually, I guess sometimes, if someone gives you a simple story, and the prior on complex true stories is really high, you should distrust them more. )

To be clear, if someone has a complex story for why they did what they did, you can penalise that particular story for its complexity, but you should already be expecting whatever story they produce to be complex. In other words, if your prior distribution over how complex their story will be is nearly equal to your posterior distribution (the complexity of their story roughly fits your expectations), then however much you think complexity should update your trust in people, you should already have been distrusting them approximately that much based on your prior. Conservation of expected evidence!
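A minimal numeric sketch of that last point, in case it helps. All the probabilities below are made-up assumptions for illustration (none of them come from the comment above), and `posterior` is just a hypothetical helper for Bayes' rule on a binary hypothesis. The only thing it is meant to show is that the probability-weighted average of your possible posteriors equals your prior, and that the outcome you already expected (a complex story) moves you only a little.

```python
def posterior(prior, p_obs_given_h, p_obs_given_not_h):
    """Bayes' rule for a binary hypothesis H after one observation."""
    evidence = prior * p_obs_given_h + (1 - prior) * p_obs_given_not_h
    return prior * p_obs_given_h / evidence


# Illustrative assumptions only.
prior_trust = 0.8            # P(person is trustworthy) before hearing the story
p_complex_if_trust = 0.9     # P(story is complex | trustworthy)
p_complex_if_not = 0.95      # P(story is complex | not trustworthy)

# Overall chance of hearing a complex story, before hearing anything.
p_complex = (prior_trust * p_complex_if_trust
             + (1 - prior_trust) * p_complex_if_not)

# Trust after each possible observation.
post_complex = posterior(prior_trust, p_complex_if_trust, p_complex_if_not)
post_simple = posterior(prior_trust,
                        1 - p_complex_if_trust, 1 - p_complex_if_not)

# Conservation of expected evidence: the weighted average of the
# posteriors is the prior.
expected_posterior = p_complex * post_complex + (1 - p_complex) * post_simple

print(f"P(complex story)      = {p_complex:.3f}")
print(f"trust | complex story = {post_complex:.3f}")
print(f"trust | simple story  = {post_simple:.3f}")
print(f"expected posterior    = {expected_posterior:.3f}")
```

With these particular numbers the expected complex story barely shifts trust, while the surprising simple story shifts it more. Which direction each shift goes depends entirely on the assumed likelihoods, so treat the specific values as placeholders.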

Just wanted to say, I love the 500-word limit. A contest that doesn’t goodhart on effort moralization!