On Loyalty

Epistemic status: I am confident about most individual points but there are probably errors overall. I imagine if there are lots of comments on this piece I’ll have changed my mind by the time I’ve finished reading them.

I was having a conversation with a friend, who said that EAs weren’t loyal. They said that the community’s recent behaviour made them fear that they would be attacked for small errors. Hearing this I felt sad, and wanted to understand.

Tl;dr

I feel a desire to be loyal. This is an exploration of that. If you don’t feel that desire, this may not be useful

I think loyalty is a compact between current and future members on what it is to be within a community—“do this and you will be safe”

Status matters and loyalty is a “status insurance policy”—even if everyone else doesn’t like you, we will

I find more interest in where we have been disloyal than where we ought to be loyal.

Were we disloyal to Bostrom?

Is loyalty even good?

Were people disloyal in sharing the Leadership Slack?

Going forward I would like that

I have and give a clear sense of how I coordinate with others

I take crises slowly and calmly and don’t panic

I am able to trust that people will keep private conversations secret when they discuss no serious wrongdoing, and they can trust me to do the same

I feel that it is acceptable to talk to journalists if helping a directionally accurate story, but that almost all of the time I should consider this a risky thing to do

This account is deliberately incoherent. It contains many views and feelings that I can’t turn into a single argument. Feel free to disagree or suggest some kind of synthesis.

Intro

Testing situations are when I find out who I am. But importantly, I can change. And living right matters to me perhaps more than anything else (though I am hugely flawed). So should I change here?

I go through these one by one because I think this is actually hard and I don’t know the answers. Feel free to nitpick.

What is Loyalty (in this article)?

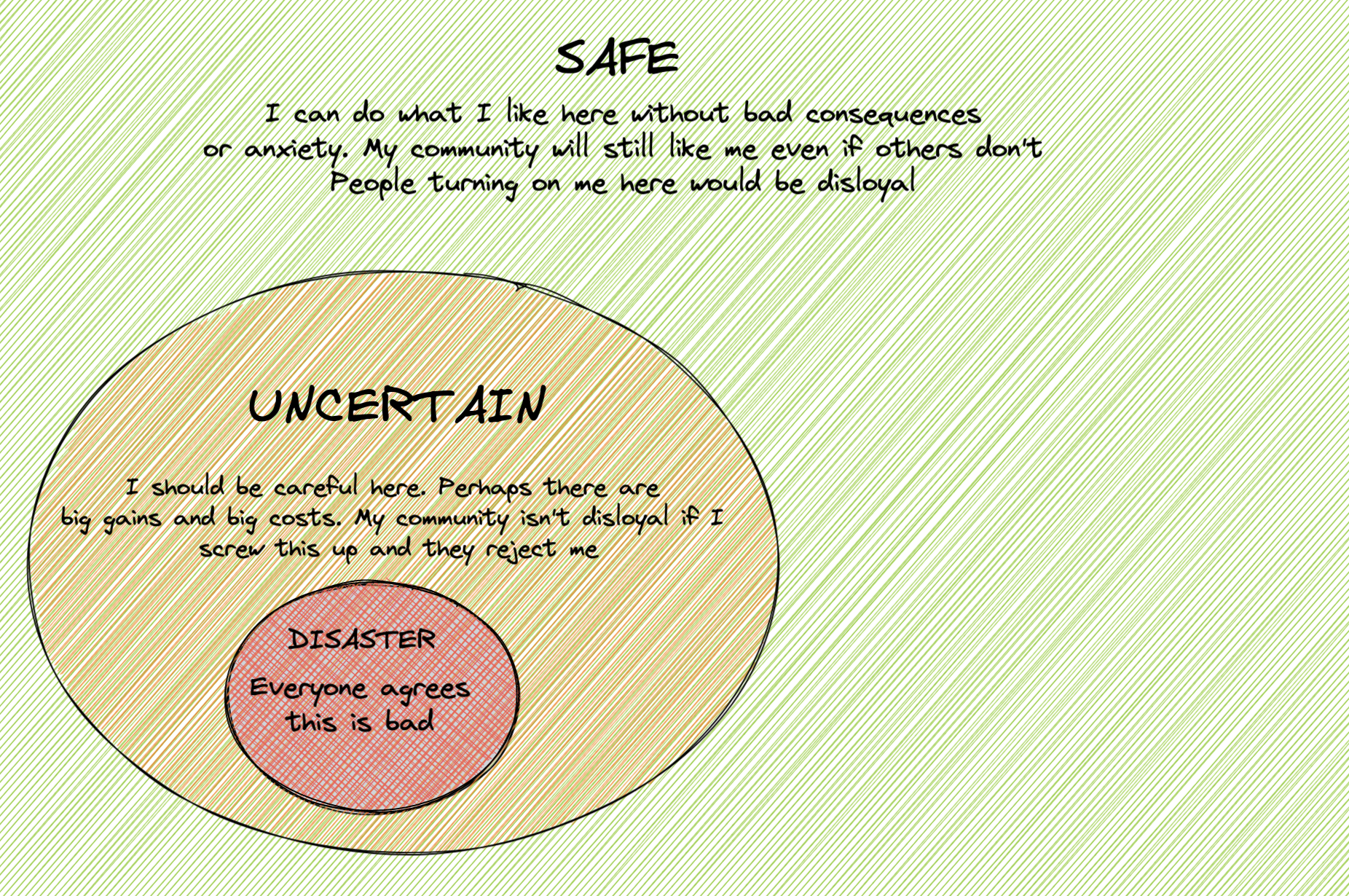

I think loyalty is the sense that we do right by those who have followed the rules. It’s a coordination tool. “You stuck by the rules, so you’ll be treated well”, “you are safe within these bounds, you don’t need to fear”.

I want to be loyal—for people to think “there goes Nathan, when I abide by community norms, he won’t treat me badly”.

The notion of safety implies a notion of risk. I am okay with that. Sometimes a little fear and ambiguity is good: I’m okay with a little fear around flirting at EAGs because that reduces the amount of it, and I’m okay with ambiguity in how to work in a biorisk lab. There isn’t always a clear path to “doing the right thing”, and if, in hindsight, I didn’t do it, I don’t want your loyalty. But I want our circles of safety, uncertainty and disaster to be well calibrated.

Some might say “loyalty isn’t good”: that we should seek to treat those in EA exactly the same as those outside. For me this is equivalent to saying our circles should be well calibrated. If someone turns out to have broken the norms we care about, then I already don’t feel a need to be loyal to them.

But to me, a sense of ingroup loyalty feels inevitable. I just like you more, and differently, than those outside EA. It feels naïve to say otherwise. Much like “you aren’t in traffic, you are traffic”, “I don’t just feel feelings, to some extent I am feelings”.

So let’s cut to the chase.

The One With The Email

Nick Bostrom sent an awful email. He wrote an apology a while back, but he wrote another to avoid a scandal (whoops). Twitter at least did not think he was sorry. CEA wrote a short condemnation. There was a lot of forum upset. Hopefully we can agree on this.

Let’s look at this through some different frames.

The loyalty frame

I think I was a bit disloyal to Bostrom. I don’t know him, but I don’t think he’s the thing described by “a racist” [1]. Likewise, I am relatively confident that the email, while awful, isn’t some coded call to the far right. It was a point about truth framed in an edgy way. Then the apology was clumsy and self-righteous and failed to convey to many readers that he was sorry he sent it. To many it read more like he was sad that he had to write an apology. So at the time I liked that the CEA criticism meant that EA probably wouldn’t be dragged into another scandal.

But what did I owe Bostrom? I don’t think I owe public EAs some special loyalty as EAs, nor do I want it from you if you dislike my actions. But I think I owe it to public figures who create valuable work and aren’t racists not to imply that they are. At the same time, it’s okay to say he was a fool to write it, but only if I’m clear about my general views.

I think it’s underrated how productive Bostrom is. He’s created loads of useful ideas. I’m glad he did and I’m glad that he answers our emails. I probably would want Bostrom involved much more than a random EA (though I wouldn’t want a culture that drives away productive and non-productive people alike). I sense this is actually the nub of the discussion, though it feels aversive to raise—how many EAs leaving is Bostrom worth?

Finally, there is an increased benefit to helping people who are being attacked. Émile Torres was writing an article which would portray that email as evidence of Bostrom’s racism. I think that portrayal was incorrect, and so Émile should not benefit from attempting it.

The harm frame pt. 1

Some people really disliked Bostrom’s apology. But I sense that, more than that, they disliked an immediate community response to protect him. I guess these people want to feel confident that people they respect won’t send racist emails, and not to fear that scientific racism undergirds bad treatment they receive.

I have heard a number of people say things like this so I take it seriously. Bostrom’s apology knocked their confidence that EA was safe. Or would be safe. I guess a few people will leave the EA community as a result. That’s a real cost.

The people I talked to who hold this view liked CEA’s response. It gave them confidence that this wouldn’t happen again.

The harm frame pt. 2

Some people disliked the condemnation of Bostrom. I guess they felt that while he had been unwise a long time ago, he wasn’t a racist, so we shouldn’t imply he was.

For them the backlash against saying this was scary. They were articulating true and relevant beliefs but were being told to keep quiet because it was upsetting.

They disliked CEA’s response because they thought it was literally false, implied that all EAs supported it and was PR-ey as opposed to being straightforward. They thought it was the wrong process (an edict from on high that didn’t need to happen) and the wrong answer (Bostrom was dumb but not a racist).

The honesty frame

I think the CEA statement does pretty mediocrely on the onion test, in that I don’t think the first reading gives the impression of what the institution actually believes.

Effective altruism is based on the core belief that all people count equally. We unequivocally condemn Nick Bostrom’s recklessly flawed and reprehensible words. We reject this unacceptable racist language, and the callous discussion of ideas that can and have harmed Black people. It is fundamentally inconsistent with our mission of building an inclusive and welcoming community.

— The Centre for Effective Altruism

For me, the first line is fine. I think we can get into it, but many of the things we believe actually sit under that idea. On the rest, while I can’t actually disagree with any of it, I think that it implies Bostrom was racist and... I don’t think he was. Moreover, I think it sends a couple of signals:

“If you say something that is reasonably interpreted as racist, and then don’t appear to recognise the potential harm to the community you are apologising to, we will make a statement that emphasises our commitment to creating a safe community for that group of people”

and also “if you said a dumb thing 25 years ago and then clumsily apologise for it, we will condemn you, because we are scared of controversy”

I have complex feelings about the first signal and I guess I’ll pass on whether I agree or disagree. In hindsight, I don’t support the second, as cheap as that is to say now.

It’s worth considering what signal things send/what norms they set. I guess here, the signals are both “don’t say stuff that upsets people” and “be careful what you say if it might damage our reputation” and also “like everywhere else, racism is an issue mired in complexity, fear and status games”.

I think I’d prefer a unified signal that we all agreed with rather than these quite different signals to different groups.

Personally I could have sent a signal that:

I disagree with the email

I don’t want emails like that

I want better and more careful apologies than that

I am not going to tear someone apart over what isn’t true.

But I didn’t send such signals.

Attempt at consensus

To be honest, I struggle to weigh these harms to different groups. I know that usually we go with the “all racism must be dealt with swiftly and maximally” approach, but I think my desire to do that comes more out of fear than truth. I think there was a way to have the discussion that left most people feeling both that the EA Forum wasn’t a place for racism and that standing up for Bostrom not being a racist wasn’t an awful thing to do. I think we failed there. And I see that as a community failure.

To avoid a sort of general “we all could have done better”, I think each party could have done better in specific ways. Those defending Bostrom could have talked more about their emotions: this wasn’t just a discussion about truth, I think it was about them feeling safe in the community in future. Likewise, those who liked CEA’s response shouldn’t be pushing for a rapid response to every scandal. Cold takes are good, I think (though as I’ve written elsewhere, the silence on FTX is almost farcical at this point).

I think an additional complexity is that Bostrom’s comms were just really bad here. To the extent that he needs to do comms, he should either stop or get better at them. Likewise, his org, I guess, needs to hire people from both parties here, so it’s worth understanding and engaging with both. I don’t think it’s good to turn on people in a crisis, but often crises do reveal actual faults or strengths. Bostrom could have revealed himself as a master communicator. EA could have revealed itself as wise and balanced when managing such issues. In both cases that’s not what happened.

The underrated thing is that there really is some kind of tradeoff between Bostrom feeling he’s part of EA (which might affect his productivity or how much he recommends EA ideas) and some people being part of EA who otherwise wouldn’t be. It feels right to surface that these things are currently at odds. And perhaps an honest accounting of what we think Bostrom currently believes and does fits in here too, though I don’t know where.

I think it’s pretty cowardly to write something like this and not attempt to give actual solutions. So, here is what I wish I’d done at the time.

Written a post saying “We can all agree the Bostrom email was really bad, but can we take a moment before responding? This is fraught and we could hugely upset one another”

Felt pretty uncertain that CEA’s statement was better than silence. Yes, it conveyed that they take this seriously, but it also set a precedent for rapid, strident responses, which imply things that I don’t believe. I don’t know what pressure journalists were applying, so this might be wrong, but I am uncertain.

Perhaps I’d have liked CEA to define some red lines around bad actions that might happen rather than just statements, e.g. talking about what would follow if Bostrom had sent that email today. That feels like it would have been easier to judge and a clearer signal.

That said (and I’m biased as someone who likes Shakeel), I do think Shakeel takes more flack than I’d expect, and a lot of it is quite personal. If you wouldn’t say it to Ben Todd or Amy Labenz, don’t say it to Shakeel Hashim, and in particular, don’t say it on Twitter if that’s not the topic at hand[2].

The EA Governance Slack Leaks

In the wake of the FTX crisis, the New Yorker wrote an article with many excerpts from the EA governance Slack. In particular, it attempted to skewer Robert Wiblin for these comments:

[In reference to some article] This is pretty bad because our great virtue is being right, not being likeable or uncontroversial or ‘right-on’ in terms of having fashionable political opinions.

Again some frames:

The loyalty frame

Wiblin is a senior member in this community. He and others should be able to talk without fear of being quoted out of context. If he can’t then either he and others will discuss in some more private space or they will have to be less clear about what they think. Both of those options seem bad.

I am upset that someone leaked extensive amounts of private conversation without evidence of severe wrongdoing. And unlike much of what I say about whistleblowers[3] (who I think we could serve better), I think this is an offence which should get whoever did it blacklisted.

I say a lot of things. And I stand by the content of almost all of them. But I misphrase things all the time. I would not want my comments in a big magazine. And, absent serious wrongdoing, I don’t think you should either.

The truth frame

This is, I guess, a thing Wiblin actually believes. Until the FTX crisis, I think I’d have agreed with him (it’s a bit more complex now: how does one weigh correctly predicting a pandemic against failing to spot a fraudster?). So why not publish it?

Because people are allowed not to say things. We don’t live in a panopticon, we can’t all read each others’ minds. And the general consensus is that that’s a good norm. So it should be here too.

An attempt at consensus

As you know, I’m a big fan of prediction markets, but I draw the line at markets on relationships (among other things). This is because I once mused about publicly forecasting a friend’s relationship and they were upset. Even though I wouldn’t have been saying anything untrue, some beautiful things cannot stand scrutiny. This was a dumb and callous mistake from me, but I resolved not to make it again. Hence, I try to be especially cautious around surfacing things that are vulnerable and personal.

While not exactly the same, I think that good governance requires some privacy. It requires space to think about your views, to change your mind and speak freely. We should have an extremely strong prior against sharing these conversations.

This leak, in my opinion, did not meet this bar. Had the Slack contained evidence that EA figures knew about FTX’s fraud, that certainly would have met it, but to me this falls short by at least an order of magnitude. And seemingly a lot of documents were shared. There were no crimes, just opinions. Currently, I think whoever shared it should be ashamed of themselves, and I would seriously consider kicking them out of community events. For clarity, I absolutely do not think that about those who gave sexual harassment stories to Time. For me the two are as night and day: by all means give evidence of severe wrongdoing, but never share verbatim random conversations for political gain.

Some early reviewers think that final point is a crux: that sometimes it’s acceptable to use internal private knowledge to steer EA if people aren’t convinced by your arguments. I think such thinking destroys trust and turns the whole community into an adversarial environment. We are a community who argue our positions, not one that seeks to use newspapers to win arguments we couldn’t win by discussion.

Talking With Journalists

It seems to be the consensus that we shouldn’t do this: that the costs outweigh the benefits.

The cost-benefit frame

If a journalist contacts you it’s unlikely that you are going to significantly shift the narrative of their story. But you might accidentally give them a quote that makes the story a lot worse or leak a load of private slack messages, smh.

Everyone likes to claim that they respect communities that are transparent, but I don’t see that in practice. Does OpenPhil gain respect from people other than us for making its grants easy to critique? My guess is no. So really we should optimise transparency for what we want to know rather than for what outsiders want.

Here, talking to journalists has a high downside and small upside. You play in an adversarial environment. They are experts, I am not.

The reputation/accuracy frame

I think you should aim for a good reputation, rather than good PR. And I think you should aim for it by actually… being good and then communicating that accurately. If I am bad, I deserve a bad reputation.

So if we do bad things and a journalist asks about them, I feel a strong temptation to go on the record if I think the story is framed correctly (though not sharing private quotes from others). I usually try and get friends to talk me out of it. What I will say is that I don’t mind worsening the image of EA when that is reality.

The loyalty frame

Here, my gut says that talking to journalists is a betrayal of the community—others don’t expect me to do it so it’s disloyal. I don’t want to read people speaking for me when it’s not true or misframing discussions.

I guess at times the “loyalty” part of me can see journalists as our outgroup who should at worst be placated, but at best ignored. This is soldier mindset, though before criticising it, note that it seems very standard in other large communities.

I guess I’d like us to shift our loyalty to “truth in an adverse information environment”, so that without being naïve we can still acknowledge or even publicly affirm the flaws within EA.

The Notorious S.B.F.

I’m not gonna introduce this one. You know the story.

The loyalty frame?

I thought SBF was one of the best people alive. Within 12 hours I turned on him. Was I disloyal?

And do I owe loyalty to a fraudster? What about a fraudster who was encouraged to take big risks by people like me?

The main response from people I’ve talked to about this article has been about the speed with which I heel-turned on SBF, before I could be sure. My main response here is that there are not many legal ways you can lose $8bn and that my work as a forecaster teaches me to update fast. Within ~6 hours, I’d have been happy to bet at 90%+ odds that SBF had done immoral things and I did take flack for the position I took. I think that position was defensible then and I think it’s defensible now.

In that way, I don’t feel loyal to people who did immoral things. You should not expect me to defend you if you do them. Perhaps my friend was right to fear being hung out to dry. The question is which actions they are concerned about.

But was what SBF did immoral? Austin makes the case that SBF was taking risks and allocating money towards good causes. That is behaviour we should want to stand by if it goes badly. Maybe especially if it goes badly, since if it goes well it has rewards in money and status.

I don’t find this case compelling, but I do find my not finding it compelling awfully convenient. I don’t know.

The coherence frame

If SBF had won his bets but then I’d found out about the fraud, would I have denounced him? I doubt it. In that world, I’d justify it by saying “he knew the risks he was taking” and then happily take his cash. Maybe I ought to denounce him in that world, but I know myself well enough to say that, without this moment to stop and consider, I probably wouldn’t have.

That’s not to say I won’t act differently in future, though I really hope this doesn’t happen again.

This leaves me with a problem if I don’t want to be a charlatan here too. And honestly, I don’t know the answer. Or maybe I’m afraid of the answer. Am I a charlatan? Integrity really matters to me and it scares me to think, maybe I just don’t have much here.

The empathy frame

I think I am badly compromised by finding it much easier to empathise with SBF than with the millions who lost money on FTX (though I think my public statements are things I’d stand by).

Then again, I find it much easier to empathise with the people who lost money on FTX than with the people who won’t live if we all die. Do these ends justify these means? But like, actually?

Perhaps this isn’t really in the discussion

One thing I think is underrated is that maybe this wasn’t really about the ends justifying the means. Maybe it was just incompetence and then fraud to cover it up. At that point I don’t feel much loyalty.

No attempt at consensus

I think my response is suspect. Even with the knowledge of fraud I probably would have supported SBF et al if successful and denied them if not. If there was another possibly fraudulent EA I think I’d deny them much sooner.

I don’t know how to synthesise this. Let me know if you do. Maybe write a comment that others can agree with.

Some throwaway remarks on loyalty in regard to other recent issues

Non-Longtermism

Has it been disloyal to global health folks to use the positive image they have generated for pushing catastrophic risk work?

On the yes side: they gained the good reputation and now have to watch as it is used, and sometimes squandered, by causes they don’t support.

On the no side, EA is about the most effective use of resources and reputation is a resource. I am not (I think) a longtermist and I think the case for more catastrophic risk spending is pretty unassailable. I think global health folks did in some way sign up[4] for their good work to be used to support better work if the community found it.

It’s worth noting that global health folks can tug things back in their direction. I think there is a strong case that much non-xrisk longtermist work is very speculative and so we should go with small positives rather than big question marks. They can make this case, or find even more effective interventions.

I lean quite strongly “no” here. I think most arguments against the importance of catastrophic risk are bad. I would support global health/animal welfare folks if they left, though I’d be sad.

Going forward (for me)

I am okay with the idea that we messed this up, so here are some personal thoughts:

I like the space we provide for truth-seeking and want that to be maintained even if we take flack from time to time

I want a sense that we are unlikely to be in a constant state of scandal and that eugenics isn’t gonna be a regular topic

If you (not Bostrom) said or did something moderately bad a long time ago and apologise poorly, I’m gonna be annoyed, but I am still probably happy to work with you. I don’t want you, the reader, to live in fear. But maybe talk to someone before you apologise if you tend to be bad at that kind of thing

If you leak private conversations that point to serious wrongdoing, good job. Let’s not do serious wrongdoing.

If you leak private conversations without serious wrongdoing, I won’t respect you and I won’t invite you to EA stuff. Though I might sometimes work with you if I think it’s a really good idea

I won’t write prediction markets based on private info (unless it’s very bad behaviour) but I migggght sometimes bet on them especially if anonymous. I’m open to correction here, but I really like information being free

If you talk to a journalist and help write a tough but fair article, good job. I have no desire for journalists to be easy on EA

If you talk to a journalist and fuck it up I’m gonna be upset. I’m not gonna shun you probably, but I’ll just be upset. Become better calibrated. Though really I’m the person who needs to hear this

If you defraud $8Bn I am gonna be sad and confused and upset and guilty and probably less productive for a long time. I think you deserve shunning (though I wish you well, as I wish all individuals well). If it’s actually a big bet with huge upside, please consider the overwhelming likelihood that you are a megalomaniac and deluding yourself

Going forward (more broadly)

I would like to:

Leave people with a clear sense of how we coordinate together

Have an epistemic environment which is rigorous but only rarely discusses subjects we hate

Not turn on people for politically expedient reasons

Take crises slowly and calmly and not panic

Be able to trust that people will keep private conversations secret and have them trust me that I will do the same

Feel that it is acceptable to talk to journalists if it helps a directionally accurate story

Thanks to everyone who looked over this. In the past, I have found that thanking people for controversial blogs is controversial in itself. Suffice it to say I’m grateful and that ~6 people looked over it.

In the Comments

I’ve written 5 proposals for possible future EA community norms around loyalty: agreevote if you agree, disagreevote if you don’t (neither affects karma). Can you write statements that people agree with more?

Upvotes and downvotes are for how important things are to be seen. Some things that are true are boring and some things that are false are worth reading.

[1]

I doubt he treats people significantly differently on the basis of race, though I wouldn’t be surprised if he sometimes makes people uncomfortable while talking about it. I also wouldn’t be surprised if people of colour were a bit less keen to work at his org after this.

[2]

Topic and medium matter. While I think there is a forum culture of prising apart any issue, this isn’t true everywhere. Twitter is not a place to randomly challenge people you don’t know well unless that is a norm they have consented to. Feel free to do it to me, do not feel free to do it to random EAs. It’s like striding up to someone and poking them in the chest at a party. It comes across as aggressive. And if you knowingly do something aggressive, I’m gonna think you intend to be aggressive.

[3]

This is a wip article, but it’s evidence that I was pro-whistleblower even before FTX https://forum.effectivealtruism.org/posts/QCv5GNcQFeH34iN2w/what-we-are-for-community-correction-and-scale-wip (there is some chance I edited it after and forgot but I don’t think so)

[4]

One reviewer noted they didn’t sign up for SBF’s fraud. Well, I didn’t either. I think that’s a different problem from a global health/catastrophic risk split.

Proposed community norm. Agreevote if you agree.

Be very careful when talking to journalists. Have a strong prior against it unless you are confident they are honest, in which case have a weak prior towards it. Consider what story they are trying to write. Will your comment make it more accurate or not? And be careful: they are experts and you aren’t. But if you trust them, it’s okay to give accurate information in areas where EA’s reputation is wrong (even if it’s too positive).

I agreevoted but I don’t think this is a community norm. It’s just a life skill.

I think many communities have a norm against talking to journalists. Or talking to them in a specific way.

Eg political communities will probably burn you if they find you talking to journalists, but political people cultivate relationships with journalists who they feed info to to advance their aims.

community norm:

Fraud and other morally questionable behavior should always be denounced, regardless of whether it “paid off”.

Would you have done so in that other world? I’m not sure I would have.

Proposed community norm. Agreevote if you agree.

People should not be condemned for things we think they don’t actually believe or that we don’t actually think are issues. We should at least weather more than a day of criticism before folding to stuff like this

Two separate statements there . . .

Sure, upvote only if you agree to both.

Proposed community norm. Agreevote if you agree.

Do not share private information publicly unless it is of serious wrongdoing. It damages our ability to trust each other.

Disagreevote because “private information” is too vague and likely too broad. What you’re describing as far as the Slack channel is what we would call “deliberative process” in my field—and a pattern of leaking that kind of information is pretty corrosive of the ability to explore ideas freely. On the other hand, I think the norm should be significantly weaker for official actions of organizations, even if knowledge of those actions is “private.” And I think whether the person told you the information in confidence is a pretty significant factor here.

I suggest people write their own attempts at community norms.

Proposed community norm. Agreevote if you agree.

We do not want to be a community where upsetting issues unrelated to doing good are regularly discussed. Especially not in a callous or edgy way. If they need to be discussed, we should be able to, but they should not be discussed frequently. If we became a community that discussed upsetting issues regularly, we wouldn’t want to be here or invite others to be here.

edited for clarity.

Technically I agree, but I feel like some of your proposals lead people to believe there’s a big problem when there isn’t one. We didn’t bring up The Email; Torres did. And then lots of people understandably attacked a well-respected member of the community and then lots of people understandably defended him. We weren’t discussing it to be “edgy” and we don’t discuss it “regularly.”

I suspect there were a number of callous comments given the sensitive nature of the topic, so I might support a version of this that’s about reminding ourselves of the harm that can do as and when appropriate, but I think the forum mods are pretty good at this.

The last clause is difficult to understand—could you clarify?

How’s that?

Clearer! Thanks!

This seems somewhat overconfident, knowing what to me seems like robust results from e.g. resume experiments:

I acknowledge that you did use the phrase “significantly differently”, but still I would not consider discrimination in hiring processes insignificant. That said, I do not think this detracts much from your overall argument and I might be nit-picky here, but it felt important to me to point out. In other words: I would expect most people to exhibit somewhat significantly differential treatment of people based on race.

I am no expert in this, perhaps there is solid refutation of resume experiment results—I would be happy to learn that my current understanding of this is wrong as it would indicate a less discriminatory world!

All the more reason for CV blind hiring!

Absolutely agree! I like that Rethink Priorities explicitly ask people not to include a photo in their applications (if I remember correctly):