BitBets: A Simple Scoring System for Forecaster Training

This post follows up my report on running a forecasting tournament at an EA retreat (teaser: the tournament ended up involving bribery, stealing, and game theory). For the tournament, I wanted a method of scoring predictions that could be computed by hand, which would simplify the setup and avoid depending on internet or computer access. The scoring rule I came up with for the tournament met this criterion, but it was ad hoc in its construction and had counter-intuitive properties.

In this post I introduce BitBets, a prediction scoring rule which is simple, intuitive, can be computed by hand, and can be made more or less complicated to suit the sophistication of the forecasters.

This post is structured as follows:

I introduce the setting and notation of prediction scoring rules.

I describe logarithmic scoring, which is popular and is the basis of the scoring rules that follow.

I highlight Spencer Greenberg’s contribution to scoring rules for practical training: the criteria he provides for designing a user-friendly scoring system, and the scoring rule he proposes to meet these criteria.

I describe logarithmic point scoring: the scoring system I used in the forecasting tournament I ran.

I describe BitBets, my currently preferred scoring system.

I summarise the contexts for which different scoring rules are appropriate.

Setting

We assume forecasters are predicting the answers to yes/no questions.

We will use the following notation:

$c \in \{0, 1\}$ is a binary variable indicating whether a prediction was correct. $c = 1$ indicates that the prediction was correct, and $c = 0$ indicates that the prediction was incorrect.

$p \in [0.5, 1]$ is the confidence a forecaster assigns to a prediction. $p = 1$ indicates that the forecaster thinks it is impossible that they will be incorrect, and $p = 0.5$ indicates that the forecaster is guessing.

$S(p, c)$ is a scoring rule. That is, a function which takes as input the forecaster’s confidence and correctness on some prediction, and outputs the number of points the forecaster should be awarded.

A scoring rule should be

Proper: A proper scoring rule is one which is not gameable. That is, there is no strategy a forecaster can use which will win more points in expectation than honestly reporting their confidence. A strictly proper scoring rule is one where, if a forecaster reports any confidence other than their true confidence, they will in expectation lose points. A proper scoring rule remains proper after a positive linear rescaling (multiplying by a positive constant and adding a constant).

Logarithmic Scoring

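A popular scoring system is logarithmic scoring:

$$S(p, c) = \begin{cases} \log_2 p & \text{if } c = 1 \\ \log_2 (1 - p) & \text{if } c = 0 \end{cases}$$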

We can illustrate logarithmic scoring graphically as:

As you can see, you can never gain points from logarithmic scoring; you can only lose points. The more confident you are in an incorrect prediction, the more points you lose. At best, you can break even if you make a correct prediction with 100% confidence.

Here are some reasons why logarithmic scoring is nice:

It is strictly proper.

Its magnitude is the surprisal of the outcome for the forecaster, measured in bits when using base-2 logarithms.

The sum of log scores for a series of independent predictions is equal to the log score for a single prediction on the Cartesian product of the outcomes of all the predictions. This is because $\log(p_1 p_2 \cdots p_n) = \log p_1 + \log p_2 + \cdots + \log p_n$.

Up to a linear transformation, log scoring is the only strictly proper rule which satisfies “locality” in predictions with more than two outcomes. That is, the score depends only on the probability you assign to the outcome which ends up obtaining, not on the probabilities for outcomes that don’t obtain.
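A minimal Python sketch of base-2 logarithmic scoring, assuming confidences in $[0.5, 1]$ as in the Setting section. The helper names (`log_score`, `expected_score`, `best_report`) are illustrative, not from any library. The grid search checks numerically that honest reporting maximises expected score, and the last lines check the additivity property.

```python
import math

def log_score(p: float, c: int) -> float:
    """Base-2 log score: log2 of the probability assigned to the outcome that occurred.

    p is the reported confidence that the prediction is correct; c is 1 if it was correct, else 0.
    """
    q = p if c == 1 else 1.0 - p
    return math.log2(q) if q > 0 else -math.inf  # 100% confident and wrong: unbounded loss

def expected_score(score, true_p: float, reported_p: float) -> float:
    """Expected points from reporting reported_p when the true chance of being correct is true_p."""
    return true_p * score(reported_p, 1) + (1 - true_p) * score(reported_p, 0)

def best_report(score, true_p: float, steps: int = 1000) -> float:
    """Grid-search the report in [0.5, 1) that maximises expected score."""
    grid = [0.5 + 0.5 * i / steps for i in range(steps)]
    return max(grid, key=lambda r: expected_score(score, true_p, r))

# Strict properness, checked numerically: the best report is (close to) the true confidence.
for true_p in (0.6, 0.8, 0.95):
    print(true_p, best_report(log_score, true_p))

# Additivity: two independent correct predictions at 80% and 90% score the same
# as one correct prediction at 0.8 * 0.9 = 72% on the combined outcome.
print(log_score(0.8, 1) + log_score(0.9, 1), math.log2(0.8 * 0.9))
```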

Greenberg Scoring

Logarithmic scoring has properties which are attractive to philosophers and mathematicians, but which tend to be counter-intuitive to forecasters. Spencer Greenberg has argued that a scoring rule should have the following properties to be intuitive to users:

Higher is better: You should aim to maximize points.

Upper bound: You can’t gain arbitrarily many points by being 100% confident and being right.

Lower bound: You can’t lose arbitrarily many points by being 100% confident and being wrong.

Positive is good, negative is bad: You gain points iff you make a correct prediction, you lose points iff you make an incorrect prediction.

Confidence is good when correct, bad when incorrect: The scoring system should award points in the following situations, ordered from most to fewest:

confident and right

unconfident and right

unconfident and wrong

confident and wrong.

Total uncertainty should give zero points: Answering with 50% confidence should be equivalent to passing and be worth zero points for either a win or a loss.

Continuity: A small change in confidence should result in a small change in points.

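With these properties as a guide, Greenberg designed the following scoring rule to optimise for usability:

$$S(p, c) = A \cdot \frac{\log (q / r)}{\log (m / r)}, \qquad q = \begin{cases} p & \text{if } c = 1 \\ 1 - p & \text{if } c = 0 \end{cases}$$

where

$A$ is the maximum score users can be awarded per prediction.

$m$ is the maximum confidence users are allowed to assign to predictions.

$r$ is the confidence one would have if randomly guessing an outcome (equal probability assigned to all outcomes), so $r = 0.5$ for yes/no questions.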

Note that Greenberg scoring is really logarithmic scoring “under the hood”, but with a confidence limit and linear scaling.

Greenberg’s scoring rule is illustrated below:

This is the scoring rule used in the Calibrate your Judgement app, created by clearerthinking.org and the Open Philanthropy Project.
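A minimal sketch of Greenberg-style scoring, assuming the form implied by the description above: the log score of the outcome that occurred, with confidence capped at a maximum and linearly rescaled so that guessing scores 0 and a correct answer at maximum confidence scores the maximum. The defaults `A=100` and `m=0.99` are hypothetical, not necessarily the settings used in Calibrate your Judgement.

```python
import math

def greenberg_score(p: float, c: int, A: float = 100.0, m: float = 0.99, r: float = 0.5) -> float:
    """Log score rescaled so that q = r gives 0 points and q = m gives A points.

    p: reported confidence that the prediction is correct (must lie in [r, m]).
    c: 1 if the prediction was correct, 0 otherwise.
    A, m: hypothetical maximum score and maximum allowed confidence.
    r: confidence of a random guess (0.5 for yes/no questions).
    """
    if not r <= p <= m:
        raise ValueError(f"confidence must lie in [{r}, {m}]")
    q = p if c == 1 else 1.0 - p  # probability assigned to the outcome that occurred
    return A * math.log(q / r) / math.log(m / r)

# Check some of Greenberg's criteria for the default parameters.
assert greenberg_score(0.5, 1) == 0 and greenberg_score(0.5, 0) == 0  # guessing is a pass
assert math.isclose(greenberg_score(0.99, 1), 100.0)                  # upper bound
assert math.isfinite(greenberg_score(0.99, 0))                        # finite lower bound
# Confident and right > unconfident and right > unconfident and wrong > confident and wrong.
assert greenberg_score(0.9, 1) > greenberg_score(0.6, 1) > greenberg_score(0.6, 0) > greenberg_score(0.9, 0)
```

Because the rule is a positive rescaling of the log score on $[r, m]$, it inherits properness within that range; the cap is what buys the finite lower bound.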

Logarithmic Point Scoring

Greenberg’s scoring rule is well suited to a calibration app like Calibrate your Judgement. But when running an in-person forecasting tournament which is accessible to beginners, there are further desiderata we should aim for:

Mathematically trivial: Not all aspiring forecasters are mathematically sophisticated. For the sake of inclusivity, the scoring rule should not require any fancy maths to understand. Even if you are deeply familiar with the mathematical properties of logarithms, this won’t necessarily translate into having a good intuition about the risks and payoffs of logarithmic scoring.

Hand-computable: Scores should be computable by hand, without the aid of technology. This is so 1) Tournaments can be run even when not all participants have access to computers or the internet; 2) It’s more satisfying when you can solve problems using only your brain unaided by technology; 3) Machines kill human connection. If you want forecasters to have fun and bond with one another, have them talk to each other rather than stare at screens.

When I ran a forecasting tournament at the EANZ retreat, I used what I’m calling “logarithmic point scoring” to achieve these desiderata. Essentially, I restricted users to only reporting one of 4 confidences, so that the logarithmic scores for these confidences could be pre-computed and looked up in a small table. The logarithmic scores were scaled to achieve Greenberg’s criteria (except for continuity), and rounded to the nearest integer.

The confidence values were chosen to roughly map onto the intuitive confidences of “don’t know”, “more likely than not”, “probable”, and “near certainty”.
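The pre-computation described above might look like the following sketch. It assumes the scaling is Greenberg-style (zero at 50%, a hypothetical maximum of 10 points at the highest allowed confidence) followed by rounding to the nearest integer; the allowed confidences are placeholders, since the tournament’s actual values are not listed here.

```python
import math

def log_point_table(allowed, max_points, r=0.5):
    """Pre-compute rounded, rescaled log scores for a small set of allowed confidences.

    Returns {confidence: (points if correct, points if wrong)}.
    """
    m = max(allowed)  # the highest allowed confidence earns the maximum score
    scale = max_points / math.log(m / r)
    table = {}
    for p in allowed:
        win = round(scale * math.log(p / r))
        loss = round(scale * math.log((1 - p) / r))
        table[p] = (win, loss)
    return table

# Hypothetical settings, not the ones used at the retreat.
print(log_point_table([0.5, 0.6, 0.8, 0.95], max_points=10))
```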

Logarithmic point scoring is plotted below as a series of points. For comparison, Greenberg scoring is also included as a dashed line.

It’s debatable whether logarithmic point scoring really achieves mathematical triviality. On the one hand, computing your score only requires adding up integers from a table. On the other hand, to understand where those integers came from you still need to understand logarithms and linear scaling.

Furthermore, there’s the glaring problem that if your true confidence is between two of the allowed values, you don’t know which one you should choose! If you do the expected value calculations, you’ll find that the cutoffs between when you should choose one confidence vs another are not very intuitive. For example, if you have anywhere between 73.3% and 93.9% confidence, the best strategy is to report 80% confidence.
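To see where the switch-over points in a lookup table fall, one can compare the expected points of each allowed report across a grid of true confidences. The table in this sketch is hypothetical (50%, 60%, 80%, 95% with made-up point values), so its cutoffs will not reproduce the ones quoted above; the point is the method.

```python
# Hypothetical lookup table: confidence -> (points if correct, points if wrong).
TABLE = {0.5: (0, 0), 0.6: (3, -3), 0.8: (7, -14), 0.95: (10, -36)}

def best_allowed_report(true_p, table):
    """Allowed confidence with the highest expected points; ties go to the first entry."""
    return max(table, key=lambda p: true_p * table[p][0] + (1 - true_p) * table[p][1])

def switch_points(table, steps=2000):
    """Scan true confidences in [0.5, 1] and record where the best report changes."""
    switches, previous = [], None
    for i in range(steps + 1):
        true_p = 0.5 + 0.5 * i / steps
        best = best_allowed_report(true_p, table)
        if best != previous:
            switches.append((true_p, best))
            previous = best
    return switches

for true_p, best in switch_points(TABLE):
    print(f"from {true_p:.4f}: report {best}")
```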

BitBet Scoring

Currently, my preferred scoring system for forecasting tournaments is what I will call BitBet scoring. It fulfills Greenberg’s criteria (except continuity), is quite simple and intuitive, can be computed with pen and paper, and avoids the failures of point scoring.

We simply offer the forecaster a series of bets with decreasingly favourable odds:

Even odds, so that if you’re right you are awarded +1 point and if you’re wrong you are awarded −1 point.

2:1 odds, so that if you’re right you are awarded +1 point and if you’re wrong you are awarded −2 points.

4:1 odds, so that if you’re right you are awarded +1 point and if you’re wrong you are awarded −4 points.

8:1 odds, so that if you’re right you are awarded +1 point and if you’re wrong you are awarded −8 points.

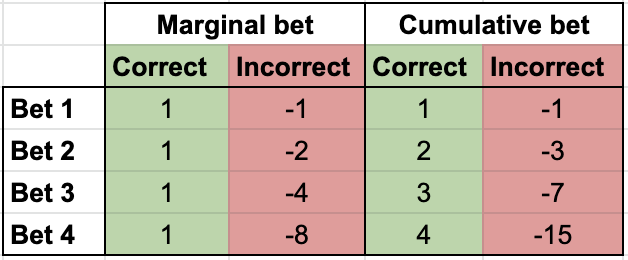

And so on with successive powers of two. Note that if a forecaster is willing to accept the $k$-th bet, then they should also accept bets $1$ to $k - 1$, which have more favourable odds. The marginal bets thus accumulate as shown in this table:

The idea is to award one point per “bit of confidence” in a correct prediction. That is,

50% confidence = 1:1 odds of being correct, which means +0 points if correct

66.7% confidence = 2:1 odds of being correct, which means +1 point if correct

80% confidence = 4:1 odds of being correct, which means +2 points if correct

and so on. The expected payoff of a marginal bet will be positive iff the forecaster’s subjective odds of winning are more favourable than the betting odds (see the appendix for additional details). Thus, the following table describes a proper scoring rule:

Thus rather than reporting a point confidence value (as all the previous scoring systems did), players report the interval within which their confidence falls.

For example, if my true confidence is 89%, then I report my confidence as “88-94%”. If my prediction is correct, then I will be awarded +4 points. If I am incorrect, I will be awarded −15 points.
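The bet ladder can be simulated directly: accept each power-of-two bet whose odds are below the forecaster’s own odds, and add up the payoffs. This is a minimal sketch; `bitbet_by_ladder` and its `max_bets` parameter (a cap on the ladder’s length) are hypothetical names. At exact bracket boundaries (e.g. exactly 80%) the forecaster is indifferent, so floating-point rounding may tip the result either way there.

```python
def bitbet_by_ladder(p: float, max_bets: int = 10) -> tuple[int, int]:
    """Return (points if correct, points if wrong) from accepting favourable power-of-two bets.

    Bet i (i = 0, 1, 2, ...) pays +1 if the prediction is correct and -(2**i) if it is wrong.
    """
    if not 0.5 <= p < 1:
        raise ValueError("confidence must lie in [0.5, 1)")
    odds = p / (1 - p)
    win, loss = 0, 0
    for i in range(max_bets):
        stake = 2 ** i
        if stake < odds:  # positive expected value: p * 1 - (1 - p) * stake > 0
            win += 1
            loss -= stake
    return win, loss

print(bitbet_by_ladder(0.89))  # (4, -15), matching the 89% example
for p in (0.6, 0.7, 0.85, 0.95):
    print(p, bitbet_by_ladder(p))
```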

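Formally, we define the bits of confidence corresponding to confidence $p$ as

$$b(p) = \log_2 \frac{p}{1 - p}$$

To satisfy the hand-computability and upper/lower bound criteria, we take the ceiling of this value and cap the number of bits at $B$:

$$\hat{b}(p) = \min\left(\lceil b(p) \rceil, B\right)$$

The scoring system is then given by

$$S(p, c) = \begin{cases} \hat{b}(p) & \text{if } c = 1 \\ -\left(2^{\hat{b}(p)} - 1\right) & \text{if } c = 0 \end{cases}$$

The equivalence between this scoring system and the above series of bets is shown in the appendix. The scoring rule is plotted below: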

As a bonus, BitBet scoring can also be converted into a continuous scoring rule by omitting the ceiling. The continuous version may be more appropriate in a training app.
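A minimal sketch of the discrete closed-form score with a cap, plus a brute-force check that the number of bits maximising expected score matches the ceiling formula. The cap of 5 bits and the helper names are hypothetical. Every confidence inside a bracket earns the same score, which is why the rule is proper but not strictly proper.

```python
import math

def bits_of_confidence(p: float) -> float:
    """log2(p / (1 - p)): the forecaster's odds expressed in bits."""
    if not 0.5 <= p < 1:
        raise ValueError("confidence must lie in [0.5, 1)")
    return math.log2(p / (1 - p))

def bitbet_score(p: float, c: int, cap: int = 5) -> int:
    """Discrete BitBets: claim ceil(bits) (at most `cap`); win that many points or lose 2**n - 1."""
    n = min(math.ceil(bits_of_confidence(p)), cap)
    return n if c == 1 else -(2 ** n - 1)

def best_bits(true_p: float, cap: int = 5) -> int:
    """Number of bits that maximises expected score for a forecaster with confidence true_p."""
    return max(range(cap + 1), key=lambda n: true_p * n - (1 - true_p) * (2 ** n - 1))

for p in (0.6, 0.7, 0.85, 0.89, 0.93):
    claimed = min(math.ceil(bits_of_confidence(p)), 5)
    print(p, claimed, best_bits(p), bitbet_score(p, 1), bitbet_score(p, 0))
```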

Also, framing forecasting in terms of “bits of confidence” raises interesting questions like

What is the maximum number of bits of confidence achievable by a calibrated human forecaster?

When a belief feels “certain”, roughly how many bits should I assign to it?

What is the median number of bits of confidence among the beliefs I hold?

One final virtue of BitBets: in my previous post I asked the question

After you’ve introduced someone to forecasting with a quick tournament, how can you move them up the ladder of forecasting sophistication until they become superforecasters?

BitBets gives us a natural ladder construction method. Start with a low cap on the maximum bits; restrict novices to at most assigning three bits to predictions, for example. If someone can’t discriminate between a zero-bit belief and a one-bit belief, then there’s no point in giving them the option of ten-bit beliefs.

After the forecaster has demonstrated mastery over the current cap, incrementally extend it. For example, the forecaster first masters the question: do you have more or less than one bit of confidence?

We can do this by requiring forecasters to meet some kind of reserve requirement for the points they hold, analogous to the reserve requirements of central banks. For example, perhaps you’re not allowed to make a bet that could lose 6 points unless you have earned 5 points already.

Conclusion

Under what circumstances should you use one or another scoring rule? If your goal is simply to evaluate the quality of forecasters, logarithmic scoring is a simple and effective solution. If your goal is to provide forecasters meaningful feedback in an in-person tournament, BitBets looks like a clear winner to me. It’s simple, intuitive, can be computed by hand, and can be adjusted in complexity to suit the sophistication of forecasters. In general, I wouldn’t recommend logarithmic point scoring, as BitBets has all the same advantages plus some.

If providing feedback to forecasters through an app, then either BitBets or Greenberg scoring may be preferable. The points awarded for correct predictions increase as the log of the confidence ($\log p$) in Greenberg scoring, but as the log of the odds ($\log_2 \frac{p}{1-p}$) in BitBet scoring. I suspect this means that Greenberg scoring is better for calibrating forecasters at low degrees of confidence (e.g., 0.6 vs 0.7), whereas BitBets is better at calibrating forecasters at high degrees of confidence (e.g., 0.99 vs 0.999). Which of these is more important will depend on the context.
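One rough way to probe this suspicion is to rescale both rules’ winning points onto the same range (0 at 50%, 1 at a shared maximum confidence) and compare how much of that range each rule awards for moving between two confidences. In this sketch the shared maximum of 99.9% is a hypothetical choice, and BitBets is taken without its ceiling or cap so the two curves can be compared directly.

```python
import math

M = 0.999  # hypothetical maximum confidence shared by both rules

def greenberg_win(p, m=M, r=0.5):
    """Greenberg-style points for a correct prediction, scaled so 0.5 -> 0 and m -> 1."""
    return math.log(p / r) / math.log(m / r)

def bitbet_win(p, m=M):
    """Uncapped bits of confidence for a correct prediction, scaled so 0.5 -> 0 and m -> 1."""
    return math.log2(p / (1 - p)) / math.log2(m / (1 - m))

def share(score, lo, hi):
    """Fraction of a rule's total range of winning points earned between confidences lo and hi."""
    return score(hi) - score(lo)

for lo, hi in [(0.6, 0.7), (0.99, 0.999)]:
    print(f"{lo} -> {hi}: Greenberg {share(greenberg_win, lo, hi):.3f}, "
          f"BitBets {share(bitbet_win, lo, hi):.3f}")
```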

If you don’t have a clear preference for low-confidence calibration, I think BitBets is the better method of providing feedback to users. It admits incremental difficulty, by changing the cap on the number of bits or by switching from the discrete to the continuous version, so it is better suited to a cumulative calibration curriculum. Arguably the difficulty of Greenberg scoring can be adjusted with the maximum allowed confidence, although this is a relatively crude way of setting the difficulty.

Appendix: BitBets Derivations

We first wish to show:

The expected payoff of a marginal bet will be positive iff the forecaster’s subjective odds of winning are more favourable than the betting odds.

Suppose the forecaster assigns a subjective probability $p$ to their prediction being correct. Then their probability that the prediction is incorrect is $1 - p$, and their odds of being correct are

$$o(p) = \frac{p}{1 - p}$$

If a forecaster is offered a bet with payoff $w > 0$ if the prediction is correct and $-\ell$ (with $\ell > 0$) if the prediction is incorrect, then the betting odds are $\ell : w$. If $p$ is the forecaster’s subjective probability that the prediction is correct, then the expected payoff of taking the bet will be positive iff:

$$p w - (1 - p)\ell > 0 \iff \frac{p}{1 - p} > \frac{\ell}{w}$$

That is, iff the forecaster’s subjective odds of winning are more favourable than the betting odds. Thus a rational forecaster will accept every bet whose odds are below her own odds, and be indifferent to bets whose odds equal hers. □

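We now show that the BitBets scoring rule is equivalent to offering a series of bets with odds of one against ascending powers of two. Write $o(p) = \frac{p}{1-p}$ for the forecaster’s odds and, ignoring the cap, let $n = \lceil \log_2 o(p) \rceil$. We have that

$$n - 1 < \log_2 o(p) \le n$$

And so

$$2^{n-1} < o(p) \le 2^n$$

Thus the powers-of-two bets which the forecaster will accept, because their odds are below her own odds, are $2^0, 2^1, \ldots, 2^{n-1}$ (with at best indifference to $2^n$). Summing the payoffs of these bets yields

$$\sum_{i=0}^{n-1} 1 = n$$

for a correct prediction and

$$-\sum_{i=0}^{n-1} 2^i = -\left(2^n - 1\right)$$

for an incorrect prediction. □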

Proving that the continuous version of BitBets is proper is left as an exercise for the reader.

This has beautiful elements.

I’m also interested in using scoring rules for actual tournaments, so some thoughts on that:

This scoring rule incentivizes people to predict on questions for which their credence is closer to the extremes, rather than on questions where their credence is closer to even.

The rule is in some ways analogous to an automatic market maker which resets for each participant, which is an interesting idea. You could use a set-up such as this to elicit probabilities from forecasters, and give them points/money in the process.

You could start your bits somewhere other than at 50⁄50 (this would be equivalent to starting your automatic market maker somewhere else).

Thanks for the insightful comments.

One other thought I’ve had in this area is “auctioning” predictions, so that whoever is willing to assign the most bits of confidence to their prediction (the highest “bitter”) gets exclusive payoffs. I’m not sure what this would be useful for though. Maybe you award a contract for some really important task to whoever is most confident they will be able to fulfill the contract.

The auctioning scheme might not end up being proper, though.

It just occurred to me that you don’t actually need to convert the forecaster’s odds to bits. You can just take the ceiling of the odds themselves:

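$$O(p) = \left\lceil \frac{p}{1 - p} \right\rceil, \qquad S(p, c) = \begin{cases} O(p) & \text{if } c = 1 \\ -\frac{O(p)\left(O(p) + 1\right)}{2} & \text{if } c = 0 \end{cases}$$

This is more useful for calibrating in the low-confidence range.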

Additional note: BitBets is a proper scoring rule, but not strictly proper. If you report odds rounded up to the next power of two, you will achieve the same score in expectation.