EA Market Testing

Authors: Josh Lewis, David Reinstein, and Luke Freeman

Acknowledgements: Thanks to peter_wildeford, jswinchell and others for pre-publication comments

Introduction

How can we get more people to give to effective charities? How can we identify those with the greatest potential to do good? How can we encourage people to take on more impactful careers? How do we spread ideas such as longtermism and a concern for animal welfare? More broadly, can we identify the most effective, scalable strategies for marketing EA and EA-adjacent ideas and actions? We plan to conduct systematic testing and data collection aimed towards answering these questions and we want your feedback and ideas to make this effort as useful to the EA movement as possible.

Who are we?

We are the newly formed “EA Market Testing Team” – an informal group of professional marketers, business academics, economists, and representatives of EA organizations.

A non-exhaustive list of some of the people involved:

Joshua Lewis (Assistant Professor of Marketing at New York University)

David Reinstein (Senior Economist at Rethink Priorities, formerly a Senior Lecturer in Economics at the University of Exeter)

Luke Freeman (Executive Director at Giving What We Can)

Johnstuart Winchell (Senior Account Manager at Google) [1]

Noah Castelo (Assistant Professor of Marketing at the University of Alberta)

Dillon Bowen (PhD Student at the University of Pennsylvania)

Jack Lewars (Executive Director at One for the World)

Chloë Cudaback (Director of Communications at One for the World)

Neela Saldanha (Board Member at The Life You Can Save)

Bilal Siddiqi (Strategic Advisor at The Life You Can Save)

Our objective

We aim to identify the most effective, scalable strategies for marketing EA and, crucially, to share our results, data, tools, and methodologies with the larger EA community. We will publish our methods, findings, and data in both informal and academic settings.

Outreach to the EA forum: Seeking your input

We want to know any questions you have about marketing EA that our research might be able to answer, any ideas you have about what to test or how to test it, any risks you think we should bear in mind, and any other feedback that you think would be useful for us. You can either fill in our feedback form HERE or leave a Forum comment below. More specific prompts are in the feedback form and pasted below as a Forum comment.

Background

Broad Testing Approach

We will primarily run experiments with effective-altruism-aligned organizations. We plan to test and optimize over a key set of marketing and messaging themes. We will evaluate these primarily through behavioral metrics; in particular, how many people donate and/or pledge to effective causes, how many potential effective altruists join aligned organizations’ mailing lists, and how much time people spend consuming EA-related content.

We expect that behavioral measures (e.g., actual donation choices) will be more informative than hypothetical ones (e.g., responses to questions about ‘what you would donate in a particular imagined scenario’).[2] We expect that using naturally occurring populations will lead to more relevant findings than using convenience samples of undergraduates or professional survey participants who are aware that they are doing a research study.

We will test strategies using:

Advertising campaigns on Facebook, YouTube, LinkedIn, Google Ads, etc.

The content and presentation of EA organizations’ websites[3]

Emails to mailing lists

Surveys and focus groups

We focus on natural settings (the actual promotions, advertisements, and web pages used by our partners, targeting large audiences), as our main goal is to identify which specific strategies work in the most relevant contexts. Ideally, EA orgs could directly apply our results to run cost-effective campaigns that engage new audiences and achieve sustainable growth.

Our second goal is to draw relevant generalizable conclusions about approaches to marketing EA. We will consider the robustness of particular approaches across contexts and particular implementations.

Approach to Working with EA Organizations

When we run tests with EA organizations, we will not charge for the time we spend on this project, nor for giving advice or support.[4] Since running such tests (i) helps us understand how to market EA and (ii) may generate data that will contribute to academic publications, we have strong incentives to support this. Our combined experience in experimental design, empirical and statistical methods, advertising, and academic behavioral science will enable us to improve current practice and enhance insight through:

Suggesting targeting and messaging strategies based on our prior experience in marketing and familiarity with relevant literature. (E.g., we might suggest leveraging ‘social proof’ by placing greater emphasis on community in a website heading, saying “join a community of effective givers” instead of “give effectively.”)

Increasing the rigor and power of experiments and trials through, e.g.:

Performing more detailed and sophisticated statistical analyses than those provided by software such as Google Optimize and Facebook Ads Manager

Increasing the power of standard (A/B and lift) testing with more precise block-randomized or ‘stratified’ designs, as well as sequential/adaptive designs with optimal ‘reinforcement learning’ while allowing statistical inference[5]

Mapping the robustness of particular strategies to distinct frames and language variations using techniques such as ‘stimulus sampling’

Distinguishing meaningful results from statistical flukes and confounds; identifying and mitigating interpretation issues that arise from platform idiosyncrasies such as algorithmic targeting, etc.

Designing experiments to maximize their diagnosticity[6]

Considering heterogeneity and personalized messaging and evaluating the extent to which results generalize across audiences and platforms (e.g., someone Googling “what charities are most effective?” might be very different from someone getting a targeted ad on Facebook based on their similarity with existing website traffic).

‘Market profiling’ to find both under-targeted ‘lookalike audiences’ and (casting the net more widely) to find individuals and groups with characteristics that would make them amenable to effective giving and EA ideas
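To illustrate what a block-randomized (‘stratified’) assignment might look like, here is a minimal Python sketch. It assumes that each unit (for example, a website visitor or ad recipient) can be labeled with a stratum, such as device type or country, before assignment; the function name `block_randomize` and the example strata are hypothetical, not part of any partner’s or platform’s tooling. Within each stratum, units are shuffled and dealt round-robin across arms, so arm sizes within a stratum differ by at most one.

```python
import random
from collections import defaultdict


def block_randomize(units, stratum_of, arms=("A", "B"), seed=0):
    """Assign units to arms so that arm counts are balanced within each stratum.

    units: iterable of hashable unit IDs (hypothetical, e.g. visitor IDs)
    stratum_of: function mapping a unit to its stratum label (an assumption)
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for unit in units:
        by_stratum[stratum_of(unit)].append(unit)

    assignment = {}
    for members in by_stratum.values():
        rng.shuffle(members)
        # Random starting arm, so which arm receives any "extra" unit is random.
        offset = rng.randrange(len(arms))
        for i, unit in enumerate(members):
            assignment[unit] = arms[(i + offset) % len(arms)]
    return assignment


def device(visitor):
    """Hypothetical stratum label: even IDs are mobile, odd IDs desktop."""
    return "mobile" if visitor % 2 == 0 else "desktop"


# Hypothetical example: 10 visitors, stratified by device type.
visitors = list(range(10))
assignment = block_randomize(visitors, device)
```

Balancing within strata removes chance imbalances on the stratifying variable, which can improve precision relative to simple randomization when that variable predicts the outcome.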

Prioritizing Research Questions

What concrete research questions should we prioritize? We are open-minded; we want to learn how to promote EA and each of its cause areas as “effectively” as possible, and we will test whatever is most useful for achieving this end.

We will sometimes address very specific marketing problems that an EA org faces (e.g., “which of these taglines works best on our webpage?” or “what is the most efficient platform on which to raise awareness of our top charities?”). However, we also aim to design tests that inform which broad messaging strategies are most effective in a given setting, e.g., “should we use emotional imagery?” or “should we express EA as an opportunity to do good or an obligation to do good?” We list some more specific research questions below.

If you have feedback on any of them (e.g., you think one is particularly important to test or should be deprioritized, you have an insight about it we might find helpful, or you know of evidence we may have overlooked), please mention this in a Forum comment or in the feedback form (LINKED). In fact, we encourage you to fill out this form before reading further, so that you share your original thoughts and ideas before being influenced by our examples and suggestions below.

Messaging Strategy: Example Research Questions

When and how should we emphasize, cite, and use...

Support from sources of authority such as news organizations, respected public figures, Nobel prize winners, etc., if at all? Are these more effective in some contexts than in others?

For example, we might find that citing such support (e.g., testimonials) is useful for persuading people to target their donations effectively, but not for persuading them to take a pledge to donate more (or vice-versa). (This would argue for GiveWell using these approaches but not Giving What We Can, or vice-versa).

Social esteem or status benefits of, e.g., being on a list of pledgers (relevant for GWWC) or working in a high-impact job (relevant for 80,000 Hours)?

Social proof or community?

For example, “join a growing community of people donating to our recommended charities” vs. “donate to our recommended charities”

Effectiveness, e.g., estimates of ‘how much more impact’ effective altruist careers or donations have over typical alternatives?

Personal benefits of engaging with EA material, e.g., easily tracking how much you are giving, or finding a fulfilling job?

A particular cause area?

We might find that emphasizing global health is useful for persuading people to start donating money (as inequality and personal privilege are a compelling reason to donate to this cause), whereas having several distinct messages, each emphasizing a different cause area, may be more successful on platforms that can dynamically target different audiences.

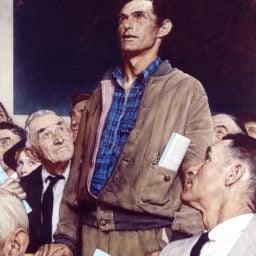

Emotional messages or imagery?

These might be good for generating one-off donations but not for long-term commitments like pledges or career trajectories.

Images below: from www.givingwhatwecan.org, highimpactathletes.org, and thelifeyoucansave.org, respectively

Targeting and market-profiling: Example (meta-) Research Questions

Which groups (perhaps under-targeted or under-represented groups in EA) do you suspect might be particularly amenable to EA-related ideas and to taking EA-favored actions; or at least particularly worth exploring? What particular contexts and environments might be worth testing and exploring?

When should we use public lists, e.g., of political donors, perhaps donors to politicians interested in relevant cause areas?

When and how should we target based on particular types of online activity (watching YouTube videos, participation on relevant Reddit threads, reading relevant news articles, visiting ethical career sites, visiting Charity Navigator, searching relevant terms, etc.)?

How can we increase diversity in the effective altruism community (along various dimensions)? [7]

Risks and limitations of our work

Reproducibility and social science. The replication crisis in the social sciences limits the insight we can glean from past work. Mitigating this: all the academics involved understand the severity of this crisis, have some expertise in distinguishing replicable from non-replicable research, and are committed to doing so.[8] We are committed to practices such as pre-registration to limit researcher degrees of freedom, to using appropriate corrections and adjustments when testing multiple hypotheses in a family (considering ‘familywise error’), and to fostering transparent analysis (through dynamic documents) and enabling replications. Also mitigating: we will undertake new work, our own large-scale experiments and trials on public platforms, working with practitioners to directly test the applicability and relevance of previous academic findings.
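As one concrete example of a familywise-error correction, the Holm-Bonferroni step-down procedure takes only a few lines. This is a minimal sketch: Holm is shown only as one common option among several, the function name `holm_adjust` is our own, and the p-values in the example are hypothetical.

```python
def holm_adjust(p_values):
    """Return Holm-Bonferroni adjusted p-values (controls the familywise error rate).

    Reject hypothesis i at level alpha if adjusted[i] <= alpha.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the (rank + 1)-th smallest p-value by (m - rank), cap at 1,
        # and carry the running maximum so adjusted values never decrease.
        running_max = max(running_max, min(1.0, (m - rank) * p_values[i]))
        adjusted[i] = running_max
    return adjusted


# Hypothetical p-values: three message variants, each compared with a control.
adjusted = holm_adjust([0.01, 0.04, 0.03])
```

In this example all three raw p-values fall below 0.05, but after adjustment only the first variant (adjusted p of about 0.03) remains significant at the 5% level; the other two rise to about 0.06.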

Limits to generalizability: We may end up over-generalizing and giving bad advice. Something that works on one platform may not work on another platform due to idiosyncrasies in the audience, the targeting algorithms, the space constraints, etc. Something that works for one organization may not be transferable to other organizations with different target audiences or different aims. Mitigating: We aim to test each treatment with multiple implementations (see ‘stimulus sampling’) across multiple contexts. We will look for experimental results that are robust across distinct settings before basing broader recommendations on these.

Marketing is an art and not a science? Following on from the previous point, it may be the case that this ‘space’ is too vast and complicated to explore in scientific ways, and general principles are few and far between. Perhaps ‘nuanced appreciation of individual contexts’ is much more useful than systematic experiments and trials. Nonetheless, we believe it is worth putting some resources into fair-minded exploration to consider the extent to which this is the case. We recognize that it is important to consider interaction effects and to consider context and external generalizability. In the extreme case that ‘there are few or no generalizable principles’, at least we will have helped to improve testing and marketing in important specific contexts.

Downsides to broadening EA: There may be downsides (as well as upsides) to making EA a broader movement, as argued on this forum and elsewhere. To the extent that our work focuses on

encouraging EA-aligned behaviors, particularly effective charitable giving, rather than on bringing people into the intellectual debates at the core of EA, and...

to the extent that we are profiling people to, e.g., increase participation on the EA Forum and in EA groups,

... this need not imply watering down the movement. In fact, our profiling work will also help us better understand what sorts of people are likely to be deeply committed to hardcore EA ideas.

The possibility of misrepresenting EA, or negatively affecting public perceptions of it, through our marketing and outreach activities or public responses to these. As researchers and members of the EA community, we are committed to a scientific mindset, openness (and ‘Open Science’), and upholding integrity and accurate communications (as well as the other key principles proposed by Will MacAskill). We are particularly aware of the missteps and lapses of Intentional Insights, which provides an important cautionary example. We will take careful steps to uphold generally agreed EA community norms and values. This includes:

Obtaining the explicit consent of any organizations before linking their name to our work or promotions,

Representing EA ideas as faithfully as possible, and where we are unsure, consulting experts and the community (or otherwise making it clear that the ideas we present are not themselves connected to EA), and

Avoiding practices which mislead the public (through techniques such as ‘astroturfing’).

Conclusion

To reiterate: our mission is to identify the most effective, scalable strategies for marketing EA and EA-aligned activities using rigorous testing approaches (as well as surveys, profiling and meta-analysis). Your ideas about ‘what to test’ and ‘how to test it’, as well as feedback on our current plans, will be immensely valuable.

We want your opinions, impressions, and experience. We have a few questions with prompts for open-ended answers. We do not expect you to answer all of the questions. But please do enter any relevant thoughts, opinions, and ideas. You can do so anonymously or leave your information if you would like us to contact you and/or acknowledge your ideas.

In answering these questions, please let us know your ‘epistemic status’ – do you have specific experience and knowledge informing your answers?[9]

- ↩︎

See also JS’s opinion post “Digital marketing is under-utilized in EA”, which was important in bringing this initiative together.

- ↩︎

Other measures fall between these extremes, e.g., low-cost choices such as ‘clicks on an ad’, and self-reported previous behavior (‘recall’ measures).

- ↩︎

For brevity, we refer to a broad range of organizations as ‘EA orgs’, including some that do not explicitly define themselves as EA (but are EA-adjacent).

- ↩︎

David Reinstein may be involved in message testing projects in his role at Rethink Priorities that are not affiliated with the EA Market Testing team; RP may charge organizations for this work. Separately, David’s time on the EAMT team (and other work) is funded in part by a grant through Longview Philanthropy.

- ↩︎

I.e., confidence/credible intervals over the differences in impact between approaches.
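For a simple two-arm test with a binary outcome (e.g., whether a visitor pledges), one such interval is the normal-approximation (Wald) confidence interval for the difference in conversion rates. This is a minimal sketch: the function name and counts are hypothetical, and with small samples or rates near zero a better-behaved interval (e.g., Newcombe’s) or a Bayesian credible interval may be preferable.

```python
import math


def diff_in_proportions_ci(conv_a, n_a, conv_b, n_b, z=1.96):
    """Normal-approximation (Wald) CI for p_B - p_A; z = 1.96 gives roughly 95%."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Standard error of the difference between two independent proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff - z * se, diff + z * se


# Hypothetical counts: 50 of 1,000 convert under A, 80 of 1,000 under B.
low, high = diff_in_proportions_ci(50, 1000, 80, 1000)
```

Here the estimated difference is 3 percentage points, with an interval of roughly 0.8 to 5.2 percentage points.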

- ↩︎

E.g., designs to facilitate testing multiple hypotheses independently, ensuring that experimental variants only differ in ways that isolate the hypothesis of interest

- ↩︎

To achieve this, we might, e.g., consider dynamically displaying different messages to different audiences and citing support from people of varying backgrounds. We are also curious which dimensions of ‘diversification’, e.g., age, gender, culture, ideology, might be particularly fruitful for EA, and eager to hear your opinions.

- ↩︎

For example, David Reinstein is a BITSS ‘Catalyst’.

- ↩︎

If we get many responses to this survey we may follow up with a closed-ended ‘multiple-choice’ type survey.


Awesome! It is really good to finally see something like this happening! Luke and I have often talked about the need for it.

These are some quick, messy and rambling thoughts:

Next steps

In terms of the next steps, if I was in your shoes I’d look to do a single small easy project next as a test run.

I’d establish goals, resources/collaborative capacity (e.g., what do we collectively want to achieve in x time, and what do we have to get there). Then select someone to lead the project and who will support them and how and go from there. Also, get everyone on some project management platform like Asana.

[Note that I am not saying this is what you should do, just giving you my perspective based on what I think helped with keeping READI going. I am not a great project manager!]

Once everything is tested etc I’d do some research ideation and prioritisation with partner orgs. Once done you could then start tackling these research questions in order of priority.

I’d probably favour focusing on big and general issues (e.g., what is the best messaging for x in context y) rather than the needs of specific organisations (e.g., what should org do here on their website). Context shifting can be very demanding/inefficient and the advisory work might not generalise as much.

Risks, pitfalls, precautions

Related to the above, I actually think that the big challenge with a project like this is sustaining the coordination and collaboration.

I’d focus on keeping communication costs as low as possible. Make sure that you understand the needs of the key people in the collaboration and ensure that their cost-benefit ratios can sustain their involvement e.g., if people need publications/conversion rate improvements to justify their involvement then make sure that the projects are set up so that they will deliver those outcomes. Lots of one-to-many communication and not too many calls to reduce communication costs.

Research ideas

I have some ideas for the research questions/approaches (these are copied over from other places):

What if you got a range of different EA orgs who are promoting the same behaviour (e.g., donation) and then implemented a series of interventions (e.g., a change in choice architecture) across their websites/campaigns (maybe on just a portion of traffic)? Then use A/B testing to see what worked.

A research replication/expansion project (MANEA Labs? :P) where teams of researchers test relevant lab findings in the field with EA orgs. This database could offer some initial ideas to prioritise. Related to that, here is a post about this lab research, which suggests that giving donors more choice potentially reduces their donation rates. It would be interesting to test this in the field on an EA charity aggregator like TLYCS.

Potentially, using the EA survey, you could explore how different demographics, personality types and identities (e.g., social justice activist/climate change activist) interact with different moral views or arguments for key EA behaviours such as giving to effective charities/caring about the longterm etc. Could guide targeting.

Other comments

Unfortunately, I have no time to help at the moment so all I can offer are ideas/feedback for now.

More generally, I suspect that there will be some good opportunities to leverage the EA behaviour science community for some of this work (as I am sure you already know), particularly if you can connect good data/research opportunities with upcoming academics keen for publications. I’ll mention this initiative in the next edition.

I agree, and thanks for mentioning this. Perhaps a major part of our task, to make this work, is to come up with good systems and easy frameworks and ground rules for facilitating and enabling these cooperations. E.g.,

How can researchers/academics sign up and indicate their interests and suggestions? How is this information shared?

What must the researchers provide and promise (and commit to sharing with the community)?

How do the partner organizations specify their interests and the areas they are willing to test and experiment with?

What must the partner organisations agree to do and share?

We provide some templates, generally-accepted protocols, tools (both statistical, ‘standard language’ templates, and IT/web design tools), and possible sources of funding (and coordination) for advertisements

Maybe we also organize some academic sources of value and credibility, like a conference

Agree! However, I’d personally avoid putting in much effort into any of that until there is clear evidence that enough researchers will get involved if you provide that.

This could be very useful. Obviously the EA Survey is a particular slice, and probably not the group we are going for when we think of outreach. Patterns within this group (EA survey takers) may not correspond to the patterns in the relevant populations. Nonetheless, it is a start, and certainly a dataset I have a good handle on.

But I think we should also reach out and spend some resources to survey broader (non-EA or only adjacent-to-adjacent, or relevant representative) groups

I especially like the idea of “personality types and identities (e.g., social justice activist/climate change activist) interact with different moral views or arguments for key EA behaviours such as giving to effective charities/caring about the longterm etc.”

Recent work on de-biasing and awareness gets at this a bit, at least breaking things up by political affiliation. It might be worth our digging more closely into the Fehr, Mollerstrom, and Perez-T paper and data. It seems like a very powerful experiment tied to a rich dataset.

Yeah, I think that I forgot to add the other part of this idea, which is that we would compare the EA survey against a sample from the general public so that we have both EA and non-EA responses to questions of interest. This might give a sense of where EAs and non-EAs differ and therefore how best to message them. I think that all types of segmentation, like political affiliation, would be very useful for EA organisational marketing, but that’s probably worth validating with those orgs.

Absolutely. Your LinkedIn post outlines the need for some robust ‘real-world’ testing in this area, to supplement the small-stakes Prolific and MTurk samples that the authors use (which I need to read more carefully).

The ‘charity aggregator/charity choice platform’ is one particular relevant environment worth testing on, as distinct from the ‘specific charitable appeal’.

As to the ‘give the donors choice’ in particular, I envision some potentially countervailing things (pros/cons) of giving choice, some of which may be more relevant in a context involving ‘people seriously considering donating’ rather than ‘people asked to do an Mturk/Prolific study.’

Quick thoughts on this...

Cons of enabling choice (some examples): Choice paralysis, raising doubts, repugnance of ‘Sophie’s choice’, departure from ‘identifiable victim’ frame (although I read something recently suggesting that the evidence for the IVE may not be as strong as claimed!), calculating/comparing mindset may push out the empathetic/charitable mindset

Pros: Standard ‘allows better matching of consumer desires and options’, gives stronger sense of tangibility and agency (‘I gave to person A for schoolbooks’) reducing the sense of a futile ‘drop in the bucket’, seems more considerate and respectful of donor, appealing to their ‘wise judgment’

Thanks—this is very useful. I’ll add it to my post so that more people read!

Where did you read about the research challenging the IVE btw? I’d be interested to read that.

I actually considered this to be one of the more well-replicated and well-evidenced findings. The 2016 meta-analysis HERE supported it. However, I’ve recently been exposed to something (or some discussion) that seemed pretty credible, suggesting that this should no longer be taken as a robust result.

It may have come from metascience2021 (the COS etc. conference); if I dig it up again, I’ll post it and maybe write a Twitter thread on it.

Ah, just getting this turn of phrase—“Many Labs Project + EA”. That would be very nice to engineer and organize.

I’m glad to see that this is coming along; we’ve discussed it before. I’m continuing to update and build “Increasing effective charitable giving: The puzzle, what we know, what we need to know next”, which works to outline the theories and evidence on the ‘barriers to effective giving’ and promising ‘tools’ for surmounting these. In doing this I hope to

add and incorporate the parts of other recent reviews that are most relevant to effective giving, meta-analysis, and curation work (including yours)

work to find, build, and improve systems for assessing the state of the evidence on each barrier/motivator

summarize these into key ‘action points’ for our market testing project (in our gitbook/wiki)

But this part of the project is a bit distinct, requiring particular skills and focus. It might be worth trying to organize a way to divide things up so that this ‘literature synthesis and meta-analysis’ is ‘owned’ by a single member of our team. If we are ‘all doing everything’ context-switching can really be a drag.

Yeah, in hindsight, I think that offers a better initial source for picking ideas to prioritise than the database, which is just individual papers. I like the ideas you mentioned about the website and I think that there is definitely a need for more work on it, ideally with a single product owner who can improve the presentation and user experience and so on. I think it has a lot of promise!

This is very much what we are focused on doing; hoping to do soon. Donations and pledges are the obvious common denominator across many of our partner orgs. Being able to test a comparable change (in choice architecture, or messaging/framing, etc.) will be particularly helpful in allowing us to measure the robustness of any effect across contexts and platforms.

There is some tradeoff between ‘run the most statistically powerful test of a single pairing, A vs. B’ and ‘run tests across a set of messages A through Z using an algorithm (e.g., Kasy’s ‘exploration sampling’) designed to yield the highest-value state of learning’ (cf. ‘reinforcement learning’). We are planning to do some of each, compromising between these approaches.
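To make the ‘exploration sampling’ idea concrete, here is a minimal Python sketch of the rule as described by Kasy and Sautmann, assuming binary outcomes (e.g., donated or not) and independent Beta(1, 1) priors for each message. It estimates each arm’s posterior probability p_k of being best by Monte Carlo (as in Thompson sampling), then assigns the next wave in proportions q_k proportional to p_k(1 - p_k). The function name, priors, fallback rule, and counts are hypothetical; a real deployment would also need batching, stopping rules, and appropriate inference.

```python
import random


def exploration_sampling_shares(successes, failures, draws=10_000, seed=0):
    """Approximate exploration-sampling assignment shares for K arms.

    Each arm k gets a Beta(1 + successes[k], 1 + failures[k]) posterior.
    p[k] estimates the posterior probability that arm k has the highest rate.
    Returned shares are proportional to p[k] * (1 - p[k]).
    """
    rng = random.Random(seed)
    k = len(successes)
    wins = [0] * k
    for _ in range(draws):
        # One posterior draw per arm; count which arm comes out on top.
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        wins[samples.index(max(samples))] += 1
    p = [w / draws for w in wins]
    weights = [pk * (1 - pk) for pk in p]
    total = sum(weights)
    if total == 0:
        # One arm won every draw; fall back to equal shares (an arbitrary
        # choice for this sketch, not part of the published method).
        return [1.0 / k] * k
    return [w / total for w in weights]


# Hypothetical results so far: donations out of 100 impressions for three messages.
shares = exploration_sampling_shares([20, 25, 22], [80, 75, 78])
```

Unlike pure Thompson sampling, which assigns traffic in proportion to p_k and so sends most of it to the current leader, this rule never gives any single arm more than half of the traffic, keeping some on close competitors so that the final comparison between the top messages is more informative.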

Yep! This makes me think of Christian and Griffiths’ ‘Algorithms to Live By’ on how economies of scale work in reverse when something becomes ‘a sock-matching problem’, or ‘everyone has to shake hands with everyone else’. I hope our use of the Gitbook wiki (and maybe I’ll embed some videos) helps solve this problem. We’re also hoping to have efficient meetings with the whole group on particular themes (but not ‘everyone has to weigh in on everyone else’s position in real time’, I guess).

‘Think about the needs and medium-term wins/outcomes for the collaborators’—very good idea.

We actually had some discussion of organizational issues which we cut out of the post (as it’s ‘our problem to sort out’). But this did include things like the risk of “‘too many stakeholders and goals leading to death by committee’, and ‘spreading ourselves too thin and being overambitious, with too many targets’”

Thanks! I hadn’t thought about that!

By “once everything is tested etc.”, I am guessing that you mean ‘once we have a good understanding and track record of doing, and gaining value from, each type of advertising, split-testing, etc.’?

We are working to identify what the key research questions are, considering

the importance for EA organisations and fundraisers of these ‘tools’ (how much could these be used)

the existing evidence, both on general psychological principles and specifically in the charitable donation (and effective action) contexts

the extent to which we can practically conduct trials and learn from these (feasibility and applicability of interventions, the observability of relevant outcomes, generalizability of evidence across platforms and contexts)

This is a good point, and I mostly agree, but of course we need to strike some balance. I suspect some things that seem like ‘very specific needs and practices for organization A’ might have greater applicability and relevance across organizations, and specific observations may lead to themes to study further. I’m also concerned about asserting and claiming to “test general principles and theories of behavior” that prove to be extremely context-dependent. This is a major challenge of social science (and marketing), I think.

Agreed. I think we are still in the process of coming together and agreeing on which areas we can collaborate on and what the key priorities are. I suspect that it will be helpful to do some work and some small trials before meeting to decide on these overarching goals, to have a better sense of things. (Of course we have outlined these overarching goals in general, more or less as discussed above, but we need to fill in the specifics and the most promising angles and avenues.) I’m not sure we need one single ‘leader of everything’ but it will be good to have someone to ‘take ownership of each specific project’ (the whole ‘assigned to, reports to’ thing) as well as overall coordination.

We are currently using a combination of Slack (conversation), Gitbook/Github (documentation and organized discussion of plans and information), and Airtable (structured information as data). I think you are right that we need to use these tools in specific ways that enable project management, keeping track of where we are on each project, who needs to do what, etc.

I’d still probably recommend Asana or a similar task manager if you can get everyone to try it. Michael Noetel introduced me to it and he uses it very well with several research teams, using concepts like ‘sprints’ and various other approaches inspired by software design.

Thanks for the comments Peter, sorry I’m slow in responding. This is very helpful!

Agreed!

[Aside: I am getting results from some ongoing related projects with relevant non-EA orgs, which I hope to write up soon, and I think Josh does/has/is as well. I believe Luke is also trialing some things atm. ]

We are indeed planning some small trials very soon, as you suggest, and I hope we can write these up and share them soon as well. It will be important to share what we learn not only from the actual ‘outcomes of the trials’ but also from the processes, considerations, etc.

Ask the EA Market Testing team’s chatbot your questions on our Gitbook page (http://bit.ly/eamtt). Go to the search bar, click ‘lens’ and ask away.

(It’s working pretty well, but may need some more configuration.)

Update: We received 10 responses to the survey. Thanks very much to all who responded. I intend to report in on this soon.

Delayed … will get to this soon, sorry.

Part II of EA Market Testing survey/input (alternative to survey form)

Whom should we target and how do we identify them?

Which groups do you suspect might be particularly amenable to EA-aligned ideas/actions, or worth exploring further? Which individual and group traits and characteristics would be particularly interesting to learn more about? What approaches to targeting might we take?

Risks, pitfalls, precautions

What down-side risks (if any) are you concerned about with our work? Do you have any advice as to what we should be very careful about, what to avoid, etc?

Further comments or suggestions (if any)

Anything you want to mention or suggest that you did not have a chance to get to above?

Part I of EA Market Testing survey/input (alternative to survey form)

This survey contains mainly open-ended questions, some of which may overlap with one another. Please do not feel compelled to complete them all; all fields are optional. (See the EA Forum post “EA Market Testing”.) If we get many responses to this survey we may follow up with a closed-ended ‘multiple-choice’ type survey.

What questions do you think we should answer with our research?

Do you have any ‘burning questions’ or ideas? What do you want to learn?

Do you have any specific ideas for approaches to messaging or targeting people who might be receptive to EA?

What ‘EA messaging’ questions do you think we should answer with our research?

We want to better understand how to communicate EA ideas to new audiences and encourage effective altruistic actions and choices. There are a range of messages and approaches we might take, and a range of issues and questions involved in doing so. What questions should we answer? What approaches seem promising?

…