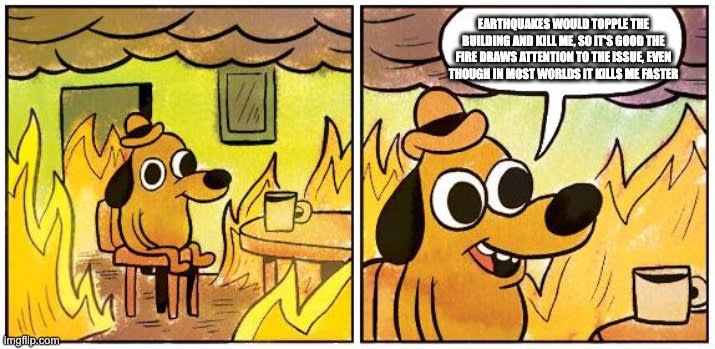

So, the tradeoff is something like 55% death spread over a five-year period vs no death for five years, for an eventual reward of reducing total chance of death (over a 10y period or whatever) from 89% to 82%.

Oh we disagree much more straightforwardly. I think the 89% should be going up, not down. That seems by far the most important disagreement.

(I thought you were saying that person-affecting views means that even if the 89% goes up that could still be a good trade.)

I still don’t know why you expect the 89% to go down instead of up given public advocacy. (And in particular I don’t see why optimism vs pessimism has anything to do with it.) My claim is that it should go up.

I was thinking of something like the scenario you describe as “Variant 2: In addition to this widespread pause, there is a tightly controlled and monitored government project aiming to build safe AGI.” It doesn’t necessarily have to be government-led, but maybe the government has talked to evals experts and demands a tight structure where large expenditures of compute always have to be approved by a specific body of safety evals experts.

But why do evals matter? What’s an example story where the evals prevent Molochian forces from leading to us not being in control? I’m just not seeing how this scenario intervenes on your threat model to make it not happen.

(It does introduce government bureaucracy, which all else equal reduces the number of actors, but there’s no reason to focus on safety evals if the theory of change is “introduce lots of bureaucracy to reduce number of actors”.)

Maybe I’m wrong: If the people who are closest to DC are optimistic that lawmakers would be willing to take ambitious measures soon enough

This seems like the wrong criterion. The question is whether this strategy is more likely to succeed than others. Your timelines are short enough that no ambitious measure is going to come into place fast enough if you aim to save ~all worlds.

But e.g. ambitious measures in ~5 years seems very doable (which seems like it is around your median, so still in time for half of worlds). We’re already seeing signs of life:

note the existence of the UK Frontier AI Taskforce and the people on it, as well as the intent bill SB 294 in California about “responsible scaling”

You could also ask people in DC; my prediction is they’d say something reasonably similar.

Oops, sorry for the misunderstanding.

Taking your numbers at face value, and assuming that people have on average 40 years of life ahead of them (Google suggests median age is 30 and typical lifespan is 70-80), the pause gives an expected extra 2.75 years of life during the pause (delaying 55% chance of doom by 5 years) while removing an expected extra 2.1 years of life (7% of 30) later on. This looks like a win on current-people-only views, but it does seem sensitive to the numbers.

I’m not super sold on the numbers. Removing the full 55% is effectively assuming that the pause definitely happens and is effective. That neglects the possibility that advocacy succeeds enough to have the negative effects, but still fails to lead to a meaningful pause. I’m not sure how much probability I assign to that scenario but it’s not negligible, and it might be more than I assign to “advocacy succeeds and effective pause happens”.
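To make the sensitivity concrete, here is a rough sketch of the arithmetic above. The 55%, 5 years, 7% and 30 years are the numbers already used in this thread. The `p_effective_pause` parameter is my own illustrative addition: it assumes the cost (the extra 7 points of total risk) is paid whenever advocacy happens, while the benefit only arrives if an effective pause actually follows. That is one simple way to model the worry, not the only one.

```python
def pause_tradeoff(
    p_doom_during_pause=0.55,   # chance of doom that the pause delays (from the thread)
    pause_years=5,              # length of the pause in years (from the thread)
    added_total_risk=0.07,      # 89% vs 82% total risk (from the thread)
    years_left_afterwards=30,   # the "30" in "7% of 30" (from the thread)
    p_effective_pause=1.0,      # ASSUMPTION: chance advocacy yields an effective pause
):
    """Return (expected years gained, expected years lost, net) per person."""
    # Benefit: doom delayed by the pause, only if the pause actually happens.
    gained = p_effective_pause * p_doom_during_pause * pause_years
    # Cost: higher total risk later on, assumed to be paid either way.
    lost = added_total_risk * years_left_afterwards
    return gained, lost, gained - lost


def break_even_p_effective(
    p_doom_during_pause=0.55,
    pause_years=5,
    added_total_risk=0.07,
    years_left_afterwards=30,
):
    """Value of p_effective_pause at which gained equals lost (hypothetical helper)."""
    return (added_total_risk * years_left_afterwards) / (
        p_doom_during_pause * pause_years
    )


if __name__ == "__main__":
    gained, lost, net = pause_tradeoff()
    print(f"face value: gained={gained:.2f}, lost={lost:.2f}, net={net:.2f}")
    print(f"break-even p_effective_pause: {break_even_p_effective():.2f}")
```

Under these assumptions the face-value numbers come out positive for the pause, but the sign flips once the chance of an effective pause drops below roughly three quarters, which is the sense in which the conclusion is sensitive to the numbers.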

I’d say it’s more like “I don’t see why we should believe (1) currently”. It could still be true. Maybe all the other methods really can’t work for some reason I’m not seeing, and that reason is overcome by public advocacy.