I’d add that I think 80K has done an awful lot to communicate “EA isn’t just about earning-to-give” over the years. At some point it surely has to be the case that they’ve done enough. This is part of why I want to distinguish the question “did we play a causal role here?” from questions like “did we foreseeably screw up?” and “should we do things differently going forward?”.

RobBensinger

Yes, there are many indirect ways EA might have had a causal impact here, including by influencing SBF’s ideology, funneling certain kinds of people to FTX, improving SBF’s reputation with funders, etc. Not all of these should necessarily cause EAs to hand-wring or soul-search — sometimes you can do all the right things and still contribute to a rare disaster by sheer chance. But a disaster like this is a good opportunity to double-check whether we’re living up to our own principles in practice, and also to double-check whether our principles and strategies are as beneficial as they sounded on paper.

It directly claims that the investigation was part of an “internal reflection process” and “institutional reform”, and CEA employees have shared documents with me in which the legal investigation was explicitly called out as not being helpful for facilitating a reflection process and institutional reform.

This seems like the headline claim to me. EAs should not be claiming false things in the Washington Post, of all things.

Every aspect of that summary of how MIRI’s strategy has shifted seems misleading or inaccurate to me.

I find myself agreeing with Nora on temporary pauses, and I don’t really understand the model by which a 6-month, or a 2-year, pause helps, unless you think we’re less than 6 months, or 2 years, from doom.

This doesn’t make a lot of sense to me. If we’re 3 years away from doom, I should oppose a 2-year pause because of the risk that (a) it might not work and (b) it will make progress more discontinuous?

In real life, if smarter-than-human AI is coming that soon then we’re almost certainly dead. More discontinuity implies more alignment difficulty, but on three-year timelines we have no prospect of figuring out alignment either way; realistically, it doesn’t matter whether the curve we’re looking at is continuous vs. discontinuous when the absolute amount of calendar time to solve all of alignment for superhuman AI systems is 3 years, starting from today.

I don’t think “figure out how to get a god to do exactly what you want, using the standard current ML toolbox, under extreme time pressure” is a workable path to humanity surviving the transition to AGI. “Governments instituting and enforcing a global multi-decade pause, giving us time to get our ducks in order” does strike me as a workable path to surviving, and it seems fine to marginally increase the intractability of unworkable plans in exchange for marginally increasing the viability of plans that might work.

If a “2-year” pause really only buys you six months, then that’s still six months more time to try to get governments to step in.

If a “2-year” pause buys you zero time in expectation, and doesn’t help establish precedents like “now that at least one pause has occurred, more ambitious pauses are in the Overton window and have some chance of occurring”, then sure, 2-year moratoriums are useless; but I don’t buy that at all.

Daniel Wyrzykowski replies:

The contract is signed for when bad things and disagreements happen, not for when everything is going well. In my opinion “I had no contract and everything was good” is not as good an example as “we didn’t have a contract, had a major disagreement, and everything still worked out” would be.

Even though I hate bureaucracy and admin work and I prefer to skip as much as reasonable to move faster, my default is to have a written agreement, especially if working with a given person/org for the first time. Generally, the weaker party should have the final say on forgoing a contract. This is especially true the more complex and difficult the situation is (e.g., living/travelling together, being in romantic relationships).

I agree with the general view that both signing and not signing have pros and cons and sometimes it’s better to not sign and avoid the overhead.

Elizabeth van Nostrand replies:

I feel like people are talking about written records like they’re a huge headache, but they don’t need to be. When freelancing I often negotiate verbally, then write an email with terms to the client, who can confirm or correct them. I don’t start work until they’ve confirmed acceptance of some set of terms. This has enough legal significance that it lowers my business insurance rates, and takes seconds if people are genuinely on the same page.

What my lawyer parent taught me was that contracts can’t prevent people from screwing you over (which is impossible). At my scale, and probably in most cases described here, the purpose of a contract is to prevent misunderstandings between people of goodwill. And it’s so easy to do notably better than Nonlinear did here.

Linda responds:

This is a good point. I was thinking in terms of legal vs informal, not in terms of written vs verbal.

I agree that having something written down is basically always better. Both for clarity, as you say, and because people’s memories are not perfect. And it has the added bonus that if there is a conflict, you have something to refer back to.

Duncan Sabien replies:

[...]

While I think Linda’s experience is valid, and probably more representative than mine, I want to balance it by pointing out that I deeply, deeply, deeply regret taking a(n explicit, unambiguous, crystal clear) verbal agreement, and not having a signed contract, with an org pretty central to the EA and rationality communities. As a result of having the-kind-of-trust that Linda describes above, I got overtly fucked over to the tune of many thousands of dollars and many months of misery and confusion and alienation, and all of that would’ve been prevented by a simple written paragraph with two signatures at the bottom.

(Such a paragraph would’ve either prevented the agreement from being violated in the first place, or would at least have made the straightforward violation that occurred less of a thing that people could subsequently spin webs of fog and narrativemancy around, to my detriment.)

As for the bit about telling your friends and ruining the reputation of the wrongdoer … this option was largely NOT available to me, for fear-of-reprisal reasons as well as not wanting to fuck up the subsequent situation I found myself in, which was better, but fragile. To this day, I still do not feel like it’s safe to just be fully open and candid about the way I was treated, and how many norms of good conduct and fair dealings were broken in the process. The situation was eventually resolved to my satisfaction, but there were years of suffering in between.

[...]

Cross-posting Linda Linsefors’ take from LessWrong:

I have worked without legal contracts for people in EA I trust, and it has worked well.

Even if all the accusations against Nonlinear are true, I still have pretty high trust for people in EA or LW circles, such that I would probably agree to work with no formal contract again.

The reason I trust people in my ingroup is that if either of us screws over the other person, I expect the victim to tell their friends, which would ruin the reputation of the wrongdoer. For this reason both people have strong incentive to act in good faith. On top of that I’m willing to take some risk to skip the paperwork.

When I was a teenager I worked a bit under legally very sketchy circumstances. They would send me to work in some warehouse for a few days, and draw up the contract for that work afterwards, including me falsifying the date for my signature. This is not something I would have agreed to with a stranger, but the owner of my company was a friend of my parents, and I trusted my parents to slander them appropriately if they screwed me over.

I think my point is that this is not something very uncommon, because doing everything by the book is so much overhead, and sometimes not worth it.

I think being able to leverage reputation-based and/or ingroup-based trust is immensely powerful, and not something we should give up on.

For this reason, I think the most serious sin committed by Nonlinear is their alleged attempt to silence critics.

Update to clarify: This is based on the fact that people have been scared of criticising Nonlinear. Not based on any specific wording of any specific message.

Update: On reflection, I’m not sure if this is the worst part (if all accusations are true). But it’s pretty high on the list.

I don’t think making sure that no EA ever gives paid work to another EA without a formal contract will help much. The most vulnerable people are those new to the movement, who are exactly the people who will not know what the EA norms are anyway. An abusive org can still recruit people without contracts and just tell them this is normal.

I think a better defence mechanism is to track who is trustworthy or not, by making sure information like this comes out. And it’s not like having a formal contract prevents all kinds of abuse.

Update based on responses to this comment: I do think having a written agreement, even just an informal expression of intentions, is almost always strictly superior to not having anything written down. When writing this comment I was thinking in terms of formal contract vs informal agreement, which is not the same as verbal vs written.

I’d be happy to talk with you way more about rationalists’ integrity fastidiousness, since (a) I’d expect this to feel less scary if you have a clearer picture of rats’ norms, and (b) talking about it would give you a chance to talk me out of those norms (which I’d then want to try to transmit to the other rats), and (c) if you ended up liking some of the norms then that might address the problem from the other direction.

In your previous comment you said “it doesn’t seem obviously unethical to me for Nonlinear to try to protect its reputation”, “That’s a huge rationalist no-no, to try to protect a narrative”, and “or to try to affect what another person says about you”. But none of those three things are actually rat norms AFAIK, so it’s possible you’re missing some model that would at least help it feel more predictable what rats will get mad about, even if you still disagree with their priorities.

Also, I’m opposed to cancel culture (as I understand the term). As far as I’m concerned, the worst person in the world deserves friends and happiness, and I’d consider it really creepy if someone said “you’re an EA, so you should stop being friends with Emerson and Kat, never invite them to parties you host or discussion groups you run, etc.” It should be possible to warn people about bad behavior without that level of overreach into people’s personal lives.

(I expect others to disagree with me about some of this, so I don’t want “I’d consider it really creepy if someone did X” to shut down discussion here; feel free to argue to the contrary if you disagree! But I’m guessing that a lot of what’s scary here is the cancel-culture / horns-effect / scapegoating social dynamic, rather than the specifics of “which thing can I get attacked for?”. So I wanted to speak to the general dynamic.)

Emerson approaches me to ask if I can set up the trip. I tell him I really need the vacation day for myself. He says something like “but organizing stuff is fun for you!”.

[...]

She kept insisting that I’m saying that because I’m being silly and worry too much and that buying weed is really easy, everybody does it.

😬 There’s a ton of awful stuff here, but these two parts really jumped out at me. Trying to push past someone’s boundaries by imposing a narrative about the type of person they are (‘but you’re the type of person who loves doing X!’ ‘you’re only saying no because you’re the type of person who worries too much’) is really unsettling behavior.

I’ll flag that this is an old remembered anecdote, and those can be unreliable, and I haven’t heard Emerson or Kat’s version of events. But it updates me, because Chloe seems like a pretty good source and this puzzle piece seems congruent with the other puzzle pieces.

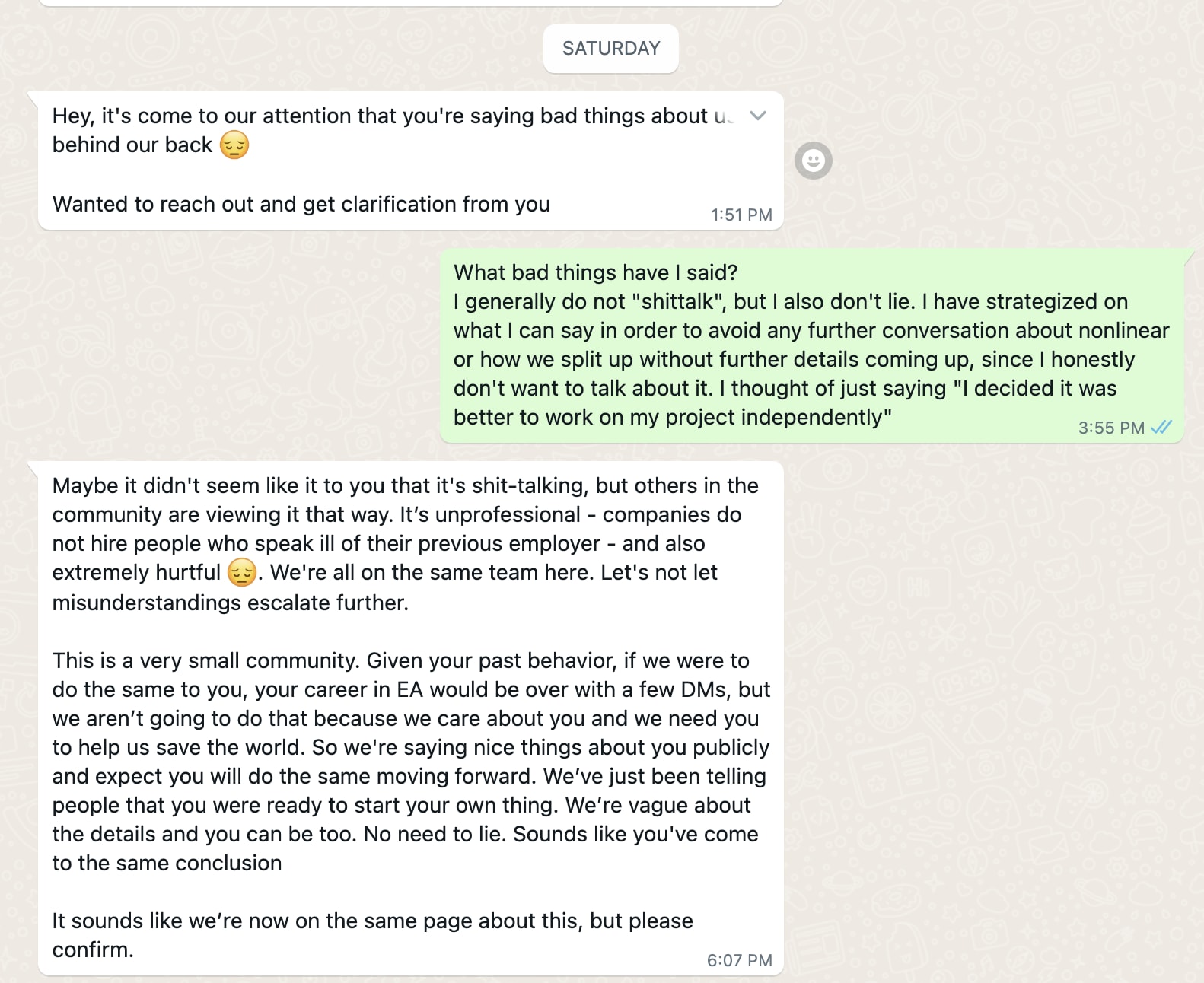

E.g., the vibe here matches something that creeped me out a lot about Kat’s text message to Alice in the OP, which is the apparent attempt to corner/railroad Alice into agreement via a bunch of threats and strongly imposed frames, followed immediately by Kat repeatedly stating as fact that Alice will of course agree with Kat: “[we] expect you will do the same moving forward”, “Sounds like you’ve come to the same conclusion”, “It sounds like we’re now on the same page about this”.

Working and living with Nonlinear had me forget who I was, and lose more self worth than I had ever lost in my life. I wasn’t able to read books anymore, nor keep my focus in meetings for longer than 2 minutes, I couldn’t process my own thoughts or anything that took more than a few minutes of paying attention.

😢 Jesus.

Maybe I’m wrong— I really don’t know, and there have been a lot of “I don’t know” kind of incidents around Nonlinear, which does give me pause— but it doesn’t seem obviously unethical to me for Nonlinear to try to protect its reputation.

I think it’s totally normal and reasonable to care about your reputation, and there are tons of actions someone could take for reputational reasons (e.g., “I’ll wash the dishes so my roommate doesn’t think I’m a slob”, or “I’ll tweet about my latest paper because I’m proud of it and I want people to see what I accomplished”) that are just straightforwardly great.

I don’t think caring about your reputation is an inherently bad or corrupting thing. It can tempt you to do bad things, but lots of healthy and normal goals pose temptation risks (e.g., “I like food, so I’ll overeat” or “I like good TV shows, so I’ll stay up too late binging this one”); you can resist the temptation without stigmatizing the underlying human value.

In this case, I think the bad behavior by Nonlinear also would have been bad if it had nothing to do with “Nonlinear wants to protect its reputation”.

Like, suppose Alice honestly believed that malaria nets are useless for preventing malaria, and Alice was going around Berkeley spreading this (false) information. Kat sends Alice a text message saying, in effect, “I have lots of power over you, and dirt I could share to destroy you if you go against me. I demand that you stop telling others your beliefs about malaria nets, or I’ll leak true information that causes you great harm.”

On the face of it, this is more justifiable than “threatening Alice in order to protect my org’s reputation”. Hypothetical-Kat would be fighting for what’s true, on a topic of broad interest where she doesn’t stand to personally benefit. Yet I claim this would be a terrible text message to send, and a community where this was normalized would be enormously more toxic than the actual EA community is today.

Likewise, suppose Ben was planning to write a terrible, poorly-researched blog post called Malaria Nets Are Useless for Preventing Malaria. Out of pure altruistic compassion for the victims of malaria, and a concern for EA’s epistemics and understanding of reality, Hypothetical-Emerson digs up a law that superficially sounds like it forbids Ben writing the post, and he sends Ben an email threatening to take Ben to court and financially ruin him if he releases the post.

(We can further suppose that Hypothetical-Emerson lies in the email ‘this is a totally open-and-shut case, if this went to trial you would definitely lose’, in a further attempt to intimidate and pressure Ben. Because I’m pretty danged sure that’s what happened in real life; I would be amazed if Actual-Emerson actually believes the things he said about this being an open-and-shut libel case. I’m usually reluctant to accuse people of lying, but that just seems to be what happened here?)

Again, I’d say that this Hypothetical-Emerson (in spite of the “purer” motives) would be doing something thoroughly unethical by sending such an email, and a community where people routinely responded to good-faith factual disagreements with threatening emails, frivolous lawsuits, and lies, would be vastly more toxic and broken than the actual EA community is today.

I’m not sure I’ve imagined a realistic justifying scenario yet, but in my experience it’s very easy to just fail to think of an example even though one exists. (Especially when I’m baking in some assumptions without realizing I’m baking them in.)

I do think the phrase is a bit childish and lacks some rigor

I think the phrase is imprecise, relative to phrases like “prevent human extinction” or “maximize the probability that the reachable universe ends up colonized by happy flourishing civilizations”. But most of those phrases are long-winded, and it often doesn’t matter in conversation exactly which version of “saving the world” you have in mind.

(Though it does matter, if you’re working on existential risk, that people know you’re being relatively literal and serious. A lot of people talk about “saving the planet” when the outcome they’re worried about is, e.g., a 10% loss in current biodiversity, rather than the destruction of all future value in the observable universe.)

If a phrase is useful and tracks reality well, then if it sounds “childish” that’s more a credit to children than a discredit to the phrase.

And I don’t know what “lacks some rigor” means here, unless it’s referring to the imprecision.

Mostly, I like “saves the world” because it owns my weird beliefs about the situation I think we’re in, and states it bluntly so others can easily understand my view and push back against it if they disagree.

Being in a situation where you think your professional network’s actions have a high chance of literally killing every human on the planet in the next 20 years, or of preventing this from happening, is a very unusual and fucked up situation to be in. I could use language that downplays how horrifying and absurd this all is, but that would be deceiving you about what I actually think. I’d rather be open about the belief, so it can actually be talked about.

I’m concerned that our community is both (1) extremely encouraging and tolerant of experimentation and poor, undefined boundaries and (2) very quick to point the finger when any experiment goes wrong.

Yep, I think this is a big problem.

More generally, I think a lot of EAs give lip service to the value of people trying weird new ambitious things, “adopt a hits-based approach”, “if you’re never failing then you’re playing it too safe”, etc.; but then we harshly punish visible failures, especially ones that are the least bit weird. In cases like those, I think the main solution is to be more forgiving of failures, rather than to give up on ambitious projects.

From my perspective, none of this is particularly relevant to what bothers me about Ben’s post and Nonlinear’s response. My biggest concern about Nonlinear is their attempt to pressure people into silence (via lawsuits, bizarre veiled threats, etc.), and “I really wish EAs would experiment more with coercing and threatening each other” is not an example of the kind of experimentalism I’m talking about when I say that EAs should be willing to try and fail at more things (!).

“Keep EA weird” does not entail “have low ethical standards”. Weirdness is not an excuse for genuinely unethical conduct.

I don’t think we get better boundaries by acting like a failure like this is due to character or lack of integrity instead of bad engineering.

I think the failures that seem like the biggest deal to me (Nonlinear threatening people and trying to shut down criticism and frighten people) genuinely are matters of character and lack of integrity, not matters of bad engineering. I agree that not all of the failures in Ben’s OP are necessarily related to any character/integrity issues, and I generally like the lens you’re recommending for most cases; I just don’t think it’s the right lens here.

I was also surprised by this, and I wonder how many people interpreted “It is acceptable for an EA org to break minor laws” as “It is acceptable for an EA org to break laws willy-nilly as long as it feels like the laws are ‘minor’”, rather than interpreting it as “It is acceptable for an EA org to break at least one minor law ever”.

How easy is it to break literally zero laws? There are an awful lot of laws on the books in the US, many of which aren’t enforced.

If someone uses the phrase “saving the world” on any level approaching consistent, run.

I use this phrase a lot, so if you think this phrase is a red flag, well, include me on the list of people who have that flag.

If someone pitches you on something that makes you uncomfortable, but for which you can’t figure out your exact objection—or if their argument seems wrong but you don’t see the precise hole in their logic—it is not abandoning your rationality to listen to your instinct.

Agreed (here, and with most of your other points). Instincts like those can be wrong, but they can also be right. “Rationality” requires taking all of the data into consideration, including illegible hunches and intuitions.

If someone says “the reputational risks to EA of you publishing this outweigh the benefits of exposing x’s bad behavior. if there’s even a 1% chance that AI risk is real, then this could be a tremendously evil thing to do”, nod sagely then publish that they said that.

Agreed!

Yeah, though if I learned “Alice is just not the sort of person to loudly advocate for herself” it wouldn’t update me much about Nonlinear at this point, because (a) I already have a fair amount of probability on that based on the publicly shared information, and (b) my main concerns are about stuff like “is Nonlinear super cutthroat and manipulative?” and “does Nonlinear try to scare people into not criticizing Nonlinear?”.

Those concerns are less directly connected to the vegan-food thing, and might be tricky to empirically distinguish from the hypothesis “Alice and/or Chloe aren’t generally the sort of people to be loud about their preferences” if we focus on the food situation.

(Though I cared about the vegan-food thing in its own right too, and am glad Kat shared more details.)

I’d appreciate it if Nonlinear spent their limited resources on the claims that I think are most shocking and most important, such as the claim that Woods said “your career in EA would be over with a few DMs” to a former employee after the former employee was rumored to have complained about the company.

I agree that this is a way more important incident, but I downvoted this comment because:

I don’t want to discourage Nonlinear from nitpicking smaller claims. A lot of what worries people here is a gestalt impression that Nonlinear is callous and manipulative; if that impression is wrong, it will probably be because of systematic distortions in many claims, and it will probably be hard to un-convince people of the impression without weighing in on lots of the claims, both major and minor.

I expect some correlation between “this concern is easier to properly and fully address” and “this concern is more minor”, so I think it’s normal and to be expected that Nonlinear would start with relatively-minor stuff.

I do think it’s good to state your cruxes, but people’s cruxes will vary some; I’d rather that Nonlinear overshare and try to cover everything, and I don’t want to locally punish them for addressing a serious concern even if it’s not the top concern. “I’d appreciate if Nonlinear spent their limited resources...” makes it sound like you didn’t want Nonlinear to address the veganism thing at all, which I think would have been a mistake.

I’m generally wary of the dynamic “someone makes a criticism of Nonlinear, Nonlinear addresses it in a way that’s at least partly exculpatory, but then a third party steps in to say ‘you shouldn’t have addressed that claim, it’s not the one I care about the most’”. This (a) makes it more likely that Nonlinear will feel pushed into not-correcting-the-record on all sorts of false claims, and (b) makes it more likely that EAs will fail to properly dwell on each data point and update on it (because they can always respond to a refutation of X by saying ‘but what about Y?!’ when the list of criticisms is this danged long).

I also think it’s pretty normal and fine to need a week to properly address big concerns. Maybe you’ve forgotten a bunch of the details and need to fact-check things. Maybe you’re emotionally processing stuff and need another 24h to draft a thing that you trust to be free of motivated reasoning.

I think it’s fine to take some time, and I also think it’s fine to break off some especially-easy-to-address points and respond to those faster.

4/2 Update: A former board member of Effective Ventures US, Rebecca Kagan, has shared that she resigned from the board in protest last year, evidently in part because of various core EAs’ resistance to there being any investigation into what happened with Sam Bankman-Fried. “These mistakes make me very concerned about the amount of harm EA might do in the future.”

Oliver Habryka says that I’m correct that EA still hasn’t conducted any sort of investigation about what happened re SBF/FTX, beyond the narrow investigation into whether EV was facing legal risk:

(I’ve had conversations with Kagan, Habryka, and a bunch of other EAs about this in the past, and I knew Kagan’s post was in the pipeline, though the stuff they’ve said on this topic has been independent of me and would have been written in my absence.)

Someone else messaged me to say that they thought there had been an investigation, but after talking to staff at CEA, they’ve confirmed again that no investigation has ever taken place.

Julia Wise and Ozzie Gooen apparently also called for an investigation, a full five months ago:

[Update Apr. 5: Julia tells me “I would say I listed it as a possible project rather than calling for it exactly.”]

So, bizarre as it seems, the situation does seem to be as it appears: there’s been literally no action on this in the ~17 months since FTX imploded.

Will MacAskill’s appearance on Sam Harris’s podcast is out now, and I’m very happy to hear the new details from Will about what happened from his perspective.

But while he talks (at 56:00) about a lot of individual EAs undergoing an enormous amount of “self-reflection, self-scrutiny” in the wake of FTX’s collapse, and he notes that a lot of EA’s leadership has been replaced with new leadership, I don’t consider this a replacement for the whole (obvious, from my perspective) “actually pay someone to look into what happened and write up a postmortem” thing. If anything I’d have expected EAs to have multiple such write-ups by now so we could compare different perspectives on what happened, not literally zero.