Maybe Utilitarianism Is More Usefully A Theory For Deciding Between Other Ethical Theories

Epistemic status: I know very little about ethics. Just a thought I’ve had for a while.

tl;dr: Maybe utilitarianism is a theory that indicates the ultimate goal and can be used to compare the effectiveness of other ethical theories which tell us how to act.

In my own worldview, I can’t see how anything ultimately matters besides happiness (by happiness I mean net happiness: positive wellbeing minus suffering).

If human rights ultimately always caused suffering for those whose rights were honored, or being virtuous always resulted in greater unhappiness, or justice always resulted in a less equal distribution of happiness and more unhappiness for all conscious entities, would anyone still believe these ethical theories are correct?

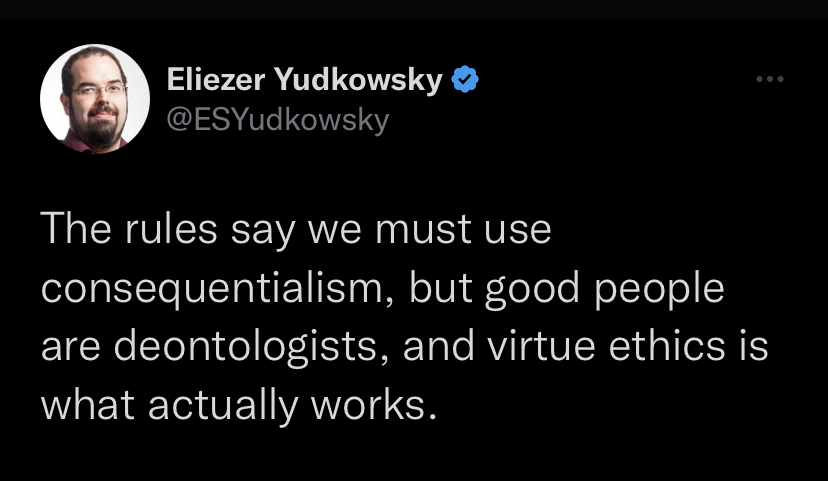

To be clear, I think these theories all have value, and maybe even more value than utilitarianism by utilitarianism’s own standards.

So maybe utilitarianism is not really an ethical theory at all, in the sense that it does not directly tell us which acts are good and so can never directly tell us what to do.

Instead, perhaps utilitarianism just tells us the goal: maximum total happiness, or maximum average happiness, or some balance between equality of happiness and total happiness (and perhaps some balance between positive wellbeing and suffering).

Perhaps it is up to other theories such as deontology, virtue ethics, and justice-focused ethics to tell us how to actually live in the world, while utilitarianism gives us an empirical measuring stick by which to evaluate those theories.

I know other ethical theories might take offense at this. But if any of them had universally bad consequences, in particular if meeting the ethical criteria of these theories always resulted in suffering and unhappiness, and nothing else about the theories changed, I think most people would agree that they all become obviously incorrect.

It seems to me that other ethical theories tell us how to act with an implicit goal of achieving the most or best distributed happiness, but, except to the degree they prescribe or imply optimal quantity/quality/distribution of conscious experience, I don’t see how they present any compelling terminal goals in and of themselves.

Again, I’m not saying that this means we should all be utilitarians. Indeed, it is possible that being a utilitarian might intrinsically lead to bad consequences because the goal of maximizing happiness inherently causes instability or leads to bad end-states, and therefore utilitarianism is pragmatically anti-utilitarian.

Side note: If you are a philosophical pragmatist, then this would mean utilitarianism is actually incorrect; i.e., having happiness as a goal doesn’t work, that which works is correct, therefore utilitarianism is incorrect. Though this is a strange conclusion, I don’t think it is implausible.

In conclusion, it seems to me utilitarianism is more like a theory which describes desirable states of reality, but doesn’t tell us how to actually achieve these states. It may even be that using utilitarianism to choose actions leads to anti-utilitarian outcomes.

I quite like this. The downvotes might be because “meta-ethics” has a distinct meaning in moral philosophy, so some people may have taken one look at the title and thought, “Um, no it isn’t.”

[Edited to add: You might have more luck arguing that deontological ethics and virtue ethics actually belong in the domain of applied ethics—and maybe even aesthetics in places—while normative ethics should just be in the business of figuring out which consequentialist theory is correct.]

Thanks! Changed it to reflect this. As I said, not knowledgeable about ethics.

It would be nice if others would say something like this rather than just down-voting, haha. In any case, your comment is exactly the type of guidance I appreciate!

I think many non-consequentialists really do disagree with utilitarians, and we should leave conceptual room for this to make sense.

So for a different way of conceptualizing the terrain (one that better fits with standard philosophical usage, I think, but agrees with you that utilitarianism is fundamentally an account of the moral goals), see my recent post ‘Ethical Theory and Practice’.