Disentangling “Improving Institutional Decision-Making”

This post discusses what the cause area of “Improving institutional decision-making” (IIDM) currently encompasses, and presents some frameworks for thinking about IIDM’s pathways to impact and what (I think) IIDM should entail. I wrote it because I am currently wrapping up a project on prediction markets, and wanted to write an IIDM-style framework for evaluating the impact of studying or supporting prediction/decision markets— and promptly got quite confused about the whole cause area, so I decided to try to disentangle myself and it.

I have never done serious work in the IIDM sphere, so I definitely should not be treated like an expert on the topic. In fact, it’s likely that I have misunderstood some key aspects of IIDM approaches and would really appreciate any corrections on that front.

Outline

In this post, I write (and sometimes draw) out:

Two somewhat incompatible interpretations of IIDM that seem to often be used in EA; one is a value-neutral approach, and one takes value-alignment of institutions explicitly into account

A sketch of the possible pathways to impact of IIDM

A rough framework for understanding institutions’ impact from an IIDM point of view

An argument for why value-alignment is a critical consideration for IIDM as an EA cause area

Very rough conclusions or next steps

The EA community should distinguish between two types of work that are currently called IIDM:

Value-oriented IIDM (e.g. improving the alignment between institutions’ aims and welfare in the world, or selectively improving the efficiency of already benign or value-aligned institutions) and

Value-neutral IIDM (e.g. developing technical decision-making tools).

Value-oriented IIDM typically has a clearer pathway to impact than value-neutral IIDM does. (For interventions or projects in the style of the latter, I would like to see more of an argument explicitly tracing their impact than “this fits under IIDM, which is useful.” What is happening in reality is likely more reasonable, but still not as carefully laid out as I wish it was.)

Two interpretations of IIDM

Improving institutional decision-making (IIDM) is an established cause area in the community; it has an EA Forum tag, a problem profile page on 80,000 Hours, and 45 of 442 people manually filled it in as a cause area they felt should be a priority on the 2020 EA survey (it tied for first place among causes that had not been listed as options). Personally, I think IIDM-style work is a very promising area for effective altruism.

But the conversation about what IIDM actually entails is still ongoing. This post by the Effective Institutions Project summarizes what survey respondents thought the scope of IIDM should be and gives the following working definition of IIDM:

IIDM is about increasing the technical quality and effective altruism alignment of the most important decisions made by the world’s most important decision-making bodies. It emphasizes building sustained capacity to make high-quality and well-aligned decisions within powerful institutions themselves. (Emphasis mine.)

Note that,

A large majority of survey respondents who provided a response to the request for feedback on the definition (N=43) said that they disliked having “increasing … effective altruism alignment” within the definition. The most common concern expressed was that it may put off those outside of the EA community engaging with IIDM: “outsiders may be a bit suspicious if we say that we try to make institutions aligned with our ideology”. Several respondents went further, arguing that IIDM-like initiatives should be explicitly aligned with institutions’ own preferences. (Emphasis mine.)

This leads me to believe that there are two conflicting ways people in the EA community view IIDM:

Technical or value-neutral IIDM

IIDM seeks to improve the decision-making or decision quality of important institutions.[1]

Example: The Center for Election Science Appeal for 2020 is a post about work like ballot initiatives for approval voting. They make the argument that this work will have a positive impact, but they do not try to change values or target their work selectively. (As far as I can tell.)

Value-oriented IIDM

IIDM seeks to improve the (welfare) impact of decisions made at institutions both by improving the abstract or technical quality of decision-making at (important) institutions, as described above, and by making the aims of those institutions more aligned with general wellbeing.

This can be broken down into two vectors of value-oriented IIDM:

A technical approach to IIDM that is also targeted. Here, IIDM involves selectively improving decision-making (in technical ways like in the first interpretation), but only at institutions whose aims are already aligned with general wellbeing. (The selectiveness of the approach is what makes it value-oriented rather than value-neutral.) Note that this approach is likely to be specific-strategy by definition.

A value-aligning approach to IIDM. Here, IIDM attempts to align the aims of institutions with the general goal of improving wellbeing.

Example: Institutions for Future Generations is a post about ways to orient institutions towards benefiting the long-term future.

Indeed, different work in the IIDM “EA field” seems to approach the question with these different mentalities. Here are some examples.

The 80,000 Hours problem profile seems to assume the first (value-neutral) position on IIDM; there is no mention of attempting to change the aims of the institutions whose decision-making 80,000 Hours suggests EAs should improve.[2]

The Center for Election Science (CES) seems to mostly work on the first kind of IIDM (they work to improve the quality of voting methods used, without attempting to modify voters’ values). (One example post.)

The EA Forum’s wiki description of IIDM seems to favor the value-alignment side of things. For instance, it uses the example that EAs have tried to “increase the attention policy-makers give to existential risk.”

The Effective Institutions Project also seems to aim for the second type of IIDM (value-oriented IIDM), and they have implicitly made the distinction I’m trying to make (that there are two dimensions to IIDM) in a recent post, by noting that,

“Within [the broad scope of IIDM], we see two overall pathways by which better institutional decisions can lead to better outcomes. First, an institution’s mission or stated goals can become more altruistic, in the sense that they more directly contribute to a vision of a better world. [...] Second, an institution can take steps to increase the likelihood that its decisions will lead to the outcomes it desires.” (Emphasis mine.)

Work in the longtermist institutional reform sphere, including posts that fall under the institutions for future generations tag, also leans more towards the value-alignment side of things. Their main goal seems to be to incorporate the interests of future beings into the aims of present important institutions.

There are other important axes (or qualities) along which work in the IIDM sphere is heterogeneous, but I will ignore most of them for the sake of simplicity and because they seem less critical to creating a coherent definition of the field or cause area. I would still like to flag some of them in a footnote because I have large uncertainties about which differences are critical to the frameworks.[3]

Terms used, disclaimers

Terms and how I use them:[4]

When I say “we” in this post, I mean the EA community.

When I call an intervention or an approach “value-neutral,” I mean that it does not attempt to change the aims of any institutions (e.g. to make them more EA-aligned), nor does it specifically target institutions that are already value-aligned in some way (e.g. EA institutions, or institutions whose aims tend to also support the common good). Examples of value-neutral IIDM include developing sources of information available to all kinds of institutions, or perhaps things like voting reform.

When I call an intervention or an approach “value-oriented,” I am mostly attempting to distinguish it from value-neutral interventions; I include interventions that try to change institutions’ aims, and I include approaches that try to improve decision-making exclusively at institutions that are already value-aligned.

“Specific-strategy” interventions have specific institutions in mind (and a clear sense of how to improve their decision-making). For instance, trying to make the CDC’s decisions more grounded in expected value reasoning by influencing their organizational structure would be a specific-strategy intervention. Research on prediction markets or decision theory is not specific-strategy. (I may also occasionally use “targeted” to mean the same thing. The term “specific-strategy” is taken from this post.)

“Generic-strategy” interventions are those that are not specific-strategy; these might be broad approaches that could be applied to all sorts of institutions. They have the upside of wider applicability (e.g. not just for the CDC), and the downside of not being very customized. (I may also occasionally use “broad” to mean something similar. The term “generic-strategy” is taken from this post.)

An “institution” is a formally organized group of people, usually delineated by some sort of legal entity in the jurisdiction(s) in which that group operates. (Taken nearly verbatim from this post. Like the post’s authors, I am excluding more nebulous groups from my use of “institution.” For instance, I exclude “the media.”)

“Decision-making” refers to all of the aspects of the process leading up to an institution’s actions over which that institution has agency. (Taken verbatim from this post.) Note that institutional decision-making can involve more parts than individual decision-making (e.g. different employees at a single institution can have contradictory aims), but I am largely ignoring the nuances and grouping all of the factors under the term.

This post was significantly inspired by the Effective Institutions Project’s post from last month, Which Institutions? (A Framework). My post is, in part, an attempt to add to that one (although you should be able to read it independently).

Disclaimer: I sometimes write hypothetical statements like “If you believe that voters’ values are net harmful, [...]” in order to acknowledge possible starting assumptions people may have that would lead to different conclusions. I do not always agree with these hypotheticals. I.e. I do not assume that these premises are true or likely.

How does IIDM help? A sketch of pathways to impact

Improving institutional decision-making seems to plausibly benefit the world by actually leading to better outcomes of institutions’ decisions, and through two less direct pathways: by improving the broad intellectual environment and by helping some institutions survive while others fail. I will list the latter two first, and the most direct way third, as the discussion on the most direct pathway is quite extensive.

The pathways:

IIDM can improve our intellectual and political environment.

IIDM can help select which institutions will survive and flourish, shaping the future.

IIDM can actually improve the direct outcomes of institutions’ decisions, where “improving outcomes” involves having a greater positive impact on the world (from an EA or moral point of view rather than from the point of view of the institutions).

In brief, my sense is that:

“Improving our intellectual and political environment” (1) seems too vague to be emphasized as a direct pathway to impact for a cause area for the EA movement. (This opinion is very weakly held.)

“Selecting which institutions will survive and flourish” (2) seems very difficult to trace and predict, at least in general.

“Improving the outcomes of institutions’ decisions” (3) involves many types of work and approaches (which should be clarified), and the value-oriented or targeted approaches are the ones that make the most sense.

(1) IIDM can improve our intellectual and political environment

How this works. It is possible that work on improving institutional decision-making will add clarity to the world, allowing for a better intellectual and political environment. Note that this seems relatively dependent on the form of IIDM work that one takes on. For instance, advocacy of evidence-based decision-making targeted at important institutions could have this effect, but improved enforcement of corporate hierarchies might not.

Rough illustrations/examples.

Bureaucratic redundancies are cut out of certain systems in the US government, which removes obstacles to public understanding of government decisions. This, in turn, leads to more informed voters and better policy decisions. (An analogous example could be plausible at universities instead of governments.)

A number of tech companies begin to run post-mortems on their decisions, which generates new knowledge about public values, particular economic processes, or other questions of interest. (Alternatively, this makes post-mortems a “norm,” which in turn improves accountability in public discourse.)

The ambient level of institutional competence is raised (e.g. when new research develops improved aggregations of forecasts), which has good downstream effects of some kind.

My impression is that this is too fuzzy or indirect (at least, as of now) to be a main argument for IIDM as a coherent “EA cause area.” In other words, it seems like a plausible pathway to impact for IIDM, but does not seem like a very effective thing to aim for.

Additionally, my impression is that, in related discussions about similarly broad or indirect work (like kinds of research), there seems to be a consensus that foundational work is effective (only) if there is a clear use for the work. The most relevant discussions seem to be about things like research in physics, mathematics, or education, where applications in AI safety are often emphasized. The sense I get is that most foundational research is not marginal enough to be prioritized by the EA movement. EA organizations end up doing things like suggesting that people go into more applied fields in academia. (Warning: this is an oversimplification of the discussion, which is much more nuanced but seems to lean the way I have described.)[5]

Intuitively, however, “more clarity” or “more competence” seem like robustly good things. It is also possible that some types of IIDM work would benefit the world very significantly via this pathway, but that this impact tends to be quite hard to trace.[6]

(2) IIDM can help select which institutions will survive and flourish

How this works. Improving decision-making at some key value-aligned institutions should increase the chances that those institutions (and their traditions, values, etc.) will survive while others will fail.[7] This could, in turn, determine which values and patterns of power persist into the future.

Rough illustrations.

In the event of political or economic conflict, one could imagine varying quality of institutional decision-making in different governments playing a crucial role in determining the result of the conflict. In other words, if a given country’s decision-making systems are improved, it has a greater chance of dominating in the case of economic or military competition, which would then shape the future of humanity (e.g. through cultural persistence).

Suppose a number of large companies are vying for dominance in some market (let’s say three providers of meat alternatives) and it seems that some of them are much more value-aligned than others (e.g. if one uses a process that harms the environment or sentient beings). We could make the argument that improving the decision-making of some of these (and not others) might help the value-aligned ones survive and the others fail.

Two media organizations are competing for the same audience, and one is more value-aligned than the other.

These effects could be important, but they are extremely hard to predict accurately, even to the extent that there might be sign errors in the calculation of expected impact. In other words, it would be extremely hard to produce candidate IIDM interventions that would have sufficiently predictable outcomes via this pathway, as the outcomes would depend on many very uncertain factors.[8] I would also guess that there is a tradeoff between the size of the impact and the predictability of the effect of an intervention. (For example, on the level of governments, the effect of one dominating the other would probably be enormous but hard to objectively evaluate. If, however, there are two directly competing institutions with a contained and easily measurable impact, it is plausible that we could evaluate which one is more value-aligned and improve its odds of survival, but the impact would probably be relatively small.)

Still, the impact of some interventions along this pathway could be enormous, so it seems worth someone doing more work to better understand the possibilities (or maybe people have already done this work and I have simply not found it).

(3) IIDM can improve the outcomes of institutions’ decisions

How this works. The most direct way IIDM benefits the world seems to be by directly affecting the outcomes of institutions’ decisions. In order to understand how this could happen, I sketched out how institutions have a positive impact at all, and in turn, how IIDM helps an institution have a greater positive impact. This is what the next section of the post discusses. After that, I return to this pathway and break it down into more specific sub-pathways.

Value-alignment vs. decision quality of institutions

We could sort institutions by infinitely many properties, but let’s focus on two of them: how aligned the aims of the institution are to public good or to EA values (value-alignment), and how good the institution’s decision-making is, relative to the institution’s intrinsic goals.[9] (Note that the aims of an institution do not have to be intentionally altruistic; an institution can have private aims that align with public good.) We can then plot various institutions on a graph with these axes.

I think that, very roughly, the benefit of an institution is directly related to the product of its measures on these two axes (how aligned its goals are to general wellbeing and how good its decision-making is). In other words, the quality of the goals of an institution and the quality of its decision-making (or of its capacity to execute decisions that achieve its goals) are somewhat multiplicative. Note that there are many other important factors, like the scope of an institution’s power, but we will set them aside for now.

Benefit = [value-alignment] * [decision quality] * [other factors]

This gives us the following heuristics and visualization:

Note that

You can think of the general benefit from an institution in terms of the (signed) area of its relevant rectangle (the rectangle corresponds to the point that represents the institution on the graphic).

If an institution is very value-aligned but with poor decision-making systems (like A in the diagram), then improving its decision-making will have a big positive impact.

If an institution’s goals are not very aligned with goals like public wellbeing, like C or D in the diagram (e.g. if all the institution wants to do is open giant factory farms), then improving its decision-making will have a negligible or negative impact.

In general, in the diagram above, we want to broadly move institutions up and to the right.
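
As a rough illustration of the multiplicative heuristic above, here is a minimal Python sketch. The function names, the numeric scales (negative value-alignment standing for harmful aims), and the numbers given to institutions “A” and “C” are all assumptions made up for this example; the post does not specify units or values, so only the signs of the results are meant to be informative.

```python
def benefit(value_alignment: float, decision_quality: float,
            other_factors: float = 1.0) -> float:
    """Very rough heuristic from the text: benefit is the product of the factors."""
    return value_alignment * decision_quality * other_factors


def gain_from_better_decisions(inst: dict, improvement: float) -> float:
    """Change in benefit when only decision quality improves (a shift to the right)."""
    before = benefit(inst["value_alignment"], inst["decision_quality"])
    after = benefit(inst["value_alignment"], inst["decision_quality"] + improvement)
    return after - before


# Hypothetical institutions; the numbers are illustrative assumptions, not data.
# A: well-aligned aims but poor decision-making. C: misaligned aims.
institution_a = {"value_alignment": 0.8, "decision_quality": 0.2}
institution_c = {"value_alignment": -0.5, "decision_quality": 0.2}

gain_a = gain_from_better_decisions(institution_a, 0.5)
gain_c = gain_from_better_decisions(institution_c, 0.5)
print(f"A: {gain_a:+.2f}, C: {gain_c:+.2f}")
```

Under this toy model, the same improvement in decision quality helps when value-alignment is positive and hurts when it is negative, because the gain is just the value-alignment multiplied by the size of the improvement.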

The case for targeted or value-oriented IIDM

We can split up the IIDM influence on the outcomes of institutions’ decisions into three sub-pathways, which I summarize here and analyze below.

(A) Broadly improve decision-making at institutions (in value-neutral ways)

If you believe that institutions are generally already value-aligned, or if you think that better technical decision-making at institutions will lead to value-alignment, then you might think that broadly working to improve our decision-making tool-kits will result in a greater positive impact from institutions as a whole.

(B) Align the aims of institutions with public good

We can try to align the aims of important institutions with general wellbeing.

(C) Improve decision-making at institutions that already bring good to the world (e.g. at EA institutions)

We can find institutions whose aims are already (perhaps incidentally) aligned with increasing wellbeing, and improve those institutions’ ability to carry out those aims. This would also then increase the positive impact from those institutions.[10]

Now, if we (A) focus blindly on technical or value-neutral IIDM — improving the efficiency of decision-making without taking values into account (this amounts to shifting points to the right without moving them up) — we might increase the benefits from benign (but inefficient) institutions, but also end up increasing the negative impact of a bunch of actively harmful institutions. So we would either have to avoid improving decision-making at harmful institutions, or make the argument that the increase in harm would be smaller than the increase in benefits from other institutions. (This is plausible— more on this below.)

At the other extreme, we could blindly improve the extent to which institutions are value-aligned (i.e. shift institutions up on the plot— an extreme version of (B) above).

There is not a lot of risk in interventions that broadly value-align institutions (e.g. setting up altruistic incentives) except if there is a significant number of organizations that are well-meaning but have such bad decision quality that they are actively counterproductive to their aims, and if improving their aims would have a corresponding worsening in (counter)productivity. For instance, one can imagine some incompetent animal welfare advocacy group that actually worsens the public’s views on animal welfare. Making them more focused on farmed animal welfare — arguably an improvement in terms of value-alignment — might redirect the public’s ire towards that cause, which could be (even) worse. Such an institution would be to the left of the vertical axis in this picture (it would have negative decision quality).[11] My guess is that such institutions are relatively rare, or have a small impact, and that they do not live for very long, but I might be very wrong. (Semi-relevant xkcd.)

Focusing on improving institutions’ value-alignment can also fail from an EA point of view by not having a large impact (per unit of effort or resources); it is possible that shifting the aims of institutions is generally very difficult, or that the potential benefits from institutions are overwhelmingly bottlenecked by decision-making ability, rather than by the value-alignment of institutions’ existing aims. My sense is that shifting IIDM entirely towards a focus on value-alignment only would be unreasonable.

(And in any case, this project would then become more similar to work done around value lock-in, moral advocacy, or moral circle expansion than to work that has generally been collected under the IIDM umbrella. The possible exception is specifically targeting important institutions and attempting to shift their values or aims.)

My conclusions on improving the outcomes of institutions’ decisions

Ideally, then, we want to do one of the following:

1. (C) Shift selectively, by improving the decision quality of already value-aligned institutions (or perhaps by improving the value-alignment of some powerful institutions)

By default, this includes meta-EA work on how to improve the effectiveness of EA institutions. It probably also includes things like meta-science, on how to improve academia or our information systems, some attempts to make the aims of industrial entities more EA-value-aligned, and some policy or government-related work.

I’m not sure where building institutions for future generations would fit in, or whether it should go under IIDM at all. My weak impression is that the creation of new institutions should not be a type of IIDM, but the re-orientation of existing institutions to include the interests of future generations (e.g. by setting up a committee to evaluate x-risks) would in fact fall under IIDM.

2. (Resolution of A and B) If taking a broad generic-strategy approach, shift up or diagonally (right and up, by simultaneously increasing both the value-alignment and the decision quality of institutions; up enough to ensure that institutions that are net harmful do not contribute to a net negative impact from the intervention).[12]

This would include work like researching cooperation systems, broad advocacy for rationality and evidence-based decision-making, or theoretical work on how one might increase the effectiveness of charity groups.

If an intervention is chiefly developing (e.g. technical) tools for decision-making, without providing those tools to a specific set of institutions, then those tools should probably be asymmetric weapons that benefit the common good more easily than they can be used to harm it.

Note: Owen Cotton-Barratt suggests what I think is a diagonal (if quite diffused) approach to improving decision-making in this post. He also argues for developing “truth-seeking self-aware altruistic decision-making,” which I think is a variation on shifting diagonally, in this different post (this is a form of improving technical decision-making, e.g. by being more truth-seeking, in a way that is also “altruistic” or value-aligned).

An alternative to shifting diagonally would involve somehow shifting up first, and then shifting to the right. (See something analogous here.)

Both of these options (shifting selectively and shifting diagonally) take the value-alignment of institutions’ goals into account and/or directly try to influence it.
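
To make the comparison between these options concrete, here is a small Python sketch that applies each kind of shift to a made-up population of three institutions and reports the change in total benefit under the multiplicative heuristic from earlier in the post. Everything here is an assumption for illustration: the `Institution` class, the three example institutions and their numbers, the equal step size of 0.3 for every shift, and the choice to ignore how costly or feasible each shift is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Institution:
    name: str
    value_alignment: float   # assumed scale: negative means harmful aims
    decision_quality: float  # assumed scale: higher means better at achieving own aims


def total_benefit(institutions) -> float:
    """Sum of value-alignment * decision quality (other factors set aside)."""
    return sum(i.value_alignment * i.decision_quality for i in institutions)


def shift(institutions, d_alignment=0.0, d_quality=0.0, only_aligned=False):
    """Return a new population after moving institutions up and/or right.

    With only_aligned=True, institutions with non-positive value-alignment
    are left untouched (a stand-in for a targeted intervention).
    """
    shifted = []
    for i in institutions:
        if only_aligned and i.value_alignment <= 0:
            shifted.append(i)
        else:
            shifted.append(Institution(i.name,
                                       i.value_alignment + d_alignment,
                                       i.decision_quality + d_quality))
    return shifted


# Hypothetical population; the numbers are illustrative assumptions, not data.
population = [
    Institution("benign but inefficient", 0.6, 0.2),
    Institution("roughly neutral", 0.1, 0.5),
    Institution("harmful and efficient", -0.8, 0.7),
]

baseline = total_benefit(population)
step = 0.3
results = {
    "right, everyone (value-neutral)": total_benefit(shift(population, d_quality=step)) - baseline,
    "right, aligned only (selective)": total_benefit(shift(population, d_quality=step, only_aligned=True)) - baseline,
    "up, everyone": total_benefit(shift(population, d_alignment=step)) - baseline,
    "diagonal, everyone": total_benefit(shift(population, d_alignment=step, d_quality=step)) - baseline,
}
for label, change in results.items():
    print(f"{label}: {change:+.2f}")
```

With these particular made-up numbers, the untargeted shift to the right comes out slightly negative, while the selective and diagonal shifts come out positive. A different assumed population (for instance, one where most institutions are mildly benign) would flip the sign of the value-neutral result, which is exactly the empirical question discussed in the next section.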

Maybe un-targeted, value-neutral IIDM actually has a big positive impact

It’s possible that value-neutral and value-oriented IIDM are nominally different, but tightly related in practice. Maybe if we focus on broadly and technically improving institutional decision-making, we will tend to improve the welfare outcomes (from an EA point of view). This position assumes that institutions’ goals are generally or asymmetrically benign, or perhaps that the institutions whose decision-making we would end up improving tend to already (disproportionately) have benign goals. I’ll explore some of these possibilities below, but ultimately I remain skeptical (although very open to good arguments for this position, or to changing my mind about parts of the arguments below).

(a) Maybe the average/median institution’s goals are already aligned with public good

This seems plausible, since institutions exist to promote someone’s benefit and don’t tend to prioritize harming anyone. My best guess at the distribution of institutions is illustrated here.

For instance, many institutions likely increase economic growth as a side effect of accomplishing their private aims. Economic growth and public wellbeing are connected,[13] so this could be a strong argument for the case that the aims of institutions tend to in fact be value-aligned.

And yet, it seems possible that there are some institutions that cause an overwhelming amount of harm (e.g. the farming industry or some x-risk-increasing endeavors like gain-of-function research), and that the value-neutral version of IIDM fails to take that into account.

I have not seen an analysis of whether this is unlikely enough to write off and stop considering, and cannot come up with one for this post.

(b) Maybe improving decision-making tends to also improve value-alignment

For example, in their framework for evaluating democratic interventions (from a longtermist point of view), Marie Buhl and Tom Barnes[14] identify “Accuracy” as one of the features or qualities of democracy. (“Accuracy” is described as “The mechanism for selecting representatives [...] accurately reflects the preferences of the decision-making group.”) They decide that this feature should be maximized, largely because it seems to decrease the risks of great power conflict and authoritarianism. (“Accuracy” got 8/10 on how much one should maximize it. For context, “Responsiveness” got 6/10, and “Voter competence” got 10/10.)

This suggests that increasing “accuracy” of governments or institutions, which is work that is a form of (at least nominally) value-neutral IIDM, improves decision-making in ways that are aligned with EA values (like preserving the long-term future). Thus the value-neutral approach incidentally achieves the aims of the value-oriented approach. (On the other hand, improving “responsiveness” might also fall under value-neutral IIDM, and it only got 6/10.)

However, I don’t see a clear argument for how an abstract intervention that improves decision-making would also incidentally improve the value-alignment of an institution. (See also the discussion on the “Orthogonality Thesis” in AI, which is the idea that the intelligence of AIs, as it grows, will not necessarily lead them to converge to a common or close set of goals.) So for any given proposed decision-quality-targeted intervention, I think an EA framework for evaluating it should explicitly consider how it would improve the value-alignment of an institution and/or consider whether the institution is already value-aligned. This brings me back to the value-oriented version of IIDM.

(c) Broad benefits of improving decision-making in a society

Alternatively, one could view technical or neutral IIDM as a project that is focused on developing a foundational set of tools for decision-making. Like the claim outlined in (a), this pathway to impact seems plausible to me— it doesn’t contradict my intuitions (more clarity in the world seems good)— but in need of a more careful analysis. Note that if it works, it would improve the outcomes of institutions’ decisions (so I listed it here for completeness), but it is closer to Pathway 1 (“IIDM can improve our intellectual and political environment”) — please see a relevant discussion earlier in this post.

(d) Maybe value-aligned institutions will disproportionately benefit from the development of broad decision-making tools

It could be that there is a connection between the value-alignment of an institution and its ability or willingness to make use of available decision-making tools, and that this connection leads to value-aligned institutions becoming more efficient faster than value-misaligned institutions on average. As a result of this, the net impact of developing and improving institutional decision-making tools is positive (even if the tools could theoretically be used for good or for harm). This does not seem plausible to me; I know of efficient and technologically progressive institutions that seem extremely harmful, and of benign, well-meaning institutions that are slow to change and inefficient. So unless we deliberately, selectively encourage only value-aligned institutions to use the tools we develop, I think we should assume that a basically random (at least along the “value-alignment” dimension) set of institutions will adopt any given set of tools by a given time. (And not that more value-aligned institutions will adopt them first.)

To recap, in picture form:

My doubts and conclusions on value-neutral IIDM

In the end, I am not convinced that a broad and value-neutral approach to improving institutional decision-making is a project that results in better decisions by institutions (where “better” means having a greater positive impact on the world).

Here are a couple of scenarios that I think weaken the case for value-neutral IIDM that is discussed in this section:

If you believe that voters’ values are net harmful (e.g. if you think they would vote for an industrial farming hellscape, given the opportunity, or if you think technocracy is generally better), then increasing the efficiency of the process that translates voters’ values to policies does not bring welfare.

If you think that certain important corporations’ intrinsic aims are net harmful or that the average institution’s aims are basically morally neutral, then broadly improving the decision-making of institutions doesn’t seem to directly benefit the world (i.e. the impact is small or negative).

As a result, I think value-oriented IIDM makes more sense than value-neutral IIDM. I.e. an argument for an IIDM intervention should:

Claim that the intervention is targeted at value-aligned institutions (e.g. meta-EA work), or

If the intervention is generic-strategy, claim that the intervention would move institutions up (increase value-alignment) or diagonally (increase value-alignment and technical decision-quality), not just to the right (where only decision quality is improved)

I also (weakly) think that, for the sake of clarity and because the approaches seem quite different, we should generally distinguish between “meta EA” work and IIDM work. For instance, I think improving decision-making at an EA organization should probably be understood as meta EA. On the other hand, there could be IIDM-style projects whose main or most immediate application is in improving EA organizations, which complicates the matter. For instance, if someone develops good forecasting platforms or analyzes perverse truth-hiding incentives within corporations, this could be very useful for targeted IIDM later, and also at EA organizations now.

Conclusion

I distinguished some clashing interpretations of improving institutional decision-making as an EA cause area. I also made a case for the value-oriented approach, which can be further separated into specific-strategy interventions and broad work that improves both the value-alignment and the technical decision-making of institutions. Along the way, I offered some heuristics for thinking about the effects of an institution or an IIDM intervention.

I bet I have made various mistakes and I know that I made serious oversimplifications—please let me know if you see ways to improve this. I see this post as a rough step to get on the same page about approaches to IIDM and to further make sense of the whole area.

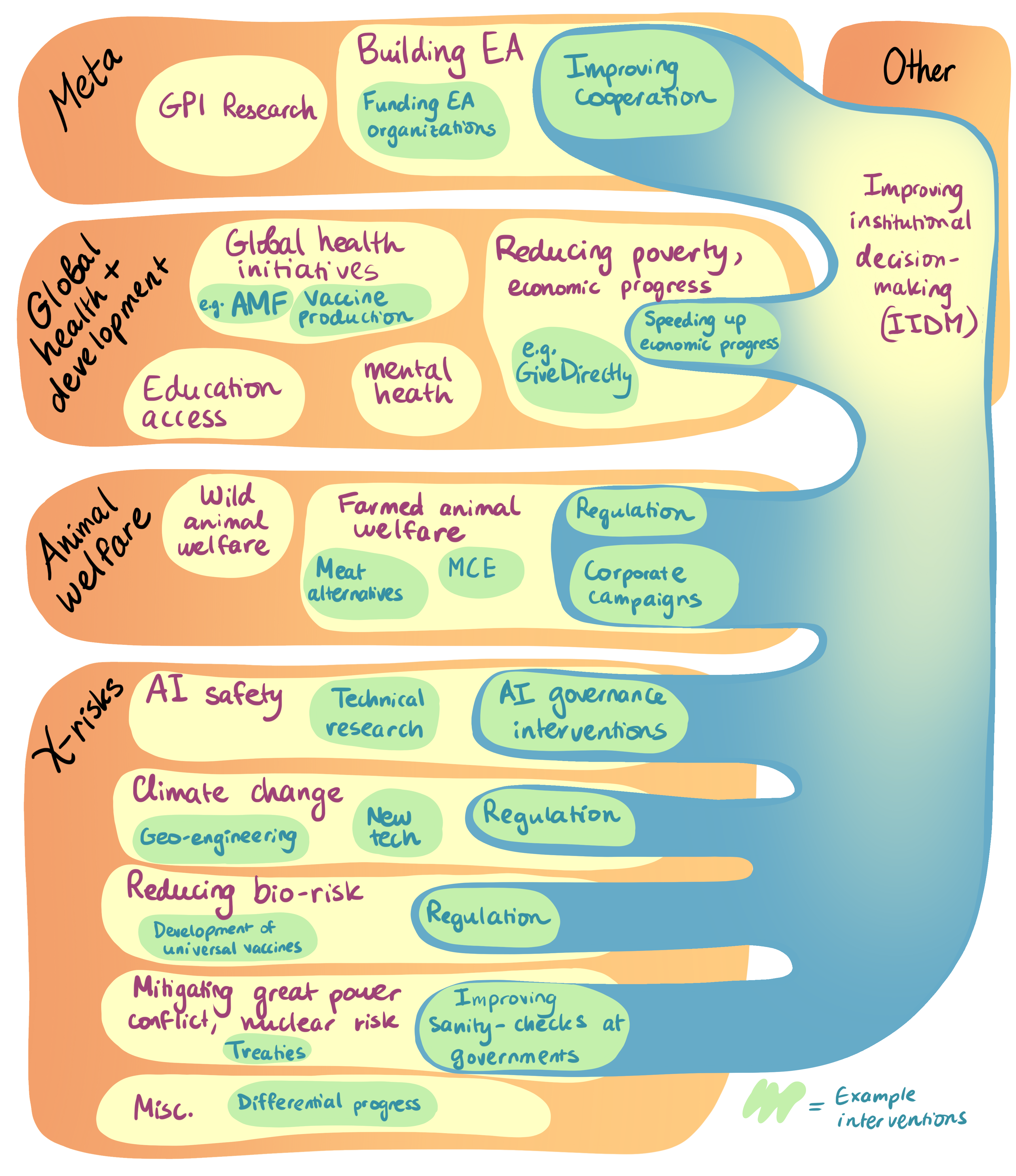

As a final addition, here is a rough sketch of where I think IIDM stands in relation to some other “EA cause areas.” This visualization is meant to convey the way IIDM intersects with some main EA causes. It is not meant as a classification of all EA work.[15]

Please note that while “cause areas” are a heuristic for looking for new interventions, evaluating interventions, or generally trying to understand how one can have a big positive impact, cause areas are only a proxy for those goals and have been criticized for e.g. possibly leading to an overly narrow focus in the community. Note also that I view longtermism as a dimension to different interventions or work in EA rather than as a distinct cause area. For instance, I believe that there can be longtermist work on animal welfare. The causes here are in no particular order.

Credits

This essay is a project of Rethink Priorities.

It was written by Lizka, an intern at Rethink Priorities. Thanks to Eric Neyman, Natalia Mendonça, Emily Grundy, Charles Dillon, Michael Aird, Linch Zhang, Janique Behman, and Peter Wildeford for their extremely helpful feedback. Any mistakes are my own. If you like our work, please consider subscribing to our newsletter. You can see all our work to date here.

Notes

- ↩︎

As mentioned before, “decision-making” involves everything from clarifying organizations’ goals, to aligning personal goals of employees to institutions’ goals (e.g. by reducing perverse or internal-politics incentives), to improving efficiency of systems and mechanisms that translate broad goals to actions (or “decisions”) by those organizations. It does not, however, include aligning the aims of those organizations with “the collective good.”

- ↩︎

This is from September 12, 2021.

- ↩︎

Other crucial distinctions: types of institutions and IIDM work; (1.) IIDM at private vs. public institutions (e.g. companies vs. governments). (2.) “Classic” IIDM vs. longtermist IIDM. (3.) IIDM at simple-model institutions with a hierarchy and obvious decision-makers vs IIDM at complex institutions with distributed or implicitly democratic decision-making processes. (4.) Powerful institutions vs institutions with a small influence. (5.) Work that is more theoretical or removed (research on IIDM, development of tools, etc.) and work that is more applied or direct (actually restructuring incentives at an institution, setting up external incentives, introducing ballot initiatives, etc.)

- ↩︎

I really appreciate this post’s care in explaining their terms and took many of my definitions directly from it.

- ↩︎

I have not found a very satisfying summary of the arguments on both sides (of, say, the “pure science as an EA cause area” debate), and may come back to this in a future post. I would also appreciate any resources anyone might have. (To be honest, I have mostly been on the side of the debate that argues against the conclusions in this post.) [UPDATE: this post has a nice (and brief) exploration of the theory of change of “raising the sanity waterline.”]

- ↩︎

“Robustly good” interventions with a hard to trace but plausibly significant impact, and which are pretty accessible for people in different positions could also be good candidates for “Task Y.”

- ↩︎

Note that there is also EA-driven work on creating (value-aligned) institutions that will survive and flourish. I think this is very close to IIDM, but it does not seem quite as neatly relevant to the “IIDM cause area,” even though it involves institutions.

- ↩︎

Caveat: if the sign of an intervention is very uncertain (say, only 55% certain), that decreases the expected impact by a factor of 10 but still could be really large.
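The arithmetic behind this caveat can be sketched roughly as follows. This is only an illustration under an assumption that the footnote does not state: the intervention is taken to have the same magnitude of impact whether its sign turns out positive or negative. The function name `expected_signed_impact` is hypothetical.

```python
def expected_signed_impact(p_positive: float, magnitude: float = 1.0) -> float:
    """Expected impact of an intervention that helps with probability
    p_positive and otherwise harms by the same magnitude.

    The symmetric-magnitude setup is a simplifying assumption made
    for this sketch, not something claimed in the post.
    """
    if not 0.0 <= p_positive <= 1.0:
        raise ValueError("p_positive must be a probability in [0, 1]")
    return p_positive * magnitude - (1.0 - p_positive) * magnitude


if __name__ == "__main__":
    certain = expected_signed_impact(1.0)     # sign known to be positive
    uncertain = expected_signed_impact(0.55)  # only 55% sure of the sign
    # 0.55 - 0.45 = 0.10, so the expected impact shrinks roughly tenfold.
    print(f"shrinkage factor: {certain / uncertain:.1f}")
```

Under this assumption, 55% confidence in the sign leaves about a tenth of the impact one would expect with full confidence, which is where the "factor of 10" comes from.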

- ↩︎

The quality of an institution’s decision-making is some measure of how much the institution is able to use its resources for its aims. This is a complicated measure— it has many more parts than are relevant for improving a single person’s decision-making. IIDM can involve aligning employees’ incentives with those of the institution (e.g. removing perverse goals like managers wanting failing projects to look successful and hiding information about their failure, or corporate sabotage, etc.), improving the quality of information used in decision-making, improving coordination systems, and more. I simplify all of this by roughly grouping all of these factors into one measure of decision quality. (If you view decision quality as multidimensional, this would be a projection of decision quality onto one line.)

- ↩︎

There is also the possibility of selectively harming institutional decision-making: sabotaging evil or accidentally harmful institutions’ decision-making by e.g. adding frivolous bureaucratic processes so that their negative impact is smaller. I am adding it here for completeness, but in all seriousness, I think such efforts would probably end up being bad for the world, as they can easily go wrong. [Coming soon: link to a relevant post.]

- ↩︎

If an institution is in the upper left quadrant of the “decision quality vs value alignment” plot (i.e. it is at (x,y), where x, which corresponds to decision quality, is negative, and y is positive), then its signed area is negative, which is what it should be (the impact of a well-meaning but actively counterproductive institution is in fact negative). This also means that increasing its value alignment (moving it up on the plot) corresponds to an even worse impact from that institution. I think in practice, the counter-intuitiveness of this also points to an issue in my definition of “value-alignment” from an EA point of view, since effectiveness is part of the EA community’s values.

- ↩︎

Note that using this model, the specific ratio of the extents to which an intervention moves institutions broadly up and broadly to the right (the slope of the vector of the intervention) will matter and will depend on the initial distribution/placement of institutions on the value-alignment vs efficiency of decision-making axes. (If we use the illustration, we are basically wanting to increase the sum of all signed areas of the rectangles.) I’m ignoring this for the sake of simplicity (and to avoid producing an even longer post), and also because my best guess is that institutions are at least slightly positively value-aligned on average. Still, I wanted to flag this issue.
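To make the dependence on the initial placement of institutions concrete, here is a minimal sketch of the signed-area model. It assumes each institution is a point (x, y), with x as decision quality and y as value alignment, and that an intervention shifts every institution by the same vector (dx, dy). The function names and the three example institutions are made up for illustration.

```python
from typing import List, Tuple

# (decision_quality x, value_alignment y); the values used below are hypothetical.
Institution = Tuple[float, float]


def total_signed_area(institutions: List[Institution]) -> float:
    """Sum of signed rectangle areas x * y, the post's rough proxy for impact."""
    return sum(x * y for x, y in institutions)


def apply_intervention(
    institutions: List[Institution], dx: float, dy: float
) -> List[Institution]:
    """Shift every institution by the same vector (dx, dy).

    A uniform shift is a simplifying assumption of this sketch.
    """
    return [(x + dx, y + dy) for x, y in institutions]


if __name__ == "__main__":
    institutions = [(1.0, 0.5), (2.0, -1.0), (-0.5, 1.0)]
    baseline = total_signed_area(institutions)

    moved_right = total_signed_area(apply_intervention(institutions, 1.0, 0.0))
    moved_up = total_signed_area(apply_intervention(institutions, 0.0, 1.0))

    # A pure rightward shift changes the total by dx * sum(y), so its sign
    # depends on whether institutions are value-aligned on average.
    print("change from moving right:", moved_right - baseline)
    # A pure upward shift changes the total by dy * sum(x).
    print("change from moving up:", moved_up - baseline)
```

In this toy model, a purely rightward (decision-quality-only) shift helps only if the sum of value-alignment scores is positive, which is why the best guess about average alignment matters for the conclusion.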

- ↩︎

For instance, GDP is related to various national health proxies (see for instance this article, or this one). EAs have suggested that speeding up development would be valuable. There is also a different discussion with a focus on mitigating some risks associated with economic progress by doing things like speeding up development of risk-reducing technologies (e.g. vaccines).

- ↩︎

Note: Marie and Tom are my fellow summer interns at Rethink Priorities.

- ↩︎


Wow! It’s really great to see such an in-depth response to the definitional and foundational work that’s been taking place around IIDM over the past year, plus I love your hand-drawn illustrations! As the author or co-author of several of the pieces you cited, I thought I’d share a few thoughts and reactions to different issues you brought up. First, on the distinctions and delineations between the value-neutral and value-oriented paradigms (I like those labels, by the way):

I don’t quite agree that Jess Whittlestone’s problem profile for 80K falls into what you’re calling the “value neutral” category, as she stresses at several points the potential of working with institutions that are working on “important problems” or similar. For example, she writes: “Work on ‘improving decision-making’ very broadly isn’t all that neglected. There are a lot of people, in both industry and academia, trying out different techniques to improve decision-making....However, there seems to be very little work focused on...putting the best-proven techniques into practice in the most influential institutions.” The definition of “important” or “influential” is left unstated in that piece, but from the context and examples provided, I read the intention as one of framing opportunities from the standpoint of broad societal wellbeing rather than organizations’ parochial goals.

This segues nicely into my second response, which is that I don’t think the value-neutral version of IIDM is really much of a thing in the EA community. CES is sort of an awkward example to use because a core tenet of democracy is the idea that one citizen’s values and policy preferences shouldn’t count more than another’s; I’d argue that the impartial welfarist perspective that’s core to EA philosophy is rooted in similar ideas. By contrast, I think people in our community are much more willing to say that some organizations’ values are better than others, both because organizations don’t have the same rights as human beings and also because organizations can agglomerate disproportionate power more easily and scalably than people. I’ve definitely seen disagreement about how appropriate or effective it is to try to change organizations’ values, but not so much about the idea that they’re important to take into account in some way. [Edit: no longer endorsed, see comment below.]

There is a third type of value-oriented approach that you don’t really explore but I think is fairly common in the EA community as well as outside of it: looking for opportunities to make a positive impact from an impartial welfarist perspective on a smaller scale within a non-aligned organization (e.g., by working with a single subdivision or team, or on one specific policy decision) without trying to change the organization’s values in a broader sense.

I appreciated your thought-provoking exploration of the two indirect pathways to impact you proposed. Regarding the second pathway (selecting which institutions will survive and flourish), I would propose that an additional complicating factor is that non-value-aligned institutions may be less constrained by ethical considerations in their option set, which could give them an advantage over value-aligned institutions from the standpoint of maximizing power and influence.

I did have a few critiques about the section on directly improving the outcomes of institutions’ decisions:

I think the 2x2 grid you use throughout is a bit misleading. It looks like you’re essentially using decision quality as a proxy for institutional power, and then concluding that intentions x capability = outcomes. But decision quality is only one input into institutional capabilities, and in the short term is dominated by institutional resources—e.g., the government of Denmark might have better average decision quality than the government of the United States, but it’s hard to argue that Denmark’s decisions matter more. For that reason, I think that selecting opportunities on the basis of institutional power/positioning is at least as important as value alignment. The visualization approach you took in the “A few overwhelmingly harmful institutions” graph seems to be on the right track in this respect.

One issue you don’t really touch on except in a footnote is the distinction between stated values and de facto values for institutions, or internal alignment among institutional stakeholders. For example, consider a typical private health insurer in the US. In theory, its goal is to increase the health and wellbeing of millions of patients—a highly value-aligned goal! Yet in practice, the organization engages in many predatory practices to serve its own growth, enrichment of core stakeholders, etc. So is this an altruistic institution or not? And does bringing its (non-altruistic) actions into greater alignment with its (altruistic) goals count as improving decision quality or increasing value alignment under your paradigm?

While overall I tend to agree with you that a value-oriented approach is better, I don’t think you give a fair shake to the argument that “value-aligned institutions will disproportionately benefit from the development of broad decision-making tools.” It’s important to remember that improving institutional decision-making in the social sector and especially from an EA perspective is a very recent concept. The professional world is incredibly siloed, and it’s not hard at all for me to imagine that ostensibly publicly available resources and tools that anyone could use would, in practice, be distributed through networks that ensure disproportionate adoption by well-intentioned individuals and groups. I believe that something like this is happening with Metaculus, for example.

One final technical note: you used “generic-strategy” in a different way than we did in the “Which Institutions?” post. Our definition imagines a specific organization that is targeted through a non-specific strategy, whereas yours imagines a specific strategy not targeted to any specific organization. I agree that the latter deserves its own label, but suggest a different one than “generic-strategy” to avoid confusion with the previous post.

I’ve focused mostly on criticisms here for the sake of efficiency, but I really was very impressed with this article and hope to see more writing from you in the future, on this topic and others!

Thank you for this response! I think I largely agree with you, and plan to add some (marked) edits as a result. More specifically,

On the 80K problem profile:

I think you are right; they are value-oriented in that they implicitly argue for the targeted approach. I do think they could have made it a little clearer, as much (most?) of the actual work they recommend or list as an example seems to be research-style. The key (and important) exception that I ignored in the post is the “3. Fostering adoption of the best proven techniques in high-impact areas” work they recommend, which I should not have overlooked. (I will edit that part of my post, and likely add a new example of research-level value-neutral IIDM work, like a behavioral science research project.)

“I don’t think the value-neutral version of IIDM is really much of a thing in the EA community”

Once again, I think I agree, although I think there are some rationality/decision-making projects that are popular but not very targeted or value-oriented. Does that seem reasonable? The CES example is quite complicated, but I’m not sure that I think it should be disqualified here. (To be clear, however, I do think CES seems to do very valuable work—I’m just not exactly sure how to evaluate it.)

Side note, on “a core tenet of democracy is the idea that one citizen’s values and policy preferences shouldn’t count more than another’s”

I agree that this is key to democracy. However, I do think it is valid to discuss to what extent voters’ values align with actual global good (and I don’t think this opinion is very controversial). For instance, voters might be more nationalistic than one might hope, they might undervalue certain groups’ rights, or they might not value animal or future lives. So I think that, to understand the actual (welfare) impact of an intervention that improves a government’s ability to execute its voters’ aims, we would need to consider more than democratic values. (Does that make sense? I feel like I might have misinterpreted what you were trying to say, a bit, and am not sure that I am explaining myself properly.) On the other hand, it’s possible that good government decision-making is bottlenecked more by its ability to execute its voters’ aims than it is by the ethical alignment of the voters’ values, but I still wish this were more explicitly considered.

“It looks like you’re essentially using decision quality as a proxy for institutional power, and then concluding that intentions x capability = outcomes.”

I think I explained myself poorly in the post, but this is not how I was thinking about it. I agree that the power of an institution is (at least) as important as its decision-making skill (although it does seem likely that these things are quite related), but I viewed IIDM as mostly focused on decision-making and set power aside. If I were to draw this out, I would add power/scope of institutions as a third axis or dimension (although I would worry about presenting a false picture of orthogonality between power and decision quality). The impact of an institution would then be related to the relevant volume of a rectangular prism, not the relevant area of a rectangle. (Note that the visualizing approach in the “A few overwhelmingly harmful institutions” image is another way of drawing volume or a third dimension, I think.) I might add a note along these lines to the post to clarify things a bit.

About “the distinction between stated values and de facto values for institutions”

You’re right, I am very unclear about this (and it’s probably muddled in my head, too). I am basically always trying to talk about the de facto values. For instance, if a finance company whose only aim is to profit also incidentally brings a bunch of value to the world, then I would view it as value-aligned for the purpose of this post. To answer your questions about the typical private health insurance company, “does bringing its (non-altruistic) actions into greater alignment with its (altruistic) goals count as improving decision quality or increasing value alignment under your paradigm”—it would count as increasing value alignment, not improving decision quality.

Honestly, though, I think this means I should be much more careful about this term, and probably just clearly differentiate between “stated-value-alignment” and “practical-value-alignment.” (These are terrible and clunky terms, but I cannot come up with better ones on the spot.) I think also that my own note about “well-meaning [organizations that] have such bad decision quality that they are actively counterproductive to their aims” clashes with the “value-alignment” framework. I think that there is a good chance that it does not work very well for organizations whose main stated aim is to do good (of some form). I’ll definitely think more about this and try to come back to it.

“The professional world is incredibly siloed, and it’s not hard at all for me to imagine that ostensibly publicly available resources and tools that anyone could use would, in practice, be distributed through networks that ensure disproportionate adoption by well-intentioned individuals and groups. I believe that something like this is happening with Metaculus, for example.”

This is a really good point (and something I did not realize, probably in part due to a lack of background). Would you mind if I added an excerpt from this or a summary to the post?

On your note about “generic-strategy”: Apologies for that, and thank you for pointing it out! I’ll make some edits.

Note: I now realize that I have basically inverted normal comment-response formatting in this response, but I’m too tired to fix it right now. I hope that’s alright!

Once again, thank you for this really detailed comment and all the feedback—I really appreciate it!

It does, and I admittedly wrote that part of the comment before fully understanding your argument about classifying the development of general-use decision-making tools as being value-neutral. I agree that there has been a nontrivial focus on developing the science of forecasting and other approaches to probability management within EA circles, for example, and that those would qualify as value-neutral using your definition, so my earlier statement that value-neutral is “not really a thing” in EA was unfair.

Yeah, I also thought of suggesting this, but think it’s problematic as well. As you say, power/scope is correlated with decision quality, although more on a long-term time horizon than in the short term and more for some kinds of organizations (corporations, media, certain kinds of nonprofits) than others (foundations, local/regional governments). I think it would be more parsimonious to just replace decision quality with institutional capabilities on the graphs and to frame DQ in the text as a mechanism for increasing the latter, IMHO. (Edited to add: another complication is that the line between institutional capabilities that come from DQ and capabilities that come from value shift is often blurry. For example, a nonprofit could decide to change its mission in such a way that the scope of its impact potential becomes much larger, e.g., by shifting to a wider geographic focus. This would represent a value improvement by EA standards, but it also means that it might open itself up to greater possibilities for scale from being able to access new funders, etc.)

No problem, go ahead!

I’ve been skeptical of much of the IIDM work I’ve seen to date. By contrast, from a quick skim, this piece seemed pretty good to me because it has more detailed models of how IIDM may or may not be useful, and is opinionated in a few non-obvious but correct-seeming ways. I liked this a lot – thanks for publishing!

Like, if anyone feels like handing out prizes for good content, I’d recommend that this piece of work should receive a $10k prize (though perhaps I’d want to read it in full before fully recommending).

Hi Lizka, WOW – Thank you for writing this. Great to see Rethink Priorities working on this. Absolutely loving the diagrams here.

I have worked in this space for a number of years mostly here, have been advocating for this cause within EA since 2016 and advised both Jess/80K and the effective institutions project on their writeups. Thought I would give some quick feedback. Let me know if it is useful.

I thought your disentanglement did a decent job. Here are a few thoughts I had on it.

I really like how you split IIDM into “A technical approach to IIDM” and “A value-aligning approach to IIDM.”

However I found the details of how you split it to be very confusing. It left me quite unsure what goes into what bucket. For example intuitively I would see increasing the “accuracy of governments” (i.e. aligning governments with the interests of the voters) as “value-aligning” yet you classify it as “technical”.

That said despite this, I very much agreed with the conclusion that “value-oriented IIDM makes more sense than value-neutral IIDM” and the points you made to that effect.

I didn’t quite understand what “(1) IIDM can improve our intellectual and political environment” was really getting at. My best guess is that by (1) you mean work that only indirectly leads to “(3) improved outcomes”. So value-oriented (1) would look like general value spreading. Is that correct?

I agree with “for the sake of clarity … we should generally distinguish between ‘meta EA’ work and IIDM work”. That said, I think it is worth bearing in mind that on occasion the approaches might not be that different. For example, I have been advising the UK government on how to assess high-impact risks, which is relevant for EAs too.*

One institution can have many parts. Might be a thing to highlight if you do more disentanglement. E.g. Is a new office for future generations within a government, a new institution or improving an existing institution?

One other thought I had whilst reading.

I think it is important not to assign value to IIDM based on what is “predictable”.

For example, you say “it would be extremely hard to produce candidate IIDM interventions that would have sufficiently predictable outcomes via this pathway, as the outcomes would depend on many very uncertain factors.” Predictions do matter, but one of the key cases for IIDM is that it offers a solution to the unpredictable, the unknown unknowns, to the uncertainty of the EA (and especially longtermist) endeavour. All the advice on dealing with high uncertainty and things that are hard to predict suggests that interventions like IIDM are the kinds of interventions that should work, as set out by Ian David Moss here (from this piece).

Finally, at points you seemed uncertain about the tractability of this work. I wanted to add that so far I have found it much, much easier than I expected. E.g., you say “it is possible that shifting the aims of institutions is generally very difficult or that the potential benefits from institutions is overwhelmingly bottlenecked by decision-making ability, rather than by the value-alignment of institutions’ existing aims”. (I am perhaps still confused about what you would count as shifting aims vs. decision-making ability, see my point 1 above, but) my rough take on this is that I have found shifting the aims of government to be fairly easy and that there are not too many decision-making bottlenecks.

So super excited to see more EA work in this space.

* Oddly enough, despite being in EA for years, I think I have found it easier to influence the UK government to get better at risk identification work than the EA community. Not sure what to do about that. Just wanted to say that I would love to input if RP is working in this space.

I’m curious why you think aligning governments with the interests of voters is value-aligning rather than technical. I can see that being the case for autocratic regimes, but isn’t that the whole point of representative democracy?

(not the author)

4. When I hear “(1) IIDM can improve our intellectual and political environment”, I’m imagining something like if the concept of steelmanning becomes common in public discourse, we might expect that to indirectly lead to better decisions by key institutions.

Nice post : )

I mostly agree with your points, though am a bit more optimistic than it seems like you are about untargeted, value-neutral IIDM having a positive impact.

Your skepticism about this seems to be expressed here:

I think this is true, but it still seems like the aims of institutions are pro-social as a general matter. X-risk and animal suffering in your examples are side effects that aren’t means to the ends of the institutions, which are ‘increase biosecurity’ and ‘make money’, and if improving decision-making helps orgs get at their ends more efficiently, then we should think they will have fewer bad side effects if they have better decision-making. Also, orgs’ aims (e.g. “make money”) will generally presuppose the firm’s, and therefore humanity’s, survival, so it still seems good to me as a general matter for orgs to be able to pursue their aims more effectively.

Really nice and useful exploration, and I really liked your drawings.

FWIW, I intuitively would’ve drawn the institution blob in your sketch higher, i.e. I’d have put fewer than (eyeballing) 30% of institutions in the negatively aligned space (maybe 10%?). In moments like this, including a quick poll in the forum post to get a picture of what others think would be really useful.

Other spontaneous ideas, besides choosing more representative candidates:

increased coherence of the institution could lead to an overall stronger link between its mandate and its actions

increased transparency and coherence could reduce corruption and rent-seeking

Given what I said beforehand, I’d be interested in learning more about examples of harmful institutions that have generally high capacity.

Thank you for this comment!

I won’t redraw/re-upload this sketch, but I think you are probably right.

That’s a really good idea, thank you! I’ll play around with that.

Thank you for the suggestions! I think you raise good points, and I’ll try to come back to this.

An update: after a bit of digging, I discovered this post, “Should marginal longtermist donations support fundamental or intervention research?”, which contains a discussion on a topic that is quite close to “should EA value foundational (science/decision theory) research,” (in the pathway (1) section of my post). The conclusions of the post I found do not fit into my vague impressions of “the consensus.” In particular, the conclusion of that post is that longtermist research hours should often be spent on fundamental research (which is defined by its goals).

(Disclaimer: the author, Michael, is employed at Rethink Priorities, where I am interning. I don’t know if he still endorses this post or its conclusions, but the post seems relevant here and very valuable as a reference.)

fwiw, I think I still broadly endorse the post and its conclusions.

(I only skimmed your post, so I can’t comment on how my views and my post align or conflict with the views in your post.)

Thanks! Some brief ‘deep’ counterpoints. I don’t see how “decision-making quality” and “values” (or what is implied by “value alignment”) can be orthogonal to each other, as the above graphs require. To my mind, bad values would promote bad decisions. (And to my mind, bad values would have some relation to, and thus alignment with, ‘our’ values: hopefully a negative one, but in any case most likely not a priori unrelated.) Relatedly, I also don’t really believe in the existence of “value-neutrality”, and moreover I think it is a dangerous, or more mildly counter-productive, concept to deploy (with effects that are misleadingly regarded as ‘neutral’ and perhaps attended to less), e.g. the economy might be, or often is, regarded as neutral, yet it very significantly disregards the interests of future generations and non-human animals.

I suppose the post’s content fits moral relativists’ and anti-realists’ worldviews, but fits moral realists’ with more difficulty, or else it just reflects the way people often ‘happen to talk’.

Follow-up: Perhaps to put altruism and effectiveness—properly understood—on the y-axis and x-axis respectively would be better, i.e. communicate what we would want to communicate and not suffer from the above-mentioned shortcomings?

Thanks for the comment! I feel funny saying this without being the author, but feel like the rest of my comment is a bit cold in tone, so thought it’s appropriate to add this :)

I lean more moral anti-realist, but I struggle to see how the concepts of “value alignment” and “decision-making quality” are not just as orthogonal from a moral realist view as from an anti-realist view.

Moral realist frame: “The more the institution is intending to do things according to the ‘true moral view’, the more it’s value-aligned.”

“The better the institution’s decision-making process is at predictably leading to what they value, the better their ‘decision-making quality’ is.”

I don’t see why these couldn’t be orthogonal in at least some cases. For example, a terrorist organization could be outstandingly good at producing outstandingly bad outcomes.

Still, it’s true that the “value-aligned” term might not be the best, since some people seem to interpret it as a dog-whistle for “not following EA dogma enough” [link] (I don’t, although I might be mistaken). “Altruism” and “Effectiveness” as the y- and x-axis labels would suffer from the problem mentioned in the post: they could alienate people coming to work on IIDM from outside the EA community. For the y-axis, ideally I’d like some terms that make it easy to differentiate between beliefs common in EA that are uncontroversial (“let’s value people’s lives the same regardless of where they live”), and beliefs that are more controversial (“x-risk is the key moral priority of our times”).

About the problematicness of “value-neutral”: I thought the post gave enough space for the belief that institutions might be worse than neutral on average, marking statements implying the opposite as uncertain. For example, crux (a) exists in this image to point out that if you disagree with it, you would come to a different conclusion about the effectiveness of (A).

(I’m testing out writing more comments on the EA forum, feel free to say if it was helpful or not! I want to learn to spend less time on these. This took about 30 minutes.)

Thanks for the post and for taking the time! My initial thoughts on trying to parse this are below; I think they will further mutual understanding.

You seem to make a distinction between intentions on the y-axis and outcomes on the x-axis. Interesting!

The terrorist example seems to imply that if you want bad outcomes you are not value-aligned (aligned to what? to good outcomes?). They are value-aligned from their own perspective. And “terrorist” is also not a value-neutral term. For example, Nelson Mandela was once considered one, which I think would surprise most people now.

If we allow “from their own perspective”, then “effectiveness” would do (with “efficiency” replacing the x-axis label), but it seems we don’t, and then “altruism” (or perhaps “good”, with less of an explicit tie to EA?) would do, without the ambiguity that “value-aligned” brings on whether or not we do [allow “from their own perspective”].

(For someone who is not a moral realist, the option of “better value” is not available, so it seems one would be stuck with “from their own perspective” and calling the effective terrorist value-aligned, or moving to an explicit comparison to EA values, which I was supposing was not the purpose, and which seems to be even more off-putting via the mentioned alienating shortcoming in communication.)

Besides “value-aligned” being suboptimal, which I also just supported further, you seem to agree that altruism and effectiveness (I would now suggest “efficiency” instead) are appropriate labels, but you agree with the author about their shortcoming for communicating to certain audiences (alienation), with which I also agree. For other audiences, including myself, the current form perhaps has shortcomings too. I would value clarity more, and call the same thing by the same name. An intentionally obscuring change of words might additionally come across as deceptive, and as aligned with one’s own ideas of good but not with such ideas in a broader context. And that, I think, could definitely also count as, or become, a consequential shortcoming in communication strategy.

And regarding the non-orthogonality, I was, as a moral realist, thinking more along the lines of: being organized (etc., etc.) is presumably a good value, and it would also improve your decision-making (sort of considered neutrally)...