What can superintelligent ANI tell us about superintelligent AGI?

To what extent can humans forecast the impacts of superintelligent AGI?

From one point of view, trying to understand superintelligence seems utterly intractable. Just as a dog or chimpanzee has little hope of comprehending the motivations and powers of humans, why should humans have any hope of comprehending the motivations and powers of superintelligence?

But from another point of view, forecasting the impacts of superintelligence may yet be possible. The laws of reality that constrain us will similarly constrain any superintelligence. Even if a superintelligence achieves a more refined understanding of physics than us humans, it very likely won’t overturn laws already known. Thus, any inventions optimized against those physical laws, even if superior to our own, may end up looking familiar rather than alien.

No matter how intelligent an AGI is, it will still be bound by physics. No matter how smart you are, you still must obey the law of conservation of energy. Just like us, an AGI wishing to affect the world will require an energy industry full of equipment to extract energy from natural sources. Just like us, its energy will have to come from somewhere, whether it’s the sun (solar, wind, biofuels, fossil fuels, hydro), the Earth (geothermal, nuclear), or the Moon (tidal, nuclear). Just like us, any heat engines will be limited by Carnot efficiency. Just like us, energy will need to be transported from where it is collected to where it is consumed, likely by electromagnetic fields in the presence of bound electrons (e.g., chemical fuels) or unbound electrons (e.g., electricity) or neither (e.g., lasers). If there are economies of scale, as there likely will be, that transportation will take place across networks with fractal network topologies, similar to our electric grids, roads, and pipelines. The physics of energy production are so constrained and so well understood that no matter what a superintelligence might build (even fusion electricity, or superconducting power lines, or wireless power), I suspect it will be something that humans had at least considered, even if our attempts were not as successful.
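To make the Carnot point concrete, here is a minimal sketch of the bound, η = 1 − T_cold / T_hot, which applies to any heat engine regardless of who designs it. The function name and the example temperatures are illustrative assumptions, not figures from this post.

```python
def carnot_efficiency(t_hot_kelvin: float, t_cold_kelvin: float) -> float:
    """Upper bound on the fraction of heat that any heat engine can convert to work."""
    if t_cold_kelvin <= 0 or t_hot_kelvin <= t_cold_kelvin:
        raise ValueError("need t_hot_kelvin > t_cold_kelvin > 0")
    return 1.0 - t_cold_kelvin / t_hot_kelvin


# Assumed, illustrative temperatures: an 800 K heat source rejecting heat at 300 K.
print(carnot_efficiency(800.0, 300.0))  # 0.625
```

A smarter designer can get closer to this ceiling, but cannot raise it without changing the temperatures themselves.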

One way to preview superintelligent AGI is to consider the superintelligent narrow AIs humanity has attempted to develop, such as chess AI.

Lessons from chess AI: superintelligence is not omnipotence

In 2017, DeepMind revealed AlphaZero. In less than 24 hours of (highly parallelized) training, it was able to crush Stockfish, the reigning AI world chess champion. AlphaZero was trained entirely de novo, with no learning from human games and no human tuning of chess-specific parameters.

AlphaZero is superhuman at chess. AlphaZero is so good at chess that it could defeat all of us combined with ease. Though the experiment has never been done, were we to assemble all the world’s chess grandmasters and give them the collective task of coming up with a single move a day to play against AlphaZero, I’d bet my life savings that AlphaZero would win 100 games before the humans won 1.

From this point of view, AlphaZero is godlike.

Its margin of strength over us is so great that even if the entire world teamed up, it could defeat all of us combined with ease.

It plays moves so subtle and counterintuitive that they are beyond the comprehension of the world’s smartest humans (or at least beyond the tautological comprehension of ‘I guess it wins because the computer says it wins’).

...but on the other hand, pay attention to all the things that didn’t happen:

AlphaZero’s play mostly aligned with human theory—it didn’t discover any secret winning shortcuts or counterintuitive openings.

AlphaZero rediscovered openings commonly played by humans for hundreds of years:

When DeepMind looked inside AlphaZero’s neural network they found “many human concepts” in which it appeared the neural network computed quantities akin to what humans typically compute, such as material imbalance (alongside many more incomprehensible quantities, to be fair).

AlphaZero was comprehensible—for the most part, AlphaZero’s moves are comprehensible to experts. Its incomprehensible moves are rare, and even then, many of them become comprehensible after the expert plays out a few variations. (By comprehensible, I don’t mean in the sense that an expert can say why move A was preferred to move B, but in the sense that an expert can articulate pros and cons that explain why move A is a top candidate.)

AlphaZero was not invincible—in 1200 games against Stockfish, it lost 5⁄600 with white and 19⁄600 with black.

AlphaZero was only superhuman in symmetric scenarios—although AlphaZero can reliably crush grandmasters in a fair fight, what about unfair fights? AlphaZero’s chess strength is estimated to be ~3500 Elo. A pawn is worth ~200 Elo points as estimated by Larry Kaufman, which implies that a chess grandmaster rated ~2500 Elo should reliably crush AlphaZero if AlphaZero starts without its queen (as that should reduce AlphaZero’s effective strength to ~1500 Elo). Even superintelligence beyond all human ability is not enough to overcome asymmetric disadvantage, such as a missing queen. Superintelligence is not omnipotence.
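The handicap arithmetic above can be sketched with the standard Elo expected-score formula. This is a rough illustration, not a measurement: the ratings and the ~200 Elo per pawn figure come from the paragraph above, while valuing the queen at 10 pawns is my assumption (it is what the ~1500 figure implies), and treating Elo loss as linear in material is a simplification.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score (win = 1, draw = 0.5) for player A against player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


ALPHAZERO_ELO = 3500      # estimate quoted in the post
GRANDMASTER_ELO = 2500    # typical grandmaster rating used in the post
ELO_PER_PAWN = 200        # Kaufman's estimate, as quoted in the post
QUEEN_IN_PAWNS = 10       # assumption: implied by the post's ~1500 figure

handicapped_elo = ALPHAZERO_ELO - ELO_PER_PAWN * QUEEN_IN_PAWNS
print(handicapped_elo)  # 1500

# Grandmaster's expected score per game, with and without the queen handicap.
print(expected_score(GRANDMASTER_ELO, ALPHAZERO_ELO))
print(expected_score(GRANDMASTER_ELO, handicapped_elo))
```

Under these assumptions the grandmaster's expected score moves from well under 1% per game in a fair fight to roughly 99.7% per game at queen odds.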

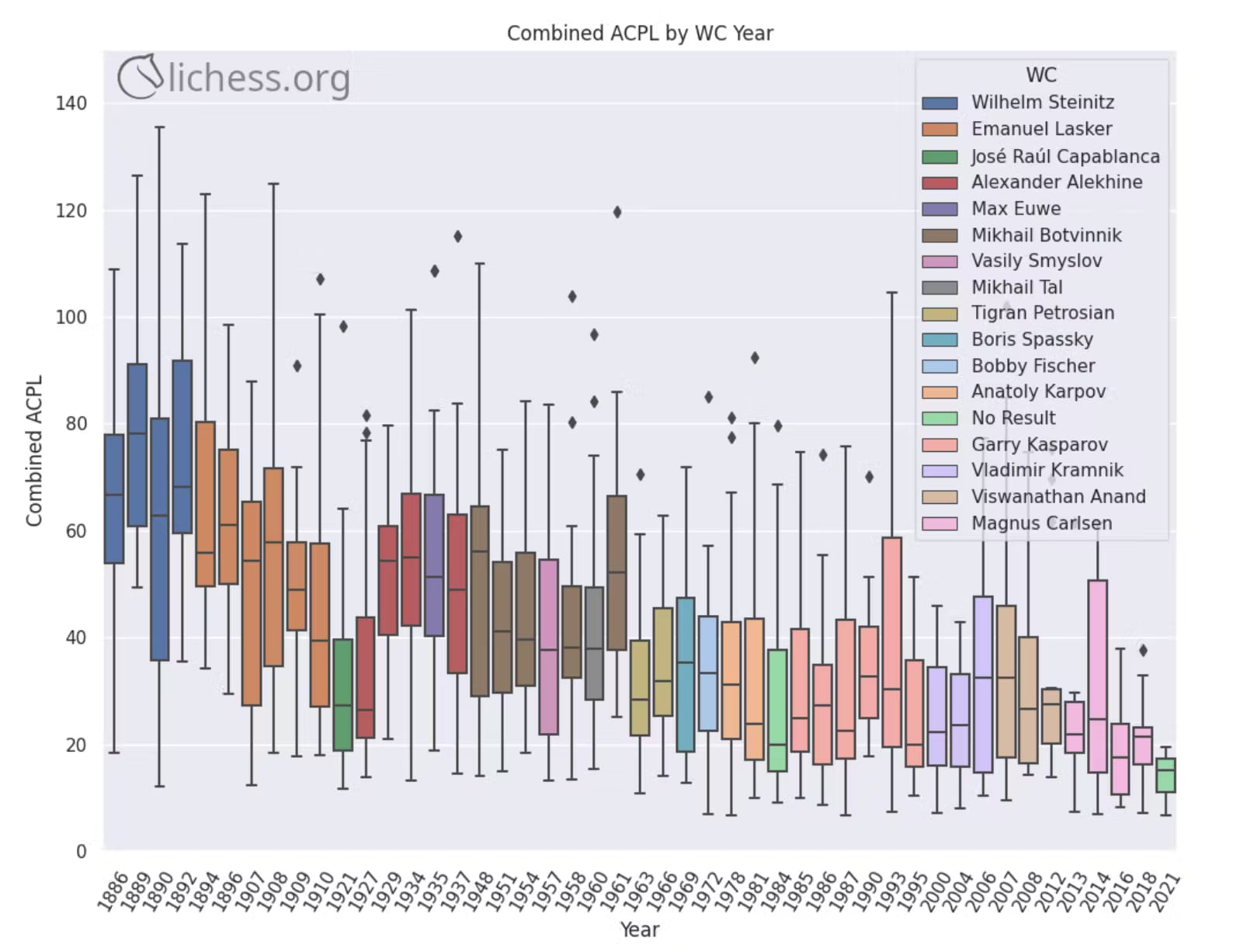

AlphaZero hasn’t revolutionized human chess—although players have credited AlphaZero with new inspiration, for the most part human chess is played at a similar strength and style. AlphaZero didn’t teach us any secret shortcuts to winning, or any special attacks that cannot be defended, or any special defenses that cannot be pierced. Arguably the biggest learnings were to push the h pawn a little more frequently and to be a little less afraid of sacrificing pawns to restrict an opponent’s mobility. Even chess computers at large haven’t dramatically transformed chess, although they are now indispensable study tools, especially for opening preparation. Historical rates of human chess progress as measured by average centipawn loss (ACPL) in world championship (WC) games show no transformative step function occurring in the 2000s or 2010s. Progress may have accelerated a little if you squint, but it wasn’t a radical departure from pre-AI trends.
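For readers unfamiliar with ACPL, here is a minimal sketch of how the metric is usually computed: for each move, take the engine's evaluation of the best available move minus its evaluation of the move actually played (in centipawns, floored at zero), then average over the game. The function name and the sample evaluations are hypothetical, and real analyses differ in details such as which engine is used and how extreme evaluations are capped.

```python
def average_centipawn_loss(best_evals_cp, played_evals_cp):
    """Mean per-move gap between the engine's best move and the move played.

    Both lists hold evaluations in centipawns from the moving player's
    point of view, one entry per move.
    """
    if not best_evals_cp or len(best_evals_cp) != len(played_evals_cp):
        raise ValueError("need two non-empty lists of equal length")
    # A played move cannot be better than the best move, so floor each loss at zero.
    losses = [max(0, best - played)
              for best, played in zip(best_evals_cp, played_evals_cp)]
    return sum(losses) / len(losses)


# Hypothetical four-move sample: two engine-perfect moves, two inaccuracies.
print(average_centipawn_loss([30, 45, 10, 60], [30, 20, 10, 0]))  # 21.25
```

Lower ACPL means play closer to the engine's first choice, which is why it serves as a rough proxy for playing strength across eras.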

Ultimately, superhuman AIs didn’t crush humans at chess by discovering counterintuitive opening secrets or shortcuts that dumb humans missed. In fact, AIs rediscovered many of the same openings that humans play. Rather, AIs do what humans do—control the center, capture pieces, safeguard their king, attack weaknesses, gain space, restrict their opponent’s options—but the AIs do this more effectively and more consistently.

In the 5th century BCE, Greek philosophers thought the Earth was a sphere. Although they were eventually improved upon by Newton, who modeled the Earth as an ellipsoid, they still had arrived at roughly the right concept. And in chess we see the same thing: although a superhuman AI ‘understands’ chess better than human experts, its understanding still mostly reflects the same general concepts.

Refocusing back on AGI, suppose we invent superhuman AGI for $1T in 2100 and we ask it for advice to optimize humanity’s paperclip production. Whatever advice it comes up with, I don’t think it will be unrecognizable magic. Paperclip production is a fairly well understood problem: you bring in some metal ore, you rearrange its atoms into paperclips, and you send them out. A superintelligent AGI asked to optimize paperclip production will probably reinvent much of the same advice we’ve already derived: invest in good equipment, take advantage of economies of scale, select cheap yet effective metals, put factories near sources of energy and materials, ship out paperclips in a hierarchical network with caches along the way to buffer unanticipated demand, etc. If it gives better advice than a management consultant, I expect it to do so not by incomprehensible omnipotent magic that inverts the laws of physics, but by doing what we’re doing already—just smarter, faster, and more efficient.

More practically, if we invent AGI and ask it to invent fusion power plants, here’s how I think it will go: I don’t expect it to think for a moment and then produce a perfect design, as if by magic. Rather, I expect it to focus on known plasma confinement approaches, run some imperfect simulations, and then strategize over what experiments will allow it to acquire the knowledge needed to iteratively improve its designs. Relative to humans, its simulations may be better and its experimentation may be more efficient, but I expect its intelligence will operate within the same constraints that we do.

Intelligence is not omnipotence.

(This post is a lightly edited excerpt from “Transformative AGI by 2043 is <1% likely”.)

The situation in go looks different:

New top-of-the-line go bots play different openings than we had in 2015, including ideas we thought were bad (e.g. early 3-3 invasions).

Humans have now adopted go bot opening sequences.

That said:

Go bots are not literally omnipotent; there is a handicap level at which humans can beat them.

We can gain insight into go bot play by looking at readouts and thinking for ages.

It is possible for humans to beat top go bots in a fair fight…

… but the way that happened was by training an adversary go bot to specifically do that, and copying that adversary’s play style.

Note that if you take observations of tic-tac-toe superintelligent ANI (plays the way we know it would play, we can tie with it if we play first), then of AlphaZero chess, then of top go bots, and extrapolate out along the dimension of how rich the strategy space of the domain is (as per Eliezer’s comment), I think you get a different overall takeaway than the one in this post.

Presumably if AlphaZero did not become superhuman by “hacking” it is because the hacks aren’t there. But in the real-world case, the hacks are there: life itself is built out of nanotech, the quintessential supposedly physics-violating technology. Knowing that, why would a paperclip maximizer forgo its use, and stick to ordinary factories?

Very interesting post, and one I’m sympathetic to, thanks!

A possible counter-argument is that the limited power of superintelligence in this domain is due to the space of strategies in chess being too constrained for discovering radically new ideas. It is sometimes said that the space of possible strategies in Go is much larger than in chess, so I wonder if AlphaGo Zero presents a useful complementary case study. If, compared to chess, AlphaGo Zero discovers more strategies and ideas that humans have missed, or which are very hard to understand, that would suggest that as the state space grows more open and complex, superintelligence will become more powerful.

Nice post! I think the inherent limitations of AI are extremely important to evaluating AI risks and highly under-discussed. (Coincidentally, I’m also writing a post looking at the performance of chess engines in this context which should be done soon.)

When it comes to chess, I think it’s worth noting that AlphaZero is not the best chess-playing AI; Stockfish is. If you look at chess engine tournaments, Stockfish and LeelaZero (the open source implementation of AlphaZero’s design) were pretty evenly matched in the years up to 2020, even though Stockfish was not using neural networks and relied more on hard-coded intuition and trial-and-error. In 2020, Stockfish did incorporate neural networks, but in a hybrid manner, switching between NN and “classical” mode depending on the position. Since then it has dominated both classical engines and purely NN engines, which tells us that human expert knowledge still counts for something here.

Nah. The Stockfish improvement comes from NNUE evaluation, which, roughly speaking, replaces the evaluation function mostly hand-crafted by experts with one produced by a NN. So it actually says the opposite of what you claimed.

Stockfish+NNUE is better than LeelaZero because the search part of LeelaZero is not subject to reinforcement learning. That part is where Stockfish (admittedly hand-coded by experts) is better.

This is a good post.

There are counterarguments about how the real world is a much richer and more complex environment than chess (e.g. consider that a superintelligence can’t beat knowledgeable humans in tic-tac-toe but that doesn’t say anything interesting).

However, I don’t really feel compelled to elaborate on those counterarguments because I don’t genuinely believe them and don’t want to advocate a contrary position for the sake of it.

Cf. Superintelligence Is Not Omniscience and AI will change the world, but won’t take it over by playing “3-dimensional chess”.

Nice post, Ted!

For reference, ANI stands for artificial narrow intelligence.

Q1. Is it possible to create mathematical models of reality? If so, AGI will solve the nuclear fusion problem, or perhaps any problem, like magic. It would run some simulations and build the solution directly, possibly with a really small number of trials and errors, since I’d imagine that atoms are not perfect and there must be some level of chaos in reality that cannot be fully replicated in a mathematical model.

Q2. How much energy would it require to run such a simulation? The main constraint on AGI will be energy consumption, no? According to Wolfram, the universe is the result of a calculation without shortcuts, and if that’s the case, AGI has to run the calculation, either in reality or in simulation.

With that in mind, AGI being godlike seems a stretch. To solve a problem, AGI will need computation and energy, which cost money, but to gain money it could exploit the stock market with some manipulation, either direct or indirect. A potential scenario could look like the recent pandemic, in which AGI shorts the market and then causes the pathogen leak.

Seems disingenuous to write a post this long, and not mention the obvious notion that more complicated and poorly known domains (than chess) will place weaker minds at a greater disadvantage to more powerful ones (compared to chess). This is why a superintelligence could not beat a short Python program at logical Tic-Tac-Toe, and 21st-century Russia could easily roll over 11th-century Earth with tanks and if need be nuclear weapons (even though both of those populations are the same species). Chess occupies a middle ground between those two points on the spectrum, but I’d say lies most of the way toward Tic-Tac-Toe.
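For concreteness, the tic-tac-toe end of that spectrum can be checked with a short program. The following is a minimal sketch, with names of my own choosing, of exhaustive minimax search over the full game tree; it confirms that perfect play from the empty board is a draw, so no amount of additional intelligence can force a win against it.

```python
from functools import lru_cache

# Index triples for the three rows, three columns, and two diagonals.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that side has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


@lru_cache(maxsize=None)
def game_value(board: str, player: str) -> int:
    """Value under perfect play, from X's view: +1 X wins, 0 draw, -1 O wins."""
    won = winner(board)
    if won == "X":
        return 1
    if won == "O":
        return -1
    if " " not in board:
        return 0  # board full with no winner: draw
    other = "O" if player == "X" else "X"
    values = [game_value(board[:i] + player + board[i + 1:], other)
              for i, cell in enumerate(board) if cell == " "]
    # X picks the move that maximizes the value; O picks the one that minimizes it.
    return max(values) if player == "X" else min(values)


print(game_value(" " * 9, "X"))  # 0: perfect play from the empty board is a draw
```

The same exhaustive approach is hopeless for chess or go, where the game tree is far too large to enumerate, which is what leaves room for stronger and weaker players there.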

I claim the word “disingenuous” is in fact breaking Forum norms, but not egregiously. It is unnecessarily unkind. I also do not think it rises to the level of formal warning. However, I deeply regret that Ted felt like taking a step back from the Forum on the basis of this comment. I think this shows the impact of unkind comments.

I’m not a moderator, but I used to run the Forum, and I sometimes advise the moderation team.

While “disingenuous” could imply you think your interlocutor is deliberately lying about something, Eliezer seems to mean “I think you’ve left out an obvious counterargument”.

That claim feels different to me, and I don’t think it breaks Forum norms (though I understand why JP disagrees, and it’s not an obvious call):

I don’t want people to deliberately lie on the Forum. However, I don’t think we should expect them to always list even the most obvious counterarguments to their points. We have comments for a reason!

I’m more bothered by criticism that accuses an author of norm-breaking (“seems dishonest”) than criticism that merely accuses them of not putting forward maximal effort (“seems to leave out X”).

To get deeper into this: I read “seems dishonest” as an attack — it implies that the author did something seriously wrong and should be downvoted or warned by mods. I read “seems to leave out X” as an invitation to an argument.

The ambiguity of “disingenuous” means I’d prefer to see people get more specific. But while I wish Eliezer hadn’t used the word, I also think he successfully specified what he meant by it, and the overall comment didn’t feel like an attack (to me, a bystander; obviously, an author might feel differently).

*****

I don’t blame anyone who wants to take a break from Forum writing for any reason, including feeling discouraged by negative comments. Especially when it’s easy to read “seems disingenuous” as “you are lying”.

But I think the Forum will continue to have comments like Eliezer’s going forward. And I hope that, in addition to pushing for kinder critiques, we can maintain a general understanding on the Forum that a non-kind critique isn’t necessarily a personal attack.

(Ted, if you’re reading this: I think that Eliezer’s argument is reasonable, but I also think that yours was a solid post, and I’m glad we have it!)

*****

The Forum has a hard balance to strike:

I think the average comment is just a bit less argumentative / critical than would be ideal.

I think the average critical comment is less kind than would be ideal.

I want criticism to be kind, but I also want it to exist, and pushing people to be kinder might also reduce the overall quantity of criticism. I’m not sure what the best realistic outcome is.

I think it’s important that Eliezer used the words “and not mention the obvious notion that” (emphasis added).

The use of the word “obvious” suggests that Eliezer thinks that Ted is either lying by not mentioning an obvious point, or he’s so stupid that he shouldn’t be contributing to the forum.

If Eliezer had simply dropped the word “obvious”, then I would agree with Aaron’s assessment.

However as is, I agree with JP’s assessment.

(Not that I’m a moderator, nor am I suggesting that my opinion should receive some special weight, just adding another perspective.)

My opinion placed zero weight on the argument that Eliezer is a high profile user of the forum, and harsh words from him may cut deeper, therefore he (arguably) has a stronger onus to be kind.

Pulling the top definitions off a Google search, disingenuous means:

not candid or sincere, typically by pretending that one knows less about something than one really does (Oxford Languages)

lacking in candor; also : giving a false appearance of simple frankness : calculating (Merriam-Webster)

not totally honest or sincere. It’s disingenuous when people pretend to know less about something . . . (Vocabulary)

lacking in frankness, candor, or sincerity; falsely or hypocritically ingenuous; insincere (Dictionary.com)

I don’t see a whole lot of daylight between calling an argument disingenuous and calling it less-than-honest, lacking in candor, or lacking in sincerity. I also don’t see much difference between calling an argument less-than-honest and calling its proponent less-than-honest. Being deficient in honesty, candor, or sincerity requires intent, and thus an agent.

To me, there’s not a lot of ambiguity in the word’s definition. But I hear the argument that the context in which Eliezer used it created more ambiguity. In that case, he should withdraw it, substitute a word that is not defined as implicating honesty/candor/sincerity, and apologize to Ted for the poor word choice. If he declines to do any of that after the community (including two moderators supported by a number of downvoters and agreevoters) has explained that his statement was problematic, then I think we should read disingenuous as the dictionaries define it. And that would warrant a warning.

Stepping back, I think it would significantly damage Forum culture to openly tolerate people calling other people’s arguments “disingenuous” and the like without presenting clear evidence of the proponent’s dishonesty. It’s just too easy to deploy that kind of language as a personal attack with plausible deniability (I am not suggesting that was Eliezer’s intent here). One can hardly fault Ted for reading the word as the dictionaries do. I read it the same way.

Although there will always be difficulties with line-drawing and subjectivity, I think using consistent dictionary definitions as the starting point mitigates those concerns. And where there is enough ambiguity, allowing the person to substitute a more appropriate term and disavow the norm-breaking interpretation of their poor word choice should mitigate the risk of overwarning.

Sorry for seeming disingenuous. :(

(I think I will stop posting here for a while.)

I see some disagree votes on Ted’s comment. My guess at what they mean:

“Ted, please don’t be put off, Eliezer is being unnecessarily unkind. Your post was a useful contribution”.

Why would this notion be obvious? It seems just as likely, if not more so, that in a more complicated domain your ability to achieve your goals hits diminishing returns w.r.t. intelligence more quickly than in simple environments. If that is the case, the ‘disadvantage of weaker minds’ will be smaller, not larger.

(I don’t find the presented analogy very convincing, especially in the ‘complicated domain’ example of modern Russia overpowering medieval Earth, since it is only obvious if we imagine the entire economy/infrastructure/resources of Russia. Consider instead the thought experiment of, say, 200 people armed with 21st-century knowledge but nothing else, and it seems not so obvious they’ll easily be able to ‘roll over 11th-century Earth’.)

Maybe people found the wording off-putting, but I think the point is correct. AIs haven’t really started to get creative yet, which shouldn’t be underestimated. Creativity is expanding the matrix of possibilities. In chess, that matrix remains constrained. Sure, there are physical constraints, but an ASI can run circles around us before it has to resort to reversing entropy.

Edited:

Speaking in my own opinion, I think this comment breaks Forum norms by being unnecessarily rude. I’ll raise it to the moderator team and discuss it.