The Effective Altruist Case for Using Genetic Enhancement to End Poverty

Original post: https://parrhesia.substack.com/p/the-effective-altruist-case-for-using

I. Wealth, Poverty, and Effective Altruism

The disparity in living conditions between the world’s richest and poorest countries is staggering. Citizens of highly developed nations enjoy luxuries and conveniences entirely out of reach for the billions who must survive on just a few dollars per day. Beyond the harshness of extreme poverty, the inhabitants of the least developed nations often must endure challenges such as malnutrition, inadequate healthcare, rampant corruption, and authoritarian rule. These conditions starkly contrast with the peaceful environment and high quality of life common in affluent Western liberal democracies.

With international income disparities reaching beyond fifty-fold, addressing this inequality is one of the most critical issues in social science and global policy. Considerable endeavors have been made to improve the conditions of the worst-off nations, but these gaps persist. After the Second World War and European decolonization in the mid-20th century, concerted efforts were made to foster political stability and stimulate economic growth in newly independent nations. Particularly important were international organizations such as the United Nations (UN), the International Monetary Fund (IMF), and the World Bank, which collectively provide financial support and policy guidance to combat global poverty.

Beyond supranational organizations, non-governmental organizations (NGOs) have also made important contributions to improve the welfare of those in developing nations. Through private and public funding contributions, humanitarian organizations like Oxfam, CARE, and the Red Cross aid the poor by providing essential services such as clean water, medical care, and emergency relief. According to charity evaluator GiveWell, the most cost-effective charitable organizations are the Malaria Consortium and Against Malaria Foundation, for preventing malaria through medicine and bed nets, and Helen Keller International, which aids in preventing vitamin A deficiency.

Organizations like GiveWell are part of an important burgeoning social and philosophical movement called Effective Altruism (EA), which aspires to “do good better.” A notable forerunner to the movement was utilitarian philosopher Peter Singer, who laid out the basic case for the importance of charitable giving to the poor in his 1971 article “Famine, Affluence, and Morality.” Singer begins with the basic premise that one has a moral obligation to save a drowning child and concludes that there is no major moral distinction between saving a nearby drowning child and saving a child from malnutrition in a faraway country. He argues that people in Western nations need to give far more of their disposable income to save lives in the developing world.

Effective Altruists continue to employ the same basic arguments to emphasize the importance of charitable giving. According to GiveWell, for every $5,000 given to the Malaria Consortium, one life is saved in expectation. Against such a benchmark, the immense amount of money spent on luxuries in wealthy nations looks incredibly frivolous. Adopting this mentality makes it apparent that much other charitable and government spending is extremely wasteful. Consider this illustrative passage from Singer in a 2013 Washington Post opinion editorial:

According to Make-A-Wish, the average cost of realizing the wish of a child with a life-threatening illness is $7,500. That sum, if donated to the Against Malaria Foundation and used to provide bed nets to families in malaria-prone regions, could save the lives of at least two or three children (and that’s a conservative estimate). If donated to the Fistula Foundation, it could pay for surgeries for approximately 17 young mothers who, without that assistance, will be unable to prevent their bodily wastes from leaking through their vaginas and hence are likely to be outcasts for the rest of their lives. If donated to the Seva Foundation to treat trachoma and other common causes of blindness in developing countries, it could protect 100 children from losing their sight as they grow older. (Singer, 2013)

While many do not consider these comparisons compelling and find this way of thinking distasteful, this approach is characteristic of the EA mindset. Even among Effective Altruists, some are more willing than others to consider unusual priorities, such as shrimp welfare or even the welfare of skin mites. Similarly, a growing strain of EA is the “longtermist” faction, which considers the welfare of yet-to-exist people important. Some even go as far as to consider future people an overwhelming moral priority due to the sheer number of people who could potentially exist (see Bostrom, 2003; Greaves & MacAskill, 2019). Those who accept the longtermist framework are often sympathetic to the idea that reducing existential risk from threats such as misaligned artificial intelligence, nuclear war, and pandemics is a moral priority (see Bostrom, 2002).

An intermediate position could be appealing to longtermists unsympathetic to science-fiction-like scenarios but still concerned about poverty and health in developing nations. Namely, we should fund efforts toward making systemic changes that eliminate the causes of wealth and wellness disparities. Striking at the root of the problem may be a better use of resources than ameliorating the symptoms. As an illustration, perhaps spending directly on anti-malaria bed nets would be less efficient than funding the creation of genetically engineered malaria-resistant mosquitoes to eliminate the disease permanently.

A structural approach to the fight against global poverty could be challenging. In many cases, obvious improvements would be implausible from a political standpoint. While persuading dictators to abandon their ideologies and embrace freedom would be an incredible achievement, it is not particularly feasible. In many cases, nations will resist outside influence, and the many competing interests in democratic elections would make influencing them challenging. Effective Altruists typically find that the most good can be done in areas that are not only important but also neglected and tractable.

Even if implementing policy solutions were feasible, identifying the systemic causes of prosperity and welfare is difficult. Good outcomes often tend to go together without a clear first cause. Cogent arguments for causation can often be made in either direction on many important issues. For example, while most believe education is the driver of economic prosperity, some believe it is largely wasteful and serves mostly as a signaling mechanism (see Caplan, 2018). While democracy is positively associated with economic productivity, there are cases of high economic growth under non-democratic governance, such as Singapore under Lee Kuan Yew and China under Deng Xiaoping.

In the case of national economic and social progress, monocausal explanations are surely oversimplifications. Many factors are relevant to economic productivity and well-being: geography, climate, culture, religion, education, government, disease prevalence, and so forth. Some stark examples make this apparent. North and South Korea share many similarities but have vastly different living standards due to North Korea’s totalitarian governance. While some prosperous nations are deprived of natural resources, the oil-rich nations of the Middle East make it very apparent that natural resources can be incredibly important.

Even though it is possible to identify contributions to prosperity, many are highly resistant to influence from charitable organizations. A charity cannot help a country change its location, natural resources, history, or climate. However, changing economic, social, and political institutions is possible but difficult. Many would agree that good institutions are largely the product of factors such as wise leadership, good economic incentives, moral citizens, brilliant entrepreneurs, and a productive workforce. However, such a claim raises the question of how one can attain such good factors, and if good institutions contribute to these good factors, what causes such virtuous circles to emerge?

II. Institutions and Intelligence

Designing and operating functional legal systems, economies, businesses, and universities is complicated. While there are obvious exceptions, many will notice that successful leaders and innovators in government, academia, and industry are often well-educated and smart. One plausible means of improving institutions would be improving the intelligence of those responsible for making important decisions and discovering innovative solutions to problems. In determining the extent to which improving intelligence may improve institutions, we would need to understand measures of intelligence and whether or not they have the desired benefits.

Intelligence, in the ordinary sense, refers to a person’s ability to reason, but the term carries different connotations in certain contexts and does not have a universally agreed-upon usage. Generally, people regarded as intelligent are especially gifted in academic subjects. Debates regarding what intelligence truly is and whether or not psychometric tests capture all the nuances of the ordinary use of the term intelligence are not particularly fruitful. No quantitative measurement can capture the full concept of intelligence because there is no objective, wholly accepted definition. Some researchers avoid the use of the term “intelligence” altogether in favor of terms such as “cognitive ability” or “mental ability” (see Jensen, 1998, pp. 48-49). Nevertheless, many intelligence researchers are sympathetic to the following definition provided by Linda Gottfredson:

Intelligence is a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. It is not merely book learning, a narrow academic skill, or test-taking smarts. Rather, it reflects a broader and deeper capability for comprehending our surroundings – “catching on,” “making sense” of things, or “figuring out” what to do. (Gottfredson, 1997)

Cognitive ability is measured by intelligence tests, which produce scores known as IQs. Originally, IQ stood for “intelligence quotient” and was calculated using the ratio of mental age to actual age. Children whose mental age exceeded their actual age would receive scores over 100. The term IQ is still used, but scores are now calculated using population norming, with 100 set to the population average and 15 as the standard deviation. These psychometric tests of cognitive ability, such as the Wechsler Adult Intelligence Scale, aim to provide a score that reflects a general ability rather than a collection of disparate skills.
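
The two scoring conventions described above can be sketched in a few lines of Python. This is a minimal illustration rather than any test publisher's actual norming procedure: the function names and the small norming sample are hypothetical, and the multiplication by 100 in the ratio formula is the conventional scaling, which the paragraph above does not spell out.

```python
from statistics import mean, pstdev
from typing import Sequence


def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Historical 'intelligence quotient': mental age over actual age, times 100."""
    return 100.0 * mental_age / chronological_age


def deviation_iq(raw_score: float, norm_sample: Sequence[float]) -> float:
    """Modern scoring: place a raw score on a scale with mean 100 and SD 15."""
    mu = mean(norm_sample)
    sigma = pstdev(norm_sample)  # population SD of the norming sample
    z = (raw_score - mu) / sigma  # distance from the average in SD units
    return 100.0 + 15.0 * z


# Hypothetical raw scores from a norming sample (made up for illustration).
norm_sample = [38.0, 42.0, 45.0, 50.0, 50.0, 55.0, 58.0, 62.0]

# A ten-year-old performing like a typical twelve-year-old.
child_score = ratio_iq(mental_age=12, chronological_age=10)

# Someone who scores exactly at the norming-sample average.
average_score = deviation_iq(mean(norm_sample), norm_sample)
```

Under these assumptions, the ten-year-old receives a ratio IQ above 100, and a person at the norming-sample average receives a deviation IQ of 100 by construction.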

Given the nature of cognitive ability, trying to capture a general cognitive ability is a worthwhile goal. Batteries of test items that have cognitively demanding tasks will all positively correlate, indicating the “existence” of a latent psychological construct known as the g factor (Jensen, 1998, pp. 73-104). The legitimacy of the latent factor g is evidenced by a phenomenon coined by Charles Spearman called “the indifference of the indicator” (Spearman, 1927, pp. 197-198). Tests that measure g can be highly correlated while sharing very different test items. As long as the tests draw from a diverse array of cognitively demanding tasks, they will predict similar levels of cognitive ability despite having different content (Warne, 2020, pp. 31-34).
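
A small simulation may help show what the positive correlations and the “indifference of the indicator” look like in data. The sketch below assumes a simple one-factor model in which every test item is a weighted copy of a single latent ability plus independent noise. The sample size, number of items, and loading of 0.6 are arbitrary assumptions, and because the model builds g in from the start, it illustrates the pattern rather than providing evidence for it.

```python
import random
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(0)
N_PEOPLE, N_ITEMS, LOADING = 2000, 16, 0.6  # all assumed values

# Assumed one-factor model: item score = LOADING * g + item-specific noise.
g = [rng.gauss(0, 1) for _ in range(N_PEOPLE)]
noise_sd = (1 - LOADING ** 2) ** 0.5
items = [
    [LOADING * gi + noise_sd * rng.gauss(0, 1) for gi in g]
    for _ in range(N_ITEMS)
]

# Positive manifold: the smallest correlation among all item pairs.
min_item_r = min(pearson(a, b) for a, b in combinations(items, 2))

# Indifference of the indicator: two batteries with no items in common.
battery_a = [sum(scores) for scores in zip(*items[:8])]
battery_b = [sum(scores) for scores in zip(*items[8:])]
battery_r = pearson(battery_a, battery_b)
```

In this toy setup, `min_item_r` stays above zero and `battery_r` is high even though the two batteries share no items, which is the pattern the paragraph describes.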

While some may object that IQ merely measures the ability to take an intelligence test, the well-replicated academic and socioeconomic correlates pose a major issue. If IQ were only picking up on a narrow skill of the ability to answer intelligence test items, it would not correlate with a host of positive outcomes typically associated with intelligent people. A test that was truly meaningless, such as scoring participants by the number of heads they receive after 100 coin flips, would have approximately zero correlation with any real-world variables of interest.
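
The coin-flip thought experiment is easy to simulate. In this sketch, the “outcome” variable is a hypothetical stand-in for any real-world measure, drawn independently of the coin flips. The seed and sample size are arbitrary assumptions.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(42)
N_PEOPLE = 5000  # assumed sample size

# A "test" with no content: the score is the number of heads in 100 fair flips.
coin_scores = [
    sum(rng.random() < 0.5 for _ in range(100)) for _ in range(N_PEOPLE)
]

# A stand-in real-world outcome, generated independently of the coin flips.
outcome = [rng.gauss(0, 1) for _ in range(N_PEOPLE)]

r_meaningless = pearson(coin_scores, outcome)
```

With a sample of this size, `r_meaningless` lands close to zero, in contrast with the well-replicated correlates of IQ discussed in the following paragraphs.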

The case for the importance of IQ for numerous real-world outcomes was made in the controversial book The Bell Curve (1994) by psychologist Richard Herrnstein and political scientist Charles Murray. They cogently argued that cognitive ability was playing a more important role than socioeconomic status in influencing various socioeconomic outcomes such as being in poverty, finishing high school, finishing college, being unemployed, having an illegitimate first birth, having a low-weight baby, committing a crime, and other significant outcomes (Herrnstein & Murray, 1994, pp. 127-268).

Despite the backlash Herrnstein and Murray faced, the central argument that IQ is an important influence on socioeconomic outcomes is not particularly controversial among intelligence researchers today. One survey found that experts believed 45% of the variance in socioeconomic status (SES) was explained by intelligence, with 38% of experts believing more than 50% of SES variance was attributable to intelligence (Rindermann et al., 2020).

Furthermore, the claim that IQ has an important role in determining SES was not at all a minority view among experts at the time of the publication of The Bell Curve. In response to the controversy surrounding the book, education researcher Linda Gottfredson drafted a public statement, “Mainstream Science on Intelligence,” signed by 52 researchers who were “experts in intelligence and allied fields.” Some notable signatories include intelligence researcher Arthur Jensen and behavioral geneticists Robert Plomin and Thomas Bouchard. The statement included the following claims:

IQ is strongly related, probably more so than any other single measurable human trait, to many important educational, occupational, economic, and social outcomes. Its relation to the welfare and performance of individuals is very strong in some arenas in life (education, military training), moderate but robust in others (social competence), and modest but consistent in others (law-abidingness). Whatever IQ tests measure, it is of great practical and social importance.

A high IQ is an advantage in life because virtually all activities require some reasoning and decision-making. Conversely, a low IQ is often a disadvantage, especially in disorganized environments. Of course, a high IQ no more guarantees success than a low IQ guarantees failure in life. There are many exceptions, but the odds for success in our society greatly favor individuals with higher IQs. (Gottfredson, 1997)

Later research continues to corroborate the importance of cognitive ability for socioeconomic outcomes and points to an overall pattern in which positive and socially desirable outcomes have a positive correlation with intelligence while undesirable outcomes have a negative correlation (see Marks, 2022). Tarmo Strenze summarized the correlates of cognitive ability determined by meta-analyses in his chapter “Intelligence and Success” from Handbook of Intelligence (2015).

It would be unsurprising to find that such relationships continue to exist at the national scale. If cognitive ability and good educational outcomes are positively correlated within nations, then the average cognitive ability and average educational outcomes would share the same sign when performing a cross-country comparison. If income and job performance are positively associated with cognitive ability within nations, then it should not be surprising to observe that nations with higher average levels of cognitive ability are more economically productive.

III. The Importance of National IQ

In 2002, psychologist Richard Lynn and political scientist Tatu Vanhanen published their seminal book in the field of national intelligence entitled IQ and the Wealth of Nations. Their starting assumption was that since IQ and earnings had a positive correlation, the relationship should persist when comparing nations, meaning that nations with higher average IQ should be more economically productive (Lynn & Vanhanen, 2002, p. 4).

Lynn and Vanhanen collected IQ scores from various studies and made corrections, such as adjusting for the Flynn effect, to produce their national estimates. They normed their data to the United Kingdom average, which they set to 100. Their national IQ (NIQ) estimates faced considerable scrutiny. Many criticisms against Lynn and Vanhanen’s NIQ scores are less applicable now because many of the most heavily criticized samples are no longer used in recent estimates (Warne, 2023). Richard Lynn and another researcher, David Becker, performed a recalculation of the numbers with cited sources for their book The Intelligence of Nations (2019). Unfortunately, Richard Lynn passed away during the writing of this article, but David Becker maintains the website View on IQ, which contains the most up-to-date estimates and various discussions of the data.

In his article “National Mean IQ Estimates: Validity, Data Quality, and Recommendations,” Russell Warne, a well-respected researcher in the field of intelligence, addresses the legitimacy of various criticisms and discusses the shortcomings of the data. While he considers scores from the USA and UK as measures of intelligence, he considers the notion that some national IQ scores are measuring intelligence to be “questionable at best” and takes a critical view of scores that are estimated through geographic imputation (Warne, 2023). Nonetheless, Warne does not believe the correct approach is a wholesale rejection of the data:

National IQ estimates may not be perfect, but when used thoughtfully and interpreted conservatively, they can be instrumental in testing theories and providing insights that would otherwise be unavailable. I urge social scientists to reject the false dichotomy of accepting mean national IQ scores uncritically or rejecting the entire dataset. (Warne, 2023)

While many may have concerns about datasets specifically associated with Richard Lynn, perhaps on account of concerns about a continuous bias throughout all estimates, there are other NIQ estimates and estimates of similar psychological constructs available. German psychologist Heiner Rindermann created national IQs for his book Cognitive Capitalism (2018) by considering psychometric testing and student assessments. Angrist et al. (2021) created a database for Human Learning Outcomes (HLO), considering educational achievement scores from multinational exams such as PIRLS, PISA, and TIMSS. In another study, Patel and Sandefur (2020) administered an exam that combined test items from different assessments to 2300 Indian primary school students in order to create a “Rosetta Stone” of human capital to compare across various student assessment tests. Another group of economists, Gust et al. (2022), used student assessment scores in mathematics and science to evaluate “basic skills.” Warne (2023) compares the relationship between the measures:

Table 1 from “National Mean IQ Estimates: Validity, Data Quality, and Recommendations” by Russell Warne (2023)

Although there is considerable overlap in the use of the same international test scores in some cases, the estimates show considerable intercorrelation. Patel and Sandefur (2020), Angrist et al. (2021), and Gust et al. (2022) did not claim to be creating IQ scores, but their estimates are highly correlated with NIQ estimates. Authors are sometimes unwilling to claim they’re creating IQ scores or cite relevant literature due in part to the intense criticism and controversy surrounding Lynn and IQ research more broadly. Researchers in this area often face intense criticism, harassment, and abuse (Carl & Woodley of Menie, 2019). Although some of the authors do not claim to be measuring cognitive ability, they produce scores that highly correlate with national IQ scores because international tests such as PISA are cognitively demanding and thus g-loaded.

While one should be hesitant to draw firm conclusions about individual nations from national IQ estimates, it can be reasonably asserted that there is a moderate to strong relationship between national average cognitive ability and economic productivity. The exact relationship depends on the years, the measure of productivity, the measure of NIQ, and the transformations applied. A large number of measures are available in Becker’s dataset at View on IQ. Lynn and Vanhanen (2012) found correlations with a range of economic indicators and specifications using NIQ data. The 2002 L&V NIQs correlated with 1996 GDP/c at 0.66, 1998 Real GDP/c at 0.73, 1998 GNP-PPP/c at 0.77, and 2002 GNI-PPP/c at 0.68 (Lynn & Vanhanen, 2012, pp. 75-80). A logarithmic transform of Lynn and Vanhanen’s (2002) estimate, proposed by Meisenberg (2004), produced a correlation of 0.82. The log transformation continues to produce an impressive fit even with more recent data.
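
To see why a log transformation can raise a correlation, consider a purely synthetic example. The numbers below are not real NIQ or GDP figures and are not the data referred to above. They are generated under the assumption that output grows exponentially with the score, and the sketch further assumes the logarithm is applied to the income variable, which the text does not specify.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Synthetic illustration only: these are NOT real national IQ or GDP figures.
scores = [70, 75, 80, 85, 90, 95, 100, 105]

# Assumed relationship: output grows exponentially with the score
# (roughly 10% per point in this made-up example), with no noise added.
gdp = [500.0 * math.exp(0.1 * (s - 70)) for s in scores]

r_raw = pearson(scores, gdp)                         # linear fit to a curve
r_log = pearson(scores, [math.log(v) for v in gdp])  # linear fit to a line
```

Because the synthetic relationship is exactly exponential, `r_log` is essentially 1 while `r_raw` is noticeably lower. Pearson correlation measures only linear association, so it understates a relationship that is curved on the raw scale, and real data with noise would show a smaller but analogous gap if the underlying relationship were of this shape.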

Not only is there a strong positive correlation between NIQ and GDP/c, but NIQ and various other important outcomes often, but not universally, show a positive relationship. Becker (2019) assembled a review with hundreds of socioeconomic relationships with IQ, lending evidence to the overall trend. For example, IQ had a positive relationship with variables like GDP growth, employment, economic freedom, literacy, various educational outcomes, human development (HDI), water quality, sanitation, democracy, gender equality, property rights, and the rule of law (Becker, 2019). Kirkegaard (2014) constructed an index of national social progress, which he termed the S factor, that highly correlated with NIQ (r=0.86-0.87).

More sophisticated reasoning and methods are necessary to persuasively argue that NIQ not only correlates with positive outcomes but causes those outcomes. For example, Francis and Kirkegaard (2022) use instrumental variables, one being 19th-century literacy rates, to conclude that high NIQ causes higher growth rather than vice versa. An important indication of the direction of causality would be whether increases in wealth that are largely exogenous to institutional quality result in higher IQs. For example, if a high GDP caused higher IQ, we would expect oil-rich nations like Qatar, the UAE, and Kuwait to score closer to nations like the USA, UK, and Japan in NIQ. However, they score more in line with their geographic neighbors, suggesting, but not proving, that NIQ causes prosperity rather than vice versa (Christainsen, 2013).

A simple causal argument would be an appeal to the commonsense notions that smart people make good decisions and good decisions lead to good leadership and positive outcomes. Progressives hesitant to embrace IQ would probably be more sympathetic to the idea that education causes the good outcomes seen in developed nations. However, as discussed above, educational outcomes are moderately to highly correlated with measures of cognitive ability, and, as will be argued in the next section, educational interventions are largely ineffective at creating large and lasting genuine gains in cognitive ability. This is some evidence that IQ is the primary source of both prosperity and high academic scores.

IV. On the Failure of Environmental Interventions

Provided an Effective Altruist is convinced of the importance of NIQ, she would next want to determine if there are any cost-effective means of influencing IQ. Provided the assumptions are correct, major improvements in national IQ would bring about not just major changes in GDP but also a whole host of positive outcomes. To provide perspective, we can imagine that a 40-point increase in IQ in a country like Liberia could plausibly raise Liberian living conditions to the level of a developed nation like Italy. While North Korea, which is often excluded due to lack of data, would likely score high in NIQ and extremely low in GDP, there is a conspicuous absence of extremely poor but high-IQ countries. Even moderate increases in NIQ could plausibly eliminate the most extreme forms of poverty.

To determine the plausibility of large returns from environmental interventions, it is worthwhile to consider the extent to which environmental differences influence differences in IQ within nations. In developed countries, intelligence tends to have a moderate heritability in childhood but a high heritability in adulthood. The heritability of intelligence increases with age, a phenomenon termed “The Wilson Effect,” and some researchers have estimated very high adulthood heritabilities, such as 80% (Bouchard, 2013). While violation of the equal environments assumption may cause overestimation, other issues may lead to underestimation of the heritability of intelligence, such as measurement error and the presence of assortative mating.

To correctly understand the relevance of high heritability, it is necessary to understand the meaning of the term heritability. It is the proportion of phenotypic variation attributable to genetic differences within a population at a given time. This is in contrast with environmentality, which is the proportion attributable to differences in environment. These estimates are typically derived using studies of twins and adopted siblings. Monozygotic (MZ) or “identical” twins share approximately 100% of their genomes, whereas dizygotic (DZ) or “fraternal” twins share an average of approximately 50% of the genes that vary within the larger population. Using Falconer’s formula, one can estimate the heritability as twice the difference between the MZ correlation and the DZ correlation. Other more sophisticated methods are also used to estimate heritability (see Barry et al., 2022). The remaining environmental influence can be partitioned into the environment that siblings share and the environment that they do not share, which are known as the shared and non-shared environment, respectively.
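
Falconer’s formula and the resulting partition can be written out directly. In the sketch below, the twin correlations are hypothetical values chosen for illustration, and the function name is made up. The expressions for the shared and non-shared components are the standard companions to Falconer’s formula, added here as an assumption because the text above states only the heritability step.

```python
def falconer_ace(r_mz: float, r_dz: float) -> dict:
    """Partition trait variance from MZ and DZ twin correlations.

    h2: heritability, twice the MZ-DZ difference (Falconer's formula)
    c2: shared environment, the MZ similarity not explained by genes
    e2: non-shared environment, which also absorbs measurement error
    """
    h2 = 2.0 * (r_mz - r_dz)
    c2 = r_mz - h2  # algebraically equal to 2 * r_dz - r_mz
    e2 = 1.0 - r_mz
    return {"h2": h2, "c2": c2, "e2": e2}


# Hypothetical twin correlations, not taken from any particular study.
estimates = falconer_ace(r_mz=0.80, r_dz=0.50)
```

With these made-up inputs, the estimate is about 60% heritability, 20% shared environment, and 20% non-shared environment, and the three components sum to one. The simple formula can return values outside the 0 to 1 range when its assumptions fail, which is one reason the more sophisticated methods mentioned above are used in practice.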

The concept of heritability has endured a great deal of attack from critics such as geneticist Richard Lewontin and philosophers Ned Block and Gerald Dworkin. Since various controversial conclusions are premised on the validity of heritability as a concept, arguments advanced by these critics have been well-received by the ideological opponents of so-called “genetic determinism.” However, these philosophical objections are often misleading or confusing (see Sesardić, 2005). One undesirable conclusion that has motivated backlash is that high heritability estimates suggest that successful environmental interventions are less likely. While it is true that heritable does not mean genetically determined, a high heritability does indicate that huge returns from interventions are unlikely unless the intervention is extreme or modifies an aspect of the environment that does not vary substantially already (see Kirkegaard, 2023; Sesardić, 2005, pp. 153-182).

While some traits have sizeable measures of non-shared environmentality, the source of variation is typically unsystematic. Plomin and Daniels (1987) coined the term “Gloomy Prospect” to describe this finding that non-shared environmental influences are “unsystematic, idiosyncratic, or serendipitous events such as accidents, illnesses, and other traumas, as biographies often attest.” While successful high-impact environmental interventions are technically possible, interventions will be mistargeted and ineffective without a known systemic source of influence.

The limited role of the environment in the case of intelligence is further corroborated by the failure of efforts to permanently improve cognitive ability in the developed world. Protzko (2015) analyzed 39 randomized controlled trials and found that “after an intervention raises intelligence the effects fade away…because children in the experimental group lose their IQ advantage and not because those in the control groups catch up.” In many cases, early childhood interventions lose their advantage entirely within a few years. When gains do not last beyond a child’s schooling, the intervention is not worthwhile.

This skepticism was expressed many years earlier by the expert signatories of Gottfredson’s “Mainstream Science on Intelligence” when they endorsed the claim that “we do not know yet how to manipulate [the environment] to raise low IQs permanently” (Gottfredson, 1997). Such findings have been corroborated by the elusive quest for the phenomenon known as “far-transfer,” in which training in one cognitive trait provides benefit in a dissimilar trait. Hyland and Johnson (2006) believe “the pursuit of general transferable core/key skills is a wasteful chimera-hunt and should now be abandoned.” Psychologist Douglas Detterman has a similar attitude:

Transfer has been studied since the turn of the [twentieth] century. Still, there is very little empirical evidence showing meaningful transfer to occur and much less evidence for showing it under experimental control (Detterman, 1993, p. 21).

When selection effects and genetic confounding are not considered, the benefits of schooling and other interventions can sometimes appear larger than they truly are (Schmidt, 2017). Studies that merely show correlations between certain educational or family characteristics and outcomes, without accounting for genetic confounding, should not be taken as persuasive evidence of the importance of the environment. When potential genetic confounding is accounted for, effect sizes are often much smaller in magnitude. For example, Smith-Woolley et al. (2018) used educational attainment polygenic scores to determine if school type in the UK was influencing testing outcomes and found the proportion of variance accounted for fell from 7% to less than 1% after accounting for genetic influence. If actual effect sizes for the importance of schooling are near zero, there will still be studies reporting large effects, but they will tend to be poorly designed and low-powered.

While there are notable examples of important educational interventions, these should be evaluated with proper context. Meta-analysis can provide a broader look at a research question. After examining 141 large-scale educational randomized controlled trials with 1.2 million total students, Lortie-Forgues and Inglis (2019) found a mean effect size of 0.06 standard deviations (SDs) and a mean confidence interval width of 0.30 SD. Such returns paint a dismal picture of the prospect of improving educational outcomes, let alone IQ, through educational interventions. Educational outcomes are more malleable than IQ because you can influence standardized test scores without boosting IQ, but boosting IQ will increase standardized test scores. It follows that improving IQ may be an even more difficult problem.
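
To put an effect of 0.06 SD in more familiar units, the following sketch converts it to points on a scale with a standard deviation of 15 and to a percentile shift. It assumes normally distributed outcomes. The 15-point scale is borrowed from IQ scoring purely as a yardstick, since the trials in question measured educational outcomes rather than IQ.

```python
from statistics import NormalDist

MEAN_EFFECT_SD = 0.06  # mean effect size reported in the text
SCALE_SD = 15.0        # SD of an IQ-style scale (assumed as a yardstick)

# The same effect expressed in points on a scale with SD 15.
points_on_iq_style_scale = MEAN_EFFECT_SD * SCALE_SD

# Percentile that a median (50th-percentile) student would reach after a
# shift of MEAN_EFFECT_SD, assuming normally distributed outcomes.
new_percentile = 100.0 * NormalDist().cdf(MEAN_EFFECT_SD)
```

Under these assumptions, the mean effect corresponds to a little under one point on a 15-SD scale and moves a median student to roughly the 52nd percentile.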

Even if an intervention successfully improves IQ scores, it may not actually improve the underlying trait of intelligence (Hu, 2022). Tests inadvertently pick up on skills that are not actually general cognitive ability. Gains that improve IQ scores without improving the underlying trait are said to be “hollow.” Since all cognitively demanding test items should be g-loaded, educational interventions that do not improve all or close to all test items may indicate hollow gains. Using structural equation modeling, Lasker & Kirkegaard (2022) corroborated the findings of Ritchie et al. (2015), namely that the effect of education is on specific skills rather than general cognitive ability. While improvement in specific skills is worthwhile itself, the benefits of high IQ are primarily driven by general cognitive ability.

The extent to which the failure of interventions in wealthy nations is applicable to developing nations is unclear. If interventions are largely ineffective in wealthy nations, this is some evidence that they may be ineffective in the developing world. However, there is a plausible case to be made for certain threshold effects or influences unique to the conditions of poor nations. In some countries, children suffer from extreme levels of malnutrition and exposure to parasites. Extremely few children in the developed world face such obstacles. An intervention that prevents extreme malnutrition might appear ineffective in the United States but show gains in Yemen or South Sudan. When nutrient deprivation is so great that it disrupts proper brain formation, it is likely to depress not only IQ scores but also cognitive ability. Similarly, when groups are wholly unexposed to logical reasoning, they are likely to score lower on IQ tests. Such issues are not uncommon, and interventions would play an important role in such instances. Furthermore, for populations unexposed to academic tests, IQ scores will likely underestimate ability.

The extent to which we can expect environmental interventions to work as a means of improving NIQ largely depends on the extent to which we think environmental differences are driving international differences. If we suspect that NIQ differences are driven entirely by environmental differences, then improvements in nutrition and education may equalize scores. If genetic differences are playing a causal role, equalizing environments will not equalize NIQ scores. A reasonable prior assumption is non-trivial levels of influence from both. Various lines of evidence point to the prospect of zero genetic influence globally being exceptionally unlikely. For example, interventions are largely ineffective in the USA, with an average IQ of approximately 97-99, and the US still lags behind Singapore with an NIQ of approximately 106-107 (Becker, 2019). While some dismiss the influence of genes on NIQ as “not interesting,” it is extremely relevant to the near future of humanity, especially considering that countries with lower NIQ typically have higher fertility (Francis, 2022).

Even if one embraces the 100% environmental explanation for national differences in IQ, one can still consider the possibility of environmental interventions being less cost-effective or more limited in magnitude relative to what could be called “genetic interventions.” Furthermore, since there are little to no means of permanently boosting IQ in more developed countries, there may be stagnation once a country reaches beyond a certain threshold of average nutrition and education.

Looking toward genetic interventions may be more fruitful, even if we accept that environmental interventions are important to some extent. IQ gains without diminishing marginal returns are implausible, given that adults in academic institutions or pursuing academic interests do not continue to add IQ points cumulatively until they achieve superintelligence. Some forms of genetic enhancement would not suffer from this problem of diminishing returns, and could in fact create superintelligent humans. Also importantly, if a genetic intervention could be administered at birth and reduce the need for additional years of schooling, it could save a tremendous amount of a student’s time.

IV. Genetic Enhancement

Viewing genes as a means of influencing outcomes is not a new idea. Francis Galton coined the term “eugenics,” meaning “good birth,” in his 1883 book “Inquiries into Human Faculty and Its Development.” The idea received widespread popularity among intellectuals during the early 20th century, but the enthusiasm turned to repugnance following the end of World War II and the exposure of the atrocities committed by Nazi Germany in the pursuit of “racial hygiene.” Advocating for eugenic policies became unpopular, and a widespread rejection of the idea of an association between genes and behavior overtook various academic disciplines. Whether explicitly or implicitly, many social scientists and policy advocates continue to operate under the assumption that the mind has no innate traits, an idea called “the blank slate.”

Intellectuals today, even geneticists, continue to take a firm stance against “eugenics” (see Harden, 2021; Rutherford, 2022). Opponents of practices such as screening embryos know that the accusation of “eugenics” is an effective tool because it so widely elicits repugnance. Whether or not a practice like embryo screening qualifies as actual “eugenics” is a fact about the English language rather than morality. The Chinese equivalent, “yousheng,” is used almost exclusively in a positive manner when referring to preimplantation diagnosis (Cyranoski, 2017). Furthermore, the mere accusation of eugenics is insufficient evidence of the practice being repugnant since most people endorse some form of “eugenics.” Even if some practices are eugenic, there are surely morally defensible forms of eugenics (see Veit et al., 2021).

The era of “new eugenics” characterized by the use of reprogenetic technology will be morally incomparable to the atrocities of the past because this form will not only be harmless but actually be consensual and improve human welfare. An irony of the “eugenics” objection to some forms of reprogenetic technology is that the new eugenics facilitates better-informed consensual reproductive decisions, while those who want to ban such technology are advocating coercion in reproduction. It could even be argued that parents have a moral duty to engage in “eugenics” if it means they expect that child to live the best life (see Savulescu, 2001). Not only are old forms of eugenics unethical, but they are also inefficient compared to the immense potential of genetic enhancement technology.

New eugenics, characterized by reprogenetic technology and voluntary choice, was only made possible through a series of technological advances. One such breakthrough was the birth of Louise Brown on the 25th of July 1978 at Oldham General Hospital in Greater Manchester, UK. She was the first human born who was conceived outside of a human body in a laboratory through the process of in vitro fertilization (IVF). This process of combining sperm and egg inside a laboratory is popular because it enables couples struggling with fertility issues to increase their chances of a successful pregnancy.

In order to further increase the odds of a healthy pregnancy, different methods were invented to determine which embryo is best to implant. In 2001, a 38-year-old woman with a 7-year history of infertility successfully conceived a child after her embryos were screened via the use of a fluorescent probe and microscope through a process called fluorescence in situ hybridization (FISH) (Wilton et al., 2001). The intended goal of FISH was to reduce the probability of an abnormal number of chromosomes, a condition called aneuploidy, which results in conditions such as Down syndrome (trisomy 21) and Edwards syndrome (trisomy 18).

Since 2001, screening methods have improved and expanded. A woman using IVF can not only screen for aneuploidy, a process called preimplantation genetic testing for aneuploidies (PGT-A), but she may elect to screen for monogenic disorders (PGT-M) or structural rearrangements (PGT-SR) if there is cause for concern. Some couples become aware that they are at an increased risk of a monogenic disorder, a disorder related to one gene, because they have a family history that puts their future offspring at risk. An example of a monogenic disorder is Tay-Sachs, which couples would want to avoid since most afflicted children die in early childhood.

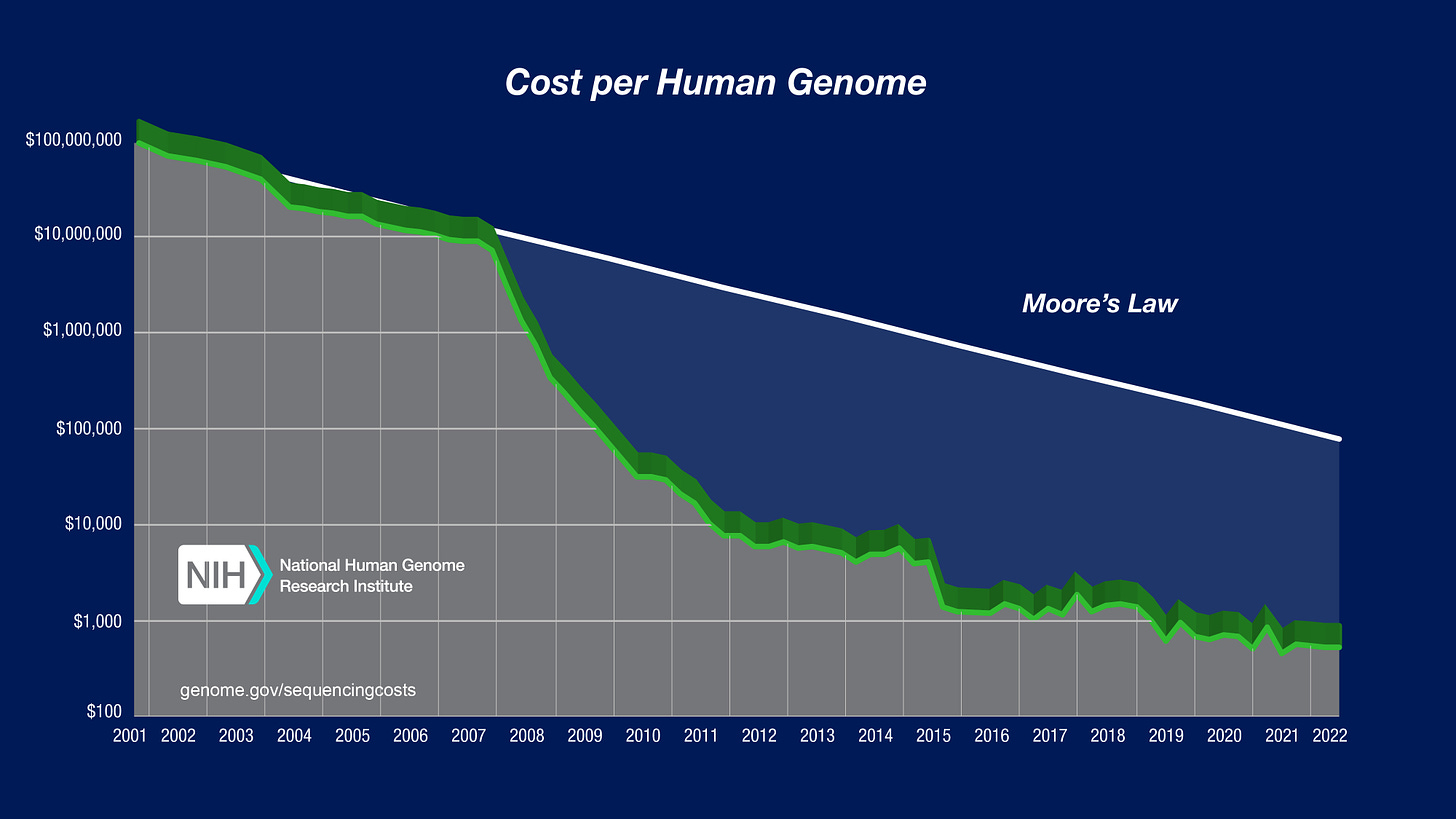

Most common conditions are not monogenic but polygenic, meaning they are influenced by many different genes. Some polygenic disorders are Alzheimer’s, diabetes, obesity, schizophrenia, heart disease, and hypertension. It is now possible to screen for these through preimplantation genetic testing for polygenic disorders (PGT-P). This form of testing was only made possible by extremely recent advances in scientific knowledge and technology. The first baby born using PGT-P, Aurea Smigrodzki, was born as recently as 2020 (Genomic 2021, Q2). Such an achievement would not have been possible without the precipitous fall in the cost of gene sequencing since the completion of the Human Genome Project in 2003.

Different types of genomic sequencing have different costs since they vary in their level of thoroughness. The crucial fall in cost facilitated two important changes that made way for PGT-P: (1) large genomic databases could be assembled, which permitted the discovery of genetic variants associated with disorders, and (2) parents could reasonably afford to genotype their embryos, permitting the existence of a viable company such as Life View (Genomic Prediction).

During the “candidate gene” era, many underpowered studies produced false positive results due to lenient thresholds for statistical significance. Now, there are large samples of hundreds of thousands of people, such as in the UK Biobank, which allow genome-wide association studies (GWAS) with high standards for significance. While the typical threshold in many natural and social sciences is p < 0.05, GWAS typically set the standard at p < 0.00000005 (5 × 10⁻⁸). This threshold corresponds to a Bonferroni correction for roughly one million independent tests of common variants, rather than for the number of genes, and helps to avoid the problem of too many false positives.
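The arithmetic behind the genome-wide threshold can be sketched in a few lines. This is only an illustration: the figure of one million independent tests is the conventional assumption behind the 5 × 10⁻⁸ threshold rather than a number taken from this essay, and the function names are hypothetical.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test threshold that keeps the family-wise error rate at or below alpha."""
    return alpha / n_tests


def expected_false_positives(per_test_alpha: float, n_tests: int) -> float:
    """Expected number of false positives if every tested variant is truly null."""
    return per_test_alpha * n_tests


# Assumption: about one million effectively independent common variants.
N_INDEPENDENT_TESTS = 1_000_000

gwas_threshold = bonferroni_threshold(0.05, N_INDEPENDENT_TESTS)
naive_false_hits = expected_false_positives(0.05, N_INDEPENDENT_TESTS)
corrected_false_hits = expected_false_positives(gwas_threshold, N_INDEPENDENT_TESTS)

print(f"Genome-wide threshold: {gwas_threshold:.1e}")
print(f"Expected false hits at p < 0.05: {naive_false_hits:,.0f}")
print(f"Expected false hits at the corrected threshold: {corrected_false_hits:.2f}")
```

Under these assumptions, testing every variant at p < 0.05 would be expected to yield tens of thousands of spurious associations, which is why the candidate-gene literature replicated so poorly.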

When performing selection, one may be interested in a polygenic risk scoring approach rather than concerns about significance. In this approach, a model is constructed and validated with the aim of maximizing an outcome, such as selecting the individual or sibling with the most disability-adjusted life years (DALYs) (see Widen et al., 2022). When selecting among embryos, one can determine expected gains by considering the gains from selection among siblings. Since polygenic testing has a variant concordance in excess of 99%, DNA from embryos is being read accurately and is akin to selection among siblings, at least in the case of the Genomic Prediction platform (Treff et al., 2019).

Many have expressed concern about the ethicality and utility of embryo screening (see Forzano et al., 2021; Karavani et al., 2019; Polyakov et al., 2022; Rutherford, 2022; Turley et al., 2021). Many of the objections forwarded are weak or misguided (see Fleischman et al., 2023). Perhaps most notably, a central flaw in these authors’ ethical arguments is that they neglect to make relative comparisons between available methods. When couples intend to transfer an embryo, they face what Tellier et al. (2021) term the “embryo choice problem.” Clinics and couples will use some method to choose, and one should not claim that PGT-P is of little utility, unproven, or unethical without considering the typical alternatives, namely visually assigned embryo grade or randomness.

There are worthwhile benefits to PGT-P. Widen et al. (2022) constructed a health index that led to a gain of roughly 4 DALYs among individuals of European ancestry. The benefits of selection among siblings of European ancestry would be between 3 and 4 DALYs. Furthermore, they found “correlations between disease risks are found to be mostly positive, and generally mild,” a finding which “supports the folk notion of a general factor which characterizes overall health” (Widen et al., 2022).

Life View (Genomic Prediction) only provides information about disease and not cognitive traits, and two co-founders, Steve Hsu and Nathan Treff, have affirmed they do not intend to select on the basis of cosmetic or cognitive traits. On his podcast Manifold, Hsu explained that he believes in the necessity of a society-wide conversation and widespread acceptance before they should provide this product, and has stated that currently, the testing is “just too controversial.” However, Hsu acknowledges that it is possible. Choosing embryos that have desirable psychological characteristics, especially intelligence, is controversial and evokes disgust in many, but perhaps not as many as one would expect. Meyer et al. (2023) surveyed 6823 people about the ethicality of PGT-P to improve their child’s odds of attending a top-100 college, and most did not have a moral objection.

With such acceptance and a considerable portion eager to use the service, embryo selection for psychological characteristics may soon be more widely adopted than many anticipate. Selection is possible because all psychological characteristics, including cognitive ability, are heritable. This finding is so well-replicated that it has been named the “first law of behavioral genetics” by Turkheimer (2000) and continues to be regarded as one of the most replicated findings in all of behavioral genetics (Plomin et al., 2016). While intelligence is an important factor, any psychological trait that is associated with positive outcomes at the individual or national level, such as the general factor of personality, could be selected for using embryo selection, provided there is sufficient data to determine which variants are important.

Estimates of potential returns from selection for intelligence are contingent on a number of variables. Karavani et al. (2019) used the polygenic scores for cognitive ability available at the time of writing to estimate the potential returns from selection and found an approximately 2.5-point IQ gain from selecting among ten embryos. While the authors claim that selection has “limited utility,” it is very important to note, as Steve Hsu does, that screening can be used to prevent an extreme downside like intellectual disability. Many couples will be interested in selection and may inadvertently select for higher IQ due to the beneficial pleiotropic relationship between health, mental well-being, and cognitive ability.

Currently, returns for selection on IQ are higher and will continue to grow as the polygenic scores (PGS) for intelligence improve. An important early contribution to the conversation around embryo screening for intelligence was the 2014 article “Embryo Selection for Cognitive Enhancement: Curiosity or Game-changer?” by Future of Humanity Institute’s Carl Shulman and Nick Bostrom. Shulman and Bostrom (2014) estimated the maximum expected gain from selection among ten embryos at 11.5 IQ points. In 2016, polymath researcher Gwern Branwen put the expected gain at 9 IQ points in his comprehensive article “Embryo Selection for Intelligence.” The expected gain from embryo selection, defined as the difference between the maximal and average polygenic scores among n embryos, is proportional to the standard deviation of phenotypic variation times the square root of the proportion of variance explained by polygenic scores times the square root of the logarithm of the number of embryos (Karavani et al., 2019).
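The scaling described above can be illustrated with a small calculation. The sketch below is not the exact model of Karavani et al. (2019): it assumes polygenic scores are normally distributed, that only half of the score variance is available among siblings (`within_family_share=0.5`), and that the trait has a standard deviation of 15 IQ points. It computes the expected maximum of n standard normal draws numerically, which grows roughly like the square root of the logarithm of n. All function names and default values are hypothetical.

```python
import math


def expected_max_std_normal(n: int, lo: float = -10.0, hi: float = 10.0,
                            steps: int = 20000) -> float:
    """E[max of n iid standard normals], via trapezoidal integration of
    x * n * pdf(x) * cdf(x)**(n - 1) over [lo, hi]."""
    def integrand(x: float) -> float:
        pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
        cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
        return x * n * pdf * cdf ** (n - 1)

    h = (hi - lo) / steps
    total = 0.5 * (integrand(lo) + integrand(hi))
    for i in range(1, steps):
        total += integrand(lo + i * h)
    return total * h


def expected_gain(n_embryos: int, r2: float, sd_trait: float = 15.0,
                  within_family_share: float = 0.5) -> float:
    """Expected gain (in trait units) from picking the top-scoring embryo.

    r2 is the share of trait variance explained by the polygenic score.
    within_family_share is an assumption: the fraction of score variance
    that remains among embryos of the same couple.
    """
    score_sd = sd_trait * math.sqrt(r2 * within_family_share)
    return score_sd * expected_max_std_normal(n_embryos)


if __name__ == "__main__":
    for n in (2, 5, 10, 100, 1000):
        # r2 = 0.10 is a placeholder value, not an estimate from the literature.
        print(n, round(expected_gain(n, r2=0.10), 2))
```

Running the loop shows the pattern the formula implies: doubling the predictive power of the score raises the gain by a factor of about 1.4, while going from 10 to 100 embryos raises it by well under a factor of two.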

While improving polygenic scores for intelligence would improve the returns from embryo selection, the more important bottleneck is the number of embryos available for selection, which is limited by the number of eggs available. When couples elect to use IVF, it is typically because they are having fertility issues. A single cycle of IVF will sometimes produce few to no viable embryos, and couples will require additional cycles. When eggs are retrieved from younger women not experiencing fertility issues, such as during an egg donation, the number of eggs retrieved is much higher. Women interested in polygenic selection may benefit from egg freezing (oocyte cryopreservation).

The most promising means of relieving the critical bottleneck of embryos is likely the creation of gametes from stem cells derived from somatic cells like skin or blood cells. Currently, it is possible to create stem cells from somatic cells, which results in what are called induced pluripotent stem cells (iPSCs). Through a process known as in vitro gametogenesis (IVG), it would be possible to convert the iPSCs to gametes. Shulman and Bostrom (2014) estimate that selection from 100 embryos would yield an 18.8 IQ return, and selection from 1000 would yield a 24.3 IQ return.

Another important benefit of IVG would be that it would increase the use of polygenic screening because it would likely be much more worthwhile and easier than IVF currently. Some couples who may otherwise be sympathetic to polygenic screening may be resistant to undergoing IVF for what they might see as marginal gains. However, if the process were as simple as a blood draw and not prohibitively expensive, the use of polygenic screening may increase drastically. It is conceivable that in some developed nations, embryo selection could become the norm.

It is possible that we will see the first case of human IVG within a decade. It has already been possible to create eggs from stem cells in mice (Hikabe et al., 2016). An impressive recent achievement was the creation of a mouse from two male mice using iPSCs and IVG (Murakami et al., 2023). Startups such as Conception and Ivy Natal are working on making IVG a reality, and prediction markets have a median estimate of 2033 for the first human born using this technology. Additional funding from EA donors would likely accelerate the discovery and adoption.

Achieving IVG would be one of the most important discoveries ever. Relative to the importance of the technology and the potential benefits, this technology should surely be researched more. While facilitating massive multiple embryo selection is important, IVG would also allow for iterated embryo selection (IES). Shulman and Bostrom (2014) describe the process in their article as follows:

1. Genotype and select a number of embryos that are higher in desired genetic characteristics;

2. Extract stem cells from those embryos and convert them to sperm and ova, maturing within 6 months or less (Sparrow, 2013);

3. Cross the new sperm and ova to produce embryos;

4. Repeat until large genetic changes have been accumulated.

This process could condense generations of intentional selection into months. Since iPSCs would be able to be converted to sperm and egg via IVG, there is no need for a person to mature to an age at which they can produce gametes. After enough generations, one could achieve a maximal polygenic score given currently known variants. IES could be costly and take a long time, but it may only need to be done a small number of times, and then all parents interested in sperm or egg donations would draw from these lines as a source (see Branwen, 2016).
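The four steps above can be mimicked with a toy simulation. This is a deliberately crude sketch, not a model of real genetics: it assumes every locus has one "positive" allele (coded 1) with equal effect, free recombination between loci, and that the two top-scoring embryos of each round can both serve as parents of the next round. All names are hypothetical.

```python
import random


def make_gamete(parent, rng):
    """Pass on one randomly chosen allele per locus (free recombination assumed)."""
    return [pair[rng.randrange(2)] for pair in parent]


def make_embryo(parent_a, parent_b, rng):
    """Combine one gamete from each parent into a diploid genome."""
    return list(zip(make_gamete(parent_a, rng), make_gamete(parent_b, rng)))


def score(genome):
    """Toy polygenic score: the count of positive alleles (coded 1)."""
    return sum(a + b for a, b in genome)


def iterated_selection(parent_a, parent_b, n_embryos, rounds, seed=0):
    """Return the mean parental score before selection and after each round."""
    if n_embryos < 2:
        raise ValueError("need at least two embryos to pick two parents")
    rng = random.Random(seed)
    history = [(score(parent_a) + score(parent_b)) / 2]
    for _ in range(rounds):
        # Steps 1 and 3: create a batch of embryos and rank them by score.
        embryos = [make_embryo(parent_a, parent_b, rng) for _ in range(n_embryos)]
        embryos.sort(key=score, reverse=True)
        # Step 2: the two best embryos become the gamete sources for the next round.
        parent_a, parent_b = embryos[0], embryos[1]
        history.append((score(parent_a) + score(parent_b)) / 2)
    return history


if __name__ == "__main__":
    rng = random.Random(42)
    n_loci = 200
    founder_a = [(rng.randrange(2), rng.randrange(2)) for _ in range(n_loci)]
    founder_b = [(rng.randrange(2), rng.randrange(2)) for _ in range(n_loci)]
    print(iterated_selection(founder_a, founder_b, n_embryos=10, rounds=8))
```

Even in this toy setting, the line can only accumulate positive alleles that at least one founder carried, which is one reason the text speaks of a maximal score "given currently known variants."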

Prior to fertilization and the creation of embryos, it is possible to perform gamete selection. Sperm is not limited to the same extent as eggs, and billions can be produced by fertile men without the need for conversion from stem cells. Branwen (2016) estimates that the potential return from selecting between 5 eggs and 10,000 sperm is approximately 2.04 standard deviations. That is prior to any selection of embryos.

During polygenic screening, the embryo can be biopsied in order for the DNA to be sequenced. This is not an issue when there are sufficiently many cells in the embryo. However, if one were trying to select among a large number of sperm, determining how to read the DNA poses a potential issue since reading DNA requires the destruction of the cell. One possible solution, in the case of sperm selection, is using phenotypic traits to identify the best sperm. For example, we could select sperm on the basis of its swimming ability. Since the issue of sperm selection is already relevant in the case of IVF, many have already investigated the issue and proposed different selection methods that do not involve the destruction of the sperm (Vaughan & Sakkas, 2019).

For selection on the basis of phenotypic traits, one does not need to be limited to one method. Provided we have a large amount of input of various types of information like motility and morphology, we could use machine learning sperm selection techniques to identify sperm with minimal deleterious mutations. The expected returns would be a function of the correlation between the phenotypic trait and the quality index determined by machine learning. Expected returns could be very large if selection among extremely large numbers of sperm was feasible, but it is not clear how strong the potential correlation could be. Also, there is the potential issue of ceiling effects on selection for phenotypic sperm quality (Branwen, 2016).

While machine learning on phenotypic traits may be promising, genotypic selection would be better. There may be a means of getting genetic information without destroying the sperm. During spermatogenesis, sperm are generated in pairs. Each sperm has a complementary brother sperm. If you know the father’s DNA, and you know the brother sperm’s DNA, you can infer the other brother sperm’s DNA without destroying the cell. However, locating the brother sperm is a major issue. Emil Kirkegaard explains a possible solution in his article “Can we pick the best sperm?”:

[…] if we could monitor the generation of sperm in vitro (in a lab), we could simply see which ones are generated in pairs, and then compartmentalize them. Once the complementary brother sperm are compartmentalized, we sequence one of them, and infer the genotype of the remaining one. As far as I can tell (search1, search2), no one is pursuing this idea, but the potential for the improvement of embryo selection is huge. I imagine one could do this by taking a biopsy from men’s testicles to get the spermatogonium cells […] and then monitor the process in a lab.

If discovering IVG is more difficult than imagined, there are some possible alternatives other than sperm selection. One proposal by the pseudonymous writer Metacelsus is what he termed iterated meiotic selection (IMS). This process would perhaps be more feasible than iterated embryo selection, given it does not rely on the discovery of IVG. Metacelsus also believes IMS would be more powerful and faster since meiosis is faster than gametogenesis. He claims that since it is already possible to culture haploid stem cells and fuse cells together, “Meiosis is all you need”:

1. Take a diploid cell line (probably ESC or iPSC or PGCLC)

2. Induce meiosis and form many haploid cell lines.

3. Genotype the haploid lines and select the best ones.

4. Fuse two haploid cells to re-generate a diploid cell line.

5. Repeat as desired. At the end, either differentiate the cells into oocytes or perform nuclear transfer into a donor oocyte.

Since the process of reproduction is very complicated and less than maximally efficient at bringing about desirable outcomes across many dimensions, there are many possible areas for improvement and likely many undiscovered possible approaches. For example, one potentially more efficient method would be chromosome selection. Perhaps the most fine-grained selection would be at the level of genes, which is now possible to a limited extent.

Clustered regularly interspaced short palindromic repeats (CRISPR) are a family of DNA sequences that are used in the bacterial immune system to protect against viral infections. Cas9 is an enzyme that, directed by a guide RNA, recognizes and cleaves specific strands of DNA. Together, they form CRISPR-Cas9, a technology that can be used for gene editing. In November of 2018, a genome-editing researcher, He Jiankui, claimed to have edited and implanted two embryos in an effort to disable the gene CCR5 in an attempt to reduce the probability of HIV infection. In 2019, he was sentenced to three years in prison by the Chinese government.

One major issue with CRISPR that caused serious criticism of He Jiankui is the potential for off-target edits. This is when a location in the genome that was not the intended target of an edit gets inadvertently modified in some way. Such an issue can have unintended and unknown side effects. While it is conceivable that a single edit could occur without any off-target mutations, it becomes less likely when performing a large number of edits. Perhaps the most important breakthrough for reprogenetics would be the ability to make an extremely large number of edits without any off-target mutations or unwanted side effects. Such precise editing, as opposed to selection, requires a more precise understanding of the genome, one that permits differentiating between causal single-nucleotide polymorphisms (SNPs) and tag SNPs that are merely inherited with causal SNPs due to linkage disequilibrium. With a strong understanding of the genome, it would be possible to create extremely healthy and intelligent people.

The potential returns to selection for intelligence are enormous because the architecture of cognitive ability involves a very large number of SNPs. In the distribution of all possible levels of cognitive ability, most people fall somewhere far away from either extreme of having all negative or all positive alleles. On the extreme left tail, you would have someone non-functional. However, at the extreme right tail, you would have people beyond anyone who has ever lived. In his article “On the genetic architecture of intelligence and other quantitative traits,” Steve Hsu explains:

There is good evidence that existing genetic variants in the human population (i.e., alleles affecting intelligence that are found today in the collective world population, but not necessarily in a single person) can be combined to produce a phenotype which is far beyond anything yet seen in human history. This would not surprise an animal or plant breeder – experiments on corn, cows, chickens, drosophila, etc. have shifted population means by many standard deviations relative to the original wild type.

Take the case of John von Neumann, widely regarded as one of the greatest intellects in the 20th century, and a famous polymath. He made fundamental contributions in mathematics, physics, nuclear weapons research, computer architecture, game theory and automata theory. In addition to his abstract reasoning ability, von Neumann had formidable powers of mental calculation and a photographic memory. In my opinion, genotypes exist that correspond to phenotypes as far beyond von Neumann as he was beyond a normal human.

The quantitative argument for why there are many SD’s to be had from tuning genotypes is straightforward. Suppose variation in cognitive ability is

1. highly polygenic (i.e., controlled by N loci, where N is large, such as 10k), and

2. approximately linear (note the additive heritability of g is larger than the non-additive part).

Then the population SD for the trait corresponds to an excess of roughly N^{1/2} positive alleles (for simplicity we suppress dependence on minor allele frequency). A genius like von Neumann might be +6 SD, so would have roughly 6N^{1/2} more positive alleles than the average person (e.g., ∼ 600 extra positive alleles if N = 10k). But there are roughly +N^{1/2} SDs in phenotype (∼ 100 SDs in the case N ∼ 10k) to be had by an individual who has essentially all of the N positive alleles! As long as N^{1/2} ≫ 6, there is ample extant variation for selection to act on to produce a type superior to any that has existed before. The probability of producing a “maximal type” through random breeding is exponentially small in N, and the historical human population is insufficient to have made this likely.
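The back-of-envelope figures in the quoted passage can be reproduced directly. The sketch below simply follows the stated simplifications (N equally weighted loci, one population SD corresponding to roughly the square root of N extra positive alleles); it is not a fitted genetic model, and the function name is hypothetical.

```python
import math


def hsu_back_of_envelope(n_loci: int, genius_sd: float = 6.0):
    """Return (alleles per SD, extra alleles for a genius, SDs at the maximum).

    Follows the rough approximations in the quoted passage, ignoring
    allele frequencies and constant factors.
    """
    sd_in_alleles = math.sqrt(n_loci)             # one SD ~ sqrt(N) extra positive alleles
    genius_extra_alleles = genius_sd * sd_in_alleles
    max_sd = n_loci / sd_in_alleles               # ~ sqrt(N) SDs if nearly all alleles are positive
    return sd_in_alleles, genius_extra_alleles, max_sd


if __name__ == "__main__":
    per_sd, genius_extra, ceiling = hsu_back_of_envelope(10_000)
    print(f"Alleles per SD: {per_sd:.0f}")
    print(f"Extra positive alleles at +6 SD: {genius_extra:.0f}")
    print(f"Approximate ceiling in SDs: {ceiling:.0f}")
```

With N = 10,000 this gives the figures in the passage: about 100 alleles per SD, about 600 extra positive alleles for a +6 SD individual, and a ceiling on the order of 100 SDs.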

Profoundly large returns are possible, but one concern may be that cognitive ability is useless at extremely high levels. However, intelligence typically has a linear relationship with positive outcomes, and the benefits continue at high levels (Brown et al., 2021). One important contribution to this area is the Study of Mathematically Precocious Youth (SMPY), which took a cohort of highly intelligent children in the 1970s and followed them throughout their lives. Even among elite children, the most elite were still more likely to have a Ph.D., a peer-reviewed publication, a patent, and a high income. While it is theoretically possible that there is some limit to the positive impact of intelligence, it has yet to be found.

V. Inequality and Adoption by Elites

One of the major issues with any new beneficial technology is that the wealthy and elite get access before the public at large. Since the benefits of cognitive enhancement are profound, disparate use would surely result in social and economic inequality. However, banning beneficial technology because its adoption is uneven is not morally defensible, and it is the wealthy who can most easily evade such bans. Many of the benefits of genetic enhancement would have positive network effects (Anomaly & Jones, 2020). Therefore, we should be glad when some people benefit, even if the benefits are not evenly distributed.

Fortunately, there may be benefits independent of the average that are gained through elite adoption. Even if adoption were merely by the elites, this would have positive outcomes for the larger society. Elites play a disproportionate role in the economic productivity of nations because they occupy important roles in government and business. If one is interested in increasing economic output and creating better institutions, it would be wise to drastically improve the size and abilities of the elite. Intelligence researcher Arthur Jensen commented on the disproportionate importance:

The social implications of exceptionally high ability and its interaction with the other factors that make for unusual achievements are considerably greater than the personal implications. The quality of a society’s culture is highly determined by the very small fraction of its population that is most exceptionally endowed. The growth of civilization, the development of written language and of mathematics, the great religious and philosophic insights, scientific discoveries, practical inventions, industrial developments, advancements in legal and political systems, and the world’s masterpieces of literature, architecture, music and painting, it seems safe to say, are attributable to a rare small proportion of the human population throughout history who undoubtedly possessed, in addition to other important qualities of talent, energy, and imagination, a high level of the essential mental ability measured by tests of intelligence. (Jensen 1980, p. 114)

To investigate this question empirically, Carl and Kirkegaard (2022) examined the benefit of the top 5% independent of the average national IQ level and found additional benefits beyond the benefit from the average IQ. This is fortunate, considering the most likely scenario is that elites adopt the technology more rapidly than the population at large. Government subsidies and low costs would ameliorate the issue of inequality. While addressing issues that arise due to inequality is a worthwhile goal, Effective Altruists should be sympathetic to the idea of increasing overall human flourishing, even if its benefits are unevenly distributed. The alternative is a world largely devoid of any technological or social progress.

VI. Conclusion

Cognitive ability is an extremely important variable at the individual and national level. Greatly increasing the national average IQ and smart fraction IQ would likely improve numerous economic and social outcomes dramatically. Various plausible pathways for exceptional levels of enhancement are imminent, but the genetic enhancement technology is drastically under-considered relative to its importance due in large part to a stigma that has overtaken any discussion of “eugenics.”

Although the focus of this essay has largely been ending global poverty, genetic engineering could also be used to allow humanity to address existential risks, improve economic growth, and eliminate animal suffering. This makes genetic enhancement technology extremely important, neglected, and tractable. Relative to its importance, genetic enhancement receives little discussion among Effective Altruists, making it a plausible candidate for a “Cause X.” There are many possible approaches that Effective Altruists could take given the above information and methods, and surely intelligent EAs will find other means. Here are some potential approaches:

Improve polygenic scores for cognitive ability. The accuracy of PGSs can be increased by improving statistical methods, increasing the size of genomic databases, and getting more ethnically diverse datasets. Improving the size of databases is likely a better approach than algorithmic improvements (Raben, 2023). Expanding access to genomic data may involve fighting political activism due to ideological resistance to studying IQ and genes (see Lee, 2022). Collecting datasets of high IQ individuals, similar to the Cognitive Genomics Project, may be especially informative. Furthermore, identify any positive or negative pleiotropic effects.

Inform the public about the benefits of genetic enhancement. Many suggest not discussing polygenic screening in an effort to evade regulation. One major issue is that if no one discusses this technology, parents will not know it is available. If advocates for genetic enhancement remain silent, critics may dominate the conversation with false information. Rebutting false and misleading claims is beneficial (e.g., Nathan Treff at an ASRM public discussion of PGT-P and Fleischman et al.’s rebuttal of Adam Rutherford). Furthermore, if the immense benefits are not known, then efforts to fund research into technology like in vitro gametogenesis and CRISPR-Cas9 may be drastically under-considered.

Lobby for legal changes to facilitate more genetic enhancement. In governments with restrictions on selection on the basis of cognitive traits, advocates for genetic enhancement should lobby for reproductive autonomy. Perhaps more importantly, efforts to provide employer and government-subsidized IVF and screening are immensely important. For example, China will soon offer free fertility treatment under its national healthcare plan. If members of governments understood the long-term benefits, they might be more eager to provide IVF and screening to reduce the economic burden of disability and disease, as well as boost economic productivity through increasing IQ. If IVF simply involved a blood draw, was free, and had extremely large mental and physical health benefits for children, we could imagine extremely high levels of adoption.

Fund research into in vitro gametogenesis (IVG). IVG would be an extremely important breakthrough for genetic enhancement. It would lift the bottleneck of the number of embryos and facilitate selection from massive batches as well as iterated embryo selection, which could have basically maximal returns. It would also make IVF a much easier and perhaps less expensive process. EA funding could be extremely important in accelerating its discovery.

Fund research into gene editing. Cost-effective multiplex genome editing with minimal to no unintended consequences would surpass the effectiveness of any selection method. Across various dimensions, such as health, happiness, and intelligence, people could be born who are exceptional in every regard.

Fund research into other genetic enhancement technology. Any method that could feasibly bring large returns should be investigated: cloning, chromosome selection, iterated meiotic selection, synthetic genome creation, etc. Any method not yet discovered may also prove beneficial.

Determine the returns to various selection procedures and their cost-effectiveness. Similar to the approach of Branwen (2016), we ought to try to consider the possible costs and benefits of technology while considering a huge number of factors. Furthermore, we should also investigate the potential long-term benefits at different levels of adoption. If returns are extremely large, perhaps a market could arise for parents to take out loans to pay for genetic enhancement that they or their children pay off later.

Investigate and discuss the impact of genetic enhancement more. It would be beneficial if more Effective Altruists were informed about this technology and its potential impact. The benefits of genetic engineering go beyond alleviating global poverty. We could create farm and wild animals that never suffer, human beings exceptionally resistant to disease, and geniuses who help us with existential risks, and we could combat the general decline in genotypic IQ that poses a threat to humanity’s future.

The implications of widespread genetic enhancement, especially for intelligence, are profound. While many may object that such a worldview on the causes of national differences and the importance of IQ is distasteful or that nefarious actors share my worldview, we cannot hide from the truth. While the relative importance of genes and IQ is often regarded as a dismal finding, certain scientific and technological advances would permit us to drastically improve humanity. We should be happy to learn this. If genetic enhancement could be an important means for improving human welfare, Effective Altruists should want to know. In order to do the most good, it is crucial to have a correct understanding of the true causes of good outcomes. We must face reality for a better tomorrow.

I can view an astonishing amount of publications for free through my university, but they haven’t opted to include this one, weird… So should I pay money to see this “Mankind Quarterly” publication?

When I googled it, I found that Mankind Quarterly includes among its founders Henry Garrett, an American psychologist who testified in favor of segregated schools during Brown v. Board of Education; Corrado Gini, who was president of the Italian Genetics and Eugenics Society in fascist Italy; and Otmar Freiherr von Verschuer, who was director of the Kaiser Wilhelm Institute of Anthropology, Human Heredity, and Eugenics in Nazi Germany. He was a member of the Nazi Party and the mentor of Josef Mengele, the physician at the Auschwitz concentration camp infamous for performing human experimentation on the prisoners during World War 2. Mengele provided Verschuer with human remains from Auschwitz to use in his research into eugenics.

It’s funded by the Pioneer Fund, which according to Wikipedia:

Something tells me it wouldn’t be very EA to give money to these people.

So what about the second source?

I can check Christainsen’s work since it’s in a reputable journal and thus available through my university. He himself says in the paper:

Cultural factors are harder to measure and thus get neglected in research thanks to the streetlight effect. Still, we might sample a subsection of more easily measurable cultural interventions like education and see which way they point. We can use the education index to compare the mentioned countries. Countries like the USA, UK and Japan score high on it (0.9, 0.948, 0.851 respectively) while countries like Qatar, the UAE and Kuwait score lower (0.659, 0.802, 0.638 respectively). That seems like a promising indication, but can education actually increase IQ?

You cited Ritchie in this post, but he and his colleagues also have a later meta-analysis showing that education can greatly increase intelligence:

Now you might worry that this is not “true intelligence/g-factor” and a “hollow” gain, but I fear that here we run into the issue that there’s no consensus on what the “true intelligence” actually is. It may be hollow according to your definition but not mine. Even if there were consensus, we might disagree about what IQ actually measures. The debate about what aspects of “true intelligence” IQ actually captures is summarized on Wikipedia as:

_______

Yeah, I really wouldn’t trust how that book picks its data. As stated in “A systematic literature review of the average IQ of sub-Saharan Africans”:

They’re not the only ones who find Lynn’s choice of data selection suspect. Wikipedia describes him as:

____

I suggest you remove the capital L typo, otherwise people might erroneously think Lynn had something to do with its discovery.

_______

That book has so many problems that instead of typing it all out I would like to direct people to this video which points out a lot of them. (It also goes over a lot of Lynn’s other scientific malpractices)

______

I don’t think anyone thinks the environment explains 100%, but given that it’s much larger and has many more variables it seems reasonable to assume it can explain more of it. Since we profess ourselves to be effective altruists I would also like to see a price comparison between the interventions. This post doesn’t really discuss how high the prices for “genetic interventions” are, while environmental interventions like giving iodine are really cheap. Giving iodine used to be one of GiveWell’s top charities:

Iodine deficiency causes an average drop of 13 IQ points, which means we can gain much more than the estimated 9 IQ points of embryo selection at a tiny fraction of the cost.

________

I think the real worry here is that the elites will use their (increased) power to ensure that the government doesn’t give subsidies to the poor so they can keep their relative power in society. A similar dynamic is already happening in education with the money for public schools vs private schools so I suspect this would also happen with other ‘intelligence-increasing interventions’.

_______

I would argue that’s a good thing. Like @titotal commented on the ‘most people endorse some form of “eugenics”’ post:

____

I do feel some amount of warped-mirror empathy for the fact that you clearly spent a lot of time writing a long post with lots of citations on a politically unpopular position that doesn’t get a lot of karma. A similar thing happened to me, albeit from the polar opposite side of the political spectrum, which is why part of me wanted to spend time giving you something I didn’t get, a rigorous reply. But another part of me remembers that the last time I spent time arguing IQ and genetics on this forum, a bunch of HBD-proponents brigaded me and I lost karma and voting power.

So I obviously did end up writing this comment because I’m an idiot, but I think I will leave it at that. Feel free to reply to this comment but I now feel exhausted and fear a back and forth will get me brigaded, sorry :/

Regarding Mankind Quarterly and the Pioneer Fund: The relationship between genes, IQ, race, and GDP is very controversial. Prestigious journals are hesitant to publish articles about these topics. Using the beliefs of the founding members in the 1930s to dismiss an article published in 2022 is an extremely weak heuristic. The US government funds a lot of research but it committed unethical acts in the name of eugenics. Sam Bankman-Fried, a fraudster, funded a lot of EA projects. If I linked to some research that was performed using FTX money, I would not consider it worthy of dismissal. Furthermore, bad people can fund and conduct good research. I cited a lot of more mainstream journals for less controversial claims. I don’t consider instrumental variables that strong of evidence, but it felt worth mentioning. I will email you the PDF if you are interested.

Regarding cultural factors: If there are national differences in genotypic IQ, then measures of quality of education and culture will be genetically confounded. I do not doubt that schooling increases scores on tests of mental ability, but the gains appear “hollow.” Hollow is a technical term meaning it is not increasing g. I am not concerned about what “true intelligence” is because “intelligence” is an ordinary term without a precise definition. We can call what IQ scores are trying to measure “GMA” and not care that it’s not “intelligence” but still care that GMA is correlated with good outcomes and we have a means of increasing GMA. The benefits of increases in general mental ability generalize to other areas (career, academic success, good life choices), whereas non-general gains will be limited. As an extreme example, it is obvious why giving children Raven’s Progressive Matrices is not going to make them drive better. But evidence suggests that having a higher IQ reduces the risk of traffic accidents.

Regarding Richard Lynn: I address this within the article. Until his death, Lynn and colleagues were updating the NIQ scores. Looking at the most recent version on ViewOnIQ from Becker, I see that Lynn has excluded all those samples for the Nigerian estimate and incorporated the Maqsud estimates. He has also included several other more recent estimates, but still arrived at a similar estimate. You can view the samples used and estimates here if you download the file. More importantly, focusing on Lynn is a mistake, as I mention in the article. Other less controversial researchers estimate “universal basic skills” or “harmonized learning outcomes” and produce estimates which correlate highly with the NIQ estimates. See the chart from Warne 2023. A side point, but Wikipedia is politically biased. I intentionally capitalized the L to give credit, as Richard Lynn’s discovery preceded Flynn’s first publication, although his discovery was itself preceded by Runquist.

On The Bell Curve: You say “That book has so many problems that instead of typing it all out I would like to direct people to this video which points out a lot of them.” I don’t plan on watching the 2 hour 39 minute video just to respond to you. At the time, a large number of claims (like the one I make) were not particularly controversial among intelligence researchers (see Gottfredson and APA response). I discuss this in the article. Furthermore, the more recent Rindermann et al. (2020) found in a survey among intelligence experts that many believed SES was substantially explained by intelligence (see in the article). I am also going to make a point about isolated demands for rigor. You are dismissive of some of the researchers and journals for lack of academic quality, but in response to a book co-authored by a Harvard psychologist, you give me a YouTube video with a pseudonymous guy with a skull avatar that is part of LeftTube or “Bread Tube”. I am not suggesting this means that I can merely dismiss anything that he’s saying, but I will admit that this feels like a double standard given the Mankind Quarterly critique.