You can give me anonymous feedback here: https://forms.gle/q65w9Nauaw5e7t2Z9

Charles He

Ok, thanks, this makes sense. (As a digression, yes, the software tag is misleading.)

Subscribing to the new subforums adds a tag to the user’s profile, see below.

This reads like a career/attribute tag.

I suspect people might read into this tag inappropriately, e.g. associating them with the skills/experiences/perspectives of being a SWE, when the person may not be a SWE at all or have any of these traits.

Also as a UX thing, getting a publicly viewable side effect of a subscription seems unexpected and out of the norm.

Your comment is really interesting for a lot of reasons. Just curious, did you click on my profile or try to figure out who I am?

It’s not really possible for me to communicate how much I don’t want my first comment to be true.

I’d be careful about this claim. I’m not an expert, but I also don’t see cited sources here.

I think scientific citation is good when done cluefully and in good faith. However, there are levels of knowledge that don’t interact well with casual citations in an online forum, and this is difficult to communicate. I think truth/expertise can be orthogonal to agreement with a given literature, because knowledge is often thin, experts are sometimes wrong, or the papers represent a surprisingly small number of experts with different viewpoints[1].

In a small, low-density farm with better ventilation and greater care invested in caring for or separating out sick animals, whatever virus is emitted by sick animals may be at a much lower concentration in the air. This would limit virus-to-human contact. By contrast, a high-density large farm puts a huge number of viral bioreactors (animals) in a cramped facility and exposes the same small number of people to that high-viral-concentration environment over and over again.

I think this is a good argument to begin a conversation with an expert.

I’m not sure this argument is informed enough about current intensive farming practices to be a useful update to my comment.

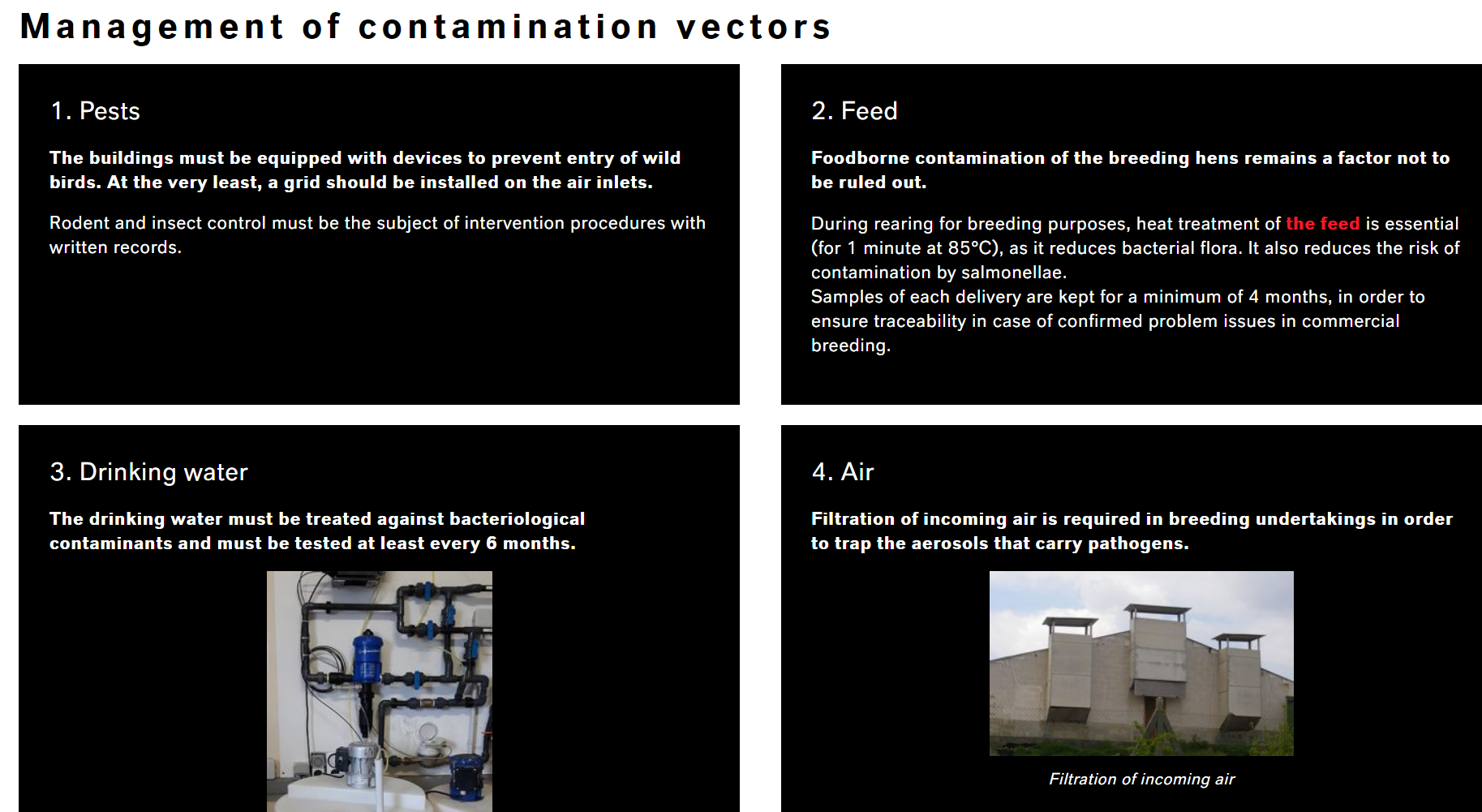

For one, ventilation systems are substantial in farms, and get more sophisticated in more intensive farming (until the operators mess up or turn them off, cruelly cooking the animals to death).

More generally, I think it’s good to try to communicate how hostile, artificial and isolated from the outside world intensive farming environments are.

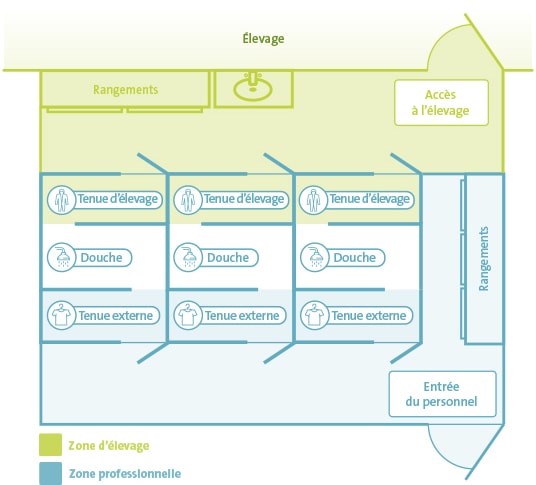

Workers often literally wear respirators:

Together with the respirator, farm workers often wear full-body coveralls and gloves.

This produces a suit that should look familiar to people working in biosecurity.

I can keep going—you can get showers, change stations, positive/negative pressure airflow, and maybe literally airlocks.

Here is a representative equipment manufacturer.

https://www.theseo-biosecurity.com/en/our-expert-appraisals/breeders/

It is imperative to ensure that the surroundings of the breeding building(s) are protected and that their access is forbidden to any persons or animals. It is therefore essential to install a mesh fence to enclose these boundaries. The passage through this fence must be secured by a gate for personnel as well as for vehicles.

Note how alien and hostile these environments are: the suits and preparations resemble a biohazard lab.

I think you can see how large, intensive farms allow this to happen.

I think the above gives a good sense of the very issue in the top comment:

Basically, once you have an intensive farm, you can put capex into intensifying and concentrating and greatly increasing suffering in one place, and plausibly dissolve a lot of biosecurity concerns. That’s what factory farms do well.

I’m not super interested in elaborating because I’m hoping someone shuts this down and proves this wrong. Help? Rethink Priorities?

- ^

I actually do have the cites. I distrust the EA forum to handle scientific expertise and I’m really hoping not to make the case or codify this comment—maybe someone else might make the counter case decisively.

No wet market, no factory farms, better regulation and protocol for labs → No source of zoonotic viruses

Extremely unfortunately from the perspective of animal welfare, factory farming is possibly a net benefit for disease prevention.

I think this is mainly because this enormously reduces human-animal interactions. So zoonotic diseases have a lower chance to both develop and spread to humans compared to open air/backyard farms.

Diseases can be contained in the unnatural environments inside factory farms[1].

Note that this is not because factory farms are clean or healthy.

Factory farms are both disgusting and chronic sources of deadly disease. Deadly bird flu is literally happening across the US constantly.

In a deep sense, the animals tend to be very unhealthy for a number of reasons, including genetics; e.g. they would be much more likely to perish from disease and other issues in an outdoor backyard farm.

If this isn’t true, I would really like to be updated.

This truth is very inconvenient for farm animal welfare people. But it is good to say the truth and inform people working in other areas.

- ^

Often by, well, sort of torturing the animals to death by heat.

Are you saying you meditate just 10 minutes a week and see a causal large effect?

That seems impressive but also highly unusual, compared with all practices and experiences with meditation among the practitioners I’ve seen.

Ok, writing quickly. Starting on the “object level about the beliefs”:

It seems like sentiment or buzz, like the tweets about GPT-4 mentioned in the other comment, can be found. That gives a different view than the silence mentioned in your post. It seems it could be found by searching Twitter or other social media.

It seems like the content in my comment (e.g. I’ve suggested that there are various projects that OpenAI has under way and these compete for attention/PR effort) is sort of publicly apparent, low-hanging speculation.

Let’s say that OpenAI was actually unusually silent on GPT models and let’s also accept many views of AI safety in EA. It’s unclear why P(very extreme progress, more than 1 year with no OpenAI GPT release) would be large given some sort of extreme progress.

In the most terrible scenarios, we would be experiencing some sort of hostile situation already.

In other scenarios, OpenAI would aptly “simulate” a normally functioning OpenAI, e.g. releasing an incremental new model.

In my view P(very extreme progress | more than 1 year with no new GPT release) is low because many other underlying states of the world would produce silence that is unrelated to some extreme breakthrough, e.g. pivot, management change, general dysfunction, etc.
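A rough way to sketch this with Bayes' rule, writing E for "very extreme progress" and S for "more than 1 year with no new GPT release". The numbers in the example are made up purely for illustration (they are assumptions, not estimates from anyone):

$$
P(E \mid S) = \frac{P(S \mid E)\,P(E)}{P(S \mid E)\,P(E) + P(S \mid \neg E)\,P(\neg E)}
$$

For example, with $P(E) = 0.05$, $P(S \mid E) = 0.5$ and $P(S \mid \neg E) = 0.3$:

$$
P(E \mid S) = \frac{0.5 \times 0.05}{0.5 \times 0.05 + 0.3 \times 0.95} = \frac{0.025}{0.31} \approx 0.08
$$

The point is only that when silence is also fairly likely without a breakthrough (pivot, management change, dysfunction, etc.), observing silence moves the estimate only a little above the prior.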

It seems like it’s a pretty specific conjunction of beliefs where there is some sort of extreme development and:

OpenAI is sort of sitting around, looking at their baby AI, but not producing any projects or other work.

They are stunned into silence and this disrupts their PR

This delay is lasting years

No one has leaked anything about it
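As a sketch of why a conjunction like this ends up unlikely, write E for the extreme development and A, B, C, D for the four conditions above (these labels are hypothetical, and the numbers are invented for illustration only):

$$
P(E, A, B, C, D) = P(E)\,P(A \mid E)\,P(B \mid E, A)\,P(C \mid E, A, B)\,P(D \mid E, A, B, C)
$$

Even if each conditional factor were as generous as 0.5, with $P(E) = 0.05$ this would give $0.05 \times 0.5^4 = 0.003125$, i.e. about 0.3%.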

I tried to stay sort of “object level” above, because the above sort of lays out the base level reasoning which you can find flaws in or contrast to my own.

From a meta sense, it’s not just a specific conjunction of beliefs, but it’s very hard to disprove a scenario where people are orchestrating silence about something critical—generally this seems like a situation where I want to make sure I have considered other viewpoints.

“Become silent about it”

If you mean online media or the general vibe, one explanation is that DALL-E and diffusion models have been huge, taken up a lot of attention and OpenAI might have focused on that.

Like, very sophisticated tech leaders have gotten interested in these models, even when IMO language models have overwhelmingly larger business relevance for their products.

Actually, Copilot/codex have taken off and might be big. IMO this is underrated.

This might involve a collab (with MS), and with collabs it is slower, harder, and less rewarding to coordinate a buzzy media event.

As a digression (or maybe not a digression), GPT-3 hasn’t had the sort of massive tractability you (and others) might have expected, even after 2 years, and this reduces the incentives for further investment in a 10x model.

Related to this, several other companies have spawned their own language models of similar/larger size. So this might somewhat reduce the attraction to winning on size or even reduce the returns to climbing the hill of dominating LLMs in some broader sense.

The instruct models and other sophistication are less legible and provide a larger barrier to entry (and produce other sorts of value), whereas parameter size / compute spend is not a moat.

GPT-3 has been somewhat uneconomic to run, for the larger models. Making a 10x model would make this even worse.

There is uh, deeper stuff. Umm, maybe more on this later.

Like any other buzz cycle, the first iteration is going to get a lot of buzz and appear in a lot of outlets. Articles mentioning GPT-3 haven’t even stopped coming out.

openAI has made very extreme progress in the last years and decided to not publicise it for strategic reasons (e.g. to prevent from increasing the “race to AGI”)

It’s good and valid you write this, but this is very likely different from the truth. For one thing, in multiple senses/channels, OpenAI isn’t totally locked down, and extreme sorts of developments would come out. Secondly, I think we’ve been truthfully told what they are working on (in the other comments), e.g. training refinements/sophistication approach to LLM.

Also, as a meta comment, in my opinion, it seems possible that the beliefs that formed this valid question may come from an information environment that is not identical to the ideal information environment that would optimally guide future decisions related to AI or AI safety.

As a digression, following up on this:

“greater than X”, where “X” is a level of suffering above what is acceptable for “content warning” or social discussion, including on the EA forum.

From the triple perspectives of basic logic/social reality/actual practice, “understanding suffering”, especially past the bounds of “X”, seems to me to be an obvious requirement for EA activity; i.e. no matter what your cause area or activity, it’s important. Certainly if someone is a leader in EA and doesn’t understand it, that person is not an EA.

Also,

It seems implausible/dubious for people to talk about cause prioritization or pretty much anything important, if they are censored or grow up in an environment that doesn’t talk about it.

Solving this is hard, but people that grow up online and on “meta EA” seem particularly disfavored. I feel bad for them.

Several classes of people/cause areas consistently have much more understanding/awareness of suffering. From the perspective of leadership and governance, this seems to have implications for the future of EA (e.g. if you think truth, rightness, ethics, virtue should dominate governance more than money or shininess).

There’s a lot going on here but quick thoughts:

Many creatures in both classes of farm animals and lab testing animals probably suffer “greater than X”, where “X” is a level of suffering above what is acceptable for “content warning” or social discussion, including on the EA forum.

Much of the suffering in farms is probably due to predictable neglect (e.g. running out of air, suffocated and crushed or cannibalized alive).

Some animals in labs suffer much less (e.g. checking that there are no long term side effects) and live in clinical environments where neglect is far lower.

Farmed animals usually get killed in a way that’s designed to be quick and minimize suffering.

Unfortunately, this isn’t even close to true. As one example, see ventilator shutdowns.

This kind of suffering is normalized, like literally the American Vet Association is struggling to try to remove it from “not recommended”.

Unfortunately, this form of killing is not at all the limit of suffering from killing on factory farms, and in turn, killing is not even the main source of suffering in factory farms.

*Opens “top recommended organizations”*

*Pauses*

*Breathes deeply*

A bit to unpack, but yeah, forecasting is beating out global health and wellbeing, for top priority.

The smells are large, e.g. shipping the org chart, etc.

https://80000hours.org/problem-profiles/

Animal welfare is not even in the first or second tier.

Like, literally nanotech is beating it out, as well as “malevolent actors”, and “improving governance of public goods”.

Ah, maybe the above is an async indexing thing? (e.g. async/cron tasks update the indexes every 24 hours and the above example is too recent to be indexed)

Amber Dawn has her posts listed:

Co-authors (second authors and later?) of posts don’t appear to have their posts listed in their profile?

Sidhu has no post listed:

This seems mild, but could be bad if someone likes to co-author a lot.

For onlookers, just to comment on one small piece of this, in early Oct 2022, SBF gave signals of updating/backed off on his spend (“billion dollar donation figure”).

This was picked up by 10-20 outlets, suggesting this was an active signal from SBF.

I appreciate the thoughtful consideration and I agree with you. I think you are right, including your points like the medical establishment often being wrong and slow. I’m less certain, but it’s possible the DSM (and maybe a lot of psychiatry) is a mess.

Finally, the opioid comparison strikes me as strained.

Yes, this should be deleted. Maybe I was trying to gesture at creating subcultures that normalize drug use inappropriately, and I was using “opioid” as an example in support of this, but this might be wrong and, if anything, supports your points equally or better.

The main difference is my concern about EA having subpopulations/subcultures with different resources.

I support the OP, but I’m worried she’s an outlier, being in a place where there is a huge amount of support, creating agency for her exploration (read the 80,000 hours CEO’s story here).

I don’t want to minimize her journey; such personal work and progress should be encouraged and written up more, because it’s great!

But, partially because this is impractical for many, I’m worried that something will get lost in translation, or some bad views might piggyback on this, e.g. normalizing low-fidelity beliefs about using drugs (that are Schedule II stimulants!).

Ok there’s been a couple comments on “don’t go to your doctor”, “drug hack”.

Above:

My two cents: Vyvance / Adderall is worth a try for depression… [Low side effects. Thankfully it’s completely in and out of your system in 24 hours.]

Perpetuating the illusion that mental health professionals usually know what they’re doing can be harmfwl

Basically, I’m pretty sure this is wrong/dangerous.

Lisdexamfetamine is indeed being explored to treat depression, and some antidepressants like bupropion have a very stimulant sort of flavor.

But applying these theories directly as a sign to take stimulants is very very simplistic.

Also, there’s a clownish level of abuse of medications in US/western society (see opioids). To be gearsy, one issue is that stimulants bring you up, but then you almost always have a crash or a low phase, and it’s easy to see how this low phase could be very very bad for some people. These substances also have complicated relationships with other disorders/issues. Also, these side effects definitely aren’t limited to the drug “being in and out of your system in 24 hours”.

I don’t have a long list of references for this or time to provide this, but you can google to explore the standard issues and side effects of the stimulant drugs.

Just don’t tell your doctor that’s what you want it for

especially in this crowd, because most people here can do better by trusting their own cursory research.

RE: This and other “anti doctor stuff”.

There’s a lot to write here, but ultimately, these comments and stuff shouldn’t be on a major internet forum, definitely not the EA forum.

The following gives one pretty good argument:

Many EAs are privileged. So for this audience, these people should just see a doctor/psychiatrist to get data points, as well as their trusted network for what works.

For the people who aren’t able to see a doctor, and are under resourced, that’s bad and unfortunate, especially since on average, they have further challenges, but on average it’s likely that taking internet forum drug advice will make things worse, especially given the coincidence of further issues.

So the above is sort of a logical case that explains why using an internet forum for “drug hacking” (especially for mood/stimulants/depression) is bad.

This is more complicated (someone I know has a relationship with doctors where they sort of punch through and get whatever script they want, which is exactly in spirit of the above comment; doctors, psychologists are very mixed in quality; being an outlier makes all of this worse).

It’s good to acknowledge the facts above, but unfortunately, properly situating this all without causing an “EA forum style” cascade that sort of makes it worse is hard. In addition to time, it has a sort of “I don’t have enough crayons” or “I can’t count that low” flavor.

This was one of the best written posts on the forum. It’s clearly motivated, expresses the issues, the context and your uncertainty and confidence well.

I think you have good answers already.

Here are some other considerations (that are mostly general):

Fit is important. How well you feel in the lab matters a lot. If you feel like the people are overbearing or if you have to fight, that’s bad. This can be hard to figure out and worth thinking about. There is a honeymoon period, and small things can play a big role 1-2 years later. Many people become miserable.

(For an MA, it might matter less) but the actual placement record of the PI is pretty important. This is not just for the usual reason of academic status; I think it also gives an important sense of how good the work/lab is.

Especially for an MA, the PI actually might not be very hands on or determine your experience (especially for high status PIs). Often it’s actually the post-docs (lab techs sometimes) that are extremely important for students and can make you successful, or make your life a living hell.

Note that people leave, and a small trap is joining a lab where the talent (post-docs) then take new positions, which changes everything for you.

Asking around for reputation is important.

It’s unusual and I’m unsure how likely it is, but it’s possible you might be able to swing some sort of EA mentorship or grant, which might make your second choice more interesting if you can point it toward pandemics.

Overall (maybe because of my bias described below), I generally distrust labs where you are fitted in a slot to solve a problem for a PI. I recommend a lab where you can gain general programming skills or have freedom to network and express your abilities.

More background:

I’m against/biased against a lot of “hard science” grad work, because (maybe outside of a small number of labs/PIs) I think you often just serve as cheap labor in work that doesn’t actually involve a lot of intellectual activity (this is distinct from the other problem, that a lot of research activity is winning games in academia).

This might be different and not apply to bioinformatics or something exposed to a lot of programming.

Below is one case where EA Forum dark mode might be failing.

Might be a minor CSS/“missed one case” sort of thing.

https://forum.effectivealtruism.org/posts/A5mh4DJaLeCDxapJG/ben_west-s-shortform