I’m not sure if this hits what you mean by ‘being ineffective to be effective’, but you may be interested in Paul Graham’s ‘Bus ticket theory of genius’.

Will Aldred

The moderation team is issuing @Eugenics-Adjacent a 6-month ban for flamebait and trolling.

I’ll note that Eugenics-Adjacent’s posts and comments have been mostly about pushing against what they see as EA groupthink. In banning them, I do feel a twinge of “huh, I hope I’m not making the Forum more like an echo chamber.” However, there are tradeoffs at play. “Overrun by flamebait and trolling” seems to be the default end state for most internet spaces: the Forum moderation team is committed to fighting against this default.

All in all, we think the ratio of “good” EA criticism to more-heat-than-light criticism in Eugenics-Adjacent’s contributions is far too low. Additionally, at −220 karma (at the time of writing), Eugenics-Adjacent is one of the most downvoted users of all time—we take this as a clear indication that other users are finding their contributions unhelpful. If Eugenics-Adjacent returns to the Forum, we’ll expect to see significant improvement. I expect we’ll ban them indefinitely if anything like the above continues.

As a reminder, a ban applies to the person behind the account, not just to the particular account.

If anyone has questions or concerns, feel free to reach out or reply in this thread. If you think we’ve made a mistake, you can appeal.

I’m also announcing this year’s first debate week! We’ll be discussing whether, on the margin, we should put more effort into reducing the chances of human extinction or increasing the value of futures where we survive.

Nice! A couple of thoughts:

1. In addition to soliciting new posts for the debate week, consider ‘classic repost’-ing relevant old posts, especially ones that haven’t been discussed on the Forum before?

Tomasik’s ‘Risks of astronomical future suffering’ comes to mind, as well as Assadi’s ‘Will humanity choose its future?’ and Anthis’s ‘The future might not be so great’.[1]

2. Alongside the debate statement voting, I think it could be very cool to let users create and post their own distribution over {great, fine, non-, bad, hellish} futures,[2] in line with the following diagram. You could then aggregate[3] users’ distributions and display a wisdom-of-the-EA-Forum-crowd prediction for the future:

Source: ‘Beyond Maxipok’ (Cotton-Barratt, 2024)

[1] This is, of course, not an endorsement of Anthis’s former conduct.

[2] On the user end, this would just mean entering five percentages (which add up to 100).

[3] Although a mean aggregation would be simplest, a better aggregation (in my view) would be to take the median user’s percentage within each of {great, fine, non-, bad, hellish}, and then normalize so that the five aggregate percentages add up to 100. (And you could optionally weight users’ distributions/predictions in line with their karma / strong-upvote power.)

(For context, the Metaculus forecasting platform’s ‘community prediction’ aggregation is a weighted median.)
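To make the median-then-normalize idea concrete, here is a minimal Python sketch. The function names, the `users` data, and the dictionary layout are all made up for illustration; this is not how the Forum or Metaculus actually implements anything, and the optional karma weighting is left out.

```python
from statistics import median

# The five outcome buckets from the diagram discussed above.
CATEGORIES = ["great", "fine", "non-", "bad", "hellish"]


def aggregate_median(distributions):
    """Take the median percentage within each category, then rescale to sum to 100."""
    medians = {c: median(d[c] for d in distributions) for c in CATEGORIES}
    total = sum(medians.values())
    if total == 0:
        raise ValueError("all category medians are zero; cannot normalize")
    return {c: 100 * m / total for c, m in medians.items()}


def aggregate_mean(distributions):
    """Simplest alternative: average each category across users."""
    n = len(distributions)
    return {c: sum(d[c] for d in distributions) / n for c in CATEGORIES}


# Hypothetical user submissions (each sums to 100).
users = [
    {"great": 10, "fine": 30, "non-": 40, "bad": 15, "hellish": 5},
    {"great": 20, "fine": 20, "non-": 30, "bad": 20, "hellish": 10},
    {"great": 60, "fine": 20, "non-": 10, "bad": 5, "hellish": 5},
]

if __name__ == "__main__":
    for name, value in aggregate_median(users).items():
        print(f"{name}: {value:.1f}%")
```

In this toy data the per-category medians sum to 90 rather than 100, which is why the normalization step is needed. Note also that the one very optimistic user pulls the mean for "great" up to 30, while the median stays at 20 before rescaling.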


+1. I appreciated @RobertM’s articulation of this problem for animal welfare in particular:

I think the interventions for ensuring that animal welfare is good after we hit transformative AI probably look very different from interventions in the pretty small slice of worlds where the world looks very boring in a few decades.

…

If we achieve transformative AI and then don’t all die (because we solved alignment), then I don’t think the world will continue to have an “agricultural industry” in any meaningful sense (or, really, any other traditional industry; strong nanotech seems like it ought to let you solve for nearly everything else). Even if the economics and sociology work out such that some people will want to continue farming real animals instead of enjoying the much cheaper cultured meat of vastly superior quality, there will be approximately nobody interested in ensuring those animals are suffering, and the cost for ensuring that they don’t suffer will be trivial.

[...] if you think it’s at all plausible that we achieve TAI in a way that locks in reflectively-unendorsed values which lead to huge quantities of animal suffering, that seems like it ought to dominate effectively all other considerations in terms of interventions w.r.t. future animal welfare.

I’ve actually tried asking a few animal welfare folks for their takes here, but I’ve yet to hear anything back that sounded compelling (to me). (If anyone reading this has an argument for why ‘standard’ animal welfare interventions are robust to the above, then I’d love to hear it!)

Agree. Although, while the Events dashboard isn’t up to date, I notice that the EAG team released the following table in a post last month, which does have complete 2024 data:

EAG applicant numbers were down 42% from 2022 to 2024,[1] which is a comparable decline to that in monthly Forum users (down 35% from November 2022’s peak to November 2024).[2]

To me, this is evidence that the dropping numbers are driven by changes in the larger zeitgeist rather than by any particular thing the Events or Online team is doing (as @Jason surmises in his comment above).

Bug report (although this could very well be me being incompetent!):

The new @mention interface doesn’t appear to take users’ karma into account when deciding which users to surface. This has the effect of showing me a bunch of users with 0 karma, none of whom are the user I’m trying to tag.[1] (I think the old interface showed higher-karma users higher up?)

More importantly, I’m still shown the wrong users even when I type in the full username of the person I’m trying to tag, in this case Jason. [Edit: I’ve now tried @ing some other users, and I’ve found that most of the time there isn’t this problem. It looks like the problem occurs for users with a single-word username that’s a common(-ish) name, e.g., Jason, geoffrey, Joseph.] And there doesn’t appear to be a way to ‘scroll down’ to find the right user [when there is this problem],[2] which means that I’m unable to tag them.

(Sorry that this is a pretty critical comment. I appreciate the work you do :) )

OP has provided very mixed messages around AI safety. They’ve provided surprisingly little funding / support for technical AI safety in the last few years (perhaps 1 full-time grantmaker?), but they have seemed to provide more support for AI safety community building / recruiting

Yeah, I find myself very confused by this state of affairs. Hundreds of people are being funneled through the AI safety community-building pipeline, but there’s little funding for them to work on things once they come out the other side.[1]

As well as being suboptimal from the viewpoint of preventing existential catastrophe, this also just seems kind of common-sense unethical. Like, all these people (most of whom are bright-eyed youngsters) are being told that they can contribute, if only they skill up, and then they later find out that that’s not the case.

[1] These community-building graduates can, of course, try going the non-philanthropic route, i.e., apply to AGI companies or government institutes. But there are major gaps in what those organizations are working on, in my view, and they also can’t absorb so many people.


While not a study per se, I found the Huberman Lab podcast episode ‘How Smartphones & Social Media Impact Mental Health’ very informative. (It’s two and a half hours long, mostly about children and teenagers, and references the study(ies) it draws from, IIRC.)

For previous work, I point you to @NunoSempere’s ‘Shallow evaluations of longtermist organizations,’ if you haven’t seen it already. (While Nuño didn’t focus on AI safety orgs specifically, I thought the post was excellent, and I imagine that the evaluation methods/approaches used can be learned from and applied to AI safety orgs.)

I hope in the future there will be multiple GV-scale funders for AI GCR work, with different strengths, strategies, and comparative advantages

(Fwiw, the Metaculus crowd prediction on the question ‘Will there be another donor on the scale of 2020 Good Ventures in the Effective Altruist space in 2026?’ currently sits at 43%.)

Epistemic status: strong opinions, lightly held

I remember a time when an org was criticized, and a board member commented defending the org. But the board member was factually wrong about at least one claim, and the org then needed to walk back wrong information. It would have been clearer and less embarrassing for everyone if they’d all waited a day or two to get on the same page and write a response with the correct facts.

I guess it depends on the specifics of the situation, but, to me, the case described, of a board member making one or two incorrect claims (in a comment that presumably also had a bunch of accurate and helpful content) that they needed to walk back sounds… not that bad? Like, it seems only marginally worse than their comment being fully accurate the first time round, and far better than them never writing a comment at all. (I guess the exception to this is if the incorrect claims had legal ramifications that couldn’t be undone. But I don’t think that’s true of the case you refer to?)

A downside is that if an organization isn’t prioritizing back-and-forth with the community, of course there will be more mystery and more speculations that are inaccurate but go uncorrected. That’s frustrating, but it’s a standard way that many organizations operate, both in EA and in other spaces.

I don’t think the fact that this is a standard way for orgs to act in the wider world says much about whether this should be the way EA orgs act. In the wider world, an org’s purpose is to make money for its shareholders: the org has no ‘teammates’ outside of itself; no-one really expects the org to try hard to communicate what it is doing (except insofar as communicating well is tied to profit); no-one really expects the org to care about negative externalities. Moreover, withholding information can often give an org a competitive advantage over rivals.

Within the EA community, however, there is a shared sense that we are all on the same team (I hope): there is a reasonable expectation for cooperation; there is a reasonable expectation that orgs will take into account externalities on the community when deciding how to act. For example, if communicating some aspect of EA org X’s strategy would take half a day of staff time, I would hope that the relevant decision-maker at org X takes into account not only the cost/benefit to org X of whether or not to communicate, but also the cost/benefit to the wider community. If half a day of staff time helps others in the community better understand org X’s thinking,[1] such that, in expectation, more than half a day of (quality-adjusted) productive time is saved (through, e.g., community members making better decisions about what to work on), then I would hope that org X chooses to communicate.

When I see public comments about the inner workings of an organization by people who don’t work there, I often also hear other people who know more about the org privately say “That’s not true.” But they have other things to do with their workday than write a correction to a comment on the Forum or LessWrong, get it checked by their org’s communications staff, and then follow whatever discussion comes from it.

I would personally feel a lot better about a community where employees aren’t policed by their org on what they can and cannot say. (This point has been debated before—see saulius and Habryka vs. the Rethink Priorities leadership.) I think such policing leads to chilling effects that make everyone in the community less sane and less able to form accurate models of the world. Going back to your example, if there was no requirement on someone to get their EAF/LW comment checked by their org’s communications staff, then that would significantly lower the time and effort barrier to publishing such comments, and then the whole argument around such comments being too time-consuming to publish becomes much weaker.

All this to say: I think you’re directionally correct with your closing bullet points. I think it’s good to remind people of alternative hypotheses. However, I push back on the notion that we must just accept the current situation (in which at least one major EA org has very little back-and-forth with the community)[2]. I believe that with better norms, we wouldn’t have to put as much weight on bullets 2 and 3, and we’d all be stronger for it.

Open Phil has seemingly moved away from funding ‘frontier of weirdness’-type projects and cause areas; I therefore think a hole has opened up that EAIF is well-placed to fill. In particular, I think an FHI 2.0 of some sort (perhaps starting small and scaling up if it’s going well) could be hugely valuable, and that finding a leader for this new org could fit in with your ‘running specific application rounds to fund people to work on [particularly valuable projects].’

My sense is that an FHI 2.0 grant would align well with EAIF’s scope. Quoting from your announcement post for your new scope:

Examples of projects that I (Caleb) would be excited for this fund [EAIF] to support

A program that puts particularly thoughtful researchers who want to investigate speculative but potentially important considerations (like acausal trade and ethics of digital minds) in the same physical space and gives them stipends—ideally with mentorship and potentially an emphasis on collaboration.

…

Foundational research into big, if true, areas that aren’t currently receiving much attention (e.g. post-AGI governance, ECL, wild animal suffering, suffering of current AI systems).

Having said this, I imagine that you saw Habryka’s ‘FHI of the West’ proposal from six months ago. The fact that that has not already been funded, and that talk around it has died down, makes me wonder if you have already ruled out funding such a project. (If so, I’d be curious as to why, though of course no obligation on you to explain yourself.)

Thanks for clarifying!

Be useful for research on how to produce intent-aligned systems

Just checking: Do you believe this because you see the intent alignment problem as being in the class of “complex questions which ultimately have empirical answers, where it’s out of reach to test them empirically, but one may get better predictions from finding clear frameworks for thinking about them,” alongside, say, high energy physics?

For a variety of reasons it seems pretty unlikely to me that we manage to robustly solve alignment of superintelligent AIs while pointed in “wrong” directions; that sort of philosophical unsophistication is why I’m pessimistic on our odds of success.

This is an aside, but I’d be very interested to hear you expand on your reasons, if you have time. (I’m currently on a journey of trying to better understand how alignment relates to philosophical competence; see thread here.)

(Possibly worth clarifying up front: by “alignment,” do you mean “intent alignment,” as defined by Christiano, or do you mean something broader?)

Hmm, interesting.

I’m realizing now that I might be more confused about this topic than I thought I was, so to backtrack for just a minute: it sounds like you see weak philosophical competence as being part of intent alignment, is that correct? If so, are you using “intent alignment” in the same way as in the Christiano definition? My understanding was that intent alignment means “the AI is trying to do what present-me wants it to do.” To me, therefore, this business of the AI being able to recognize whether its actions would be approved by idealized-me (or just better-informed-me) falls outside the definition of intent alignment.

(Looking through that Christiano post again, I see a couple of statements that seem to support what I’ve just said,[1] but also one that arguably goes the other way.[2])

Now, addressing your most recent comment:

Okay, just to make sure that I’ve understood you, you are defining weak philosophical competence as “competence at reasoning about complex questions [in any domain] which ultimately have empirical answers, where it’s out of reach to test them empirically, but where one may get better predictions from finding clear frameworks for thinking about them,” right? Would you agree that the “important” part of weak philosophical competence is whether the system would do things an informed version of you, or humans at large, would ultimately regard as terrible (as opposed to how competent the system is at high energy physics, consciousness science, etc.)?

If a system is competent at reasoning about complex questions across a bunch of domains, then I think I’m on board with seeing that as evidence that the system is competent at the important part of weak philosophical competence, assuming that it’s already intent aligned.[3] However, I’m struggling to see why this would help with intent alignment itself, according to the Christiano definition. (If one includes weak philosophical competence within one’s definition of intent alignment—as I think you are doing(?)—then I can see why it helps. However, I think this would be a non-standard usage of “intent alignment.” I also don’t think that most folks working on AI alignment see weak philosophical competence as part of alignment. (My last point is based mostly on my experience talking to AI alignment researchers, but also on seeing leaders of the field write things like this.))

A couple of closing thoughts:

I already thought that strong philosophical competence was extremely neglected, but I now also think that weak philosophical competence is very neglected. It seems to me that if weak philosophical competence is not solved at the same time as intent alignment (in the Christiano sense),[4] then things could go badly, fast. (Perhaps this is why you want to include weak philosophical competence within the intent alignment problem?)

The important part of weak philosophical competence seems closely related to Wei Dai’s “human safety problems”.

(Of course, no obligation on you to spend your time replying to me, but I’d greatly appreciate it if you do!)

[1] They could [...] be wrong [about; sic] what H wants at a particular moment in time.

They may not know everything about the world, and so fail to recognize that an action has a particular bad side effect.

They may not know everything about H’s preferences, and so fail to recognize that a particular side effect is bad.

…

I don’t have a strong view about whether “alignment” should refer to this problem or to something different. I do think that some term needs to refer to this problem, to separate it from other problems like “understanding what humans want,” “solving philosophy,” etc.

(“Understanding what humans want” sounds quite a lot like weak philosophical competence, as defined earlier in this thread, while “solving philosophy” sounds a lot like strong philosophical competence.)

[2] An aligned AI would also be trying to do what H wants with respect to clarifying H’s preferences.

(It’s unclear whether this just refers to clarifying present-H’s preferences, or if it extends to making present-H’s preferences be closer to idealized-H’s.)

[3] If the system is not intent aligned, then I think this would still be evidence that the system understands what an informed version of me would ultimately regard as terrible vs. not terrible. But, in this case, I don’t think the system will use what it understands to try to do the non-terrible things.

[4] Insofar as a solved vs. not solved framing even makes sense. Karnofsky (2022; fn. 4) argues against this framing.

Thanks for expanding! This is the first time I’ve seen this strong vs. weak distinction used—seems like a useful ontology.[1]

Minor: When I read your definition of weak philosophical competence,[2] high energy physics and consciousness science came to mind as fields that fit the definition (given present technology levels). However, this seems outside the spirit of “weak philosophical competence”: an AI that’s superhuman in the aforementioned fields could still fail big time with respect to “would this AI do something an informed version of me / those humans would ultimately regard as terrible?” Nonetheless, I’ve not been able to think up a better ontology myself (in my 5 mins of trying), and I don’t expect this definitional matter will cause problems in practice.

[1] For the benefit of any readers: Strong philosophical competence is importantly different to weak philosophical competence, as defined.

The latter feeds into intent alignment, while the former is an additional problem beyond intent alignment. [Edit: I now think this is not so clear-cut. See the ensuing thread for more.]

[2] “Let weak philosophical competence mean competence at reasoning about complex questions which ultimately have empirical answers, where it’s out of reach to test them empirically, but one may get better predictions from finding clear frameworks for thinking about them.”


By the time systems approach strong superintelligence, they are likely to have philosophical competence in some sense.

It’s interesting to me that you think this; I’d be very keen to hear your reasoning (or for you to point me to any existing writings that fit your view).

For what it’s worth, I’m at maybe 30 or 40% that superintelligence will be philosophically competent by default (i.e., without its developers trying hard to differentially imbue it with this competence), conditional on successful intent alignment, where I’m roughly defining “philosophically competent” as “wouldn’t cause existential catastrophe through philosophical incompetence.” I believe this mostly because I find @Wei Dai’s writings compelling, and partly because of some thinking I’ve done myself on the matter. OpenAI’s o1 announcement post, for example, indicates that o1—the current #1 LLM, by most measures—performs far better in domains that have clear right/wrong answers (e.g., calculus and chemistry) than in domains where this is not the case (e.g., free-response writing[1]).[2] Philosophy, being interminable debate, is perhaps the ultimate “no clear right/wrong answers” domain (to non-realists, at least): for this reason, plus a few others (which are largely covered in Dai’s writings), I’m struggling to see why AIs wouldn’t be differentially bad at philosophy in the lead-up to superintelligence.

Also, for what it’s worth, the current community prediction on the Metaculus question “Five years after AGI, will AI philosophical competence be solved?” is down at 27%.[3] (Although, given how out of distribution this question is with respect to most Metaculus questions, the community prediction here should be taken with a lump of salt.)

(It’s possible that your “in some sense” qualifier is what’s driving our apparent disagreement, and that we don’t actually disagree by much.)

[2] On this, AI Explained (8:01–8:34) says:

And there is another hurdle that would follow, if you agree with this analysis [of why o1’s capabilities are what they are, across the board]: It’s not just a lack of training data. What about domains that have plenty of training data, but no clearly correct or incorrect answers? Then you would have no way of sifting through all of those chains of thought, and fine-tuning on the correct ones. Compared to the original GPT-4o in domains with correct and incorrect answers, you can see the performance boost. With harder-to-distinguish correct or incorrect answers: much less of a boost [in performance]. In fact, a regress in personal writing.

[3] Note: Metaculus forecasters, for the most part, think that superintelligence will come within five years of AGI. (See here my previous commentary on this, which goes into more detail.)

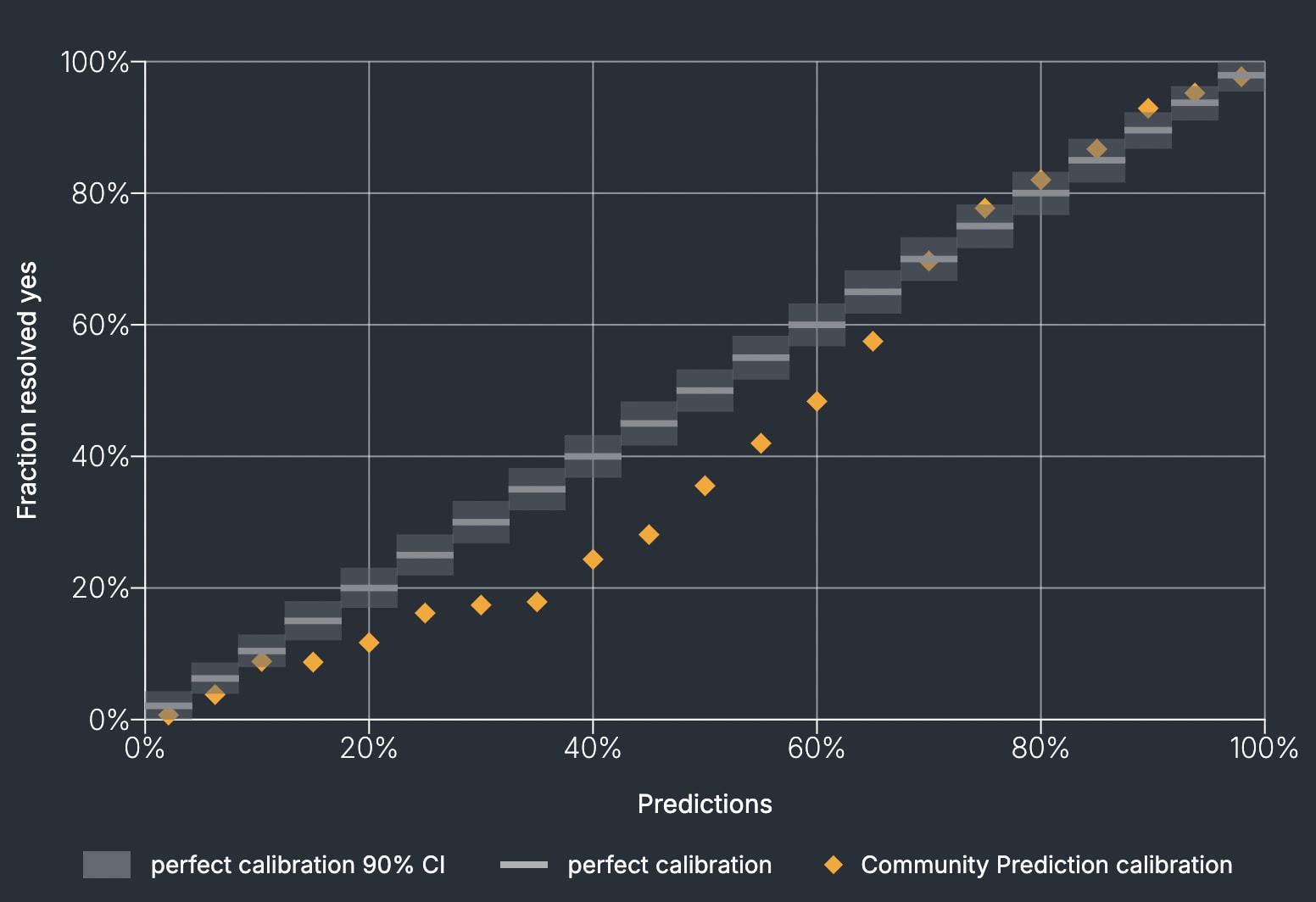

Imagine if we could say, “When Metaculus predicts something with 80% certainty, it happens between X and Y% of the time,” or “On average, Metaculus forecasts are off by X%”.

Fyi, the Metaculus track record—the “Community Prediction calibration” part, specifically—lets us do this already. When Metaculus predicts something with 80% certainty, for example, it happens around 82% of the time:
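For anyone unsure what a calibration figure like this is measuring, here is a rough Python sketch: group resolved forecasts by their stated probability, then check how often the events in each group actually happened. The function name, the 10-point bin size, and the example forecasts are all hypothetical; this is not Metaculus’s actual method or data.

```python
from collections import defaultdict


def calibration_table(forecasts, bin_size=10):
    """Map each probability bin (by its lower edge, in percent) to the observed frequency.

    `forecasts` is an iterable of (stated_percent, happened) pairs, where
    stated_percent is an integer from 0 to 100 and happened is a bool.
    """
    buckets = defaultdict(list)
    for percent, happened in forecasts:
        # A forecast of exactly 100% goes into the top bin rather than a bin of its own.
        lower = min(percent // bin_size * bin_size, 100 - bin_size)
        buckets[lower].append(1 if happened else 0)
    return {lower: sum(v) / len(v) for lower, v in sorted(buckets.items())}


# Made-up resolved questions: (community prediction in percent, did it happen?)
example_forecasts = [
    (80, True), (82, True), (85, True), (88, True), (81, False),
    (20, False), (25, False), (22, True), (28, False),
]

if __name__ == "__main__":
    for lower, freq in calibration_table(example_forecasts).items():
        print(f"{lower}-{lower + 10}%: happened {freq:.0%} of the time")
```

A well-calibrated forecaster’s observed frequency in each bin lands close to the probabilities stated in that bin, which is the sense in which “80% predictions happen around 82% of the time” counts as good calibration.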

Building on the above: the folks behind Intelligence Rising actually published a paper earlier this month, titled ‘Strategic Insights from Simulation Gaming of AI Race Dynamics’. I’ve not read it myself, but it might address some of your wonderings, @yanni. Here’s the abstract:

We present insights from ‘Intelligence Rising’, a scenario exploration exercise about possible AI futures. Drawing on the experiences of facilitators who have overseen 43 games over a four-year period, we illuminate recurring patterns, strategies, and decision-making processes observed during gameplay. Our analysis reveals key strategic considerations about AI development trajectories in this simulated environment, including: the destabilising effects of AI races, the crucial role of international cooperation in mitigating catastrophic risks, the challenges of aligning corporate and national interests, and the potential for rapid, transformative change in AI capabilities. We highlight places where we believe the game has been effective in exposing participants to the complexities and uncertainties inherent in AI governance. Key recurring gameplay themes include the emergence of international agreements, challenges to the robustness of such agreements, the critical role of cybersecurity in AI development, and the potential for unexpected crises to dramatically alter AI trajectories. By documenting these insights, we aim to provide valuable foresight for policymakers, industry leaders, and researchers navigating the complex landscape of AI development and governance.

[emphasis added]

Hmm, I’m not a fan of this Claude summary (though I appreciate your trying). Below, I’ve made a (play)list of Habryka’s greatest hits,[1] ordered by theme,[2][3] which might be another way for readers to get up to speed on his main points:

Leadership

- The great OP gridlock
- On behalf of the people
- You are not blind, Zachary[4]

Reputation[5]

- Convoluted compensation
- Orgs don’t have beliefs
- Not behind closed doors

Funding

- Diverse disappointment
- Losing our (digital) heart
- Blacklisted conclusions

Impact

- Many right, some wrong
- Inverting the sign
- A bad aggregation

[1] ‘Greatest hits’ may be a bit misleading. I’m only including comments from the post-FTX era, and then, only comments that touch on the core themes. (This is one example of a great comment I haven’t included.)

[2] Although the themes overlap a fair amount.

[3] My ordering is quite different to the karma ordering given on the GreaterWrong page Habryka links to. I think mine does a better job of concisely covering Habryka’s beliefs on the key topics. But I’d be happy to take my list down if @Habryka disagrees (just DM me).

[4] For context, Zachary is CEA’s CEO.