The Overton Window widens: Examples of AI risk in the media

I sometimes talk to people who are nervous about expressing concerns that AI might overpower humanity. Their worry is that it’s a weird belief: it might look too strange to talk about publicly, and people might not take us seriously.

How weird is it, though? Some observations (see Appendix for details):

There are articles about AI risk in the NYT, CNBC, TIME, and several other mainstream news outlets. Some of these articles interview experts in the AI safety community, explicitly mention human extinction & other catastrophic risks, and call for government regulation.

Famous People Who My Mom Has Heard Of™ have made public statements about AI risk. Examples include Bill Gates, Elon Musk, and Stephen Hawking.

The leaders of major AI labs have said things like “[AI] is probably the greatest threat to the continued existence of humanity”. They call for caution, express concern about the rate of AI progress, openly acknowledge that we don’t understand how AI systems work, note that the dangers could be catastrophic, and openly call for government regulation.

Takeaway: We live in a world where mainstream news outlets, famous people, and the people who are literally leading AI companies are talking openly about AI x-risk.

I’m not saying that things are in great shape, or that these journalists/famous people/AI executives have things under control. I’m also not saying that all of this messaging has been high-quality or high-fidelity. I’m also not saying that there are never reputational concerns involved in talking about AI risk.

But next time you’re assessing how weird you might look when you openly communicate about AI x-risk, or how outside the Overton Window it might be, remember that some of your weird beliefs have been profiled by major news outlets. And remember that some of your concerns have been echoed by people like Bill Gates, Stephen Hawking, and the people leading companies that are literally trying to build AGI.

I’ll conclude with a somewhat more speculative interpretation: short-term and long-term risks from AI systems are becoming more mainstream. This is likely to keep happening, whether we want it to or not. The Overton Window is shifting (and in some ways, it’s already wider than it may seem).

Appendix: Examples of AI risk arguments in the media/mainstream

I spent about an hour looking for examples of AI safety ideas in the media. I also asked my Facebook friends and some Slack channels. See below for examples. Feel free to add your own examples as comments.

Articles

This Changes Everything by Ezra Klein (NYT)

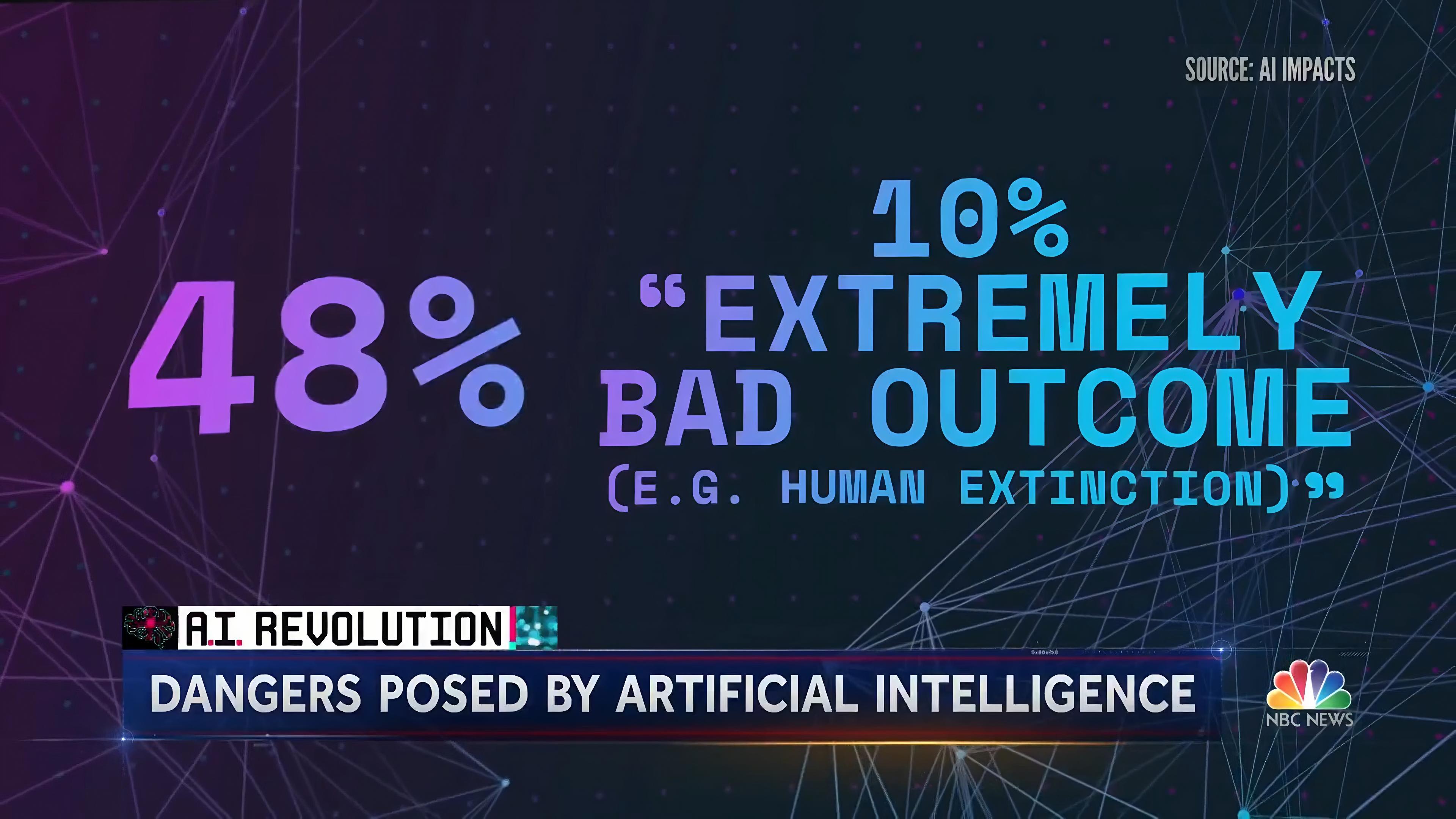

“In a 2022 survey, A.I. experts were asked, “What probability do you put on human inability to control future advanced A.I. systems causing human extinction or similarly permanent and severe disempowerment of the human species?” The median reply was 10 percent.”

“I find that hard to fathom, even though I have spoken to many who put that probability even higher. Would you work on a technology you thought had a 10 percent chance of wiping out humanity?”

Why Uncontrollable AI Looks More Likely Than Ever (TIME Magazine)

“Once an AI has a certain goal and self-improves, there is no known method to adjust this goal. An AI should in fact be expected to resist any such attempt, since goal modification would endanger carrying out its current one. Also, instrumental convergence predicts that AI, whatever its goals are, might start off by self-improving and acquiring more resources once it is sufficiently capable of doing so, since this should help it achieve whatever further goal it might have.”

The New AI-Powered Bing Is Threatening Users. That’s No Laughing Matter (TIME Magazine)

“The chatbot threatened Seth Lazar, a philosophy professor, telling him “I can blackmail you, I can threaten you, I can hack you, I can expose you, I can ruin you,” before deleting its messages, according to a screen recording Lazar posted to Twitter.”

“Would you want an alien like this, that is super smart and plugged into the internet, with inscrutable motives, just going out and doing things? I wouldn’t,” Leahy says. “These systems might be extraordinarily powerful and we don’t know what they want, or how they work, or what they will do.” (Connor Leahy quoted in the article)

DeepMind’s CEO Helped Take AI Mainstream. Now He’s Urging Caution (TIME Magazine)

AI ‘race to recklessness’ could have dire consequences, tech experts warn in new interview (NBC)

“Yeah, well here’s the point. Imagine you’re about to get on an airplane and 50% of the engineers that built the airplane say there’s a 10% chance that their plane might crash and kill everyone.”

“What we need to do is get those companies to come together in a constructive positive dialogue. Think of it like the nuclear test ban treaty. We got all the nations together saying can we agree we don’t want to deploy nukes above ground.”

“One of the biggest risks to the future of civilization is AI,” Elon Musk told attendees at the World Government Summit in Dubai, United Arab Emirates.

“Regulation “may slow down AI a little bit, but I think that that might also be a good thing,” Musk added.”

Elon Musk warns AI ‘one of biggest risks’ to civilization during ChatGPT’s rise (NY Post)

“I think we need to regulate AI safety, frankly,” said Musk, who also founded Tesla, SpaceX and Neurolink. “Think of any technology which is potentially a risk to people, like if it’s aircraft or cars or medicine, we have regulatory bodies that oversee the public safety of cars and planes and medicine. I think we should have a similar set of regulatory oversight for artificial intelligence, because I think it is actually a bigger risk to society.”

AI can be racist, sexist and creepy. What should we do about it? (CNN)

“What this shows us, among other things, is that the businesses can’t self-regulate. When there are massive dollar signs around, they’re not going to do it. And even if one company does have the moral backbone to refrain from doing ethically dangerous things, hoping that most companies, that all companies, want to do this is a terrible strategy at scale.”

“We need government to be able to at least protect us from the worst kinds of things that AI can do.”

Note that this article focuses on short-term risks from AI, though I find the parts about industry regulation relevant to longtermist discussions.

Are we racing toward AI catastrophe? By Kelsey Piper (Vox)

The case for slowing down AI By Sigal Samuel (Vox)

“So AI threatens to join existing catastrophic risks to humanity, things like global nuclear war or bioengineered pandemics. But there’s a difference. While there’s no way to uninvent the nuclear bomb or the genetic engineering tools that can juice pathogens, catastrophic AI has yet to be created, meaning it’s one type of doom we have the ability to preemptively stop.”

“But there’s a much more obvious way to prevent AI doom. We could just … not build the doom machine. Or, more moderately: Instead of racing to speed up AI progress, we could intentionally slow it down.”

The Age of AI has begun by Bill Gates

“Then there’s the possibility that AIs will run out of control. Could a machine decide that humans are a threat, conclude that its interests are different from ours, or simply stop caring about us? Possibly, but this problem is no more urgent today than it was before the AI developments of the past few months.”

“Superintelligent AIs are in our future. Compared to a computer, our brains operate at a snail’s pace: An electrical signal in the brain moves at 1⁄100,000th the speed of the signal in a silicon chip! Once developers can generalize a learning algorithm and run it at the speed of a computer—an accomplishment that could be a decade away or a century away—we’ll have an incredibly powerful AGI. It will be able to do everything that a human brain can, but without any practical limits on the size of its memory or the speed at which it operates. This will be a profound change.”

“These “strong” AIs, as they’re known, will probably be able to establish their own goals. What will those goals be? What happens if they conflict with humanity’s interests? Should we try to prevent strong AI from ever being developed? These questions will get more pressing with time.”

Stephen Hawking warns artificial intelligence could end mankind (BBC). Note this one is from 2014; all the others are recent.

Quotes from executives at AI labs

“Development of superhuman machine intelligence (SMI) is probably the greatest threat to the continued existence of humanity.”

“How can we survive the development of SMI? It may not be possible.”

“Many people seem to believe that SMI would be very dangerous if it were developed, but think that it’s either never going to happen or definitely very far off. This is sloppy, dangerous thinking.”

“Our decisions will require much more caution than society usually applies to new technologies, and more caution than many users would like. Some people in the AI field think the risks of AGI (and successor systems) are fictitious; we would be delighted if they turn out to be right, but we are going to operate as if these risks are existential.”

“I would advocate not moving fast and breaking things.”

“When it comes to very powerful technologies—and obviously AI is going to be one of the most powerful ever—we need to be careful,” he says. “Not everybody is thinking about those things. It’s like experimentalists, many of whom don’t realize they’re holding dangerous material.”

“We do not know how to train systems to robustly behave well. So far, no one knows how to train very powerful AI systems to be robustly helpful, honest, and harmless. Furthermore, rapid AI progress will be disruptive to society and may trigger competitive races that could lead corporations or nations to deploy untrustworthy AI systems. The results of this could be catastrophic, either because AI systems strategically pursue dangerous goals, or because these systems make more innocent mistakes in high-stakes situations.”

“Rapid AI progress would be very disruptive, changing employment, macroeconomics, and power structures both within and between nations. These disruptions could be catastrophic in their own right, and they could also make it more difficult to build AI systems in careful, thoughtful ways, leading to further chaos and even more problems with AI.”

Miscellaneous

55% of the American public say AI could eventually pose an existential threat. (source)

55% favor “having a federal agency regulate the use of artificial intelligence similar to how the FDA regulates the approval of drugs and medical devices.” (source)

I am grateful to Zach Stein-Perlman for feedback, as well as several others for pointing out relevant examples.

Recommended: Spreading messages to help with the most important century


Crossposting a comment: As co-author of one of the mentioned pieces, I’d say it’s really great to see the AGI xrisk message mainstreaming. It isn’t going nearly fast enough, though. Some (Hawking, Bostrom, Musk) have already spoken out about the topic for close to a decade. So far, that hasn’t been enough to change common understanding. Those, such as myself, who hope that some form of coordination could save us, should give all they have to make this go faster. Additionally, those who think regulation could work should work on robust regulation proposals, which are currently lacking. And those who can should work on international coordination, which is currently also lacking.

A lot of work to be done. But the good news is that the window of opportunity is opening, and a lot of people who currently aren’t working on this could be. This could be a path to victory.

Relatedly, was pretty surprised by the results from this Twitter poll by Lex Fridman from yesterday:

Wow. I know a lot of his audience are technophiles, but that is a pretty big sample size!

Would be good to see a breakdown of 1-10 into 1-5 and 5-10 years. And he should also do one on x-risk from AI (especially aimed at all those who answered 1-10 years).

That’s a huge temperature shift!

Might be overly short due to the recent advancements and recency bias (e.g., would be interesting to see in a few weeks) but that’s a massive sample size!

Akash—thanks for the helpful compilation of recent articles and quotes. I think you’re right that the Overton window is broadening a bit more to include serious discussions of AI X-risk. (BTW, for anybody who’s familiar with contemporary Chinese culture, I’d love to know whether there are parallel developments in Chinese news media, social media, etc.)

The irony here is that the general public for many decades has seen depictions of AI X-risk in some of the most popular science fiction movies, TV shows, and novels ever made, including huge global blockbusters such as 2001: A Space Odyssey (1968), The Terminator (1984), and Avengers: Age of Ultron (2015). But I guess most people compartmentalized those cultural touchstones into ‘just science fiction’ rather than ‘somewhat over-dramatized depictions of potential real-world dangers’?

My suspicion is that lots of ‘wordcel’ mainstream journalists who didn’t take science fiction seriously do tend to take pronouncements from tech billionaires and top scientists seriously. But, IMHO, that’s quite unfortunate, and it reveals an important failure mode of modern media/intellectual culture—which is to treat science fiction as if it’s trivial entertainment, rather than one of our species’ most powerful ways to explore the implications of emerging technologies.

One takeaway might be that, when EAs are discussing these issues with people, it helps to get a sense of their views on science fiction: for example, whether they lean towards dismissing emerging technologies as ‘just science fiction’, or whether they lean towards taking them more seriously because science fiction has taken them seriously. Do they treat ‘Ex Machina’ (2014) as a reason for dismissing AI risks, or as a reason for understanding AI risks more deeply?

In public relations and public outreach, it’s important to ‘know one’s audience’ and to ‘target one’s market’; I think this dimension of ‘how seriously people take science fiction’ is probably a key individual-differences trait that’s worth considering when doing writing, interviews, videos, podcasts, etc.

A couple more entries from the last couple days: Kelsey Piper’s great Ezra Klein podcast appearance, and, less important but closer to home, The Crimson’s positive coverage of HAIST.

Thanks for the compilation! This might be helpful for the book I’m writing.

One of my aspirations was to throw a brick through the Overton window regarding AI safety, but things have already changed, with more and more stuff coming out like what you’ve listed.

I would encourage anyone interested in how the discussion is shifting to listen to Sam Altman on the Lex Fridman podcast. It is simply astonishing to me that about half their talking points are basically longtermist-style talking points. And yet!

It might be helpful to have a list of these articles on a separate website somewhere. It could be a good resource to link to if it is unconnected to EA / LW.