I second David Pearce, and I’d add digital sentience to the topic list. (Pearce appears to have a sophisticated view on consciousness, and his bottom line belief is that digital consciousness—at least, the type that would run on classical computers—is not possible.)

Will Aldred

What do you propose you would talk about?

Just a note to say that we—the moderators—began looking into this two days ago. The current status is that Elliot has let the user who was allegedly asked to comment by Nonlinear know to reach out to us, if they are comfortable, so that we might determine whether what has happened here is an instance of brigading.

At this point, it seems worth mentioning that it is not the opinion of the moderators that every activity that looks like this is brigading. See the linked post for our full take on brigading: the summary is “your vote (or comment) should be your own”.

Yes, especially given that impact of x-risk research is (very) heavy-tailed.

I agree with your “no lock-in” view in the case of alignment going well: in that world, we’d surely use the aligned superintelligence to help us with things like understanding AI sentience and making sure that sentient AIs aren’t suffering.

In the case of misalignment and humanity losing control of the future, I don’t think I understand the view that there wouldn’t be lock-in. I may well be missing something, but I can’t see why there wouldn’t be lock-in of things related to suffering risk—for example, whether or not the ASI creates sentient subroutines which help it achieve its goals but which incidentally suffer—that could in theory be steered away from even if we fail at alignment, given that the ASI’s future actions (even if they’re very hard to exactly predict) are decided by how we build it, and which we could likely steer away from more effectively if we better understood AI sentience (because then we’d know more about things like what kinds of subroutines can suffer).

Scenario 1: Alignment goes well. In this scenario, I agree that our future AI-assisted selves can figure things out, and that pre-alignment AI sentience work will have been wasted effort.

Scenario 2: Alignment goes poorly. While I don’t technically disagree with your statement, “If AIs are unaligned with human values, that seems very bad already,” I do think it misleads through lumping together all kinds of misaligned AI outcomes into “very bad,” when in reality this category ranges across many orders of magnitude of badness.[1] In the case that we lose control of the future at some point, to me it seems worthwhile to try to steer away from some of the worse outcomes (e.g., astronomical “byproduct” suffering of digital minds, which is likely easier to avoid if we better understand AI sentience), before then.

- ^

From the roughly neutral outcome of paperclip maximization, to the extremely bad outcome of optimized suffering.

- ^

This is very informative to me, thanks for taking the time to reply. For what it’s worth, my exposure to theories of consciousness is from the neuroscience + cognitive science angle. (I very nearly started a PhD in IIT in Anil Seth’s lab back in 2020.) The overview of the field I had in my head could be crudely expressed as: higher-order theories and global workspace theories are ~dead (though, on the latter, Baars and co. have yet to give up); the exciting frontier research is in IIT and predictive processing and re-entry theories.

I’ve been puzzled by the mentions of GWT in EA circles—the noteworthy example here is how philosopher Rob Long gave GWT a fair amount of air time in his 80k episode. But given EA’s skew toward philosopher-types, this now makes a lot more sense.

Oh, yes, you’re right, I misremembered. Thanks for flagging, I’ve edited my above response.

Highly engaged EA.

As far as I’m aware, this was a term introduced by the CEA Groups team in around 2021, as a way of (proxy-)measuring the impact of different EA groups. The reasoning being that groups that turn more people into highly engaged EAs (HEAs) are more impactful. HEA corresponds to “Core” in CEA’s funnel model, I believe, so essentially an HEA is someone who has made a significant career decision based on EA principles.

This is not central to the original question (I agree with you that poverty and preventable diseases are more pressing concerns), but for what it’s worth, one shouldn’t be all that nonplussed at how the “insights” one might hear from “enlightened” people sound more like the sensation of insight than the discovery of new knowledge. Most people who’ve found something worthwhile in meditation—and I’m speaking here as an intermediate meditator who’s listened to many advanced meditators—would agree that progress/breakthroughs/the goal in meditation is not about gaining new knowledge, but rather, about seeing more clearly what is already here. (And doing so at an experiential level, not a conceptual level.)

I don’t find IIT plausible, despite it’s popularity, and am not sure what effect it’s inclusion would have on the present arguments.

It sounds like you’re giving IIT approximately zero weight in your all-things-considered view. I find this surprising, given IIT’s popularity amongst people who’ve thought hard about consciousness, and given that you seem aware of this.

Additionally, I’d be interested to hear how your view may have updated in light of the recent empirical results from the IIT-GNWT adversarial collaboration:

In 2019, the Templeton Foundation announced funding in excess of $6,000,000 to test opposing empirical predictions of IIT and a rival theory (Global Neuronal Workspace Theory GNWT). The originators of both theories signed off on experimental protocols and data analyses as well as the exact conditions that satisfy if their championed theory correctly predicted the outcome or not. Initial results were revealed in June 2023. None of GNWT’s predictions passed what was agreed upon pre-registration while two out of three of IIT’s predictions passed that threshold.

There was near-consensus that Open Phil should generously fund promising AI safety community/movement-building projects they come across

Would you be able to say a bit about to what extent members of this working group have engaged with the arguments around AI safety movement-building potentially doing more harm than good? For instance, points 6 through 11 of Oli Habryka’s second message in the “Shutting Down the Lightcone Offices” post (link). If they have strong counterpoints to such arguments, then I imagine it would be valuable for these to be written up.

(Probably the strongest response I’ve seen to such arguments is the post “How MATS addresses ‘mass movement building’ concerns”. But this response is MATS-specific and doesn’t cover concerns around other forms of movement building, for example, ML upskilling bootcamps or AI safety courses operating through broad outreach.)

One example is what Ben Garfinkel has said about the GovAI summer research fellowship:

We’re currently getting far more strong applications than we have open slots. (I believe our acceptance rate for the Summer Fellowship is something like 5% and will probably keep getting lower. We now need to reject people who actually seem really promising.)

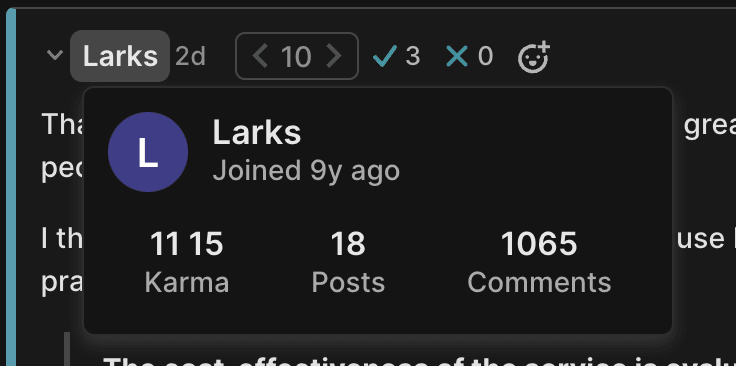

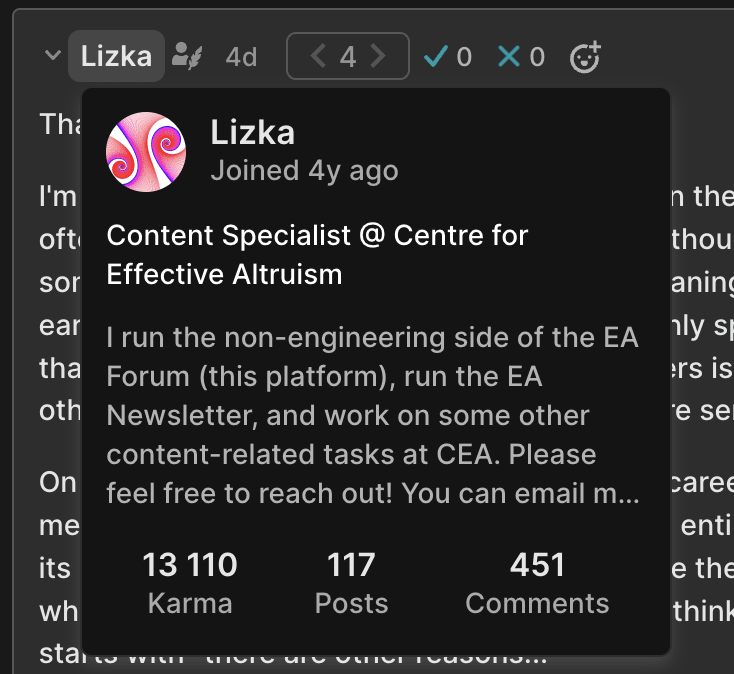

The “hover over a username to see their profile preview” feature is neat. There appears to be a minor bug, however, wherein 5-digit levels of karma don’t always display correctly in these previews (because the third digit gets omitted). Here’s an example of an incorrect display:

And here’s an example of a correct display:

I found this piece interesting and surprising. Back when I used to eat a lot of beans I would always soak them, and I guess I just assumed this was common practice amongst bean consumers everywhere.

Aside from the benefits you’ve mentioned, another purported benefit of soaking is removing the Phytohaemagglutinin toxin: “The FDA also recommends soaking the beans for five hours to remove any residual toxins and then tossing the water out” (source). (An uncertainty here is that I’m not sure if the types of beans eaten in sub-Saharan Africa contain this toxin.) This toxin-removal consideration potentially raises the “unquantified health benefits” part of your cost-effectiveness estimate, though my guess is that any additional impact here is small compared to impact via the main two mechanisms. (Impact through this toxin-removal mechanism seems BOTEC-able in theory, but I likely won’t attempt it myself.)

It’s worth noting that this salary scale is for the UC system in general (i.e., all ten UC universities/campuses). Most of these campuses are in places with low costs of living (by Bay Area standards), and most are not top-tier universities (therefore I’d expect salaries to naturally be lower). I think UC Berkeley salaries would make for a better reference. (However, I’m finding it surprisingly hard to find an analogous UC Berkeley-specific salary table.)

[I’m being very pedantic here, sorry.]

I think Robi’s point still holds, because the statement in the post, “at the beginning of their careers, are contemplating a career shift [...], or have accumulated several years of experience,” arguably doesn’t include very senior people (i.e., people with many years of experience, where many > several).

Not exactly what you’re looking for (because it focuses on the US and China rather than giving an overview of lots of countries), but you might find “Prospects for AI safety agreements between countries” useful if you haven’t already read it, particularly the section on CHARTS.

There appears to be a bug where a question post cross-posted from LessWrong goes up on this Forum as a regular post, as happened here.

Wei Dai on meta questions about metaphilosophy, the intellectual journey that led him there, and implications for AI safety.