Case Study of EA Global Rejection + Criticisms/Solutions

Intro:

I am the person rejected from EA Global who was anonymously featured in the Scott Alexander post about Opening EAG.

I have decided to publicly identify myself and offer my story as an in-depth case study of the EAG admissions process. I’ve dedicated much of my life to EA principles since 2010, and yet I kept getting rejected from EAG. This experience brought up a lot of sadness and frustration, as I felt like I was being pushed out of a community I had always closely identified with. It also led me to question whether the admissions committee was accurately accounting for the “cost of rejection” in its decision-making process. While writing this post, I had a lot of time to reflect on EA as a personal identity vs. a philosophy vs. a community vs. a set of organizations. They are distinct from one another and yet still tangled together. I will share many criticisms of the admissions process and will also propose some solutions for improving it. By posting my story, I hope to lower the barrier to an honest discussion about EAG admissions, EA disillusionment, gatekeeping, community building, and solutions for improving EA communities and organizational processes.

Goals:

To allow readers to compare my application with the fact that I was rejected from EAG 3 times

To offer more insight into the EAG admissions process by showing all my correspondence with CEA

To critically discuss problems I see with the admissions process and propose some solutions

To promote more easily accessible EA communities such as Gather Town

To create a safer space for others to share their own stories so more experiences can be heard and accounted for

Disclosures:

This is my first forum post.

Since I know this post could potentially come across as bitter, I want to say upfront that it’s always easy to criticize the actions of others and imagine that one could do things better. I make no claim about being able to do a better job at conference admissions. Through sharing my perspective, I hope to contribute to a discussion that helps everyone create a better event.

Please feel free to jump to the “Criticisms and Proposed Solutions” section if you would rather skip my lengthy personal story. To optimize for both transparency and brevity, I have also created an appendix, which I will link to throughout this post.

TLDR Timeline Summary:

2001-2010 - Hadn’t learned about Effective Altruism yet, but tried my best to do good for non-human animals

2010-2021 - Discovered effective altruism and was engaged in EA work for 10+ years. Around 2012, I pivoted career paths because earning to give was the main EA recommendation at the time, and went on to become a physician. I still pursued independent projects effectively and altruistically throughout medical school and residency, but was not directly involved in the EA community.

Fall 2021 - Decided to rejoin the EA community after EAGxVirtual

Winter 2021 - Got rejection #1 for EAG London

April 19, 2022 - Applied to EAG DC and heard nothing back for 4 months, so assumed I was rejected

July 2022 - Made my original post as a reaction to a friend’s Facebook post about EA Disillusionment

August 15, 2022 - Had a long video chat with “X,” a sympathetic CEA staff member who had seen my post, and received feedback, tips for re-integrating with the EA community, and encouragement to reapply to EAG DC

August 18, 2022 - Attended my first EA NYC event because of my talk with X

August 22, 2022 - Got official rejection #2 for EAG DC (felt sad, but figured it was because my application from 4 months earlier was not that great)

August-September 2022 - Continued to integrate with the EA NYC community and even appeared in a NY Times article that discussed EA

September 6, 2022 - Reapplied to EAG DC with gusto and confidence!

September 12, 2022 - Had a well-connected friend personally recommend me for EAG DC, but they were quickly told my application would likely be rejected again

September 13, 2022 - Officially got rejection #3, requested feedback, and was denied (felt incredibly frustrated and sad)

September 13, 2022 cont. - Found out that my post from 2 months earlier about being disillusioned with EA was already widely known, albeit anonymously. I started going on EA Gather Town and telling other EAs my story. In response, I heard many other stories of EAs quietly feeling rejected or disillusioned because of experiences with CEA and other EA organizations.

Now - Decided to take advantage of my unique position to open up more dialogue about personal experiences with rejection in the EA community, so we can properly account for them and use that feedback to improve the processes of EA organizations

MY EAG REJECTION CASE STUDY:

Before my Post:

Purpose: To provide context so readers can understand why I felt so strongly when I said what I said in my post

2001-2010 - Before I found EA:

I first became vegetarian when I was 12 after learning about the enormous scale of animal suffering in factory farms. My choice was quite atypical among my peers, and they would often mock me by doing things like eating hamburgers in my presence and declaring, “mmmm… this cow is sooo delicious.” I read Animal Liberation by Peter Singer in high school and tried to argue with my peers about the ethics of eating meat, but it was exhausting and I saw no behavioral changes as a result of these interactions. I tried a couple of other interventions, like a protest against KFC when I was 14 and starting an Animal Rights club in high school, which my advisor convinced me to rename the Ecology Club to be more tractable.

2010-2021 - After I found EA:

When I found out that Peter Singer was coming to my university in 2010 to give the kickoff speech for some group called Giving What We Can, I excitedly went.

Effective Animal Advocacy:

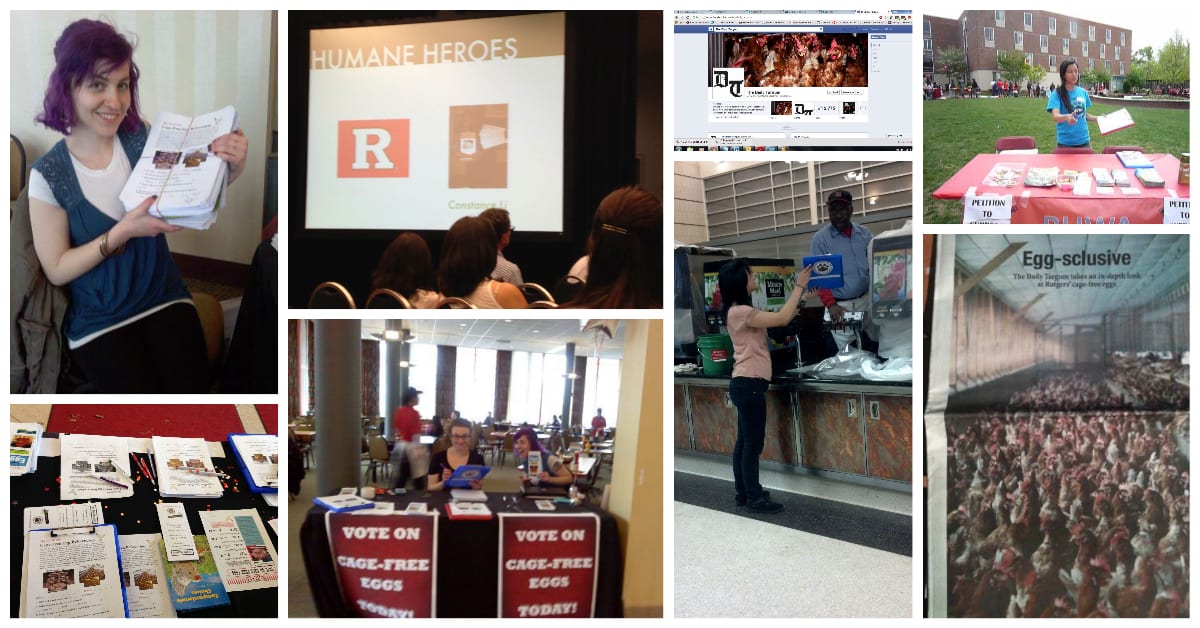

After the event, I became very involved with this small EA community which was led by philosophy grad students Nick Beckstead, Tim Campbell, and Mark Lee. Around this time, I also started studying communication because I wanted to better understand how to have effective conversations with others. In 2012, I attended the Ivy League Vegan Conference in Boston where Will MacAskill was giving the keynote speech. There, I met many people who would become influential figures in my life including members of The Humane League (back when they only had 6 employees), Sam Bankman-Fried, Eitan Fisher (Founder of what is now Animal Charity Evaluators), and many others. I have a cherished memory of many of us sleeping on the floor of an off-campus living room because it seemed unethical and unnecessary to spend money on hotels. Many of these people helped me as I ran the Rutgers cage-free egg campaign which has so far resulted in over 2 million dollars diverted to purchasing only cage-free eggs for the entire university. I was finally in a community that had a strong action-oriented, cost-efficient attitude towards helping non-human animals. Feeling eager to do whatever it took for the greater good, I made a major career decision based on the top EA recommendation at the time, Earning to Give. I then pivoted my career path from veterinarian to physician.

I incorporated an EA mindset into my Animal Advocacy and was in good company

Medical School:

During medical school, I didn’t have the time to be in any EA or Animal Advocacy organizations. Instead, I leaned into my new position as a future doctor and founded the Medical Vegetarian Society (MedVeg). It was a highly active student group that influenced fellow med students to change their perspectives on plant-based diets through biweekly potlucks, a collective garden, group trips, free food, exciting events, and a celebrity doctor! I specifically targeted my club activities to prioritize building a healthy community because I knew that applying social norms was highly effective for behavior change. In the 4 years of med school, students only have one free summer, and I spent mine interning with two plant-based nutrition organizations in DC, nutritionfacts.org and Physicians Committee for Responsible Medicine (PCRM).

I applied effective strategies to build a healthy community of med students that supported plant-based diets

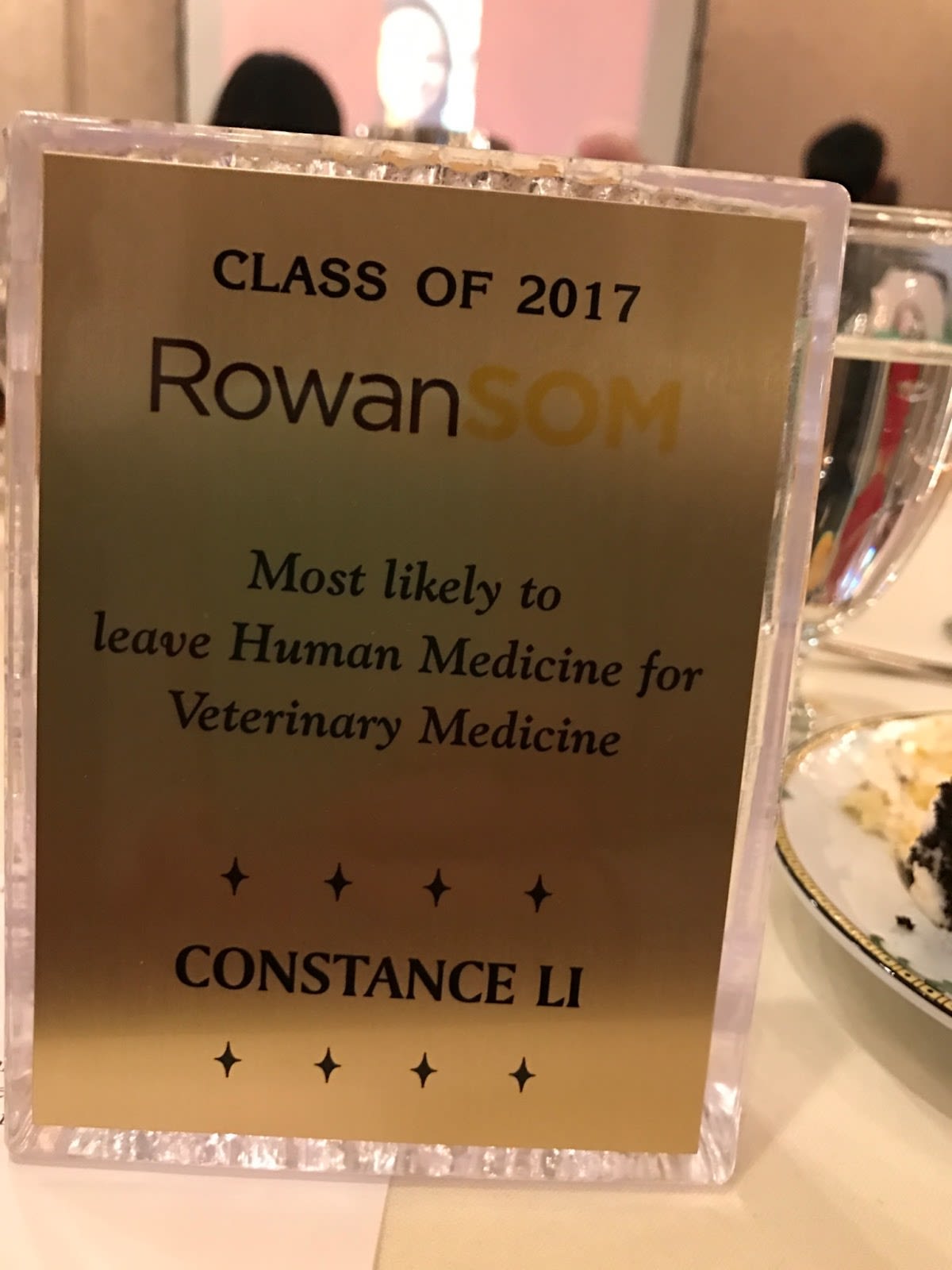

Upon graduation, I received a very ironic superlative that is still my proudest award to this day.

Residency:

During residency, I found myself very time and location constrained. I maintained a low cost of living and continued to donate to effective organizations. I still identified as an EA and this self-sacrifice (being in a high-stress environment that I had little control over) was for the greater good! For my altruism outlet, I did the most readily accessible intervention available, Trap, Neuter, Release (TNR) for stray cats in my neighborhood. I managed to help grow our group, Northwest Philly Cat Squad, from 5 to over 30 members and we spayed/neutered hundreds of cats. I saw that TNR mirrored the classic example of the parable about the babies being thrown down the river. My fellow cat trappers and I all had limited time and resources so maximizing our efficiency was an intoxicating exercise. We would prioritize female cats in high concentration areas with cooperative colony feeders in partnership with efficient nonprofit sponsors and often discussed addressing systemic issues like bottlenecks in the rescue/adoption chain. Working on the ground while also co-directing the group’s activities produced very tight feedback loops, which I came to appreciate even more as essential to maximizing one’s impact.

As a thank you for getting this far, please enjoy all these pictures of cats I’ve caught!

Rejoining the EA Community (Fall 2021)

In Fall 2021, after I graduated residency, I was spending 20% of my time figuring out how to start up my medical practice and 80% of my time trapping cats. It was then that I saw the invite to EAGxVirtual and figured I would go, since it was a low-barrier opportunity for me to re-integrate with the greater EA community. During the conference, I made many meaningful connections through 1:1 interactions. Afterwards, I felt very optimistic about the utility of rejoining the greater EA community. I knew I had to move on from the cats to finally start earning to give, so I made the difficult choice of gradually setting up boundaries for my time.

First Rejection from EAG (Winter 2021)

After EAGxVirtual, I started connecting with people in EA Philly and applied to EAG London. I had never heard of a conference that required an application before, so I naively assumed it was more of a formality and didn’t put too much effort into it. I was rejected. One of my friends from EA Philly, who was an undergrad at UPenn at the time, told me that their application was accepted quite readily and expressed surprise that mine got rejected. They skipped happily away to London while I tried to brush the sting of it off.

Second Attempt at EAG (April 2022)

I applied again, this time to EAG DC, and didn’t hear anything back for months, so I figured I had been rejected and they had neglected to tell me. At this point, I had relocated to New Jersey to live with my parents because I’m still in that frugal early EA mindset.

Entrepreneurship and Upskilling: (Winter 2021 - ongoing)

After my second application, my business started picking up steam. I trialed many different models, including independent COVID testing, working for other practices, contracting with hospitals, doing home musculoskeletal ultrasounds, etc. Through this process, I finally found a model that was tractable, profitable, and prosocial. I then focused all my attention on process improvement and upskilling in marketing, customer service, managing staff, bookkeeping/accounting, etc. Owning and operating my own business led me to reflect a lot more on process improvement. During this time, I again didn’t have the capacity to be an active part of the EA community and didn’t engage much beyond listening to the 80k and Clearer Thinking podcasts.

My Post:

Purpose: To explain the situational context behind my original post.

Deciding to Make the Post (July 2022)

I was scrolling through Facebook when I found an EA Forum post about EA Disillusionment on Ruairí Donnelly’s Facebook page. I had never read any EA Forum posts before. Intrigued by the title, I read the post and felt that its content rang true to my own feelings. I commented with my own story as a reply to the Facebook post. I didn’t think much more about it and was unaware that my casual comment had been turned into an anonymous “EA Blackpill” tweet, which was later widely shared and used in Scott Alexander’s EA Forum post.

After my Post:

Purpose: To give context as to why I am writing this current forum post

Conversation with Concerned CEA Staff Member (August 2022)

After seeing my comment on Facebook, a concerned CEA staff member, whom I will refer to as “X” going forward for privacy, reached out to me. On August 15th, we connected on video chat for over an hour, and they gave me some helpful tips for re-engaging with the EA community, such as getting more involved with the nearby EA NYC group and amending and re-submitting my application to EAG DC. This conversation turned my attitude around enough that I decided to re-engage with the community by going to my first EA event since moving away from Philly.

My First EA NYC Event (August 18, 2022)

I went to an EA NYC event, which was a talk on Population Ethics by my old friend Tim Campbell, who co-founded the Rutgers GWWC group with Nick. The event went amazingly well, and I again felt a strong sense of community unified by purpose that I hadn’t felt in a long time. I was so touched that I sent X quite a long voice message of appreciation at 1am. A couple of days later, I received rejection #2. I felt a little sad, but I had a consolation prize in finding the EA NYC community, so that lessened the sting. From my conversation with X, I now understood exactly how competitive EAG was and realized I hadn’t put enough effort into my applications up to this point.

Continued Involvement in EA NYC (Aug—September, 2022)

Over the next month, I kept participating in the EA NYC community. I went to many of their official events as well as some adjacent social events. I felt quite accepted by the EA NYC community and no longer felt disillusioned.

Re-applying to EAG DC (September 6, 2022)

Finally feeling determined, financially equipped, and integrated enough with the EA community, I re-applied to EAG DC. I spent 2 hours working on a stronger amended application. I made sure to do my research and based my amended application on CEA’s published EAG 2022 application information and careful consideration of the insider insight about the process I got from X.

I decided to pull out ALL THE STOPS because I really, really wanted to go and knew I had to meet a high bar.

My Application to EAG DC:

General Highlights:

Shameless name-dropping: I stated that Nick Beckstead was highly influential in my decision to pursue Earning to Give. I also mentioned that Sam Bankman-Fried did the statistical analysis for my cage-free egg campaign at Rutgers in 2013.

A note: I didn’t resort to any name-dropping in my first 2 applications to EAG, but I did for this 3rd one because I know that personal connections often have a hand in these sorts of decisions. I was fairly confident that, if asked directly, these two hard-hitters of EA would remember me and speak of my intentional work ethic positively.

Showcasing my Most Effective Intervention: I elaborated on the Rutgers cage-free egg campaign, which resulted in a victory whereby $250k USD was added to the dining hall budget each year, an amount that has by now accumulated to over $2 million USD. I utilized my available social resources and thought analytically about why my predecessor’s attempts at this campaign in the 2 years prior had failed. I even made the impact go further by getting plenty of news coverage and helping to make a documentary about it.

Demonstrating Real-Life Success: I included that I had started my own medical practice 9 months earlier and was already making $60,000 USD per month with 10-20% growth each month. I thought the timeline of starting up my business would showcase how effectively I had used the scout mindset early on to identify a model that was tractable, scalable, and profitable. The profit and growth numbers were meant to reflect my ability to optimize for process improvement. I even provided a link to my Google reviews to demonstrate that my business was also prosocial.

Highlights According to CEA’s Published Criteria for EAG 2022 Admission:

I stated that I became a physician based on the EA dogma of “Earning to Give” (ETG) at the time, which took 9 years of my life to see to completion. I didn’t elaborate further because I figured that would be sufficient for this criterion.

While I didn’t volunteer for any sanctioned (funded) EA projects, I did give multiple examples of starting organizations and leading interventions that were highly effective. Since my time on these was unpaid, I think it is reasonable to count them as volunteer work.

I said that I had so far paid myself $0 from my business and planned to focus my resources on executing effective interventions aimed at reducing animal suffering. I gave a couple of concrete examples of interventions I was considering and remained open to others by mentioning I was in scout mode for cost-effective opportunities and underutilized talent. I mentioned that I was no longer on the ETG track because I believed more in my own ability to effect change. (I didn’t include another important reason I had for this, but you can read about that here.)

Providing more detail about my actions and the ways in which ideas in EA influenced my decisions: My entire application took 2 hours to complete and was incredibly detailed. Keywords I used throughout my application when describing different actions included scout mode, cost-effectiveness, tight feedback loops, taking advantage of neglected interventions, leaning into my most valuable assets, reducing future animal suffering, and longtermist frameworks.

Job Opportunities: I stated that I wanted to transition my business to a form of passive income/funding so that I could focus on more direct EA work through hiring 1-2 people for effective interventions that I would fund. I figured I would be adding value by creating more opportunities for the community rather than taking them away.

Free Medical Advice: I am a physical medicine and rehabilitation physician, which is a broadly applicable and useful specialty. In my application, I stated that I could offer useful and free medical advice and ultrasound scanning for other EAs during the conference. I want to help reduce chronic discomfort and pain for other EAs so they can go on to be more effective in achieving their altruistic goals. Plus, I really enjoy being of service to others and find it particularly useful for making connections.

Connection Facilitation: I included a link to my Google reviews to demonstrate “my general likeability and professionalism.” I figured I could use my prosocial extroversion and affinity for group dynamics to increase the number of connections made at EAG, which I found is one of the metrics CEA uses to measure the success of its conferences.

Finding Out About Rejection #3 (September 12, 2022)

One of my well-connected friends from EA NYC offered to give a personal recommendation for me directly to the admissions team. I was overjoyed to have a community endorsement to add to my “EA Resume.” However, the response my friend received from the admissions lead was that my application would likely be rejected again. No explanation was provided, so my mind immediately went to the worst possible scenario:

“OMG! It’s because my main cause area is reducing animal suffering and EA doesn’t care about that anymore!”

…It’s easy to let insecurities take over the narrative when there is no explanation to fill in the gap. This horrifying thought was already one of the main bases of my disillusionment when I made my original post.

Correspondences with CEA

The following include my personal thoughts about CEA’s communications. They are discussed further in the “Hypotheses for Rejection” and “Criticisms/Solutions” sections.

I messaged X to give them an update about rejection #3:

Soon afterwards, I received this email:

I replied to the email containing rejection #3:

I received a reply which really rubbed me the wrong way:

I replied with a mildly sassy response when it was confirmed there would be no feedback:

It was shortly after this that I found out about the Scott Alexander post, which is an interesting story but unnecessary for this post. Interested folks can read about it if they want.

Personal Hypotheses for Rejection:

These are meant to be examples of bad epistemics, since I couldn’t fact-check any of them.

After getting comments from reviewers for this post, I did see many potential red flags in my application that I didn’t initially consider. I have included them in this list.

Perhaps the admissions team…

is part of the systematic deprioritization of animal issues in EA (a larger and more complicated concern of mine that is better suited to be discussed in another post)

was not looking for early-career Earning to Give folks for applications #1 and #2, and then labeled me a troublemaker for #3 after finding out I was the one in Scott Alexander’s forum post

thought I may not be earnest in my application since I had talked to X and got some insider insight about the screening process

thought I sounded too passionate about animal suffering and that I was approaching with a soldier mindset rather than a scout one

thought my proposed interventions were not traditional, ethical, high-priority, or well-researched enough

was worried that I would compete with well-vetted EA organizations for talent

thought I sounded overconfident about my achievements

didn’t like that I mentioned finding a romantic partner in EA as one of the things that I imagined would have happened if the event had “gone exceptionally well”

didn’t think I sounded professional

thought my offer of medical advice and ultrasound scanning was more of a liability to the conference than it would be a benefit to other attendees

Factor X: Something I haven’t considered yet

I spent many hours thinking in circles through these various hypotheses…

I think that everything in the list (with the exception of the first 3) could have been resolved by redirecting me to resources explaining the expected norms of the conference so I could update my priors. One of my priors when I wrote my amended application was that EAs appreciate things that are weird and honest. I had openly done or talked about everything in my application around other EAs without receiving any significant feedback that it was inappropriate. For this reason, I found these hypotheses less compelling as a basis for rejection unless the reviewer assumed I wasn’t someone who could be redirected. I hope that wasn’t the case, because I also said,

“I have been in scout mode to identify both opportunities and underutilized talent. I have many ideas for projects that take advantage of neglected interventions for reducing animal suffering and need to find people with similar enthusiasm and availability to help develop them.”

I thought this statement would be sufficient to communicate that my ideas were still in early stages and that, while I was passionate about my end goal, I was still open to changing my approach and thought EAG could help me meet people who could challenge my epistemics and improve my process. I did use the phrase “future projects I am planning” later on, so I can see how that might have given the reviewer the impression that I was rigidly set on doing all of those interventions (I’ve already given up on one of them due to new information).

I also initially considered capacity limits as a possible reason, but later came to find out that they are not a factor in admissions.

TLDR; When there is a gaping hole of nothingness, the brain has the uncanny ability to fill it with all sorts of possible hypothetical explanations. Mixing in strong emotions and insecurity (often the case with rejections) places people at even higher risk for cognitive distortion and reactionary behavior. I was already worried that non-human animals would not have a place at the new longtermist table in the EA community. That is why my mind immediately grabbed onto that when reaching for an explanation.

As much as we aspire to be perfectly rational, we are still human in the end.

Criticisms and Proposed Solutions

Because I heard EAs love criticism!

#1 CEA Admissions Decisions are Easily Confused for Worthiness:

All my rejection hypotheses boiled down to CEA’s admissions judgment of whether I was a good enough EA to get into EAG. In the admissions lead’s own words, “We simply have a specific bar for admissions and everyone above that bar gets admitted.” This implies that if you didn’t get in, you are below that bar. This is especially demoralizing because it creates a tendency to start comparing yourself to accepted applicants.

Proposed Solution: Not sure

This is a difficult problem to solve because it involves each applicant’s personal sense of identity. This forum post is my small contribution to addressing it. There seems to be a taboo around people sharing personal stories of rejection. Some possible explanations are feelings of shame or the risk to one’s personal reputation. Hopefully, as you read on through the criticisms, you will see how much room for improvement there is in the EAG admissions system. It’s relatively easy to mistake CEA’s control of conference admissions for an authoritative judgment of how fit you are to be in the greater EA community, but… it definitely is not!!

#2 Coming Up With Better Explanations Isn’t Solving the Problem:

I see evidence of many different iterations of CEA communications (FAQ, Google Doc, Forum post, email, and most recently comments on the EA Forum) containing various messages that try to broadly address applicants’ need for feedback and clarity. But are these needs being met? It seems like many people have serious questions about their application decisions and are being denied feedback. A common explanation for this is that staff do not have the capacity to handle all the feedback requested. As I discuss later on, much of this problem could be addressed with automated systems and a good process improvement cycle.

Proposed Solution: Instead of coming up with better explanations, consider a different strategy to address the emotional and epistemic needs of rejected applicants. I will address these in criticisms 3-5.

#3 Not Taking Advantage of Opportunities for Feedback:

Feedback… It’s what EAs Crave! When people do not have a clear and actionable reason for their rejection, their minds can spiral and often conclude that they are unworthy, which poses a risk to both individual mental health and community health. People who apply to EAG are already self-selecting as highly motivated members of the community, and this energy could be channeled through a growth mindset into something productive. Giving feedback to EAG applicants seems like an opportunity to point them in a more effective direction. Perhaps a volunteer could even do this once an automated system is set up.

Proposed solution: Create an automated system for feedback and optimize for applicant satisfaction and engagement.

Categorize rejected applicants according to concerns about their application that could be improved upon, and create a triage system to provide actionable feedback. Once some templates and a system are set up, I imagine it can be pretty efficient. Here is one way it could be done: the admissions team labels each rejected application in their inbox with colored flags corresponding to different reasons for rejection, and then a remote assistant can just fire off the pre-templated emails. There is also room here for more targeted triage using spreadsheets and templates customized to applicant characteristics such as location, earning potential, cause area, stage of career, etc. An added bonus is that all this work produces data points that can feed into your process improvement cycle, which I describe in #4.

Here are some simple examples:

Insufficient Understanding of EA Principles → Read the EA Handbook

Work is Not Impactful Enough → Read the 80k Career Guide

Not Involved Enough in EA Communities → Join a local EA Hub or attend an EAGx
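To make the triage idea above more concrete, here is a minimal Python sketch, assuming the reviewer's colored flags are recorded as simple reason codes. The flag names, the `RejectedApplicant` type, and `draft_feedback_email` are all hypothetical, and the links are placeholders rather than real URLs. Applications with no recognized flag come back as `None` so a human can handle them instead of a blank email going out.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical mapping from a reviewer's rejection flag to a feedback suggestion.
# The reasons mirror the examples above; the links are placeholders, not real URLs.
FEEDBACK_TEMPLATES: dict[str, str] = {
    "insufficient_ea_understanding": "Consider reading the EA Handbook: <EA Handbook link>",
    "work_not_impactful_enough": "Consider reading the 80k Career Guide: <80k Career Guide link>",
    "not_involved_in_communities": "Consider joining a local EA Hub or attending an EAGx: <EAGx link>",
}


@dataclass
class RejectedApplicant:
    name: str
    flags: list[str] = field(default_factory=list)  # set by the admissions reviewer


def draft_feedback_email(applicant: RejectedApplicant) -> str | None:
    """Build a templated feedback email, or return None for manual follow-up."""
    suggestions = [FEEDBACK_TEMPLATES[f] for f in applicant.flags if f in FEEDBACK_TEMPLATES]
    if not suggestions:
        return None  # no recognized flag: route to a human instead
    lines = [f"Hi {applicant.name},", "", "Here are some suggestions based on your application:"]
    lines += [f"- {s}" for s in suggestions]
    return "\n".join(lines)


if __name__ == "__main__":
    print(draft_feedback_email(RejectedApplicant("Alex", ["work_not_impactful_enough"])))
```

A remote assistant could then send whatever the function returns, while unrecognized cases stay with the admissions team.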

All of these links can be tracked with Google Analytics so you can get really valuable data points on which links tend to get opened and how long someone spends engaging with those resources. Then you can even do A/B testing to optimize the messaging. Including surveys in these emails can provide a valuable feedback mechanism for process improvement!
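For the A/B testing step, one common way to compare two email variants is a pooled two-proportion z-test on their click-through rates. The sketch below uses only Python's standard library; the function name and the click counts in the usage line are made-up illustrations, not real EAG data. The normal approximation it relies on is only reasonable when each variant has a decent number of both clicks and non-clicks.

```python
import math


def two_proportion_z_test(clicks_a: int, sent_a: int, clicks_b: int, sent_b: int):
    """Compare click-through rates of email variants A and B.

    Returns (rate_a, rate_b, z, p_value) from a pooled two-proportion
    z-test with a two-sided p-value (normal approximation).
    """
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if se == 0:
        raise ValueError("All or none of the emails were clicked; the test is undefined.")
    z = (rate_b - rate_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return rate_a, rate_b, z, p_value


if __name__ == "__main__":
    # Illustrative numbers only: 200 emails sent per variant.
    print(two_proportion_z_test(clicks_a=40, sent_a=200, clicks_b=60, sent_b=200))
```

A small p-value suggests the difference between variants is unlikely to be noise alone; it does not say why one message worked better, which is where the surveys come in.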

There is so much opportunity to redirect the energy of rejected applicants into something positive.

#4 Poor Process Improvement Mechanisms:

The best examples of industries that have gained success through process improvement are the car industry, the airline industry, and, more recently, the healthcare industry. It seems natural that EA would implement these models as well. The admissions team states that they have thought carefully about the question of “how costly is rejection” and determined that there is a “relatively small discouragement effect” because rejection did not have a great effect on the likelihood of applicants to apply/reapply (a proxy outcome measure for discouragement) for future conferences. This is the only measure of discouragement I could find.

If CEA has other measures they use to assess how costly rejection is, then it would be beneficial to building community trust if they published them. For now, I will explain why I think this choice for a proxy outcome measure is likely a poor correlate for actual discouragement.

As I said in my correspondence with X, it is so easy to autofill the applications with prior answers again and again. Rejected applicants can still be measured as being “not discouraged” every time they apply even if they are feeling progressively more discouraged or disillusioned with each rejection. There are a lot of other costs to rejection that cannot be captured with this statistic too. Examples of other outcomes to consider include aspects of mental health, individual productivity, and community participation/epistemological integrity/diversity.

Ultimately, the “cost of rejection” is just one factor to calculate when considering the overall impact of the admissions process.

“EAG exists to make the world a better place, rather than serve the EA community or make EAs happy. This unfortunately sometimes means EAs will be sad due to decisions we’ve made — though if this results in the world being a worse place overall, then we’ve clearly made a mistake.”

It is really hard when an applicant, who wants to contribute to the greater good and believes that EAG will serve them well in that goal, gets rejected and is told that the reason is that the admissions team does not think their attendance would have made the world a better place. There seems to be something intuitively wrong with this, but it’s hard to know what that is because of how opaque the admissions process is, which I discuss in #6.

Proposed Solution: Continue to update the process improvement model

It’s really hard to have a good process improvement model for “cost of rejection” without directly surveying the rejected applicants. Complaints are a gift! A humble place to start is with good qualitative feedback surveys. Don’t make rejected applicants resort to writing a whole forum post about their frustrations.

Here are some suggestions for qualitative questions:

Why do you think you got rejected?

How did the rejection impact you?

If you disagree with our decision, please tell us why.

How has this rejection affected your EA plans (career, study, community, conferences, etc) going forward?

Home in on what people complain about the most and then develop quantitative ways to measure that.

Here are some suggestions for quantitative (0-10) questions:

Sense of belonging in the EA community

Dedication to EA principles

Likelihood to pursue a career directly or indirectly related to EA

Sadness/Anxiety

Create a list of possible interventions to optimize for these desired outcomes, pick the best one, implement it, and measure it. Use that information to refine your model and repeat the process. As anyone who has worked through a process improvement model can attest, finding the best way to measure these things is an overwhelming task. It's much easier to use a readily available statistic like "likelihood of applicants to apply/reapply." EA is about doing the most good, though, so we need to put in the work to measure costs and benefits accurately.

#5 Poor Quality of Communication with Rejected Applicants:

Good communication is a skill. It takes concentrated effort and lots of feedback to develop, but once you get good at it, it doesn't take much more time or effort to word things more considerately. There were many points in my correspondence with X and the Admissions Team regarding my rejected application that could have been handled better. I wrote some specific comments regarding opportunities for improvement in wording choice. However, I think the most glaring example of poorly considered/coordinated communication is the contrast between the admissions lead's comments about having a specific bar for admissions, with "everyone above that bar [getting] admitted," and X saying "please don't take our judgment personally."

Proposed Solution #1- Include plausible reasons for the rejection decision in the default email

One simple way to do this could be to include a link to a list of plausible and sensitively written explanations for an EAG rejection, such as Julia Wise's 2019 EA Forum post. Rejected applicants could then consider these explanations instead of potentially more damaging ones rooted in insecurity. However, this leads down the rabbit hole to criticism #2 (better explanations don't solve the underlying problem). Also, the plausibility of any alternative explanations would now be dubious given the explicit "pass the bar" criteria.

Proposed Solution #2- Equip staff with the tools to better relate to rejected applicants

CEA staff involved in communicating about rejections can be trained on authentic relating or nonviolent communication methods. These are incredibly impactful communication methods that place an emphasis on listening and considering the emotional needs of others before responding. When people feel understood, they are much more likely to extend the same courtesy back and then communicating becomes easier and more productive.

#6 Too Opaque:

In general, there seems to be a lot of power concentrated in CEA and when you mix that with an opaque selection process, this tends to engender distrust. This is unfavorable to building a unified movement. As I discussed earlier in this post, there are very few metrics that CEA makes publicly available that help with understanding the reasoning for their admissions process. I believe there are many more that can be published without giving away the “teacher’s password.”

Proposed Solution: Make it more transparent!

Publish the existing rejection rates for each conference so people can set their expectations accurately when applying

Publish that internal report about how costly rejection is so people can see the metrics

Create surveys (described in #4) to assess the downstream effects of the admissions process. Publish the results.

Have a system for 3rd parties to perform randomized audits of application decisions.

#7 Perception of Gatekeeping by a Small, Elite Group:

Even if the CEA admissions team states they are going by some thoughtful, well-researched algorithm, there is still the problem of the perception of gatekeeping. It seems natural for people to feel that way when a small committee, which can easily be subject to personal bias, has the power to deny access to a highly anticipated event.

Proposed Solutions:

Start a process improvement cycle for community trust

Blind the admission process (at least partially) to minimize unconscious bias

Increase transparency (discussed in #6)

Call the conference something that sounds less broad than EA Global

Suggestions include:

CEA Global

CEA International

EA Focused

Closing Thoughts

In the end, I still feel, as I did in my original post, that I don't really fit into this newer brand of EA… and that CEA doesn't really recognize me as "EAG-worthy." Nonetheless, I can still personally identify as an EA doing impactful work and feel like I fit into parts of the EA community.

I have accepted that I am unlikely to ever get a satisfactory answer showing that the current admissions process optimizes for the greatest good. It's really hard to accept any claims about this without seeing all the proxy metrics used for it, including how CEA measures "cost of rejection" without sending out any surveys to rejected applicants.

Working on this post, telling my story, and hearing others along the way has been a catharsis. I recognize I’m in a particularly privileged position. I live near a large EA hub, am financially well-resourced, and am capable of creating my own career opportunities. Others I have talked to have not had these advantages. They rely on EA grants for their projects or EA organizations for obtaining jobs and therefore may be more hesitant to directly and publicly criticize authoritative organizations like CEA.

I would like to invite anyone who has a story to tell to share it in the comments, privately message me, or just jump onto EA Gather Town anytime to talk to another EA about it. It is a wholesome EA community where anyone is welcome and there is no application. The people there have given me good company and conversation as I wrote this post. I had many interesting conversations about topics such as how essential omega-3s are (not very), the value of street epistemology, and the complicated dating lives of EAs.

Let’s create a warmer community and be present for one another. There are many out there that have the capacity to listen and appreciate your unique talents. EA is a huge global community with different niches. Don’t leave just because one part of the community couldn’t/wouldn’t accept you.

Altruists, we are all in this together for the greater good!

I’d like to extend my deepest gratitude to the reviewers for this post:

Scott Alexander, Aaron Gertler, Cornelis Dirk, Jonathan Yan, Annabel Luketic, and Emrik Garden

Update May 30, 2023:

Since this post was made, I've heard from a lot of animal EA folks that they have felt similarly marginalized or looked down upon for having a "lower priority" cause area (though no one says it explicitly… it's all implicit and reflected in ~vibes~ and noticing where the money and attention goes). So I've been on a mission to bring more EA into animal advocacy and more animal advocacy into EA. I'll be at EAGxNYC 2023 (Aug 18-20), so if you are there, come say hi!

I also went to the Animal & Vegan Advocacy Summit of 2022, where I was a speaker and organized a subgroup meetup for EAs:

We broke out into discussion groups for community building, longtermism, theory of change, and newcomers.

The AVA Summit is a fantastic event for those interested in getting actively involved with animal advocacy. I highly encourage EAs interested in animal welfare to go. There is a huge international presence and many newer, smaller organizations connected with their funders there. This year, I'll be facilitating a workshop on leveraging AI to accelerate progress for animals. I want to see more animal advocates working smarter, not harder.

My vision is that animal advocates become cyborgs.

And if you want to get more involved with animal welfare right now, please join the Impactful Animal Advocacy Slack group where dedicated advocates are collaborating every day.

With lots of love,

Constance


Hi Constance,

I was sad to read your initial post and recognize how disappointed you are about not getting to come to this EAG. And I see you’ve put a lot of work into this post and your application. I’m sorry that the result wasn’t what you were hoping for.

After our call (I’m happy to disclose that I am “X”), I was under the impression that you understood our decision, and I was happy to hear that you started getting involved with the in-person community after we spoke.

As I mentioned to you, I recommend that you apply to an EAGx event, which might be a better fit for you at this stage.

It’s our policy to not discuss the specifics of people’s applications with other people besides them. I don’t think it would be appropriate for me to give more detail about why you were rejected publicly, so it is hard to really reply to the substance of this post, and share the other side of this story.

I hope that you continue to find ways to get involved, deepen your EA thinking, and make contributions to EA cause areas. I’m sorry that this has been a disappointing experience for you. At this point, given our limited capacity, and the time we’ve spent engaging on calls, email, and Facebook, I’m going to focus on building up our team in order to run more EAG and EAGx events.

Thank you for sharing your thoughts on the process more generally. My team is focused on EAG right now, but we plan to reflect on any structural changes after the event.

This of course is correct as a default policy. But if Constance explicitly said she wants to have this conversation more publicly, would you comment publicly? Or could you comment in a private message to her, and endorse her sharing the message if she chose to?

(Good luck with EAG DC in the meantime.)

Thanks for the suggestion, Zach!

I did explain to Constance why she was initially rejected as one of the things we discussed on an hour-long call. We also discussed additional information she was considering including, and I told her I thought she was a better fit for EAGx (she said she was not interested). It can be challenging to give a lot of guidance on how to change a specific application, especially in cases where the goal is to “get in”. I worry about providing information that will allow candidates to game the system.

I don’t think this post reflects what I told Constance, perhaps because she disagrees with us. So, I want to stick to the policy for now.

Hi Amy, I think it’s hard to justify a policy of never discussing someone’s application publicly even when they agree to it and it’s in the public interest. This is completely different from protecting people’s privacy.

This seems to me to be a recurring theme regarding CEA procedures. I encountered a very similar approach from another CEA staff member regarding a completely different, high profile topic that was discussed on the forum. (This was in a private message, so I won’t share it at the moment).

And it’s a valid thing to worry about—but it also means trading away accountability. If CEA can’t be transparent, how can community members evaluate its impact or feel comfortable relying on support/funding/events that CEA makes available? In this tradeoff, it personally seems to me that CEA is way too far on the opaque side.

If you read Amy’s reply carefully, it sounds like she told Constance some of the reasons for rejection in private and then Constance didn’t summarize those reasons (accurately, or at all?) in her post. If so, it’s understandable why Amy isn’t sure whether Constance would be okay having them shared (because if she was okay, she’d have already shared them?). See this part of Amy’s reply:

FWIW, based on everything Constance writes, I think she seems like a good fit for EAG to me and, more importantly, can be extremely proud of her altruism and accomplishments (and doesn’t need validation from other EAs for that).

I’m just saying that on the particular topic of sharing reasons for the initial rejections, it seems like Amy gave an argument that’s more specific than “we never discuss reasons, ever, not even when the person herself is okay with public discussion.” And you seem to have missed that in your reply or assumed an uncharitable interpretation.

Amy’s comment was in response to Zach asking:

And I was referring to that hypothetical.

Strong agree with all of this. ‘Gaming the system’ feels like weaksauce—it’s not like there’s an algorithm evaluators have to agree to in advance, so if CEA feel someone’s responded to the letter but not spirit of their feedback, they can just reject and say that in the rejection.

I strongly disagree, [edit: deleted a sentence]. Happy to talk about this via DM, will send you a DM with my thoughts.

edit: DM sent

Please do, I’d be interested to hear your take :)

Hi Amy,

I’m still trying to figure out how to best use the comments on this forum, but I did make a reply with a clarification on what you said about me not being interested in EAGx. I just want to comment it again here to make sure that it is seen.

"I also want to mention that I am actually open to going to EAGx conferences and was just talking to Dion today about my desire to go to EAGxSingapore next year. I think I might have said I wasn't able to go to EAGxVirtual because it is the same weekend as the AVA Summit, which I am a speaker for. It might also have been that I didn't have a desire to travel so far for a conference at that time, and all the EAGx conferences that were listed on the events page would have required me to fly since I'm on the US East Coast. EAGxBoston had already passed at that point, so the only conference left on the list that would have been readily accessible to me in terms of location was EAG DC. This might have been construed as a lack of interest in attending EAGx events in general, but I assure you this is not the case. I do not have an exact memory of what was said, but hopefully this provides some clarity."

Understandably not comfortable sharing why Constance was rejected yet seemingly not at all uncomfortable with calling her a liar anyway.

Hi Amy,

I appreciate you taking the time to comment. I know you must be really busy with running EAG DC AND taking care of your child. I think it is fair to say that from our conversation I came to understand that there is a distinct reason that could be pointed to for my rejection from EAG. However, I lack the institutional trust to believe this is the only reason or that it is a good reason to support the goal of EAG "to make the world a better place." I have updated my closing thoughts to reflect this better.

I also want to mention that I am actually open to going to EAGx conferences and was just talking to Dion today about my desire to go to EAGxSingapore next year. I think I might have said I wasn't able to go to EAGxVirtual because it is the same weekend as the AVA Summit, which I am a speaker for. It might also have been that I didn't have a desire to travel so far for a conference at that time, and all the EAGx conferences that were listed on the events page would have required me to fly since I'm on the US East Coast. EAGxBoston had already passed at that point, so the only conference left on the list that would have been readily accessible to me in terms of location was EAG DC. This might have been construed as a lack of interest in attending EAGx events in general, but I assure you this is not the case. I do not have an exact memory of what was said, but hopefully this provides some clarity.

I hope the event goes smoothly, and I would be happy to give my input on any discussion around structural changes to the process for future events!

What was this distinct reason? If this was mentioned in the post, I didn’t see it.

If it wasn’t mentioned in the post, it feels disingenuous of you to not mention it and give the impression that you were left in the dark and had to come up with your own list of hypotheses. It’s quite difficult for a third party to come to any conclusions without this piece of information.

This comment feels unnecessarily combative, even though I agree with the practical point that without this piece of information, 3rd party observers can’t really get an accurate picture of the situation. So I agreed with but downvoted the comment.

I’ve edited it slightly to work on this, though it is not easy to make this point without appearing slightly callous, I think.

I haven’t read the comments and this has probably been said many times already, but it doesn’t hurt saying it again:

From what I understand, you’ve taken significant action to make the world a better place. You work in a job that does considerable good directly, and you donate your large income to help animals. That makes you a total hero in my book :-)

Thank you for those kind words! I plan to continue taking significant action to help the world become a better place. While I was hoping that attending EAG could help me in that journey, I’ve come to learn that there are many other avenues available.

I have a similar-ish story. I became an EA (and a longtermist, though I think that word did not exist back then) as a high school junior, after debating a lot of people online about ethics and binge-reading works from Nick Bostrom, Eliezer Yudkowsky and Brian Tomasik. At the time, being an EA felt so philosophically right and exhilaratingly consistent with my ethical intuitions. Since then I have almost only had friends that considered themselves EAs.

For three years (2017, 2018 and 2019) my friends recommended I apply to EA Global. I didn’t apply in 2017 because I was underage and my parents didn’t let me go, and didn’t apply in the next two years because I didn’t feel psychologically ready for a lot of social interaction (I’m extremely introverted).

Then I excitedly applied for EAG SF 2020, and got promptly rejected. And that was extremely, extremely discouraging, and played an important role in the major depressive episode I was in for two and a half years after the rejection. (Other EA-related rejections also played a role.)

I started recovering from depression after I decided to distance myself from EA. I think that was the only correct choice for me. I still care a lot about making the future go well, but have resigned myself to the fact that the only thing I can realistically do to achieve that goal is donate to longtermist charities.

I am really sorry to hear about your experience. I know how devastating rejection can feel, especially when it comes from an organization you admire and identify with so much.

I can imagine the decision felt like a judgement of who you are as a person and of your worthiness as a ‘real’ Effective Altruist. I can tell how important effective altruism and the EA movement are to your identity and sense of yourself and your purpose, and I am sure you put so much of yourself into your application. How could the decision not feel personal?

I hope you know that EA Global's (or any other EA organization's) decision not to accept your application to their conference is NOT a judgement of who you are as a person, or your intelligence, competency, or ability to do good in the world. It is especially not a judgement of your moral worth. It is not even a true judgement of your dedication to EA causes or your value as a member of the EA movement. EA Global, despite sharing the underlying mission of all things EA, to do significant good, is also just an institution, made up of a whole bunch of employees, with all the institutional priorities, institutional constraints, and institutional complexities of any institution. It is not a god, it does not see you or know you, and it does not have the authority to make any ruling regarding your true worth or value. The decision-making process is both bureaucratic and human. A binary decision from an impenetrable institution conceals all the randomness, arbitrariness, and human best-guessing that go into any human decision-making process.

No institution can be an arbiter of your worth or your value.[1] I really hope that you can internalize this. You know what your values are. You identify with the EA movement because your values are so strong and you feel so intensely driven by them. Remember that effective altruism is a philosophy and a movement, not a club.

Being an effective altruist means living your life in a way that aims to promote good in the world/universe to the extent that you are able. Qualification entails the dedication of one’s time and resources to this goal, not an invitation or acceptance letter or membership card or special club jacket. I am sure you are doing good, and I hope you can continue to do so with confidence in your worth, importance and total validity.

As a note, I am a total outsider here and can claim neither any insider knowledge of the workings of EAG nor the authority to dispense advice or tell anyone what to do, but it made me very sad to read your account and that of Constance, and writing this feels right. It didn't feel right to me that this kind of institutional decision can make such good people feel so bad and demotivated, and I just wanted to offer my personal take on why that is, in the hope that it might make you or someone in your situation feel a tiny bit better.

Besides possibly the Catholic Church or another intensely hierarchical religious institution, if you subscribe to that sort of thing.

Data point: in the three cases I know of, undergraduates with around 30–40 hours of engagement with EA ideas were accepted to EAG London 2022.

I don’t think much can be said from a small number of data points. The admissions strategy could very well be “Get a lot of super early career people together with some really experienced EAs so they can provide mentoring”, for example. And this would be a valid decision, that we could debate the merits of, and maybe find good or bad. But there’d not be an obvious “mistake” about accepting undergrads and rejecting specific other people.

Disclosure: I also got rejected from EAG London this year, but did attend the one before and an EAGx.

(This is the “pro CEA” part of my opinion—see my top level comment for the other side)

I didn’t say that CEA’s admissions process was mistaken or bad. (In fact, I don’t believe that it’s bad!) I’m just sharing what may be relevant context for others’ thought and discussion on EA conference admissions.

Sorry that your experience of this has been rough.

Some quick thoughts I had whilst reading:

There was a vague tone of "the goal is to get accepted to EAG" instead of "the goal is to make the world better," which I felt a bit uneasy about when reading the post. EAGs are only useful insofar as they let community members do better work in the real world.

Because of this, I don't feel strongly about the EAG team providing feedback to people on why they were rejected. The EAG team's goal isn't to advise on how applicants can fill up their "EA resume." It's to facilitate impactful work in the world.

I remembered a comment that I really liked from Eli: “EAG exists to make the world a better place, rather than serve the EA community or make EAs happy.”

[EDIT after 24hrs: I now think this is probably wrong, and that responses have raised valid points.] You say "[others] rely on EA grants for their projects or EA organizations for obtaining jobs and therefore may be more hesitant to directly and publicly criticize authoritative organizations like CEA." I could be wrong, but I have a pretty strong sense that nearly everyone I know with EA funding would be willing to criticise CEA if they had a good reason to. I'd be surprised if {being EA funded} decreased willingness to criticise EA orgs. I even expect the opposite to be true.

(Disclaimer that I’ve received funding from EA orgs)

Sorry that the tone of the above is harsh—I’m unsure if it’s too harsh or whether this is the appropriate space for this comment.

I've erred on the side of posting because it feels relevant and important.

I disagree; I know several people who fit this description (5 off the top of my head) who would find this very hard. I think it very much depends on factors like how well networked you are, where you live, how much funding you've received and for how long, and whether you think you could work for an org in the future.

Here’s an anonymous form where people can criticize us, in case that helps.

When people already well-respected in the community criticise something in EA, it can often be a source of prestige and a display of their own ability to think independently. But if a relative newcomer were to suggest the very same criticisms, it will often be interpreted very differently. Other aspiring EAs might intuitively classify them as “normie” rather than “EA above the pack”.

So depending on where in the local status hierarchy you find yourself, you might have very different perceptions on how risky it is for community members in general to voice contrarian opinions.

The part about newcomers doesn't reflect my experience FWIW, though my sample size is small. I published a major criticism while a relative newcomer (knew a handful of EAs, mostly online, was working as a teacher, certainly felt like I had no idea what I was doing). Though it wasn't the goal of doing so, I think that criticism ended up causing me to gain status, possibly (though it's hard to assess accurately) more status than I think I "deserved" for writing it.

[I no longer feel like a newcomer so this is a cached impression from a couple of years ago and should therefore be taken with a pinch of salt]

I disagree. If anything, EA has the problem Alexrjl hinted at: you gain too much status for criticising EA. Scott Alexander's recent post made me update in that direction.

(Sidenote: I gave your comment an upvote because I appreciate it, but an agreement downvote since I disagree. And it is just making me happy right now to see how useful explicitly separating these two voting systems can be)

FWIW, I don’t feel like a newcomer and I write a lot of contrarian (but honest) comments. I don’t generally feel like being massively downvoted gains me status. I’m often afraid I’m lowering my chances of ever getting hired by an EA org.

Hm, I understand why you say that, and you might be right (e.g., I see some signs in the OP that are compatible with this interpretation). Still, I want to point out that there's a risk of being a bit uncharitable. It seems worth saying that anyone who cares a lot about having a lot of impact should naturally try hard to get accepted to EAG (assuming that they see concrete ways to benefit from it). Therefore, the fact that someone seems to be trying hard can also be evidence that EA is very important to them. Especially when you're working on a cause area that is under-represented among EAG-attending EAs, like animal welfare, it might matter more (based on your personal moral and empirical views) to get invited.[1]

Compare the following two scenarios. If you're the 130th applicant focused on trying out AI safety research and the conference committee decides that the AI safety conversations at the conference will be more productive without you in expectation because they think other candidates are better suited, you might react to this news in a saint-like way. You might think: "Okay, at least this means others get to work on AI safety effectively, which is what matters by my understanding of doing the most good." By contrast, imagine you get rejected as an advocate for animal welfare. In that situation, you might legitimately worry that your cause area – which you could naturally think is especially important, at least according to your moral and empirical views – ends up neglected. Accordingly, the saint-like reaction of "at least the conference will be impactful without me" doesn't feel as appropriate (it might be more impactful based on other people's moral and empirical views, but not necessarily yours). (That doesn't mean that people from under-represented cause areas should be included just for the sake of better representation, nor that everyone with an empirical view that differs from what's common in EA is entitled to have their perspective validated. I'm just pointing out that we can't fault people from under-represented cause areas for thinking that it's altruistically important for them to get invited – that's what's rational when you worry that the conference wouldn't represent your cause area all that well otherwise. [Even so, I also think it's important for everyone to be understanding of others' perspectives on this. E.g., if lots of people don't share your views, you simply can't be too entitled about getting representation, because a norm that gave all rare views a lot of representation would lead to a chaotic, scattered, and low-quality conference. Besides, if your views or cause area are too uncommon, you may not benefit from the conference as much, anyway.])

I strongly agree with this. And your footnote example is also excellent. I don't see why it isn't obvious that Constance's goal of getting into EAG is merely instrumental to her larger goal of making the world a better place (primarily for animal suffering since that is what she currently seems to believe is the world's most pressing issue).

I have received EA funding in multiple capacities, and feel quite constrained in my ability to criticise CEA publicly.

I’m sorry to hear that. Here’s an anonymous form, in case that helps.

I’m aware of the form, and trying to think honestly about why I haven’t used it/don’t feel very motivated to. I think there’s a few reasons:

Simple akrasia. There's quite a long list of stuff I could say, some quite subjective, some quite dated, some quite personal and therefore awkward to raise, since criticising individuals feels uncomfortable. Figuring out which things are worth mentioning and which aren't is quite a headache.

Direct self-interest. In practice the EA world is small enough that many things I could say couldn’t be submitted anonymously without key details removed. While I do believe that CEA are generally interested in feedback, it’s difficult to believe that, with the best will in the world, if I identify individuals in particularly strong ways and they’re still at the org, it doesn’t lower my expectation of good future interactions with them.

Indirect self-interest/social interest. I like everyone I’ve interacted with from CEA. Some of them I’d consider friends. I don’t want to sour any of those relationships.

Fellow-interest. Some of the issues I could identify relate to group interactions, some of which don’t actually involve me, but that I’m reasonably confident haven’t been submitted, presumably for similar reasons. I’m especially keen not to accidentally put anyone else in the firing line.

In general I think it’s much more effective to discuss issues publicly than anonymously (as this post does) - but that magnifies all the above concerns.

Lack of confidence that submitting feedback will lead to positive change. I could get over some of the above concerns if I were confident that submitting critical feedback would do some real good, but it’s hard to have that confidence, both because CEA employees are human, and therefore have status quo bias/a general instinct to rationalise bad actions, and because, as I mentioned, some of the issues are subjective or dated, and therefore might turn out not to be relevant any more, not to be reasonable on my end, or not to be resolvable for some other reason.

I realise this isn’t helpful on an object level, but perhaps it’s useful meta-feedback. The last point gives me an idea: large EA orgs could seek out feedback actively, by e.g. posting discussion threads on their best guess about ‘things people in the community might feel bad about re us’ with minimal commentary, at least in the OPs, and see if anyone takes the bait. Many of the above concerns would disappear or at least be alleviated if it felt like I was just agreeing with a statement rather than submitting multiple whinges.

(ETA: I didn’t give you the agreement downvote, fwiw)

Thanks for sharing your reasons here! I definitely don’t think that the form fully fixes this problem, and it’s helpful to hear how it’s falling short. Some reactions to your points:

Yeah, this makes sense.

Totally makes sense. I haven’t reflected deeply about whether I should offer to keep information shared in the form confidential from other staff (currently I’m not offering this). On the one hand, this might help me to get more data. On the other hand, it seems good to be able to communicate transparently within the team, and I might be left wanting to act on information but unable to do so due to a confidentiality agreement. Maybe I should think about this more.

Again, totally makes sense.

Ditto.

I’m not so sure that it is better to discuss issues publicly—I think that it can make the discussion feel more high stakes in ways that make it harder to resolve. If you’re skeptical that we’ll act without public pressure, that seems like a reason to go public though (though I think maybe you should be less skeptical, see below).

I can see why you’d have this worry, and I think that outside-view we’re probably under-reacting to criticism a bit. FWIW, I did a quick, very rough categorization of the 18 responses I’ve got to the form so far.

I think that 2 were gibberish/spam (didn’t seem to refer to CEA or EA at all).

One was about an issue that had already been resolved by the time it was submitted.

One was generic positive feedback.

Four were several-paragraph long comments sharing some takes on EA culture/extra projects we might take on. I think that these fed into my model of what’s important in various ways, and I have taken some actions as a result, but I don’t think I can confidently say “we acted on this” or “it’s resolved”.

Eight were reasonably specific bits of feedback (e.g. on particular bits of text on our websites, or saying that we were focusing too much on a program for reasons). Of these:

I think that we’ve straightforwardly resolved 6 of these (like they suggested we change some text, and the text is now different in the way that they suggested).

One is a bigger issue (mental health support for EAs): we’ve been working on this but I wouldn’t say it’s resolved.

One was based on a premise that I disagree with (and which they didn’t really argue for), so I didn’t make any change.

Two were a bit of a mix between the longer general comments and the specific feedback above, and said in part that they didn’t trust CEA to do certain things/about certain topics. My take is that we are doing the things that these people don’t trust us to do, but they probably still disagree. I don’t expect that I’ve resolved the trust issue that these people raise.

Meta:

Obviously I might be biased in my assessment of the above, and you might not trust me.

My summary is that we’re probably pretty likely to fix specific feedback, but it’s harder to say whether we’ll respond effectively to more general feedback.

This all makes me think that maybe I should publicly respond to submissions (but also that could be a lot of work).

Thanks for the idea about writing comments that help people share their thoughts without getting into details.

Wow, thanks so much for sharing this publicly!

I also wanna give general encouragement for sharing a difficult rejection story.

Hi Evie,

I appreciate that you decided to post this.

Tone: I did worry that the tone might read like that. To me, getting into EAG was only instrumental to my greater goal of making the world a better place. I do have a tendency to focus a lot of energy on perceived barriers to efficacy, so it might have come across as if getting into EAG were my final objective. Please feel free to point out any parts of the post that give that impression and I can update them.

Making the world a better place: This is a really difficult thing to measure, and there is not a lot of transparency around how they are measuring it. Part of why I made this post was to provide more data points to answer Eli’s other question of “how costly is rejection?” That needs to be factored into calculating how much good EAG is producing. I just don’t think it is properly accounted for.

Hesitant to criticize: I would agree with Vaidehi that there are many factors to consider in how comfortable individuals are criticizing EA organizations. Just to add my own data point, a couple of people who reviewed this post were hesitant to be identified in one way or another out of concern for negative consequences in the future. In the 1–2 weeks since I found out about my rejection, I have probably talked to 15–20 EAs, and about 80% have expressed wariness about saying or doing something that would upset a large EA organization.

It seems to me completely valid to acknowledge that there is a real cost to rejection that is felt by individuals at a very personal level. Part of this cost will be the utilitarian frustration of being thwarted from taking advantage of what one imagines could be a highly effective means of furthering one’s goals (i.e. doing good), but for many people part of this cost will be the very personal hurt of rejection, and both can be felt at the same time. We are social beings with identities and values that are rooted in and affirmed by community. This is why the philosophy of effective altruism has gained traction by calling itself a community and building institutions around that. Community is important to who we are, what we believe, and what we do. If doing good is central to one’s sense of purpose and identity, and one has found in the EA community an identity and moral framework through which one can live out one’s values, then a rejection handed down by the highly respected leaders of this community will be incredibly painful on a personal level. Our goals and values are inextricably tied up with our identities and relationships.

The psychological cost of rejection is real and, I think, potentially detrimental to the greater purpose of the organization insofar as it discourages and demotivates people who are driven by a common altruistic purpose, and contributes to a wider sense of the EA community as gated and exclusionary. To the extent that one cares about these costs, I do not see the gain in refusing to acknowledge that the psychological toll of rejection is real, is valid, and is intrinsically bound up with any more purely instrumental costs.

I roughly agree with these.

I’m not sure about this. I expect people relying on EA grants are reluctant to criticize authoritative orgs like CEA, particularly publicly and non-anonymously. I’d guess that they’re more reluctant than people not on EA grants, relative to the amount of useful criticism they could provide.

I think the first point is subtly wrong in an important way.

EAGs are not only useful in so far as they let community members do better work in the real world. EAGs are useful insofar as they result in a better world coming to be.

One way in which EAGs might make the world better is by fostering a sense of community, validation, and inclusion among those who have committed themselves to EA, thus motivating people to so commit themselves and to maintain such commitments. This function doesn’t bear on “letting” people do better work per se.

Insofar as this goal is an important component of EAG’s impact, it should be prioritized alongside more direct effects of the conference. EAG obviously exists to make the world a better place, but serving the EA community and making EAs happy is an important way in which EAG accomplishes this goal.

EDIT: Lukas Gloor does a much better job than me at getting across everything I wanted to in this comment here

From my reading, her goal is not simply to get into EAG. It seems obvious to me that her goal of getting into EAG is instrumental to the end of making the world a better place. The crux is not “Constance just wants to get into EAG.” I think the crux is that Constance believes she can make the world a much better place by connecting with people at EAG, and the CEA does not appear to believe this to be the case.

The crux should be the focus. Focusing on how badly she wants to get into EAG is a distraction.

For many EAs you cannot have a well-run conference that makes the world a better place without it also being a place that makes many EAs very happy. I’d think the two goals are synonymous for a great many EAs.

In their comment Eli says:

Let’s also remember that EAs who get rejected from EAG and believe their rejection made the world a worse place overall will also be sad, probably more so, because they get both the FOMO and a deeper moral sting. In fact, they might be so sad that it motivates them to write an EA Forum post about it in the hopes of making sure that the CEA didn’t make a mistake.

I like Eli’s comment. It captures something important. But I also dislike it, because it can provide a false sense of clarity, separating goals that aren’t actually always that separate, and this false clarity can become a basis for motivated reasoning that makes it easier to believe the EAG admission process didn’t make a mistake and make the world a worse place. Why? Because it makes it easier to dismiss an EA who is very sad about being rejected from EAG as just someone who “wants to get into EAG.”

I’m wary of this claim. Obviously in some top level sense it’s true, but it seems reminiscent of the paradox of hedonism, in that I can easily believe that if you consciously optimise events for abstract good-maximisation, you end up maximising good less than if you optimise them for the health of a community of do-gooders.

(I’m not saying this is a case for or against admitting the OP—it’s just my reaction to your reaction)

Thank you for sharing a very personal story, I think it will lead to even more improvements to the overall process and community health.

As someone that was accepted to EAG with basically no EA accomplishments, I do strongly believe that admissions are not about “worthiness” but about “how much would going to EAG help you or others do the most good”.

Looking at the application, as a random nobody, I personally agree with the CEA decision (even ignoring the fact that they probably have a lot more information).

Not sure about discussing why in a comment, but happy to do it if someone would find it useful.

Edit: I really liked this paragraph

And the fact that you actually found a solution/improvement (the gather town space) to solve your needs, I wish I had more of this mindset!

A number of people have asked me whether I gave Constance permission to post a selection of my private Facebook communications and my email/the events team’s emails as part of this Forum post. I did not. I felt a bit uncomfortable with this, but I also did not ask her to take them down.

I saw that she had some suggestions for how I could improve my messages and the emails from me and the rest of the events team in the redline comments, and I agree some of her suggestions would have been improvements.

Amy, I knew this would be at least a bit uncomfortable for you. I tried to minimize that through anonymizing your identity in the screenshots and sharing the google doc draft with you the night before I posted it.

Ultimately, I was very disappointed in the quality of communication around the application decision-making process. When it was made clear that there would be no further opportunity to discuss the reason for my rejection privately, I decided to make a public post. My primary goal is to increase transparency and reduce the likelihood that other rejected applicants would have a negative experience. I thought the screenshots would be necessary to do that, but didn’t think your identity was necessary for it. I did see that you identified yourself in the comments shortly after the post went up, so, for what it’s worth, I appreciate your sense of accountability.

I’m honestly confused and surprised you got rejected, based on reading your linked application. I would probably have found it valuable to talk to you at a conference like this, for insights into how you do what you do, because you clearly do some of it well.