GWWC lists StrongMinds as a “top-rated” charity. Their reason for doing so is because Founders Pledge has determined they are cost-effective in their report into mental health.

I could say here, “and that report was written in 2019 - either they should update the report or remove the top rating” and we could all go home. In fact, most of what I’m about to say does consist of “the data really isn’t that clear yet”.

I think the strongest statement I can make (which I doubt StrongMinds would disagree with) is:

“StrongMinds have made limited effort to be quantitative in their self-evaluation, haven’t continued monitoring impact after intervention, haven’t done the research they once claimed they would. They have not been vetted sufficiently to be considered a top charity, and only one independent group has done the work to look into them.”

My key issues are:

Survey data is notoriously noisy and the data here seems to be especially so

There are reasons to be especially doubtful about the accuracy of the survey data (StrongMinds have twice updated their level of uncertainty in their numbers due to SDB)

One of the main models is (to my eyes) off by a factor of ~2 based on an unrealistic assumption about depression (medium confidence)

StrongMinds haven’t continued to publish new data since their trials very early on

StrongMinds seem to be somewhat deceptive about how they market themselves as “effective” (and EA are playing into that by holding them in such high esteem without scrutiny)

What’s going on with the PHQ-9 scores?

In their last four quarterly reports, StrongMinds have reported PHQ-9 reductions of: −13, −13, −13, −13. In their Phase II report, raw scores dropped by a similar amount:

However, their Phase II analysis reports (emphasis theirs):

As evidenced in Table 5, members in the treatment intervention group, on average, had a 4.5 point reduction in their total PHQ-9 Raw Score over the intervention period, as compared to the control populations. Further, there is also a significant visit effect when controlling for group membership. The PHQ-9 Raw Score decreased on average by 0.86 points for a participant for every two groups she attended. Both of these findings are statistically significant.

Founders Pledge’s cost-effectivenes model uses the 4.5 reduction number in their model. (And further reduces this for reasons we’ll get into later).

Based on Phase I and II surveys, it seems to me that a much more cost-effective intervention would be to go around surveying people. I’m not exactly sure what’s going on with the Phase I / Phase II data, but the best I can tell is in Phase I we had a ~7.5 vs ~5.1 PHQ-9 reduction from “being surveyed” vs “being part of the group” and in Phase II we had ~3.0 vs ~7.1 PHQ-9 reduction from “being surveyed” vs “being part of the group”. [an earlier version of this post used the numbers ‘~5.1 vs ~4.5 PHQ-9’ but Natalia pointed out the error in this comment] For what it’s worth, I don’t believe this is likely the case, I think it’s just a strong sign that the survey mechanism being used is inadequate to determine what is going on.

There are a number of potential reasons we might expect to see such large improvements in the mental health of the control group (as well as the treatment group).

Mean-reversion—StrongMinds happens to sample people at a low ebb and so the progression of time leads their mental health to improve of its own accord

“People in targeted communities often incorrectly believe that StrongMinds will provide them with cash or material goods and may therefore provide misleading responses when being diagnosed.” (source) Potential participants fake their initial scores in order to get into the program (either because they (mistakenly) think there is some material benefit to being in the program or because they think it makes them more likely to get into a program they think would have value for them.

What’s going on with the ‘social-desirability bias’?

Both the Phase I and Phase II trials discovered that 97% and 99% of their patients were “depression-free” after the trial. They realised that these numbers were inaccurate during their Phase II trial. They decided on the basis of this, to reduce their numbers from 99% in Phase II to 92% on the basis of the results two weeks prior to the end.

In their follow-up study of Phases I and II, they then say:

While both the Phase 1 and 2 patients had 95% depression-free rates at the completion of formal sessions, our Impact Evaluation reports and subsequent experience has helped us to understand that those rates were somewhat inflated by social desirability bias, roughly by a factor of approximately ten percentage points. This was due to the fact that their Mental Health Facilitator administered the PHQ-9 at the conclusion of therapy. StrongMinds now uses external data collectors to conduct the post-treatment evaluations. Thus, for effective purposes, StrongMinds believes the actual depression-free rates for Phase 1 and 2 to be more in the range of 85%.

I would agree with StrongMinds that they still had social-desirability bias in their Phase I and II reports, although it’s not clear to me they have fully removed it now. This also relates to my earlier point about how much improvement we see in the control group. If pre-treatment are showing too high levels of depression and the post-treatment group is too low how confident should we be in the magnitude of these effects?

How bad is depression?

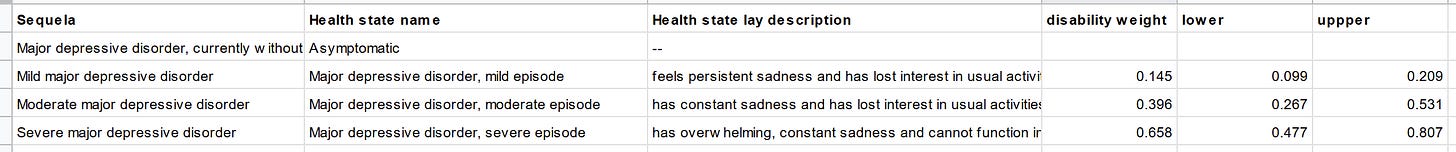

Severe depression has a DALY weighting of 0.66.

(Founders Pledge report, via Global Burden of Disease Disability Weights)

The key section of the Disability Weights table reads as follows:

My understanding (based on the lay descriptions, IANAD etc) is that “severe depression” is not quite the right way to describe the thing which has a DALY weighting of 0.66. “severe depression during an episode has a DALY weighting of 0.66” would be more accurate.

Assuming linear decline in severity on the PHQ-9 scale.

(Founders Pledge model)

Furthermore whilst the disability weights are linear between “mild”, “moderate” and “severe” the threshold for “mild” in PHQ-9 terms is not ~1/3 of the way up the scale. Therefore there is a much smaller change in disability weight for going 12 points from 12 − 0 than for 24-12. (One takes you from ~mild to asymptomatic ~.15 and one takes you from “severe episode” to “mild episode” ~0.51 which is a much larger change).

This change would roughly halve the effectiveness of the intervention, using the Founders Pledge model.

Lack of data

My biggest gripe with StrongMinds is they haven’t continued to provide follow-up analysis for any of their cohorts (aside from Phase I and II) despite saying they would in their 2017 report:

Looking forward, StrongMinds will continue to strengthen our evaluation efforts and will continue to follow up with patients at 6 or 12 month intervals. We also remain committed to implementing a much more rigorous study, in the form of an externally-led, longitudinal randomized control trial, in the coming years.

As far as I can tell, based on their conversation with GiveWell:

StrongMinds has decided not pursue a randomized controlled trial (RCT) of its program in the short term, due to:

High costs – Global funding for mental health interventions is highly limited, and StrongMinds estimates that a sufficiently large RCT of its program would cost $750,000 to $1 million.

Sufficient existing evidence – An RCT conducted in 2002 in Uganda found that weekly IPT-G significantly reduced depression among participants in the treatment group. Additionally, in October 2018, StrongMinds initiated a study of its program in Uganda with 200 control group participants (to be compared with program beneficiaries)—which has demonstrated strong program impact. The study is scheduled to conclude in October 2019.

Sufficient credibility of intervention and organization – In 2017, WHO formally recommended IPT-G as first line treatment for depression in low- and middle-income countries. Furthermore, the woman responsible for developing IPT-G and the woman who conducted the 2002 RCT on IPT-G both serve as mental health advisors on StrongMinds’ advisory committee.

I don’t agree with any of the bullet points. (Aside from the first, although I think there should be ways to publish more data within the context of their current data).

On the bright side(!) as far as I can tell, we should be seeing new data soon. StrongMinds and Berk Ozler should have finished collecting their data for a larger RCT on StrongMinds. It’s a shame it’s not a direct comparison between cash transfers and IPT-G, (the arms are: IPT-G, IPT-G + cash transfers, no-intervention) but it will still be very valuable data for evaluating them.

Misleading?

(from the StrongMinds homepage)

This implies Charity Navigator thinks they are one of the world’s most effective charities. But in fact Charity Navigator haven’t evaluated them for “Impact & Results”.

WHO: There’s no external validation here (afaict). They just use StrongMinds own numbers and talk around the charity a bit.

I’m going to leave aside discussing HLI here. Whilst I think they have some of the deepest analysis of StrongMinds, I am still confused by some of their methodology, it’s not clear to me what their relationship to StrongMinds is. I plan on going into more detail there in future posts. The key thing to understand about the HLI methodology is that follows the same structure as the Founders Pledge analysis and so all the problems I mention above regarding data apply just as much to them as FP.

The “Inciting Altruism” profile, well, read it for yourself.

Founders Pledge is the only independent report I’ve found—and is discussed throughout this article.

GiveWell Staff Members personal donations:

I plan to give 5% of my total giving to StrongMinds, an organization focused on treating depression in Africa. I have not vetted this organization anywhere nearly as closely as GiveWell’s top charities have been vetted, though I understand that a number of people in the effective altruism community have a positive view of StrongMinds within the cause area of mental health (though I don’t have any reason to think it is more cost-effective than GiveWell’s top charities). Intuitively, I believe mental health is an important cause area for donors to consider, and although we do not have GiveWell recommendations in this space, I would like to learn more about this area by making a relatively small donation to an organization that focuses on it.

This is not external validation.

The EA Forum post is also another HLI piece.

I don’t have access to the Stanford piece, it’s paywalled.

Another example of them being misleading is in all their reports they report the headline PHQ-9 reduction numbers, but everyone involve knows (I hope) that those aren’t really a relevant metric without understanding the counterfactual reduction they actually think is happening. It’s either a vanity metric or a bit deceptive.

Conclusion

What I would like to happen is:

Founders Pledge update or withdraw their recommendation of StrongMinds

GWWC remove StrongMinds as a top charity

Ozler’s study comes out saying it’s super effective

Everyone reinstates StrongMinds as a top charity, including some evaluators who haven’t done so thus far

Hi Simon, thanks for writing this! I’m research director at FP, and have a few bullets to comment here in response, but overall just want to indicate that this post is very valuable. I’m also commenting on my phone and don’t have access to my computer at the moment, but can participate in this conversation more energetically (and provide more detail) when I’m back at work next week.

I basically agree with what I take to be your topline finding here, which is that more data is needed before we can arrive at GiveWell-tier levels of confidence about StrongMinds. I agree that a lack of recent follow-ups is problematic from an evaluator’s standpoint and look forward to updated data.

FP doesn’t generally strive for GW-tier levels of confidence; we’re risk-neutral and our general procedure is to estimate expected cost-effectiveness inclusive of deflators for various kinds of subjective consideration, like social desirability bias.

The 2019 report you link (and the associated CEA) is deprecated— FP hasn’t been resourced to update public-facing materials, a situation that is now changing—but the proviso at the top of the page is accurate: we stand by our recommendation.

This is because we re-evaluated StrongMinds last year based on HLI’s research. The new evaluation did things a little bit differently than the old one. Instead of attempting to linearize the relationship between PHQ-9 score reductions and disability weights, we converted the estimated treatment effect into WELLBY-SDs by program and area of operation, an elaboration made possible by HLI’s careful work and using their estimated effect sizes. I reviewed their methodology and was ultimately very satisfied— I expect you may want to dig into this in more detail. This also ultimately allows the direct comparison with cash transfers.

I pressure-tested this a few ways: by comparing with the linearized-DALY approach from our first evaluation, from treating the pre-post data as evidence in a Bayesian update on conservative priors, and by modeling the observed shifts using the assumption of an exponential distribution in PHQ-9 scores (which appears in some literature on the topic). All these methods made the intervention look pretty cost-effective.

Crucially, FP’s bar for recommendation to members is GiveDirectly, and we estimate StrongMinds at roughly 6x GD. So even by our (risk-neutral) lights, StrongMinds is not competitive with GiveWell top charities.

A key piece of evidence is the 2002 RCT on which the program is based. I do think a lot—perhaps too much—hinges on this RCT. Ultimately, however, I think it is the most relevant way to develop a prior on the effectiveness of IPT-g in the appropriate context, which is why a Bayesian update on the pre-post data seems so optimistic: the observed effects are in line with the effect size from the RCT.

The effect sizes observed are very large, but it’s important to place in the context of StrongMinds’ work with severely traumatized populations. Incoming PHQ-9 scores are very, very high, so I think 1) it’s reasonable to expect some reversion to the mean in control groups, which we do see and 2) I’m not sure that our general priors about the low effectiveness of therapeutic interventions are likely to be well-calibrated here.

Overall, I agree that social desirability bias is a very cruxy issue here, and could bear on our rating of StrongMinds. But I think even VERY conservative assumptions about the role of this bias in the effect estimate would cause SM still to clear our bar in expectation.

Just a note on FP and our public-facing research: we’re in the position of prioritizing our research resources primarily to make recommendations to members, but at the same time we’re trying to do our best to provide a public good to the public and the EA community. I think we’re still not sure how to do this, and definitely not sure how to resource for it. We are working on this.

The page doesn’t say deprecated and GWWC are still linking to it and recommending it as a top charity. I do think your statements here should be enough for GWWC to remove them as a top charity.

This is what triggered the whole thing in the first place—I have had doubts about StrongMinds for a long time (I privately shared doubts with many EAs ~a year ago) but I didn’t think it was considered a top charity and think it’s a generally “fine” charity and we should collect more data in the area. Sam Atis’ blog led me to see it was considered a top charity, and that was what finally tipped me over the edge.

I didn’t find this on your website. I have looked into the WELLBY-SDs—I have serious doubts about it and I’m working on a blog explaining why, but I don’t feel confident enough yet explaining publicly why I think they aren’t the right metric. Hopefully this is coming soon. (Although I don’t think the transform really makes much difference to the outcome—this is a GIGO issue, not a modelling issue).

I haven’t seen this model, so I can’t comment. (Is it public somewhere?). I think it’s possible but unlikely it’s 6x more effective although my guess at the moment is that it’s less than 1x as effective.

That isn’t obvious to me, and I would welcome you publishing that analysis.

I think my main takeaway is my first one here. GWWC shouldn’t be using your recommendations to label things top charities. Would you disagree with that?

“I think my main takeaway is my first one here. GWWC shouldn’t be using your recommendations to label things top charities. Would you disagree with that?”

Yes, I think so- I’m not sure why this should be the case. Different evaluators have different standards of evidence, and GWWC is using ours for this particular recommendation. They reviewed our reasoning and (I gather) were satisfied. As someone else said in the comments, the right reference class here is probably deworming— “big if true.”

The message on the report says that some details have changed, but that our overall view is represented. That’s accurate, though there are some details that are more out of date than others. We don’t want to just remove old research, but I’m open to the idea that this warning should be more descriptive.

I’ll have to wait til next week to address more substantive questions but it seems to me that the recommend/don’t recommend question is most cruxy here.

EDIT:

On reflection, it also seems cruxy that our current evaluation isn’t yet public. This seems very fair to me, and I’d be very curious to hear GWWC’s take. We would like to make all evaluation materials public eventually, but this is not as simple as it might seem and especially hard given our orientation toward member giving.

Though this type of interaction is not ideal for me, it seems better for the community. If they can’t be totally public, I’d rather our recs be semi-public and subject to critique than totally private.

I’m afraid that doesn’t make me super impressed with GWWC, and it’s not easy for non-public reasoning to be debunked. Hopefully you’ll publish it and we can see where we disagree.

I think there’s a big difference between deworming and StrongMinds.

Our priors should tell us that “removing harmful parasites substantially improves peoples lives and can be done very cheaply” whereas our priors should also tell us (at least after a small amount of research) “treating severe depression is exceptionally difficult and costly”

If “big if true” is the story then it becomes extremely important to be doing high quality research to find out if it’s true. My impression (again from the outside) is that this isn’t happening with StrongMinds, and all indications seem to point towards them being extremely avoidant of any serious data analysis.

“big if true” might be a good analogy, but if that’s the case StrongMinds needs to be going in a very different direction than what they appear (again from the outside) to be doing.

I agree the recommend / don’t recommend is my contention in this post. I would love to hear GWWC’s reasoning to see why are happy with their recommendation.

Simon, I loved your post!

But I think this particular point is a bit unfair to GWWC and also just factually inaccurate.

For a start GWWC do not “recommend” Strong Minds. They very clearly recommend giving to an expert-managed Fund where an expert grantmaker can distribute the money and they do not recommend giving StrongMinds (or to Deworm the World, or AMF, etc). They say that repeatedly across their website, e.g. here. They then also have some charities that they class as “top rated” which they very clearly say are charities that have been “top rated” by another independent organisation that GWWC trusts.

I think this makes sense. Lets consider GWWC’s goals here. GWWC exist to serve and grow its community of donors. I expect that maintaining a broad list of charities on their website across cause areas and providing a convenient donation platform for those charities is the right call for GWWC to achieve those goals, even if some of those charities are less proven. Personally as a GWWC member I very much appreciate they have such a broad a variety of charities (e.g., this year, I donated to one of ACE’s standout charities and it was great to be able to do so on the GWWC page.) Note again this is a listing for donors convenience and not an active recommendation.

My other though is that GWWC has a tiny and very new research team. So this approach of list all the FP “top rated” charities makes sense to me. Although I do hope that they can grow their team and take more of a role doing research like your critique and evaluating the evaluators / the Funds.

(Note on conflicts of interest: Some what tangential but for transparency I have a role at a different FP recommended charity so this could affect me.)

I suspect this is a reading comprehension thing which I am failing at (I know I have failed at this in the past) but I think there are roughly two ways in which GWWC is either explicitly or implicitly recommending StrongMinds.

Firstly, by labelling it as a “Top Charity” then to all but the most careful reader (and even a careful reader) will see this as some kind of endorsement or “recomendation” to use words at least somewhat sloppily.

Secondly, it does explicitly recommend StrongMinds:

Their #1 recommendation is “Donate to expert-managed funds” and their #2 recommendation is “Donate to charities recommended by trusted charity evaluators”. They say:

At the top of that section, so it is clear to me the recommendation extends across the whole section. I agree with you they advise expert-managed funds above StrongMinds, but I don’t think that’s the same.

I also think it makes sense. My only complaint with GWWC is they aren’t open about what the process is for accepting a recommendation from a trusted advisor and their process doesn’t include some explicit “public details of the recommendation”. (The point is made most eloquently by Jeff here.

I think you are conflating two things here. One is GWWC listing a charity which you can donate to. Another is labelling it as “Top-rated”. For example, I can donate to GiveDirectly, SCI, Deworm the World via GWWC, but none of those are labeled as “Top-rated”:

(Note that StrongMinds is listed 4th in that category, on the very first page)

All I’m asking is for GWWC to remove the “Top-rated” label.

It might not be an active recommendation (although as I pointed out, I believe it is) but it’s clear an implicit recommendation.

All the more reason to have some simple rules for what is included. Allow recommendations to go out of date and don’t recommend things where the reasoning isn’t public. None of those rules are especially arduous for a small team to maintain.

Oh dear, no my bad. I didn’t at all realise “top rated” was a label they applied to Strong Minds but not to Give Directly and SCI and other listed charities, and thought you were suggesting StrongMinds be delisted from the site. I still think it makes sense for GWWC to (so far) be trusting other research orgs, and I do think they have acted sensibly (although have room to grow in providing a checks and balance). But I also seemed to have misundestood your point somewhat, so sorry about that.

I agree that beforemy post GWWC hadn’t done anything wrong.

At this point I think that GWWC should be able to see that their current process for labelling top-rated charities is not optimal and they should be changing it. Once they do that I would fully expect that label to disappear.

I’m disappointed that they don’t seem to agree with me, and seem to think that no immediate action is required. Obviously that says more about my powers of persuasion than them though, and I expect once they get back to work tomorrow and they actually look in more detail they change their process.

Hi Simon,

I’m back to work and able to reply with a bit more detail now (though also time-constrained as we have a lot of other important work to do this new year :)).

I still do not think any (immediate) action on our part is required. Let me lay out the reasons why:

(1) Our full process and criteria are explained here. As you seem to agree with from your comment above we need clear and simple rules for what is and what isn’t included (incl. because we have a very small team and need to prioritize). Currently a very brief summary of these rules/the process would be: first determine which evaluators to rely on (also note our plans for this year) and then rely on their recommendations. We do not generally have the capacity to review individual charity evaluations, and would only do so and potentially diverge from a trusted evaluator’s recommendation under exceptional circumstances. (I don’t believe we have had such a circumstance this giving season, but may misremember)

(2) There were no strong reasons to diverge with respect to FP’s recommendation of StrongMinds at the time they recommended them—or to do an in-depth review of FP’s evaluation ourselves—and I think there still aren’t. As I said before, you make a few useful points in your post but I think Matt’s reaction and the subsequent discussion satisfactorily explain why Founders Pledge chose to recommend StrongMinds and why your comments don’t (immediately) change their view on this: StrongMinds doesn’t need to meet GiveWell-tier levels of confidence and easily clears FP’s bar in expectation—even with the issues you mention having been taken into account—and nearly all the decision-relevant reasoning is already available publicly in the 2019 report and HLI’s recent review. I would of course be very interested and we could reconsider our view if any ongoing discussion brings to light new arguments or if FP is unable to back up any claims they made, but so far I haven’t seen any red or even orange flags.

(3) The above should be enough for GWWC to not prioritize taking any action related to StrongMinds at the moment, but I happen to have a bit more context here than usual as I was a co-author on the 2019 FP report on StrongMinds, and none of the five issues you raise are a surprise/new to me or change my view of StrongMinds very much. Very briefly on each (note: I don’t have much time / will mostly leave this to Matt / some of my knowledge may be outdated or my memory may be off):

I agree the overall quality of evidence is far short from e.g. GiveWell’s standards (cf. Matt’s comments—and would have agreed on this back in 2019. At this point, I certainly wouldn’t take FP’s 2019 cost-effectiveness analysis literally: I would deflate the results by quite a bit to account for quality of evidence, and I know FP have done so internally for the past ~2 years at least. However, AFAIK such accounting—done reasonably—isn’t enough to change the overall conclusion of StrongMinds meeting the cost-effectiveness bar in wellbeing terms. I should also note that HLI’s cost-effectiveness analysis seems to take into account more pieces of evidence, though I haven’t reviewed it; just skimmed it.

As you say yourself, The 2019 FP report already accounted for social desirability bias to some extent, and it further highlights this bias as one of its key uncertainties (section 3.8, p.31).

I disagree with depression being overweighted here for various reasons, including that DALYs plausibly underweight mental health (see section 1, p.8-9 of the FP mental health report. Also note that HLI’s recent analysis—AFAIK—doesn’t rely on DALY’s in any way.

I don’t think the reasons StrongMinds mention for not collecting more evidence (than they already are) are as unreasonable as you seem to think. I’d need to delve more into the specifics to form a view here, but just want to reiterate StrongMinds’s first reason that running high-quality studies is generally very expensive, and may often not be the best decision for a charity from a cost-effectiveness standpoint. Even though I think the sector as a whole could probably still do with more (of the right type of) evidence generation, from my experience I would guess it’s also relatively common charities collect more evidence (of the wrong kind) than would be optimal.

I don’t like what I see in at least some of the examples of communication you give—and if I were evaluating StrongMinds currently I would certainly want to give them this feedback (in fact I believe I did back in 2018, which I think prompted them to make some changes). However, though I’d agree that these provide some update on how thoroughly one should check claims StrongMinds makes more generally, I don’t think they should meaningfully change one’s view on the cost-effectiveness of StrongMinds’s core work.

(4) Jeff suggested (and some others seem to like) the idea of GWWC changing its inclusion criteria and only recommending/top-rating organisations for which an up-to-date public evaluation is available. This is something we discussed internally in the lead-up to this giving season, but we decided against it and I still feel that was and is the right decision (though I am open to further discussion/arguments):

There are only very few charities for which full public and up-to-date evaluations are available, and coverage for some worldviews/promising cause areas is structurally missing. In particular, there are currently hardly any full public and up-to-date evaluations in the mental health/subjective well-being, longtermist and “meta” spaces. And note that - by this standard—we wouldn’t be able to recommend any funds except for those just regranting to already-established recommendations.

If the main reason for this was that we don’t know of any cost-effective places to donate in these areas/according to these worldviews, I would have agreed that we should just go with what we know or at least highlight that standards are much lower in these areas.

However, I don’t think this is the case: we do have various evaluators/grantmakers looking into these areas (though too few yet IMO!) and arguably identifying very cost-effective donation opportunities (in expectation), but they often don’t prioritise sharing these findings publicly or updating public evaluations regularly. Having worked at one of those myself (FP), my impression is this is generally for very good reasons, mainly related to resource constraints/prioritisation as Jeff notes himself.

In an ideal world—where these resource constraints wouldn’t exist—GWWC would only recommend charities for which public, up-to-date evaluations are available. However, we do not live in that ideal world, and as our goal is primarily to provide guidance on what are the best places to give to according to a variety of worldviews, rather than what are the best explainable/publicly documented places to give, I think the current policy is the way to go.

Obviously it is very important that we are transparent about this, which we aim to do by clearly documenting our inclusion criteria, explaining why we rely on our trusted evaluators, and highlighting the evidence that is publicly available for each individual charity. Providing this transparency has been a major focus for us this giving season, and though I think we’ve made major steps in the right direction there’s probably still room for improvement: any feedback is very welcome!

Note that one reason why more public evaluations would seem to be good/necessary is accountability: donors can check and give feedback on the quality of evaluations, providing the right incentives and useful information to evaluators. This sounds great in theory, but in my experience public evaluation reports are almost never read by donors (this post is an exception, which is why I’m so happy with it, even though I don’t agree with the author’s conclusions), and they are a very high resource cost to create and maintain—in my experience writing a public report can take up about half of the total time spent on an evaluation (!). This leaves us with an accountability and transparency problem that I think is real, and which is one of the main reasons for our planned research direction this year at GWWC.

Lastly, FWIW I agree that we actively recommend StrongMinds (and this is our intention), even though we generally recommend donors to give to funds over individual charities.

I believe this covers (nearly) all of the GWWC-related comments I’ve seen here, but please let me know if I’ve missed anything!

This is an excellent response from a transparency standpoint, and increases my confidence in GWWC even though I don’t agree with everything in it.

One interesting topic for a different discussion—although not really relevant to GWWC’s work—is the extent to which recommenders should condition an organization’s continued recommendation status on obtaining better data if the organization grows (or even after a suitable period of time). Among other things, I’m concerned that allowing recommendations that were appropriate under criteria appropriate for a small/mid-size organization to be affirmed on the same evidence as an organization grows could disincentivize organizations from commissioning RCTs where appropriate. As relevant here, my take on an organization not having a better RCT is significantly different in the context of an organization with about $2MM a year in room for funding (which was the situation when FP made the recommendation, p. 31 here) than one that is seeking to raise $20MM over the next two years.

Thanks for the response!

FWIW I’m not asking for immediate action, but a reconsideration of the criteria for endorsing a recommendation from a trusted evaluator.

I’m not proposing changing your approach to recommending funds, but for recommending charities. In cases where a field has only non-public or stale evaluations then fund managers are still in a position to consider non-public information and the general state of the field, check in with evaluators about what kind of stale the current evaluations are at, etc. And in these cases I think the best you can do is say that this is a field where GWWC currently doesn’t have any recommendations for specific charities, and only recommends giving via funds.

I wasn’t suggesting you were, but Simon certainly was. Sorry if that wasn’t clear.

As GWWC gets its recommendations and information directly from evaluators (and aims to update its recommendations regularly), I don’t see a meaningful difference here between funds vs charities in fields where there are public up-to-date evaluations and where there aren’t: in both cases, GWWC would recommend giving to funds over charities, and in both cases we can also highlight the charities that seem to be the most cost-effective donation opportunities based on the latest views of evaluators. GWWC provides a value-add to donors here, given some of these recommendations wouldn’t be available to them otherwise (and many donors probably still prefer to donate to charities over donating to funds / might not donate otherwise).

Sorry, yes, I forgot your comment was primarily a response to Simon!

I’m generally comfortable donating via funds, but this requires a large degree of trust in the fund managers. I’m saying that I trust them to make decisions in line with the fund objectives, often without making their reasoning public. The biggest advantage I see to GWWC continuing to recommend specific charities is that it supports people who don’t have that level of trust in directing their money well. This doesn’t work without recommendations being backed by public current evaluations: if it just turns into “GWWC has internal reasons to trust FP which has internal reasons to recommend SM” then this advantage for these donors is lost.

Note that this doesn’t require that most donors read the public evaluations: these lower-trust donors still (rightly!) understand that their chances of being seriously misled are much lower if an evaluator has written up a public case like this.

So in fields where there are public up-to-date evaluations I think it’s good for GWWC to recommend funds, with individual charities as a fallback. But in fields where there aren’t, I think GWWC should recommend funds only.

What to do about people who can’t donate to funds is a tricky case. I think what I’d like to see is funds saying something like, if you want to support our work the best thing is to give to the fund, but the second best is to support orgs X, Y, Z. This recommendation wouldn’t be based on a public evaluation, but just on your trust in them as a funder.

I especially think it’s important to separate when someone would be happy giving to a fund if not for the tax etc consequences vs when someone wants the trust/public/epistemic/etc benefits of donating to a specific charity based on a public case.

I think trust is one of the reasons why a donor may or may not decide to give to a fund over a charity, but there are others as well, e.g. a preference for supporting more specific causes or projects. I expect donors with these other reasons (who trust evaluators/fund managers but would still prefer to give to individual charities (as well)) will value charity recommendations in areas for which there are no public and up-to-date evaluations available.

Note that this is basically equivalent to the current situation: we recommend funds over charities but highlight supporting charities as the second-best thing, based on recommendations of evaluators (who are often also fund managers in their area).

Thinking more, other situations in which a donor might want to donate to specific charities despite trusting the grantmaker’s judgement include:

Preference adjustments. Perhaps you agree with a fund in general, but you think they value averting deaths too highly relative to improving already existing lives. By donating to one of the charities they typically fund that focuses on the latter you might shift the distribution of funds in that direction. Or maybe not; your donation also has the effect of decreasing how much additional funding the charity needs, and the fund might allocate more elsewhere.

Ops skepticism. When you donate through a fund, in addition to trusting the grantmakers to make good decisions you’re also trusting the fund’s operations staff to handle the money properly and that your money won’t be caught up in unrelated legal trouble. Donating directly to a charity avoids these risks.

Yeah agreed. And another one could be as a way of getting involved more closely with a particularly charity when one wants to provide other types of support (advice, connections) in addition to funding. E.g. even though I don’t think this should help a lot, I’ve anecdotally found it helpful to fund individual charities that I advise, because putting my personal donation money on the line motivates me to think even more critically about how the charity could best use its limited resources.

Thanks again for engaging in this discussion so thoughtfully Jeff! These types of comments and suggestions are generally very helpful for us (even if I don’t agree with these particular ones).

Fair enough. I think one important thing to highlight here is that though the details of our analysis have changed since 2019, the broad strokes haven’t — that is to say, the evidence is largely the same and the transformation used (DALY vs WELLBY), for instance, is not super consequential for the rating.

The situation is one, as you say, of GIGO (though we think the input is not garbage) and the main material question is about the estimated effect size. We rely on HLI’s estimate, the methodology for which is public.

I think your (2) is not totally fair to StrongMinds, given the Ozler RCT. No matter how it turns out, it will have a big impact on our next reevaluation of StrongMinds.

Edit: To be clearer, we shared updated reasoning with GWWC but the 2019 report they link, though deprecated, still includes most of the key considerations for critics, as evidenced by your observations here, which remain relevant. That is, if you were skeptical of the primary evidence on SM, our new evaluation would not cause you to update to the other side of the cost-effectiveness bar (though it might mitigate less consequential concerns about e.g. disability weights).

And with deworming, there are stronger reasons to be willing to make moderately significant funding decisions on medium-quality evidence: another RCT would cost a lot and might not move the needle that much due to the complexity of capturing/measuring the outcomes there, while it sounds like a well-designed RCT here would be in the ~ $1MM range and could move the needle quite a bit (potentially in either direction from where I think the evidence base is currently).

Thanks for this! Useful to get some insight into the FP thought process here.

(emphasis added)

Minor nitpick (I haven’t personally read FP’s analysis / work on this):

Appendix C (pg 31) details the recruitment process, where they teach locals about what depression is prior to recruitment. The group they sample from are groups engaging in some form of livelihood / microfinance programmes, such as hairdressers. Other groups include churches and people at public health clinic wait areas. It’s not clear to me based on that description that we should take at face value that the reason for very very high incoming PHQ-9 scores is that these groups are “severely traumatised” (though it’s clearly a possibility!)

RE: priors about low effectiveness of therapeutic interventions—if the group is severely traumatised, then while I agree this might make us feel less skeptical about the astounding effect size, it should also make us more skeptical about the high success rates, unless we have reason to believe that severe depression in severely traumatised populations in this context is easier to treat than moderate / mild depression.

Thank you for linking to that appendix describing the recruitment process. Could the initial high scores be driven by demand effects from SM recruiters describing depression symptoms and then administering the PHQ-9 questionnaire? This process of SM recruiters describing symptoms to participants before administering the tests seems reminiscent of old social psychology experiments (e.g. power posing being driven in part by demand effects).

No worries! Yeah, I think that’s definitely plausible, as is something like this (“People in targeted communities often incorrectly believe that StrongMinds will provide them with cash or material goods and may therefore provide misleading responses when being diagnosed”). See this comment for another perspective.

I think the main point I was making is just that it’s unclear to me that high PHQ-9 scores in this context necessarily indicate a history of severe trauma etc.

While StrongMinds runs a programme that explicitly targets refugees, who’re presumably much more likely to be traumatized, this made up less than 8% of their budget in 2019.

However, some studies seem to find very high rates of depression prevalence in Uganda (one non-representative meta-analysis found a prevalence of 30%). If a rate like this did characterise the general population, then I wouldn’t be surprised that the communities they work in (which are typically poorer / rural / many are in Northern Uganda) have very high incoming PHQ scores for reasons genuinely related to high psychological distress.

Whether they are a hairdresser or an entrepreneur living in this context seems like it could be pulling on our weakness to the conjunction fallacy. I.e., it seems less likely that someone has a [insert normal sounding job] and trauma while living in an ex-warzone than what we’d guess if we only knew th at someone was living in an ex-warzone.

Oh that’s interesting RE: refugees! I wonder what SM results are in that group—do you know much about this?

Iirc, the conjunction fallacy iirc is something like:

For the following list of traits / attributes, is it more likely that “Jane Doe is a librarian” or “Jane Doe is a librarian + a feminist”? And it’s illogical to pick the latter because it’s a perfect subset of the former, despite it forming a more coherent story for system 1.

But in this case, using the conjunction fallacy as a defence is like saying “i’m going to recruit from the ‘librarian + feminist’ subset for my study, and this is equivalent to sampling all librarians”, which I think doesn’t make sense to me? Clearly there might be something about being both a librarian + feminist that makes you different to the population of librarians, even if it’s more likely for any given person to be a librarian than a ‘librarian + feminist’ by definition.

I might be totally wrong and misunderstanding this though! But also to be clear, I’m not actually suggesting that just because someone’s a hairdresser or a churchgoer that they can’t have a history of severe trauma. I’m saying when Matt says “The effect sizes observed are very large, but it’s important to place in the context of StrongMinds’ work with severely traumatized populations”, I’m interpreting this to mean that due to the population having a history of severe trauma, we should expect larger effect sizes than other populations with similar PHQ-9 scores. But clearly there are different explanations for high initial PHQ-9 scores that don’t involve severe trauma, so it’s not clear that I should assume there’s a history of severe trauma based on just the PHQ-9 score or the recruitment methodology.

The StrongMinds pre-post data I have access to (2019) indicates that the Refugee programme has pre-post mean difference in PHQ9 of 15.6, higher than the core programme of 13.8, or their peer / volunteer-delivered or youth programmes (13.1 and 12). They also started with the highest baseline PHQ: 18.1 compared to 15.8 in the core programme.

Is there any way we can get more details on this? I recently made a blogpost using Bayesian updates to correct for post-decision surprise in GiveWell’s estimates, which led to a change in the ranking of New Incentives from 2nd to last in terms of cost effectiveness among Top Charities. I’d imagine (though I haven’t read the studies) that the uncertainty in the Strong Minds CEA is / should be much larger.

For that reason, I would have guessed that Strong Minds would not fare well post-Bayesian adjustment, but it’s possible you just used a different (reasonable) prior than I did, or there is some other consideration I’m missing?

Also, even risk neutral evaluators really should be using Bayesian updates (formally or informally) in order to correct for post-decision surprise. (I don’t think you necessarily disagree with me on this, but it’s worth emphasizing that valuing GW-tier levels of confidence doesn’t imply risk aversion.)

“we estimate StrongMinds at roughly 6x GD”—this seems to be about 2⁄3 what HLI estimate the relative impact to be (https://forum.effectivealtruism.org/posts/zCD98wpPt3km8aRGo/happiness-for-the-whole-household-accounting-for-household) - it’s not obvious to me how and why your estimates differ—are you able to say what is the reason for the difference? (Edited to update to a more recent analysis by HLI)

FP’s model doesn’t seem to be public, but CEAs are such an uncertain affair that aligning even to 2⁄3 level is a pretty fair amount of convergence.

Thanks for writing this post!

I feel a little bad linking to a comment I wrote, but the thread is relevant to this post, so I’m sharing in case it’s useful for other readers, though there’s definitely a decent amount of overlap here.

TL; DR

I personally default to being highly skeptical of any mental health intervention that claims to have ~95% success rate + a PHQ-9 reduction of 12 points over 12 weeks, as this is is a clear outlier in treatments for depression. The effectiveness figures from StrongMinds are also based on studies that are non-randomised and poorly controlled. There are other questionable methodology issues, e.g. surrounding adjusting for social desirability bias. The topline figure of $170 per head for cost-effectiveness is also possibly an underestimate, because while ~48% of clients were treated through SM partners in 2021, and Q2 results (pg 2) suggest StrongMinds is on track for ~79% of clients treated through partners in 2022, the expenses and operating costs of partners responsible for these clients were not included in the methodology.

(This mainly came from a cursory review of StrongMinds documents, and not from examining HLI analyses, though I do think “we’re now in a position to confidently recommend StrongMinds as the most effective way we know of to help other people with your money” seems a little overconfident. This is also not a comment on the appropriateness of recommendations by GWWC / FP)

(commenting in personal capacity etc)

Edit:

Links to existing discussion on SM. Much of this ends up touching on discussions around HLI’s methodology / analyses as opposed to the strength of evidence in support of StrongMinds, but including as this is ultimately relevant for the topline conclusion about StrongMinds (inclusion =/= endorsement etc):

StrongMinds should not be a top-rated charity (yet)

Comments (1, 2) about outsider perception of HLI as an advocacy org

Comment about ideal role of an org like HLI, as well as trying to decouple the effectiveness of StrongMinds with whether or not WELLBYs / subjective wellbeing scores are valuable or worth more research on the margin.

Twitter exchange between Berk Özler and Johannes Haushofer, particularly relevant given Özler’s role in an upcoming RCT of StrongMinds in Uganda (though only targeted towards adolescent girls)

Evaluating StrongMinds: how strong is the evidence? and the comment section. In particular:

Thread 1

Thread 2

James Snowden’s analysis of household spillovers

GiveWell’s Assessment of Happier Lives Institute’s Cost-Effectiveness Analysis of StrongMinds

Comments in the post: The Happier Lives Institute is funding constrained and needs you!

Greg claims “study registration reduces expected effect size by a factor of 3”

Topline finding weighted 13% from StrongMinds RCT, where d = 1.72

“this is a very surprising mistake for a diligent and impartial evaluator to make”

Greg commits to: “donat[ing] 5k USD if the [Baird] RCT reports an effect size greater than d = 0.4 − 2x smaller than HLI’s estimate of ~ 0.8, and below the bottom 0.1% of their monte carlo runs.”

Comment thread on discussion being harsh and “epistemic probation”

James and Alex push back on some claims they consider to be misleading.

Learning from our mistakes: how HLI plans to improve

Update on the Baird RCT

I want to second this! Not a mental health expert, but I have depression and so have spent a fair amount of time looking into treatments / talking to doctors / talking to other depressed people / etc.

I would consider a treatment extremely good if it decreased the amount of depression a typical person experienced by (say) 20%. If a third of people moved from the “depression” to “depression-free” category I would be very, very impressed. Ninety-five percent of people moving from “depressed” to “depression free” sets off a lot of red flags for me, and makes me think the program has not successfully measured mental illness.

(To put this in perspective: 95% of people walking away depression-free would make this far effective than any mental health intervention I’m aware of at any price point in any country. Why isn’t anyone using this to make a lot of money among rich American patients?)

I think some adjustment is appropriate to account for the fact that people in the US are generally systematically different from people in (say) Uganda in a huge range of ways which might lead to significant variation in the quality of existing care, or the nature of their problems and their susceptibility to treatment. As a general matter I’m not necessarily surprised if SM can relatively easily achieve results that would be exceptional or impossible among very different demographics.

That said, I don’t think these kinds of considerations explain a 95% cure rate, I agree that sounds extreme and intuitively implausible.

Thank you. I’m a little ashamed to admit it, but in an earlier draft I was much more explicit about my doubts about the effectiveness of SM’s intervention. I got scared because it rested too much on my geneal priors about intervention and I hadn’t finished enough of a review of the literature in which to call BS. (Although I was comfortable doing so privately, which I guess tells you that I haven’t learned from the FTX debacle)

I also noted the SM partners issue, although I couldn’t figure out whether or not it was the case re: costs so I decided to leave it out. I would definitely like to see SM address that concern.

HLI do claim to have seen some private data from SM, so it’s unlikely (but plausible) that HLI do have enough confidence, but everyone else is still in the dark.

I’m a researcher at SoGive conducting an independent evaluation of StrongMinds which will be published soon. I think the factual contents of your post here are correct. However, I suspect that after completing the research, I would be willing to defend the inclusion of StrongMinds on the GGWC list, and that the SoGive write-up will probably have a more optimistic tone than your post. Most of our credence comes from the wider academic literature on psychotherapy, rather than direct evidence from StrongMinds (which we agree suffers from problems, as you have outlined).

Regarding HLI’s analysis, I think it’s a bit confusing to talk about this without going into the details because there are both “estimating the impact” and “reframing how we think about moral-weights” aspects to the research. Ascertaining what the cost and magnitude of therapy’s effects are must be considered separately from the “therapy will score well when you use subjective-well-being as the standard by which therapy and cash transfers and malaria nets are graded” issue. As of now I do roughly think that HLI’s numbers regarding what the costs and effect sizes of therapy are on patients are in the right ballpark. We are borrowing the same basic methodology for our own analysis. You mentioned being confused by the methodology - there are a few points that still confuse me as well, but we’ll soon be publishing a spreadsheet model with a step by step explainer on the aspects of the model that we are borrowing, which may help.

If you ( @Simon_M or anyone else wishing to work at a similar level of analysis) is planning on diving into these topics in depth, I’d love to get in touch on the Forum and exchange notes.

Regarding the level of evidence: SoGive’s analysis framework outlines a “gold standard” for high impact, with “silver” and “bronze” ratings assigned to charities with lower-but-still-impressive cost-effectiveness ratings. However, we also distinguish between “tentative” ratings and “firm” ratings, to acknowledge that some high impact opportunities are based on more speculative estimates which may be revised as more evidence comes in. I don’t want to pre-empt our final conclusions on StrongMinds, but I wouldn’t be surprised if “Silver (rather than Gold)” and/or “Tentative (rather than Firm)” ended up featuring in our final rating. Such a conclusion still would be a positive one, on the basis of which donation and grant recommendations could be made.

There is precedent for effective altruists recommending donations to charities for which the evidence is still more tentative. Consider that Givewell recommends “top charities”, but also recommends less proven potentially cost-effective and scalable programs (formerly incubation grants). Identifying these opportunities allows the community to explore new interventions, and can unlock donations that counterfactually would not have been made, as different donors may make different subjective judgment calls about some interventions, or may be under constraints as to what they can donate to.

Having established that there are different criteria that one might look at in order to determine when an organization should be included in a list, and that more than one set of standards which may be applied, the question arises: What sort of standards does the GWWC top charities list follow, and is StrongMinds really out of place with the others? Speaking the following now personally, informally and not on behalf of any current or former employer: I would actually say that StrongMinds has much more evidence backing than many of the other charities on this list (such as THL, Faunalytics, GFI, WAI, which by their nature don’t easily lend themselves to RCT data). Even if we restrict our scope to the arena of direct (excluding e.g. excluding pandemic research orgs) global health interventions, I wouldn’t be surprised if bright and promising potential stars such as Suvita and LEEP are actually at a somewhat similar stage as StrongMinds—they are generally evidence-based enough to deserve their endorsement on this list, but I’m not sure they’ve been as thoroughly vetted by external evaluators the way more established organizations such as Malaria Consortium might be. Because of all this, I don’t think StrongMinds seems particularly out of place next to the other GWWC recommendations. (Bearing in mind again that I want to speak casually as an individual for this last paragraph, and I am not claiming special knowledge of all the orgs mentioned for the purposes of this statement).

Finally, it’s great to see posts like this on the EA forum, thanks for writing it!

I might be being a bit dim here (I don’t have the time this week to do a good job of this), but I think of all the orgs evaluating StrongMinds, SoGive’s moral weights are most likely to find favourably for StrongMinds. Given that, I wonder what you expect you’d rate them at if you altered your moral weights to be more inline with FP and HLI?

(Source)

This is a ratio of 4:1 for averting a year of severe depression vs doubling someone’s consumption.

For context Founders Pledge who have a ratio somewhere around 1.3:1. Income doubling : DALY is 0.5 : 1. And severe depression corresponds to a DALY weighting of 0.658 in their CEA. (I understand they are shifting to a WELLBY framework like HLI, but I don’t think it will make much difference).

HLI is harder to piece together, but roughly speaking they see doubling income as having 1.3 WELLBY and severe depression has having a 1.3 WELLBY effect. A ratio of 1.3:1 (similar to FP)

Thanks for your question Simon, and it was very eagle-eyed of you to notice the difference in moral weights. Good sleuthing! (and more generally, thank you for provoking a very valuable discussion about StrongMinds)

I run SoGive and oversaw the work (then led by Alex Lawsen) to produce our moral weights. I’d be happy to provide further comment on our moral weights, however that might not be the most helpful thing. Here’s my interpretation of (the essence of) your very reasonable question:

I have a simple answer to this: no, it isn’t.

Let me flesh that out. We have (at least) two sources of information:

Academic literature

Data from StrongMinds (e.g. their own evaluation report on themselves, or their regular reporting)

And we have (at least) two things we might ask about:

(a) How effective is the intervention that StrongMinds does, including the quality of evidence for it?

(b) How effective is the management team at StrongMinds?

I’d say that the main crux is the fact that our assessment of the quality of evidence for the intervention (item (a)) is based mostly on item 1 (the academic literature) and not on item 2 (data from StrongMinds).

This is the driver of the comments made by Ishaan above, not the moral weights.

And just to avoid any misunderstandings, I have not here said that the evidence base from the academic literature is really robust—we haven’t finished our assessment yet. I am saying that (unless our remaining work throws up some surprises) it will warrant a more positive tone than your post, and that it may well demonstrate a strong enough evidence base + good enough cost-effectiveness that it’s in the same ballpark as other charities in the GWWC list.

I don’t understand how that’s possible. If you put 3x the weight on StrongMind’s cost-effectiviness viz-a-vis other charities, changing this must move the needle on cost-effectiveness more than anything else. It’s possible to me it could have been “well into the range of gold-standard” and now it’s “just gold-standard” or “silver-standard”. However if something is silver standard, I can’t see any way in which your cost-effectivness being adjusted down by 1/3rd doesn’t massively shift your rating.

I feel like I’m being misunderstood here. I would be very happy to speak to you (or Ishaan) on the academic literature. I think probably best done in a more private forum so we can tease out our differences on this topic. (I can think of at least one surprise you might not have come across yet).

Ishaan’s work isn’t finished yet, and he has not yet converted his findings into the SoGive framework, or applied the SoGive moral weights to the problem. (Note that we generally try to express our findings in terms of the SoGive framework and other frameworks, such as multiples of cash, so that our results are meaningful to multiple audiences).

Just to reiterate, neither Ishaan nor I have made very strong statements about cost-effectiveness, because our work isn’t finished yet.

That sounds great, I’ll message you directly. Definitely not wishing to misunderstand or misinterpret—thank you for your engagement on this topic :-)

To expand a little on “this seems implausible”: I feel like there is probably a mistake somewhere in the notion that anyone involves thinks that <doubling income as having 1.3 WELLBY and severe depression has having a 1.3 WELLBY effect.>

The mistake might be in your interpretation of HLI’s document (it does look like the 1.3 figure is a small part of some more complicated calculation regarding the economic impacts of AMF and their effect on well being, rather than intended as a headline finding about the cash to well being conversion rate). Or it could be that HLI has an error or has inconsistencies between reports. Or it could be that it’s not valid to apply that 1.3 number to “income doubling” SoGive weights for some reason because it doesn’t actually refer to the WELLBY value of doubling.

I’m not sure exactly where the mistake is, so it’s quite possible that you’re right, or that we are both missing something about how the math behind this works which causes this to work out, but I’m suspicious because it doesn’t really fit together with various other pieces of information that I know. For instance - it doesn’t really square with how HLI reported Psychotherapy is 9x GiveDirectly when the cost of treating one person with therapy is around $80, or how they estimated that it took $1000 worth of cash transfers to produce 0.92 SDs-years of subjective-well-being improvement (“totally curing just one case of severe depression for a year” should correspond to something more like 2-5 SD-years).

I wish I could give you a clearer “ah, here is where i think the mistake is” or perhaps a “oh, you’re right after all” but I too am finding the linked analysis a little hard to follow and am a bit short on time (ironically, because I’m trying to publish a different piece of Strongminds analysis before a deadline). Maybe one of the things we can talk about once we schedule a call is how you calculated this and whether it works? Or maybe HLI will comment and clear things up regarding the 1.3 figure you pulled out and what it really means.

Replied here

Good stuff. I haven’t spent that much time looking at HLIs moral weights work but I think the answer is “Something is wrong with how you’ve constructed weights, HLI is in fact weighing mental health harder than SoGive”. I think a complete answer to this question requires me checking up on your calculations carefully, but I haven’t done so yet, so it’s possible that this is right.

If if were true that HLI found anything on the order of roughly doubling someone’s consumption improved well being as much as averting 1 case of depression, that would be very important as it would mean that SoGive moral weights fail some basic sanity checks. It would imply that we should raise our moral weight on cash-doubling to at least match the cost of therapy even under a purely subjective-well-being oriented framework to weighting. (why not pay 200 to double income, if it’s as good as averting depression and you would pay 200 to avert depression?) This seems implausible.

I haven’t actually been directly researching the comparative moral weights aspect, personally—I’ve been focusing primarily on <what’s the impact of therapy on depression in terms of effect size> rather than on the “what should the moral weights be” question (though I have put some attention to the “how to translate effect sizes into subjective intuitions” question, but that’s not quite the same thing). That said when I have more time I will look more deeply into this and check if our moral weights are failing some sort of sanity check on this order, but, I don’t think that they are.

Regarding the more general question of “where would we stand if we altered our moral weights to be something else”, ask me again in a month or so when all the spreadsheets are finalized, moral weights should be relatively easy to adjust once the analysis is done.

(as sanjay alludes to in the other thread, I do think all this is a somewhat separate discussion from the GWWC list—my main point with the GWWC list was that StrongMinds is not in the big picture actually super out of place with the others, in terms of how evidence-backed it is relative to the others, especially when you consider the big picture of the background academic literature about the intervention rather than their internal data. But I wanted to address the moral weights issue directly as it does seem like an important and separate point.)

I would recommend my post here. My opinion is—yes—SoGive’s moral weights do fail a basic sanity check.

1 year of averted depression is 4 income doublings

1 additional year of life (using GW life-expectancies for over 5s) is 1.95 income doublings.

ie SoGive would thinks depression is worse than death. Maybe this isn’t quite a “sanity check” but I doubt many people have that moral view.

I think cost-effectiveness is very important for this. StrongMinds isn’t so obviously great that we don’t need to consider the cost.

Yes, this is a great point which I think Jeff has addressed rather nicely in his new post. When I posted this it wasn’t supposed to be a critique of GWWC (I didn’t realise how bad the situation there was at the time) as much as a critique of StrongMinds. Now I see quite how bad it is, I’m honestly at a loss for words.

I replied in the moral weights post w.r.t. “worse than death” thing. (I think that’s a fundamentally fair, but fundamentally different point from what I meant re: sanity checks w.r.t not crossing hard lower bounds w.r.t. the empirical effects of cash on well being vs the empirical effect of mental health interventions on well being)

This is a great, balanced post which I appreciate thanks. Especially the point that there is a decent amount of RCT data for strongminds compared to other charities on the list.

Edit 03-01-23: I have now replied more elaborately here

Hi Simon, thanks for this post! I’m research director at GWWC, and we really appreciate people engaging with our work like this and scrutinising it.

I’m on holiday currently and won’t be able to reply much more in the coming few days, but will check this page again next Tuesday at the latest to see if there’s anything more I/the GWWC team need to get back on.

For now, I’ll just very quickly address your two key claims that GWWC shouldn’t have recommended StrongMinds as a top-rated charity and that we should remove it now, both of which I disagree with.

Our process and criteria for making charity recommendations are outlined here. Crucially, note that we generally don’t do (and don’t have capacity to do) individual charity research: we almost entirely rely on our trusted evaluators—including Founders Pledge—for our recommendations. As a research team, we plan to specialize in providing guidance on which evaluators to rely rather than in doing individual charity evaluation research.

In the case of StrongMinds, they are a top-rated charity primarily because Founders Pledge recommended them to us, as you highlight. There were no reasons for us to doubt the quality of FP’s research supporting this recommendation at the time, and though you make a few good points on StrongMinds that showcase some of the uncertainties/caveats/counterarguments involved in this recommendation, I think Matt Lerner adequately addresses these in his reply above, so I don’t see reason for us to consider diverging from FP’s recommendation now either.

I do have a takeaway from your post on our communication. On our website we currently state

“StrongMinds meets our criteria to be a top-rated charity because one of our trusted evaluators, Founders Pledge, has conducted an extensive evaluation highlighting its cost-effectiveness as part of its report on mental health.”

We then also highlight HLI’s recent report as evidence supporting this recommendation. However, I think we should have been/be clearer on the fact that it’s not only FP’s 2019 report that supports this recommendation, but their ongoing research, some of which isn’t public yet (even though the report still represents their overall view, as Matt Lerner mentions above). I’ll make sure to edit this.

Thanks again for your post, and I look forward to engaging more next week at the latest if there are any other comments/questions you or anyone else have.

I just want to add my support for GWWC here. I strongly support the way they have made decisions on what to list to date:

As a GWWC member who often donates through the GWWC platform I think it is great that they take a very broad brush and have lots of charities that people might see as top on the platform. I think if their list got to small they would not be able to usefully serve the GWWC donor community (or other donors) as well.

I would note that (contrary to what some of the comments suggest) that GWWC recommend giving to Funds and do recommend giving to these charities (so they do not explicitly recommend Strong Minds). In this light I see the listing of these charities not as recommendations but as convenience for donors who are going to be giving there.

I find GWWC very transparent. Simon says ideally “GWWC would clarify what their threshold is for Top Charity”. On that specific point I don’t see how GWWC could be any more clear. Every page explains that a top charity is one that has been listed as top by an evaluator GWWC trust. Although I do agree with Simon more description of how GWWC choose certain evaluators could be helpful.

That said I would love it if going forwards GWWC could find the time. To evaluate the evaluators and the Funds and their recommendations (for example I have some concerns about the LTFF and know others do too, I know there have been concerns about ACE in the past etc).

I would not want GWWC to unlist Strong Minds from their website but I could imagine them adding a section on the Strong Minds page saying “The GWWC team views” that says: “this is listed as it is a FP top but our personal views are that …. meaning this might or might not be a good place to give especially if you care about … etc”

(Conflict of interest note: I don’t work at GWWC or FP but I do work at a FP recommended charity and at a charity who’s recommendations make it into the GWWC criteria so I might be bias).

I agree, and I’m not advocating removing StrongMinds from the platform, just removing the label “Top-rated”. Some examples of charities on the platform which are not top-rated include: GiveDirectly, SCI, Deworm the World, Happier Lives Institute, Fish Welfare Initiative, Rethink Priorities, Clean Air Task Force...

I’m afraid to say I believe you are mistaken here, as I explained in my other comment. The recommendations section clearly includes top-charities recommended by trusted evaluators and explicitly includes StrongMinds. There is also a two-tier labelling of “Top-rated” and not-top-rated and StrongMinds is included in the former. Both of these are explicit recommendations afaic.

I’m not really complaining about transparency as much as I would like the threshold to include requirements for trusted evaluators to have public reasoning and a time-threshold on their recommendations.

Again, to repeat myself. Me either! I just want them to remove the “Top-rated” label.

Ah. Good point. Replied to the other thread here: https://forum.effectivealtruism.org/posts/ffmbLCzJctLac3rDu/strongminds-should-not-be-a-top-rated-charity-yet?commentId=TMbymn5Cyqdpv5diQ .

Recognizing GWWC’s limited bandwidth for individual charity research, what would you think of the following policy: When GWWC learns of a charity recommendation from a trusted recommender, it will post a thread on this forum and invite comments about whether the candidate is in the same ballpark as the median top-rated organization in that cause area (as defined by GWWC, so “Improving Human Well-Being”). Although GWWC will still show significant deference to its trusted evaluators in deciding how to list organizations, it will include one sentence on the organization’s description linking to the forum notice-and-comment discussion. It will post a new thread on each listed organization at 2-3 year intervals, or when there is reason to believe that new information may materially affect the charity’s evaluation.

Given GWWC’s role and the length of its writeups, I don’t think it is necessary for GWWC to directly state reasons why a donor might reasonably choose not to donate to the charity in question. However, there does need to be an accessible way for potential donors to discover if those reasons might exist. While I don’t disagree with using FP as a trusted evaluator, its mission is not primarily directed toward producing public materials written with GWWC-type donors in mind. Its materials do not meet the bar I suggested in another comment for advisory organizations to GWWC-type donors: “After engaging with the recommender’s donor-facing materials about the recommended charity for 7-10 minutes, most potential donors should have a solid understanding of the quality of evidence and degree of uncertainty behind the recommendation; this will often include at least a brief mention of any major technical issues that might significantly alter the decision of a significant number of donors.” That is not a criticism of FP because it’s not trying to make recommendations to GWWC-type donors.

So giving the community an opportunity to state concerns/reservations (if any) and link to the community discussion seems potentially valuable as a way to meet this need without consuming much in the way of limited GWWC research resources.

Thanks for the suggestion Jason, though I hope the longer comment I just posted will clarify why I think this wouldn’t be worth doing.

edited (see bottom)

I’d like to flag that I think it’s bad that my friend (yes I’m biased) has done a lot of work to criticise something (and I haven’t read pushback against that work) but won’t affect the outcome because of work that he and we cannot see.

Is there a way that we can do a little better than this?

Some thoughts:

Could he be allowed to sign an NDA to read Founder’s pledge’s work?

Would you be interested in forecasts that Stronger Minds wont be a GWWC top charity by say 2025?

Could I add this criticism and a summary of your response to Stronger Minds EA wiki page so that others can see this criticism and it doesn’t get lost?

Can anyone come up with other suggestions?

edits:

Changed “disregarded” the sentence with “won’t affect the outcome”

Tbh I think this is a bit unfair: his criticism isn’t being disregarded at all. He received a substantial reply from FP’s research director Matt Lerner—even while he’s on holiday—within a day, and Matt seems very happy to discuss this further when he’s back to work.

I should also add that almost all of the relevant work is in fact public, incl. the 2019 report and HLI’s analysis this year. I don’t think what FP has internally is crucial to interpreting Matt’s responses.

I do like the forecasting idea though :).

I am sure there is a better word than “disregarded”. Apologies for being grumpy, have edited.

This seems like legitimate criticism. Matt says so. But currently, it feels like nothing might happen as a result. You have secret info, end of discussion. This is a common problem within charity evaluation, I think—someone makes some criticism, someone disagrees and so it gets lost to the sands of time.

I guess my question is, how can this work better? How can this criticism be stored and how can your response of “we have secret info, trust us” be a bit more costly for you now (with appropriate rewards later).

If you are interested in forecasting, would you prefer a metaculus or manifold market?

Eg if you like manifold, you can bet here (there is a lot of liquidity and the market currently heavily thinks GWWC will revoke its recommendation. If you disagree you can win money that can be donated to GWWC and status. This is one way to tax and reward you for your secret info)

Is this form of the market the correct wording? If so I’ll write a metaculus version.