Concerns with Intentional Insights

A recent Facebook post by Jeff Kaufman raised concerns about the behavior of Intentional Insights (InIn), an EA-aligned organization headed by Gleb Tsipursky. In discussion arising from this, a number of further concerns were raised.

This post summarizes the concerns found with InIn. It also notes some concerns which were mistaken and unfounded, and facts that arose which reflect well on InIn.

This post was contributed to by Jeff Kaufman, Gregory Lewis, Oliver Habryka, Carl Shulman, and Claire Zabel. They disclose relevant conflicts of interest below.

Outline

| 1 | Exaggerated claims of affiliation or endorsement |

| 1.1 | Kerry Vaughan of CEA |

| 1.2 | Giving What We Can (GWWC) |

| 1.3 | Animal Charity Evaluators (ACE) |

| 2 | Astroturfing |

| 2.1 | The Intentional Insights blog |

| 2.2 | The Effective Altruism forum |

| 2.3 | LessWrong |

| 2.4 | Facebook |

| 2.4.1 | Soliciting upvotes and denying it |

| 2.4.2 | Not disclosing paid support |

| 2.5 | Amazon |

| 3 | Misleading figures |

| 4 | Dubious practices |

| 4.1.1 | Paid contractors’ expected ‘volunteering’ |

| 4.1.2 | Further details regarding contractor ‘volunteering’ |

| 4.2 | Amazon bestseller |

| 5 | Inflated social media impact |

| 5.1 | Facebook |

| 5.2 | The Life You Can Save donations |

| 5.3 | Twitter |

| 5.4 | Pinterest |

| 5.5 | Presentations of media article traffic and reach |

| 5.5.1 | TIME article |

| 5.5.2 | Huffington Post |

| 6 | Mistaken/Unfair accusations |

| 6.1 | Supposed linearity of Twitter follower increase |

| 6.2 | Objections to Intentional Insights staff ‘liking’ Intentional Insights content |

| 6.3 | ‘Paid likes’ from clickfarms |

| 7 | Positives |

| 7.1 | Jon Behar |

| 7.2 | Additional donations |

| 7.3 | Placement of articles in TIME and the Huffington Post |

| 8 | Policy responses from InIn |

| 8.1 | Post-criticism conflict-of-interest policy |

| 8.2 | Post-criticism Facebook boosting |

| 9 | Disclosures |

| 10 | Response comments from Gleb Tsipursky |

1. Exaggerated claims of affiliation or endorsement

Intentional Insights claims ‘active collaborations’ with a number of Effective Altruist groups in its Theory of Change document which was on its “About” page (August 21, 2016).

In a number of cases InIn makes use of the name of an effective altruist organization without asking for that organization’s consent, based on minor interactions such as the organization answering questions about web traffic. From the ‘Effective Altruism impact of Intentional Insights’ document (August 19, 2016):

As detailed below, we observe that after learning of such claims and use of their names, some of these groups had asked InIn to stop. Yet even in some of these cases InIn had not altered the mentions in its promotional materials months later. Tsipursky also does not appear to have adopted a practice of checking with organizations before using their names in InIn promotional materials.

1.1. Kerry Vaughan of CEA

Tsipursky previously posted notes from a Skype conversation with Kerry Vaughan without his consent, and suggested he had endorsed Intentional Insights where he had not:

Tsipursky later apologized, edited the post, and said he had updated. Yet he later engaged in similar behavior (see sections 1.2 and 1.3 below).

1.2. Giving What We Can (GWWC)

Gleb has taken the Giving What We Can pledge, and contributed an article on the Giving What We Can blog on December 23, 2015. He also mentioned and linked to GWWC in his articles elsewhere.

Michelle Hutchinson, Executive Director of Giving What We Can, wrote to Tsipursky in May 2016 asking him to cease “claiming to be supported by Giving What We Can.” However, the use of Giving What We Can’s name as an ‘active collaboration’ was not removed from Intentional Insights’ website, and remained in both of the above InIn documents as of October 15, 2016.

1.3. Animal Charity Evaluators (ACE)

In the InIn impact document Tsipursky quotes Leah Edgerton of ACE:

Erika Alonso of ACE subsequently made the following statement:

2. Astroturfing

Astroturfing is giving the misleading impression of unaffiliated (“grassroots”) support. In GiveWell’s first year its co-founders engaged in astroturfing, and this was taken very seriously by its board. Among other responses, the GiveWell board demoted one of the co-founders and fined each of them $5,000. Tsipursky expressly claimed not to engage in astroturfing:

However, astroturfing is widespread across the Intentional Insights social media presence (documented in the sections below). Tsipursky did qualify his statement with “we are not asking people to do these sorts of activities in their paid time”, but lack of payment isn’t enough to prevent misleading people about the nature of the support. In any case, the distinction between contractors’ paid and unpaid time is blurry (see section 4.1.1).

2.1. The Intentional Insights blog

Paid contractors for Intentional Insights leave complimentary remarks on the Intentional Insights blog, and the Intentional Insights account replies with gratitude, as if the comments were by strangers. At no stage do they disclose the financial relationship that exists between them. In the screenshot below (source), Candice, John, Beatrice, Jojo, and Shyam are all Intentional Insights contractors.

The most recent examples of this happened in late August 2016, after the initial post and discussion with Tsipursky on Jeff’s Facebook wall, and during the drafting of this document.

2.2. The Effective Altruism forum

Tsipursky has done the same thing on the Effective Altruism forum. Here is one instance (note that “Nyor” also goes by “Jojo”):

Here is another example (note that “Anthonyemuobo” is a professional handle used by one of Tsipursky’s acknowledged contractors, “Sargin”):

2.3. LessWrong

Tsipursky posted a link to some writing by his wife, who is also InIn’s co-founder, in February 2016, without noting this connection:

This is a minor lapse, one which Gleb claimed to have learned from and updated. Yet similar behavior continued:

In March 2016, Intentional Insights’ contractors created accounts and started posting non-specific praise on Tsipursky’s LessWrong posts:

These are all people Tsipursky pays, but none of them acknowledged it in their comments or their posts in the welcome thread. Additionally, Tsipursky did not acknowledge this relationship when he thanked them for their remarks.

LessWrong user gjm pointed out that this was misleading, and Tsipursky acknowledged this was a problem and commented on Sargin’s welcome post:

While Tsipursky knew both Beatrice Sargin and Alex Wenceslao had posted similar comments, since he had replied to them, he waited for these to be discovered and pointed out before acting:

This happened a third time, with JohnC2015:

2.4. Facebook

2.4.1. Soliciting upvotes and denying it

Tsipursky claimed “when I make a post on the EA Forum and LW I will let people who are involved with InIn know about it, for their consideration, and explicitly don’t ask them to upvote”:

In the comment Tsipursky denies soliciting upvotes, and demands that accusations that he did be substantiated or withdrawn. Six hours later someone responded with a screenshot of a post Tsipursky had made to the Intentional Insights Insiders group showing Tsipursky soliciting upvotes:

Tsipursky’s response, a couple hours later in the same thread:

Tsipursky either genuinely believed posts like the above do not ask for upvotes, or he believed statements that are misleading on common-sense interpretation are acceptable providing they are arguably ‘true’ on some tendentious reading. Neither is reassuring. [He subsequently conceded this was ‘less than fully forthcoming’.]

2.4.2. Not disclosing paid support

Intentional Insights proposed producing EA T-Shirts, and received multiple criticisms. Tsipursky claimed he had run the design by multiple people. Again, Tsipursky did not disclose that at least five of them were people he pays:

2.5. Amazon

Tsipursky’s contractor posted a 5-star review for his self-help book on Amazon without disclosing the affiliation:

Tsipursky emailed copies of his self-help book to Intentional Insights volunteers, including contractors, who responded by posting 5-star reviews on Amazon:

He later followed up with:

This is true but incomplete: the 8th review is by Asraful Islam, a volunteer affiliated to Intentional Insights.

Another Intentional Insights affiliate, unpaid at that time but now a paid virtual assistant, Elle Acquino, posted another 5-star review, not in the top 10. In that review, however, the connection to Tsipursky and his nonprofit institute was disclosed.

3. Misleading figures

In December 2015 and January 2016, Tsipursky repeatedly claimed that his articles were shared thousands of times as evidence of the effectiveness of his approach. In fact, he had been reporting Facebook ‘likes’ and all views on StumbleUpon as shares, greatly exaggerating the extent of social media engagement.

The initial point reflected a common issue with the interpretation of social media activity counters on websites. After this was explained to him Tsipursky claimed to have updated on the correction. However, a June 2016 document on Intentional Insights’ Effective Altruism Impact again reported views as shares, exaggerating sharing by many times.

4. Dubious practices

4.1.1. Paid contractors’ expected ‘volunteering’

Tsipursky only takes on contractors who spend at least two hours “volunteering” for Intentional Insights for each paid hour:

In a follow-up discussion, Tsipursky suggested that contractors could temporarily reduce their volunteer hours in special circumstances, but he would not affirm that contractors would be allowed to simply say no to “volunteering”:

Depending on the nature of the volunteer work, this requirement seems potentially unethical, effectively requiring that contractors do three times as much work for a fixed amount of money. We also suggest this relationship undermines the distinction Tsipursky offers between ‘paid’ and ‘volunteer time’ and the defence that the promotion his contractors undertake on his behalf is innocuous as it occurs in their ‘volunteer time’.

4.1.2. Further details regarding contractor ‘volunteering’

Subsequent to the preparation of the above section Tsipursky provided additional information about how he came into contact with contractors, their donations, prior unpaid volunteering, wages, and other information as evidence of genuine support. This information does provide such evidence, but it also supports concerns regarding the linkage of paid and unpaid work and financial interests.

Tsipursky states the following regarding initial meetings and hiring:

Tsipursky stated the following regarding the length of unpaid volunteering prior to the first paid work:

He also notes donations by contractors, implemented by reducing their paid hours or paid hour wage rate, as evidence of genuine support:

I have pointed out many times that there is plenty of evidence showing that those folks who do contracting are passionate enthusiasts for InIn. Let’s take the example of John Chavez, who the document brought up. He chose to respond to a fundraising email to our supporter listserve in June 2016 – long before Jeff Kaufman’s original post – by donating $50 per month to InIn out of his $300 monthly salary:

This is bigger than a typical GWWC member, at over 15% of his annual income. Let me repeat – he voluntarily, out of his own volition in response to a fundraising that went out to all of our supporters, chose to make this donation. Just to be clear, we send out fundraising letters regularly, so it’s not like this was some special occasion. It was just that – as he said in the letter – it happened to fall on the 1-year anniversary of him joining InIn and he felt inspired and moved by the mission and work of the organization to give.

Before you go saying John is unique, here is another screenshot of a donation from another contractor who in October 2015, in response to a fundraising email, made a $10/month donation:

Again, voluntarily, out of her own volition, she chose to make this donation.

Tsipursky also indicates that paid and unpaid hours by contractors constitute only a minority of work hours at InIn, with most hours contributed by volunteers without financial compensation:

Regarding wages and requirement/expectations of unpaid volunteering, Tsipursky wrote the following:

The Upwork (formerly known as Odesk) freelancer marketplace on which contractors are hired has a minimum wage of $3.00 per hour. Combined with the expected unpaid volunteering the typical wage would be $1.00, 1/3rd of the minimum for the platform.

John is given as an example of a higher paid contractor at $7.50 per hour. However, this is combined with 3 hours of unpaid volunteering for each paid hour, rather than 2, for a combined wage of $1.875 per hour, prior to his donation of roughly 1⁄6 of that wage ($50 out of his $300 monthly salary).

In effect, the expectation of volunteering systematically circumvents the Upwork minimum wage for contractors. However, it should be noted that the Upwork minimum wage is a corporate policy, and not a national or local labor law. Contractors in low-income countries may be earning substantially more than the local minimum wages or average incomes. For example, according to Wikipedia the hourly minimum wage in US dollars at nominal exchange rates is $0.54 in Nigeria. In the Philippines minimum wages vary by location and sector, but Wikipedia lists a range of ~$0.6-$1.2 per hour for non-agricultural workers, with the latter group in the capital of Manila. So the wage per combined (paid+volunteer) hour of work would not appear to be in conflict with legal minimum wages in contractors’ jurisdictions. Furthermore in a number of these jurisdictions the minimum wage is closer to the median wage, and unemployment is high.
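The combined-wage figures in this section follow from dividing the paid rate by the total hours worked per paid hour. A minimal sketch of that arithmetic, using only the rates quoted above; the function and variable names are illustrative and are not taken from any InIn or Upwork material:

```python
def effective_hourly_wage(paid_rate, unpaid_hours_per_paid_hour):
    """Pay per hour actually worked, counting expected unpaid 'volunteer' hours."""
    return paid_rate / (1 + unpaid_hours_per_paid_hour)


# Figures quoted in the text (USD per hour).
UPWORK_MINIMUM = 3.00

# Typical contractor: platform minimum, 2 unpaid hours per paid hour.
typical = effective_hourly_wage(UPWORK_MINIMUM, 2)

# Higher paid example: $7.50 per hour, 3 unpaid hours per paid hour.
john = effective_hourly_wage(7.50, 3)

print(f"typical combined wage: ${typical:.3f} per hour")
print(f"higher paid example:   ${john:.3f} per hour")
print(f"typical wage as a share of the Upwork minimum: {typical / UPWORK_MINIMUM:.2f}")
```

The calculation treats every expected volunteer hour as work time, which is the assumption the concern above rests on; if contractors in practice volunteer fewer hours, the combined wage is correspondingly higher.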

Regarding the link between paid and unpaid hours, Tsipursky describes it as an informal understanding:

In aggregate the additional statements provide evidence of pre-existing support for InIn from new contractors. However, they also confirm a linkage of paid and unpaid labor, and contractor financial interests in promotional activity occurring during ‘volunteer’ hours.

4.2. “Best-selling author”

Tsipursky includes being a ‘best-selling author’ in his standard bio. For example, on his Patreon:

And:

And on his Amazon author page:

Normally, a reader would take “best-selling author” to mean hitting a major best-seller list like the New York Times, which indicates that very many people have decided to buy the book, and is a hard signal to fake. In Tsipursky’s case, “best-selling author” means that his book was very briefly the top seller in a sub-sub-category of Amazon. Further, he reports offering his book for free and encouraging friends and contractors to download and review it. In its first two weeks the book sold 50 copies at $3 each. Cumulatively it has sold 500 copies at $3 each, and been downloaded 3500+ times for free. In contrast, NYT bestseller status requires thousands of sales over the first week. Amazon bestseller status is calculated hourly by category: in small categories three purchases in an hour can win the #1 bestselling author label.

Many of those giving the book 5 star reviews are social contacts of Tsipursky, some of them paid or volunteer Intentional Insights staff, but do not disclose this association (see section 2.5).

As of August 22, 2016 the book is ranked as follows:

In light of this, calling oneself a ‘bestselling author’ on this sort of performance is potentially misleading.

We note that the practice of claiming bestselling author status using bestseller lists that involve very small actual sales may be widespread. This does not, however, prevent it from being misleading or controversial. For example, when Brent Underwood attained Amazon best-seller status in less than an hour, spending only a few dollars, with a book that was simply a picture of his foot, media coverage generally suggested that this highlighted a problematic practice.

5. Inflated social media impact

5.1. Facebook

Tsipursky has cited social media engagement as evidence of impact. However, in many cases it appears that this engagement is illusory. In the case of Facebook, it appears to have resulted from paid Facebook post boosting, which led to hundreds of likes on posts from clickfarms, in a process described by Veritasium: clickfarm accounts like enormous numbers of things they have not been directly paid to like in order to manipulate Facebook’s algorithms. Facebook boosting systematically attracts these clickfarm accounts, a risk which is exacerbated by boosting to regions where clickfarms are located (although clickfarms also have fake accounts purporting to be from all around the world).

In the case of InIn posts, InIn paid for that boosting. In February 2016, Tsipursky argued that this was resulting in genuine engagement and reach:

For a number of InIn blog posts with large numbers of likes (for example 318 for this recent one) these likes appear to be primarily the result of clickfarms. Accounts liking this post like vast numbers of disparate things. Here are some random selections from the middle of the list of that post:

There is further circumstantial evidence: the likes are often from accounts in low-income countries with substantial clickfarm operations. Tsipursky defended this as coincidental overlap caused by Intentional Insights’ targeting of low-income countries; however, countries with similar demographics but without large clickfarm operations are not well represented.

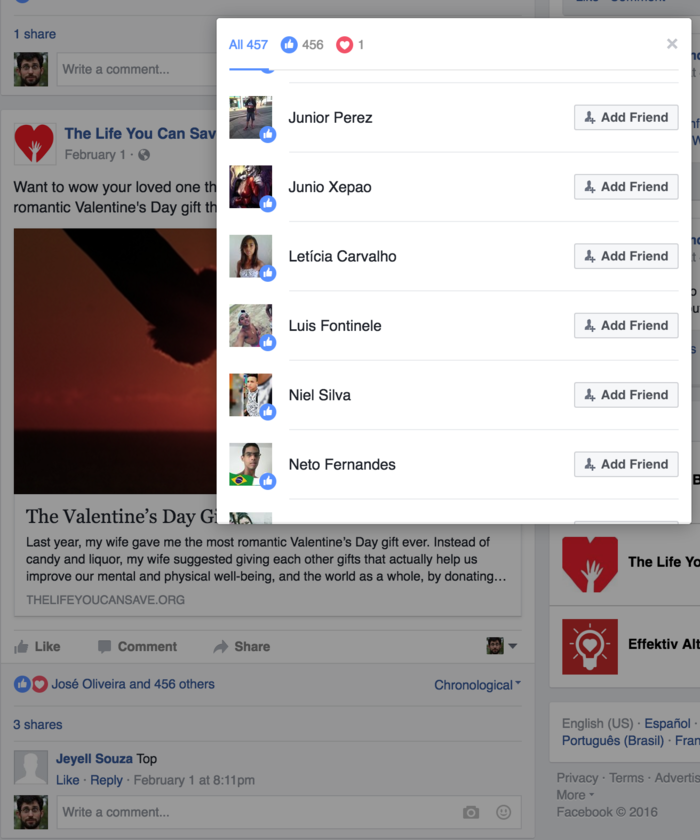

In arguing for the impact of his writing, Tsipursky cited a post on the TLYCS blog that got 500 likes in its first day, while typical posts got 100-200 likes:

However, this appears to also be a case of Facebook ad boosting eliciting engagement from clickfarms, this time by a former TLYCS employee (subsequently asked to stop by TLYCS) rather than InIn, according to this statement from TLYCS’ Jon Behar:

The profiles contributing the likes show no other engagement with TLYCS, or with EA ideas:

After Jeff Kaufman raised concerns about the pattern of Facebook likes in February 2016, Tsipursky doesn’t seem to have looked into the issue further prior to the August 2016 discussion, when outside observers provided indisputable evidence and explained the role of boosting in generating clickfarm likes. While the boosting-clickfarm link is counterintuitive, the lack of any other engagement by the clickfarmers was apparent both before and after the raising of concerns in February. Failure to examine the ineffectiveness of these social media channels, even after concerns were raised, raises questions about InIn’s practices as an outreach and content marketing organization.

5.2. The Life You Can Save donations

In his “Effective Altruism impact of Intentional Insights” document (archived copy), Tsipursky claims that content he has published with The Life You Can Save is able to “regularly reach an audience of over 5,000, at least 12% of whom make a donation”, suggesting over 600 donations per article, based on a reference letter from a former TLYCS employee. However, these figures were incorrect, and TLYCS estimates that the total number of visitors who landed on Tsipursky’s blog posts at the TLYCS blog was ~3,000 (rather than tens of thousands), with donations directly from those pages likely totalling 2-3 (rather than hundreds).

While the reference letter Tsipursky cites could easily give that false impression, it is implausible in light of other information available to him about the impact of his pieces. For example, Tsipursky also cites an article in a major news outlet as producing two donations to GiveDirectly totalling $500:

Since two donations are far fewer than ~600, this “12% of 5,000 views” number was clearly not sanity checked before being used to argue the case for Intentional Insights to EAs and in a fundraising document aimed at EAs. It’s possible that Tsipursky simply took a surprisingly good estimate from a partner organization at face value, but one might expect an expert in marketing to investigate why this channel was performing so much better than his other channels.
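The sanity check described here takes only a few lines. The sketch below uses just the numbers quoted in this section; the variable names are illustrative, and the comparison with the news-outlet article is the same rough one made in the text, not a measurement of like-for-like channels:

```python
from fractions import Fraction

# Claim from the InIn impact document.
claimed_reach = 5_000                      # "an audience of over 5,000"
claimed_donor_share = Fraction(12, 100)    # "at least 12% of whom make a donation"

# Donations per article implied by the claim.
implied_donations = claimed_reach * claimed_donor_share

# Donations Tsipursky himself cites for an article in a major news outlet.
observed_donations = 2

ratio = implied_donations / observed_donations

print(f"implied donations per article: {implied_donations}")
print(f"observed donations for the news article: {observed_donations}")
print(f"implied figure is {ratio} times the observed one")
```

A gap of this size between one channel and another is the kind of discrepancy that would normally prompt a second look at the source of the larger figure.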

5.3. Twitter

Tsipursky implied that his 10k Twitter followers represent organic interest:

The InIn account is following approximately as many accounts as follow it, 11.7k to 11.4k. Oliver observed that many of these accounts have “100% follow-back” in their descriptions. It seems like they’re offering an exchange: InIn adds these accounts as followers, and they add his back in return, or vice versa. This is not an indication of actual interest from fans, and these accounts have almost no organic engagement with InIn such as retweets:

5.4. Pinterest

InIn follows over 20,000 people on Pinterest, far more people than follow it. As on the InIn Facebook page and Twitter, follower engagement is extremely low, and dominated by persons affiliated with InIn, suggesting the vast majority of followers are not genuine.

Examining the profiles of followers, there appears to be a very high rate of clickfarm/advertising accounts. Here are 10 randomly selected InIn Pinterest follower accounts. 10 out of 10 appear to be spam/advertising/clickfarm accounts:

5.5. Presentations of media article traffic and reach

5.5.1. TIME article

In the InIn EA impact document we see this:

The document does not make clear that the article did not appear in the print magazine, so print readers would not be exposed to it there. Online, we are left to anchor on a figure of 65 million views, without any reference to the actual views of the article (which were tremendously lower).

Somewhat later in the document we see this:

As another example, here are numbers in a spreadsheet we set up recently to track clicks to EA nonprofit websites from the Time piece we published.

However, while the article made the case for GiveWell recommended charities and EA charity evaluators, only 132 clicks reached those organizations through the article, 70 of which did not immediately bounce, according to InIn’s traffic figures. Specifically, in the original InIn spreadsheet the ‘signed up to newsletter or converted in other ways’ column had a value of 13 for ACE, and 1 for ‘clicked on donate button’.

The corrected spreadsheet shows a value of 2 rather than 13 for ‘signed up to newsletter.’

5.5.2. Huffington Post

The InIn EA impact document also included this discussion of a Huffington Post article:

However, he provided no evidence of reaching new audiences via the placement in the Huffington Post. Instead, he provided an example of an already supportive Facebook friend, who apparently encountered the article from Tsipursky’s Facebook page, not the Huffington Post.

6. Mistaken/Unfair accusations

6.1. Supposed linearity of Twitter follower increase

It was suggested that Tsipursky’s Twitter page shows surprisingly linear increases in followers over time (e.g. +8 followers a day for 10 days in a row), which may be indicative of click-farming. This piece of evidence is likely mistaken, as the tool used (sharecounter) probably linearly interpolates over days on which it does not record a user’s Twitter followers, and thus the apparent linearity is an artifact.

6.2. Objections to Intentional Insights staff ‘liking’ Intentional Insights content

In the course of the original discussion of Jeff’s post on Facebook, numerous people took exception to staff or volunteers ‘liking’ or supporting InIn content. This criticism is misguided. Doing so is common practice both for nonprofits generally and within the EA community: many EAs affiliated to a given group ‘like’ or share content without disclosing their affiliation. Although issues around appropriate disclosure can be subtle, acts like this on social media do not, on reflection, seem to the authors of this document significant enough to warrant disclosure of interests.

6.3. ‘Paid likes’ from clickfarms

In the February 2016 discussion it was suggested that Tsipursky might be directly paying for likes from clickfarms. However, as discussed in section 5.1, while the likes in question appear to have resulted from paid Facebook boosting, and to be from clickfarms, they were not directly paid for. Instead, the boosting attracted clickfarm likes through an accidental process explained well in the linked Veritasium video.

7. Positives

In the course of research into and discussion around InIn, some facts that reflect well on InIn were discovered. These are listed below. We don’t think this comprises all evidence favourable to InIn: the impact document, Tsipursky’s post on the EA forum, and the Intentional Insights website offer further evidence. (We have not looked at these closely enough to have a view on them.)

7.1. Jon Behar

One TLYCS employee who has worked with Tsipursky on Giving Games says Tsipursky has made helpful introductions:

Behar is also quoted in the InIn EA impact doc as saying:

7.2. Additional donations

TLYCS has information indicating that Tsipursky’s posts combined drove about two or three donations, and the Huffington Post article resulted in two donations to GiveDirectly totaling $500. Tracking donations is hard, so this is definitely an underestimate.

7.3. Placement of articles in TIME and the Huffington Post

Tsipursky’s articles in TIME and the Huffington Post got lots of exposure for EA ideas. Additionally, being able to get articles placed there is impressive.

8. Policy responses from InIn

During discussions with Tsipursky regarding drafts of this document he mentioned some InIn policy changes made in response to the criticisms. This section does not reflect any other changes InIn may have made, primarily because we haven’t been able to put in the time to follow up on each practice and see whether it has continued. We also note that Tsipursky provided additional information regarding Amazon sales, contractor names, and payment practices upon request for this document.

8.1. Post-criticism conflict-of-interest policy

Following the discussion under Jeff Kaufman’s post in August 2016, InIn created a conflicts of interest policy document:

8.2. Post-criticism Facebook boosting

Tsipursky now states:

Regarding InIn social media policy, we are making sure to avoid boosting any more posts to clickfarm countries. We’re generally not boosting posts right now to anyone but fans of the page who live in the US and other rich countries. We found we couldn’t ban identifiable clickfarm accounts from the FB page, unfortunately, so we’re being really cautious about boosting posts.

9. Disclosures

Many people contributed to this document, some of them anonymously. Below are disclosures from people who contributed substantially and want to be clear about any potential conflicts of interest. None of the individuals below contributed on behalf of an employer or organization, and their contributions should not be taken to imply any stance on the part of any organization with which they are affiliated.

- Jeff Kaufman has donated to the Centre for Effective Altruism (CEA), 80,000 Hours, and Giving What We Can. He has volunteered for Animal Charity Evaluators in a very minor capacity. His wife, Julia Wise, works for CEA and serves on the board of GiveWell.

- Gregory Lewis has previously worked as a volunteer for Giving What We Can and 80,000 Hours. He has donated to Giving What We Can and the Global Priorities Project.

- Oliver Habryka currently works for CEA, and has been active in EA community organizing in a variety of roles.

- Carl Shulman currently works for the Future of Humanity Institute, and consults for the Open Philanthropy Project. He previously worked for the Machine Intelligence Research Institute (MIRI). He has previously done some consulting and volunteering for the Centre for Effective Altruism, especially 80,000 Hours. His wife is executive director of the Center for Applied Rationality and a board member of MIRI.

- Claire Zabel works at the Open Philanthropy Project, and serves on the board of Animal Charity Evaluators. She has donated to a variety of EA organizations and has close ties with other people in the EA community.

10. Response comments from Gleb Tsipursky

Tsipursky has responded in the comments below: part one, part two, part three.

My fellow contributors and I aimed in this document to have as little of an ‘editorial line’ as possible: we were not all in complete agreement on what this should be, so thought it better to discuss the appropriate interpretation of the data we provide in the comments. I offer mine below: in addition to the disclaimers and disclosures above, I stress I am speaking for myself, and not on behalf of any other contributor.

I believe InIn and Tsipursky are toxic to the EA community. I strongly recommend that EAs do not spend time or money on InIn going forward, nor any future projects Tsipursky may initiate. Insofar as there may be ways for EA organisations to insulate themselves from InIn, I urge them to avail themselves of these opportunities.

A key factor in this extremely adverse judgement is my extremely adverse view of InIn’s product. InIn’s material is woeful: a mess of misguided messaging (superdonor, the t-shirts, ‘effective giving’ versus ‘effective altruism’, etc. etc.), crowbarred in aspirational pop-psychology ‘insights’, tacky design and graphics, and oleaginous self-promotion seeping through wherever it can (see, for example, the free sample of Gleb’s erstwhile ‘amazon bestseller’). Although mercifully little of InIn’s content has anything to do with EA, whatever does reflects poorly on it (cf. prior remarks about people collaborating with Tsipursky as a damage limitation exercise). I have yet to meet an EA with a view of InIn’s content better than mediocre-to-poor.

Due to this, the fact that the social ‘reach’ of InIn is mostly illusory may be a blessing in disguise: I am genuinely uncertain whether low-quality promotion of sort-of EA is better than nothing given it may add noise to higher quality signal notwithstanding the (likely fairly scant) counterfactual donations it may elicit. In any case, that it is illusory is a black mark against InIn’s instrumental competencies necessary for being an effective outreach organisation.

What I find especially shocking is that this meagre output is the result of gargantuan amounts of time spent. Tsipursky states across assistants, volunteers, and staff, about 1000 hours are spent on InIn each week: if so, InIn is likely the leader among all EA orgs for hours spent—yet, by any measure of outputs, it is comfortably among the worst.

Would that it were just a problem of InIn being ineffective. The document above illustrates not only a wide-ranging pattern of at-best-shady practices, but a meta-pattern of Tsipursky persisting with these practices despite either being told not to or saying himself he wasn’t doing them or won’t do them again. This record is challenging to reconcile with Tsipursky acting in good faith, although I can fathom the possibility given the breadth and depth of his incompetence. Regardless of intention, I am confident the pattern of dodgy behaviour will continue with at most cosmetic modification, and it will continue to prove recalcitrant to any attempts to explain or persuade Tsipursky of his errors.

These issues incur further costs to Effective Altruism. There are obvious risks that donors ‘fall for’ InIn’s self-promotion and donate to it instead of something better. There are similar reputational risks of InIn’s behaviour damaging the EA brand independent of any risks from its content. Internally, acts like this may act to burn important commons in how individuals and organisations interact in the EA community. Finally, although in part self-inflicted, monitoring and reporting these things sucks up time and energy from other activities: although my time is basically worthless, the same cannot be said for the other contributors.

In sum: InIn’s message is at best a cargo cult version of EA with dubious value. Despite being an outreach organisation, it is incompetent at fundamental competencies for its mission. A shocking number of volunteer hours are being squandered. Tsipursky is incapable of conducting himself to commonsense standards of probity, let alone higher ones that should apply to the leader of an EA organisation. This behaviour incurs further external and internal costs to the EA movement. I see essentially no prospect of these problems being substantially remediated such that InIn’s benefit to the community outweighs its costs, still more so such that it would be competitive with other EA groups or initiatives. Stay away.

[Edit: I previously said ‘[InIn] is comfortably the worst [in terms of outputs]’, it has been pointed out there may be other groups with similarly poor performance, so I’ve (much belatedly) changed the wording.]

I suspect the reason InIn’s quality is low is because, given their reputation disadvantage, they cannot attract and motivate the best writers and volunteers. I strongly relate to your concerns about the damage that could be done if InIn does not improve. I have severely limited my own involvement with InIn because of the same things you describe. My largest time contribution by far has been in giving InIn feedback about reputation problems and general quality. A while back, I felt demoralized with the problems, myself, and decided to focus more on other things instead. That Gleb is getting so much attention for these problems right now has potential to be constructive.

Gleb can’t improve InIn until he really understands the problem that’s going on. I think this is why Intentional Insights has been resistant to change. I hope I provided enough insight in my comment about social status instincts for it to be possible for us all to overcome the inferential distance.

I’m glad to see that so many people have come together to give Gleb feedback on this. It’s not just me trying to get through to him by myself anymore. I think it’s possible for InIn to improve up to standards with enough feedback and a lot of work on Gleb’s part. I mean, that is a lot of work for Gleb, but given what I’ve seen of his interest in self-improvement and his level of dedication to InIn, I believe Gleb is willing to go through all of that and do whatever it takes.

Really understanding what has gone wrong with Intentional Insights is hard, and it will probably take him months. After he understands the problems better, he will need a new plan for the organization. All of that is a lot of work. It will take a lot of time.

I think Gleb is probably willing to do it. This is a man who has a tattoo of Intentional Insights on his forearm. Because I believe Gleb would probably do just about anything to make it work, I would like to suggest an intervention.

In other words, perhaps we should ask him to take a break from promoting Intentional Insights for a while in order to do a bunch of self-improvement, make his major updates and plan out a major version upgrade for Intentional Insights.

Perhaps I didn’t get the memo, but I don’t think we’ve tried organizing in order to demand specific constructive actions first before talking about shutting down Intentional Insights and/or driving Gleb out of the EA movement.

The world does need an org that promotes rationality to a broader audience… and rationalists aren’t exactly known for having super people skills… Since Gleb is so dedicated and is willing to work really hard, and since we’ve all finally organized in public to do something about this, maybe we ought to try using this new source of leverage to heave him onto the right track.

Hello Kathy,

I have read your replies on various comment threads on this post. If you’ll forgive the summary, your view is that Tsipursky’s behaviour may arise from some non-malicious shortcomings he has, and that, with some help, these can be mitigated, thus leading InIn to behave better and do more good. In medicalese, I’m uncertain of the diagnosis, strongly doubt the efficacy of the proposed management plan, and I anticipate a bleak prognosis. As I recommend generally, I think your time and laudable energy are better spent elsewhere.

A lot of the subsequent discussion has looked at whether Tsipursky’s behaviour is malicious or not. I’d guess in large part it is not: deep incompetence combined with being self-serving and biased towards one’s org to succeed probably explain most of it. Regrettably, Tsipursky’s response to this post (e.g. trumped-up accusations against Jeff and Michelle, pre-emptive threats if his replies are downvoted, veiled hints at ‘wouldn’t it be bad if someone in my position started railing against EA’, etc.) seems to fit well with malice.

Yet this is fairly irrelevant. Tsipursky is multiply incompetent: at creating good content, at generating interest in his org (i.e. almost all of its social media reach is illusory), at understanding the appropriate ambit for promotional efforts, at not making misleading statements, and at changing bad behaviour. I am confident that any EA I know in a similar position would not have performed as badly. I highly doubt this can all be traced back to a single easy-to-fix flaw. Furthermore, I understand multiple people approached Tsipursky multiple times about these issues; the post documents problems occurring over a number of months. The outside view is not favourable to yet further efforts.

In any case, InIn’s trajectory in the EA community is probably fairly set at this point. As I write this, InIn is banned from the FB group, CEA has officially disavowed it, InIn seems to have lost donors and prospective donations from EAs, and my barometer of ‘EA public opinion’ is that almost all EAs who know of InIn and Tsipursky have very adverse attitudes towards both. Given the understandable reticence of EAs towards corporate action like this, one can anticipate these decisions have considerable inertia. A nigh-Damascene conversion of Tsipursky and InIn would be required for these things to begin to move favourably to InIn again.

In light of all this, attempting to ‘reform InIn’ now seems almost as ill-starred as trying to reform a mismanaged version of homeopaths without borders: so great a transformation is required that it is surely worth starting afresh. The opportunity cost is also substantial as there are other better performing EA outreach orgs (i.e. all of them), which promise far greater returns on the margin for basically any return one might be interested in. Please help them out instead.

I’m not completely sure what’s going on with Gleb, but I feel a great deal of concern for people with Asperger’s, and I think it made me overly sympathetic in this case. Thank you for this.

One thing to consider is that too much charity for Gleb is actively harmful for people with ASDs in the community.

If I am at a party of a trusted friend and know they’ve only invited people they trust, and someone hurts my feelings, I’m likely to ascribe it to a misunderstanding and talk it out with them. If I’m at a party where lots of people have been jerks to me before, and someone hurts my feelings, I’m likely to assume this person is a jerk too and withdraw.

By saying “I’m updating” and then committing the same problems again, Gleb is lessening the value of the words. He is teaching people it’s not worth correcting others, because they won’t change. This is most harmful to the people who most need the most direct feedback and the longest lead time to incorporate it.

Wow. More excellent arguments. More updates on my side. You’re on fire. I almost never meet people who can change my mind this much. I would like to add you as a friend.

[This was originally a comment calling for Gleb to leave the EA community with various supporting arguments, but I’ve decided I don’t endorse online discussions as a mechanism for asking people to leave EA. See this comment of mine for more.]

He wrote that he is a ‘monthly donor’ to CFAR.

On the other hand a cynic might note that he has used his interactions with CFAR to promote himself and his organization, e.g. his linked favorable review of CFAR comes with a few plugs for Intentional Insights, and CFAR (or rather the erroneous acronym-unpacking ‘Center for Advanced Rationality’) appeared as a collaboration in InIn promotional documents. My understanding is that the impression that he was aligned with CFAR (and EA) had also made some CFAR donors more open to InIn fundraising pitches.

He has also taken the Giving What We Can pledge, but I don’t know what that means. He has said he and his wife fund most of InIn’s budget (which would presumably be more than 10% of his income) and claims that it is highly effective, so he might take that to satisfy his pledge.

[Disclosure: my wife is the executive director of CFAR, but I am speaking only for myself.]

Note: I am socially peripheral to EA-the-community and philosophically distant from EA-the-intellectual-movement; salt according to taste.

While I understand the motivation behind it, and applaud this sort of approach in general, I think this post and much of the public discussion I’ve seen around Gleb are charitable and systematic in excess of reasonable caution.

My first introduction to Gleb was Jeff’s August post, read before there were any comments up, and it seemed very clear that he was acting in bad faith and trying to use community norms of particular communication styles, owning up to mistakes, openness to feedback, etc. to disarm those engaging honestly and enable the con to go on longer. I don’t think I’m an especially untrusting person (quite the opposite, really), but even if that’s the case nearly every subsequent revealed detail and interaction confirmed this. Gleb responds to criticism he can’t successfully evade by addressing it in only the most literal and superficial manner, and continues on as before. It is to the point that if I were Gleb, and had somehow honestly stumbled this many times and fell into this pattern over and over, I would feel I had to withdraw on the grounds that no one external to my own thought processes could possibly reasonably take me seriously and that I clearly had a lot of self-improvement to do before engaging in a community like this in the future.

The responses to this behavior that I’ve seen are overwhelmingly of the form of taking Gleb seriously, giving him the benefit of the doubt where none should exist, providing feedback in good faith, and responding positively to the superficial signs Gleb gives of understanding. This is true even for people who I know have engaged with him before. I’m not completely confident of this, but the pattern looks like people are applying the standards of charity and forgiveness that would be appropriate for any one of these incidents in isolation, not taking into account that the overall pattern of behavior makes such charitable interpretations increasingly implausible. On top of that, some seem to have formed clear final opinions that Gleb is not acting in good faith, yet still use very cautious language and are hesitant to take a single step beyond what they can incontrovertibly demonstrate to third parties.

A few examples from this post, not trying to be comprehensive:

Using the word “concerns” in the title and introductory matter

Noting that Gleb doesn’t “appear” to have altered his practices around name-dropping

Saying “Tsipursky either genuinely believed posts like the above do not ask for upvotes, or he believed statements that are misleading on common-sense interpretation are acceptable providing they are arguably ‘true’ on some tendentious reading.” without bringing up the possibility of him knowing exactly what he’s doing and just lying

Calling Gleb’s self-proclaimed bestselling author status only “potentially” misleading.

Moreover, the fully comprehensive nature of the post and the painstaking lengths it goes to separate out definitely valid issues from potentially invalid ones seems to be part of the same pattern. No one, not even Gleb, is claiming that these instances didn’t happen or that he is being set up, yet this post seems to be taking on a standard appropriate for an adversarial court of law.

And this is a problem, because in addition to wasting people’s time it causes people less aware of these issues to take Gleb more seriously, encourages him to continue behaving as he has been, and I suspect in some cases inclines even the more knowledgeable people involved to trust Gleb too much in the future, despite whatever private opinions they may have of his reliability. At some point there needs to be a way for people to say “no, this is enough, we are done with you” in the face of bad behavior; in this case if that is happening at all it is being communicated behind-the-scenes or by people silently failing to engage. That makes it much harder for the community as a whole to respond appropriately.

I take your point as “aren’t we being too nice to this guy?” but I actually really like the approach taken here, which seems extremely fair-minded and diligent. My suspicion is this sort of stuff is long-term really valuable because it establishes good norms for something that will likely recur in future. I’d be much more inclined to act with honesty if I believed people would do an extremely thorough public investigation into everything I’d said, rather than just calling me names and walking away.

I don’t understand what you’re claiming here. Are you saying you’d be honest in a community if you thought it would investigate you a lot to determine your honesty, but dishonest otherwise? Why not just be honest in all communities, and leave the ones you don’t like?

I think he means that it is human behaviour to do that, not that he does it himself.

I literally still don’t understand. I can understand the motivation to be an asshole in communities you think won’t treat you fairly, but why be a lying asshole? I think the OP wrote “honesty” and meant something else.

I think the common point of intervention for people telling mis-truths is not holding themselves back when they don’t really have enough evidence. A person might be about to write off a quick reply, and in most communities, know that they’re not going to be held accountable for any mischaracterisations of others’ opinions, or referring inaccurately to studies and data. In those communities, the comments are awful. In communities where you know that, if you do this over a sustained period, Carl Shulman, Jeff Kaufman, Oliver Habryka, Gregory Lewis and more are gonna write tens of thousands of words documenting your errors, you’ll be more likely to note when you haven’t quite substantiated the comment you’re about to hit ‘send’ on.

There’s an important difference between repeatedly making errors, jumping to conclusions, or being attached to a preconceived notion (all of which I’ve personally done in front of Carl plenty of times), and the sort of behavior described in the OP, which seems more like intentional misrepresentation for the sake of climbing a social status gradient.

I’d like to agree partially with MichaelPlant and Paul_Crowley, in so far as I’m glad that I’m part of a community that responds to problems in such a charitable and diligent manner. However, I feel they missed the most important point of shlevy’s comment. Without arguing for a less fair-minded and thoughtful response, we can still ask the following: Gleb started InIn back in 2014; why did it take us two years to get to the point where we were able to call him out on his bad behaviour? This could’ve been called out much earlier.

I think the answer looks like this:

Firstly, Gleb has learned the in-group signals of communicating in good-faith (for example, at every criticism, he says he has “updated”, and he says ‘thank you’ for criticism). This alone is not a problem—it would merely take a few people to realise this, call it out, and then he could be asked to leave the community.

There’s a second part however, which is that once a person has learned (from experience) that Gleb is acting in bad faith, the next time that person comes to the discussion, everybody else sees the standard signals of good-faith communication, and as such the person may be hesitant to treat Gleb as they would treat someone else who was clearly acting in bad faith. This is because they would be seen as unnecessarily harsh by people without the background experiences—as was seen multiple times in the original Facebook thread, when people (who did not have the past experience with Gleb) were confused by the harshness of the criticism, and criticised the tone of the conversation. My guess for the fundamental reason that we are having this conversation now, is that Jeff Kaufman bravely made his beliefs about Gleb common knowledge—he made a blog post about InIn, after which everyone else realised “Oh, everyone else believes this too. I’m not worried any more that everyone will think negatively of me for acting as though Gleb is acting in bad faith. I will now let out the piled up problems I have with Gleb’s behaviour.”

To re-iterate, it’s delightful to be part of a community that responds to this sort of situation by spending ~100s of hours (collectively) and ~100k words (I’m counting the original Facebook thread as well as the post here) analysing the situation and producing a considered, charitable yet damning report. However, it’s important to realise that there are communities out there for whom Gleb would’ve been outed in months rather than years, and without the time of many top researchers in the community wasted.

I’m not sure what the correct norms to have are. I’d suggest that we should be more trusting that when someone in the community criticises someone else not in the community, they’re doing it for good reasons. However, writing that out is almost self-refuting: that’s what all insular communities are doing. Perhaps we could appoint a small group of moderators for the community whom we trust. That’s how good online communities often work; perhaps the model can be extended to the EA community (which is significantly more than just an online community). I certainly want to sustain the excellent norms of charity, diligence and respect that we currently have, something necessary to any successful intellectual project.

I just want to highlight that I feel like part of this post is based on a false premise; you mention InIn was started in 2014. While that may be true, all of the incidents in EA (and Less Wrong) circles cited above date to November 2015 or later. Gleb’s very first submission in the EA forum is in October 2015. By saying ‘it took two years’ and then talking about ‘months rather than years’ you give the impression that Gleb could have been excluded sometime back in 2015 and would have been elsewhere, which I think is pretty misleading (though presumably unintentionally so).

The truth is that it took a little over 9 months from Gleb’s first post to Jeff’s major public criticism. 9 months and a decent amount of time is not trivial. But let’s not overstate the problem.

“There’s a second part however, which is that once a person has learned (from experience) that Gleb is acting in bad faith, the next time that person comes to the discussion, everybody else sees the standard signals of good-faith communication, and as such the person may be hesitant to treat Gleb as they would treat someone else who was clearly acting in bad faith. This is because they would be seen as unnecessarily harsh by people without the background experiences—as was seen multiple times in the original Facebook thread, when people (who did not have the past experience with Gleb) were confused by the harshness of the criticism, and criticised the tone of the conversation.”

I do strongly agree with this. I had some very frustrating conversations around that thread.

Pretty much agree with you and shlevy here, except that the wasting hundreds of collective hours carefully checking that Gleb is acting in bad faith seems more like a waste to me.

If the EA community were primarily a community that functioned in person, it would be easier and more natural to deal with bad actors like Gleb; people could privately (in small conversations, then bigger ones, none of which involve Gleb) discuss and come to a consensus about his badness, that consensus could spread in other private smallish then bigger conversations none of which involve Gleb, and people could either ignore Gleb until he goes away, or just not invite him to stuff, or explicitly kick him out in some way.

But in a community that primarily functions online, where by default conversations are public and involve everyone, including Gleb, the above dynamic is a lot harder to sustain, and instead the default approach to ostracism is public ostracism, which people interested in charitable conversational norms understandably want to avoid. But just not having ostracism at all isn’t a workable alternative; sometimes bad actors creep into your community and you need an immune system capable of rejecting them. In many online communities this takes the form of a process for banning people; I don’t know how workable this would be for the EA community, since my impression is that it’s spread out across several platforms.

Seems worth establishing the fact that bad actors exist, will try to join our community, and engage in this pattern of almost plausibly deniable shamelessly bad behavior. I think EAs often have a mental block around admitting that in most of the world, lying is a cheap and effective strategy for personal gain; I think we make wrong judgments because we’re missing this key fact about how the world works. I think we should generalize from this incident, and having a clear record is helpful for doing so.

Yes! But… you said your opening line as though it disagreed somehow? I said:

I may be misinterpreting you here; you wrote

and while I think this behavior is in some sense admirable, I think it is on net not delightful, and the huge waste of time it represents is bad on net except to the extent that it leads to better community norms around policing bad actors.

Yup, we are in agreement.

(I was just noting how sweet it was that we do this much more kindly than most other communities. It’s totally not optimal though.)

Yes, insofar communities do that, but typically in emotive and highly biased ways. EA at least has more constructive norms for how these things are discussed. It’s not perfect, and it’s not fast, but here I see people taking pains to be as fair-minded as they can be. (We achieve that to different degrees, but the effort is expected.)

My System 1 doesn’t like this. Giving this power to a group of people and suggesting that we accept their guidance… that feels cultish, and not very compatible with a community of critical thinkers.

Scientific departments have ethics boards. Good online communities (e.g. Hacker News) have moderators. Society as a whole has a justice part of governance, and other groups that check on the decisions made by the courts. Suggesting that it feels cult-y to outsource some of our community norm-enforcement (so as to save the community as a whole significant time input, and make the process more efficient and effective) is… I’m just confused every time someone calls something totally normal ‘cult-y’.

I deliberately said “My System 1 doesn’t like this.” and “that feels cultish” – on an intuitive level, I feel uncomfortable, and I’m trying to work out why. I do see value in having effective gatekeepers.

I’m not even sure what it means to be “banned” from a movement consisting of multiple organisations and many individuals. It may be that if the process is clearly defined, and we know who is making the decision, on whose behalf, I’d be more comfortable with it.

Thanks for clarifying!

Just in case you’re interested: I think the word ‘cultish’ is massively overloaded (with negative connotations) and mis-used. I’d also point out that saying that a statement is one’s gut feeling isn’t equivalent to saying one doesn’t endorse the feeling, and so I felt pretty defensive when you suggested my idea was cultish and not compatible with our community.

I wrote this because I thought you might prefer to know the impacts of your comments rather than not hearing negative feedback. My apologies in advance if that was a false assumption.

Thanks – helpful feedback (and from Owen also). In hindsight I would probably have kept the word “cultish” while being much more explicit about not completely endorsing the feeling.

Something went wrong with the communication channel if you ended up feeling defensive.

However, despite generally agreeing with you about problems with the word “cultish”, I actually think this is a reasonable use-case. It has a lot of connotations, and it was being reported that the description was triggering some of those connotations in the reader. That’s useful information that it may be worth some effort to avoid it being perceived that way if the idea is pursued (your stack of examples makes it pretty clear that it is avoidable).

I think being too nice is a failure mode worth worrying about, and your points are well taken. On the other hand, it seems plausible to me that it does a more effective job of convincing the reader that Gleb is bad news precisely by demonstrating that this is the picture you get when all reasonable charity is extended.

Shlevy, I think I might actually agree with everything you said here with the exception of the characterization of Intentional Insights as a “con”.

I can see the behavior on the outside very clearly. On the outside Gleb has said a list full of incorrect things.

On the inside, the picture is not so clear. What’s going on inside his head?

If this is a con, what in the world does he want? He can’t seem to make money off of this. Con artists have a tendency to do very, very quick things, with a very very low amount of effort, hoping to gain some disproportionate reward. Gleb is doing the opposite. He has invested an enormous amount of time (Not to mention a permanent Intentional Insights tattoo!) and (as far as I know) has been concerned about finances the whole time. He’s not making a disproportionate amount of money off of this… and spreading rationality doesn’t even look like one of those things which a con artist could quickly do for a disproportionate reward… so I am confused.

If I thought Intentional Insights was a con, I’d be right with you trying to make that more obvious to everyone… but I launched my con detector and that test was negative.

Maybe you use a different con detector. Maybe, to you, it is irrelevant whether Gleb is intentionally malicious or merely incompetent. Perhaps you would use the word “con” either way just as people use the word “troll” either way.

For the same reasons that we should face the fact that there’s a major problem with the inaccuracies Intentional Insights outputs, I think we ought to label the problem we’re seeing with Intentional Insights as accurately as possible.

Whether Gleb is incompetent or malicious is really important to me. If Gleb is doing this because of a learning disorder, I would really like to see more mercy. According to Wikipedia’s page on psychological trauma, there are a lot of things about this post which Gleb may be experiencing as traumatic events. For instance: humiliation, rejection, and major loss. (https://en.wikipedia.org/wiki/Psychological_trauma)

As some kind of weird hybrid between a bleeding heart and a shrewd person, I can’t justify anything but minimizing the brutality of a traumatic event for someone with a learning disorder, no matter how destructive it is. At the same time, I agree that ousting destructive people is a necessity if they won’t or can’t change, but I think in the case of an incompetent person, there are a lot of ways in which the community has been too brutal. In the event of a malicious con, we’ve been too charitable, and I’m guilty of this as well. If Gleb really is a con artist, we should be removing him as fast as possible. I just don’t see strong evidence that the problem he has is intentional, nor does it even seem to be clearly differentiated from terrible social skills and general ignorance about marketing.

Our response is too brutal for someone with a learning disorder or other form of incompetence, and it’s too charitable for a con artist. In order to move forward, I think perhaps we ought to stop and solve this disagreement.

Here’s what’s at stake: currently, I intend to advocate for an intervention*. If you convince me that he is a con artist, I will abandon this intent and instead do what you are doing. I’ll help people see the con.

* (By intervention, I mean: encouraging everyone to tell Gleb we require him to shape up or ship out, to negotiate things like what we mean by shape up and how we would like him to minimize risk while he is improving. If he has a learning disorder, a bit of extra support could go a long way if the specific problems are identified so the support can target them accurately. I suspect that Gleb needs to see a professional for a learning disorder assessment, especially for Asperger’s.)

I’m open to being convinced that Intentional Insights actually does qualify as some type of con or intends net negative destructive behavior. I don’t see it, but I’d like to synchronize perspectives, whether I “win” or “lose” the disagreement.

I don’t think incompetent and malicious are the only two options (I wouldn’t bet on either as the primary driver of Gleb’s behavior), and I don’t think they’re mutually exclusive or binary.

Also, the main job of the EA community is not to assess Gleb maximally accurately at all costs. Regardless of his motives, he seems less impactful and more destructive than the average EA, and he improves less per unit feedback than the average EA. Improving Gleb is low on tractability, low on neglectedness, and low on importance. Spending more of our resources on him unfairly privileges him and betrays the world and forsakes the good we can do in it.

Views my own, not my employer’s.

That was a truly excellent argument. Thank you.

Thanks Kathy!

Witch hunting and attacks do nothing for anyone.

Which is fine.

People can look at clear and concise summaries like the one above and come to their own conclusion. They don’t need to be told what to believe and they don’t need to be led into a groupthink.

Attacking people who are bad protects other people in the community from having their time wasted or being hurt in other ways by bad people. Try putting yourself in the shoes of the sort of people who engage in witch hunts because they’re genuinely afraid of witches, who if they existed would be capable of and willing to do great harm.

To be clear, it’s admirable to want to avoid witch hunts against people who aren’t witches and won’t actually harm anyone. But sometimes there really are witches, and hunting them is less bad than not.

This approach doesn’t scale. Suppose the EA community eventually identifies 100 people at least as bad as Gleb in it, and so generates 100 separate posts like this (costing, what, 10k hours collectively?) that others have to read and come to their own conclusions about before they know who the bad actors in the EA community are. That’s a lot to ask of every person who wants to join the EA community, not to mention everyone who’s already in it, and the alternative is that newcomers don’t know who not to trust.

The simplest approach that scales (both with the size of the community and with the size of the pool of bad actors in it) is to kick out the worst actors so nobody has to spend any additional time and/or effort wondering / figuring out how bad they are.

Yes, but Gleb isn’t actively hurting anyone. You need an ironclad rationale before deciding to just build a wall in front of people who you think are unhelpful.

Even if you could really have 100 people starting their own organizations related to EA… it’s not relevant. Just because it won’t scale doesn’t mean it’s not the right approach with 1 person. We might think that the time and investment now is worthwhile, whereas if there were enough questionable characters that we didn’t have the time to do this with all of them, then (and only then) we’d be compelled to scale back.

The problem is that Gleb is manufacturing false affiliations in the eyes of outsiders, and outsiders who only briefly glance at lengthy, polite documents like this one are unlikely to realize that’s what’s happening.

Gleb did lots of things and the post describes them, so it’s about more than just manufacturing false affiliations. The issue is not that the post is too long or contains too many details; that’s a silly thing to complain about. The issue is whether the post should be adversarial and whether it should manufacture a dominant point of view. The answer to that is No.

In the original Facebook thread I was highly critical of Intentional Insights. I have not read all the followup here yet, but I would like to note that after that thread the next “thing” I saw from Intentional Insights was this post about EA marketing. I thought that was a highly competent and interesting contribution to the EA community. All of the ongoing concerns about II may stand, but there are clearly a few people associated with the org who have valuable contributions to make to the future of the community.

The most embarrassing aspect of the exclusionary, witch hunt, no-due-diligence point of view which some people are advocating in the comments here is that it probably would have merited the early and permanent exclusion of the Singularity Institute/MIRI from the EA community. Holden wrote a blog post on LessWrong saying that he didn’t like their organization and didn’t think they were worth funding. Assorted complaints have been floating around the web for a long time about them associating with neoreactionaries and about LessWrong being cultists, as well as complaints about the way they communicate and write. There have been a few odd ‘incidents’ (if you can call them that) over the years between MIRI, LessWrong, and the rationalist sphere. It would be easy to jumble all of that together into some kind of meta-post documenting concerns, and there is certainly no shortage of people who are willing and able to write long impassioned posts expressing their feelings, saying that they want nothing to do with SIAI/MIRI, and recommending that others do the same. We could have done that, lots of people would have come out of the woodwork to add their own complaints, the conversation would have reached critical mass, and boom: all of a sudden, half the steam behind AI safety goes down the tubes.

It’s easy to find online communities today where people are mind-numbingly dismissive of anything AI-related due to a poorly-argued, critical-mass groupthink against everything LessWrong. Good thing that we’re not one of them.

I agree that it’s important that EA stay open to weird things and not exclude people solely for being low status. I see several key distinctions between early SI/early MIRI and Intentional Insights:

SI was cause focused, II a fundraising org. Causes can be argued on their merits. For fundraising, “people dislike you for no reason” is in and of itself evidence you are bad at fundraising and should stop.

I think this is an important general lesson. Right now “fundraising org” seems to be the default thing for people to start, but it’s actually one of the hardest things to do right and has the worst consequences if it goes poorly. With the exception of local groups, I’d like to see the community norms shift to discourage inexperienced people from starting fundraising groups.

AFAIK, SI wasn’t trying to use the credibility of the EA movement to bolster itself. Gleb is, both explicitly (by repeatedly and persistently listing endorsements he did not receive) and implicitly. As long as he is doing that, the proportionate response is criticizing him/distancing him from EA enough to cancel out the benefits.

The effective altruism name wasn’t worth as much when MIRI was getting started. There was no point in faking an endorsement because no one had heard of us. Now that EA has some cachet with people outside the movement there exists the possibility of trying to exploit that cachet, and it makes sense for us to raise the bar on who gets to claim endorsement.

Chronological nitpick: SingInst (which later split into MIRI and CFAR) is significantly older than the EA name and the EA movement, and the movement’s birth and growth are attributable in significant part to SingInst and CFAR projects.

My experience (as someone connected to both the rationalist and Oxford/Giving What We Can clusters as EA came into being) is that its birth came out of Giving What We Can, and the communities you mentioned contributed to growth (by aligning with EA) but not so much to birth.

You can equally draw a list of distinctions which point in the other direction: distinctions that would have made it more worthwhile to exclude MIRI than to exclude InIn. I’ve listed some already.

I don’t think this comparison holds water. Briefly, I think SI/MIRI would have mostly attracted criticism for being weird in various ways. As far as I can tell, Gleb is not acting weird; he is acting normal in the sense that he’s making normal moves in a game (called Promote-Your-Organization-At-All-Costs) that other people in the community don’t want him playing, especially not in a way that implicates other EA orgs by association.

Whatever you think of that object-level point, an independent meta-level point: it’s also possible that the EA movement excluding SI/MIRI at some point would have been a reasonable move in expectation. Any policy for deciding who to kick out necessarily runs the risk of both false positives and false negatives, and pointing out that a particular policy would have caused some false positive or false negative in the past is not a strong argument against it in isolation.

They’ve attracted criticism for more substantial reasons; many academics didn’t and still don’t take them seriously because they have an unusual point of view. And other people believe that they are horrible people who are in between neoreactionary racists and a Silicon Valley conspiracy to take people’s money. It’s easy to pick up on something being a little off-putting and then get carried down the spiral of looking for and finding other problems. The original and underlying reason people have been pissed about InIn this entire time is that they are aesthetically displeased by their content. “It comes across as spammy and promotional”. An obvious typical mind fallacy. If you can fall for that then you can fall for “Eliezer’s writing style is winding and confusing.”

Highly implausible.

AI safety is a large issue. MIRI has done great work and has itself benefited tremendously from its involvement. Besides that, there have been many benefits to EA for aligning with rationalists more generally.

Yes, but people are taking this case to be a true positive that proves the rule, which is no better.

Some of the criticisms I’ve read of MIRI are so nasty that I hesitate to rehash them all here for fear of changing the subject and sidetracking the conversation. I’ll just say this:

MIRI has been accused of much worse stuff than this post is accusing Gleb of right now. Compared to that weird MIRI stuff, Gleb looks like a normal guy who is fumbling his way through marketing a startup. The weird stuff MIRI / Eliezer did is really bizarre. For just one example, there are places in The Sequences where Eliezer presented his particular beliefs as The Correct Beliefs. In the context of a marketing piece, that would be bad (albeit in a mundane way that we see often), but in the context of a document on how to think rationally, that’s more like… egregious blasphemy. It’s a good thing the guy counter-balanced whatever that behavior was with articles like “Screening Off Authority” and “Guardians of the Truth”.

Do some searches for web marketing advice sometime, and you’ll see that Gleb might have actually been following some kind of instructions in some of the cases listed above. Not the best instructions, mind you… but somebody’s serious attempt to persuade you that some pretty weird stuff is the right thing to do. This is not exactly a science… it’s not even psychology. We’re talking about marketing. For instance, paying Facebook to promote things can result in problems… yet this is recommended by a really big company, Facebook. :/

There are a few complaints against him that stand out as a WTF… (Then again, if you’re really scouring for problems, you’re probably going to find the sorts of super embarrassing mistakes people only make when they’re really exhausted or whatever. I don’t know what to make of every single one of these examples yet.)