Sharing Information About Nonlinear

Added (11th Sept): Nonlinear have commented that they intend to write a response, have written a short follow-up, and claim that they dispute 85 claims in this post. I’ll link here to that if-and-when it’s published.

Added (11th Sept): One of the former employees, Chloe, has written a lengthy comment personally detailing some of her experiences working at Nonlinear and the aftermath.

Added (12th Sept): I’ve made 3 relatively minor edits to the post. I’m keeping a list of all edits at the bottom of the post, so if you’ve read the post already, you can just go to the end to see the edits.

Added (15th Sept): I’ve written a follow-up post saying that I’ve finished working on this investigation and do not intend to work more on it in the future. The follow-up also has a bunch of reflections on what led up to this post.

Added (12th Dec): Nonlinear has written a lengthy reply, which you can read here.

Epistemic status: Once I started actively looking into things, much of my information in the post below came about by a search for negative information about the Nonlinear cofounders, not from a search to give a balanced picture of its overall costs and benefits. I think standard update rules suggest not that you ignore the information, but that you think about how bad you would expect the information to be if I selected for the worst credible info I could share, and then update based on how much worse (or better) it is than what you expect I could produce. (See section 5 of this post about Mistakes with Conservation of Expected Evidence for more on this.) This seems like a worthwhile exercise for at least non-zero people to do in the comments before reading on. (You can condition on me finding enough to be worth sharing, but also note that I think I have a relatively low bar for publicly sharing critical info about folks in the EA/x-risk/rationalist/etc ecosystem.)

tl;dr: If you want my important updates quickly summarized in four claims-plus-probabilities, jump to the section near the bottom titled “Summary of My Epistemic State”.

When I used to manage the Lightcone Offices, I spent a fair amount of time and effort on gatekeeping — processing applications from people in the EA/x-risk/rationalist ecosystem to visit and work from the offices, and making decisions. Typically this would involve reading some of their public writings, and reaching out to a couple of their references that I trusted and asking for information about them. A lot of the people I reached out to were surprisingly great at giving honest references about their experiences with someone and sharing what they thought about someone.

One time, Kat Woods and Drew Spartz from Nonlinear applied to visit. I didn’t know them or their work well, except that from a few brief interactions Kat Woods seemed high-energy and to have a more optimistic outlook on life and work than most people I encounter.

I reached out to some references Kat listed, which were positive to strongly positive. However, I also got a strongly negative reference: someone else whom I informed about the decision told me they knew former employees who felt taken advantage of around things like salary. The former employees reportedly didn’t want to come forward due to fear of retaliation and a general desire to get away from the whole thing, and the reports felt very vague and hard for me to concretely visualize, but the person nonetheless strongly recommended against inviting Kat and Drew.

I didn’t feel like this was a strong enough reason to bar someone from a space — or rather, I did, but vague anonymous descriptions of very bad behavior being sufficient to ban someone is a system that can be straightforwardly abused, so I don’t want to use such a system. Furthermore, I was interested in getting my own read on Kat Woods from a short visit — she had only asked to visit for a week. So I accepted, though I informed her that this weighed on my mind. (This is a link to the decision email I sent to her.)

(After making that decision I was also linked to this ominous yet still vague EA Forum thread, which includes a former coworker of Kat Woods saying they did not like working with her, more comments like the one I received above, and links to a lot of strongly negative Glassdoor reviews for Nonlinear cofounder Emerson Spartz’s former company “Dose”. Note that more than half of the negative reviews are for the company after Emerson sold it, but this is a concerning one from 2015 (while Emerson Spartz was CEO/Cofounder): “All of these super positive reviews are being commissioned by upper management. That is the first thing you should know about Spartz, and I think that gives a pretty good idea of the company’s priorities… care more about the people who are working for you and less about your public image”. A 2017 review says “The culture is toxic with a lot of cliques, internal conflict, and finger pointing.” There are also far worse reviews about a hellish workplace which are very worrying, but they’re from the period after Emerson’s LinkedIn says he left, so I’m not sure to what extent he is responsible for them.)

On the first day of her visit, another person in the office privately reached out to me saying they were extremely concerned about having Kat and Drew in the office, and that they knew two employees who had had terrible experiences working with them. They wrote (and we later discussed it more):

Their company Nonlinear has a history of illegal and unethical behavior, where they will attract young and naive people to come work for them, and subject them to inhumane working conditions when they arrive, fail to pay them what was promised, and ask them to do illegal things as a part of their internship. I personally know two people who went through this, and they are scared to speak out due to the threat of reprisal, specifically by Kat Woods and Emerson Spartz.

This sparked (for me) a 100-200 hour investigation where I interviewed 10-15 people who interacted or worked with Nonlinear, read many written documents and tried to piece together some of what had happened.

My takeaway is that indeed their two in-person employees had quite horrendous experiences working with Nonlinear, and that Emerson Spartz and Kat Woods are significantly responsible both for the harmful dynamics and for the employees’ silence afterwards. Over the course of investigating Nonlinear I came to believe that the former employees there had no legal employment, tiny pay, a lot of isolation due to travel, had implicit and explicit threats of retaliation made if they quit or spoke out negatively about Nonlinear, simultaneously received a lot of (in my opinion often hollow) words of affection and claims of familial and romantic love, experienced many further unpleasant or dangerous experiences that they wouldn’t have if they hadn’t worked for Nonlinear, and needed several months to recover with friends and family afterwards before they felt able to return to work.

(Note that I don’t think the pay situation as described in the quoted text above was entirely accurate. I think the pay was very small ($1k/month) and employees implicitly expected they would get more than they did, but for the most part there was no ‘promised’ salary that went unpaid.)

After first hearing from them about their experiences, I still felt unsure about what was true: I didn’t know much about the Nonlinear cofounders, and I didn’t know which claims about the social dynamics I could be confident of. To get more context, I spent more than 30 hours on calls with 10-15 different people who had some professional dealings with at least one of Kat, Emerson and Drew, trying to build up a picture of the people and the org, and this helped me a lot in building my own sense of them by seeing what was common to many people’s experiences. I talked to many people who interacted with Emerson and Kat who had many active ethical concerns about them and strongly negative opinions, and I also had a 3-hour conversation with the Nonlinear cofounders about these concerns, and I now feel a lot more confident about a number of dynamics that the employees reported.

For most of these conversations I offered strict confidentiality, but (with the ex-employees’ consent) I’ve here written down some of the things I learned.

In this post I do not plan to name most of the people I talked to, but two former employees I will call “Alice” and “Chloe”. I think the people involved mostly want to put this time in their life behind them and I would encourage folks to respect their privacy, not name them online, and not talk to them about it unless you’re already good friends with them.

Conversation with Kat on March 7th, 2023

Returning to my initial experience: on the Tuesday of their visit, I still hadn’t been told who the people were or any details of what happened, but I found an opportunity to chat with Kat over lunch.

After catching up for ~15 mins, I indicated that I’d be interested in talking about the concerns I raised in my email, and we talked in a private room for 30-40 mins. As soon as we sat down, Kat launched straight into stories about two former employees of hers, telling me repeatedly not to trust one of the employees (“Alice”), that she has a terrible relationship with truth, that she’s dangerous, and that she’s a reputational risk to the community. She said the other employee (“Chloe”) was “fine”.

Kat Woods also told me that she expected to have a policy with her employees of “I don’t say bad things about you, you don’t say bad things about me”. I am strongly against this kind of policy on principle (as I told her then). This and other details (e.g. the salary policy) raised further red flags for me, and I wanted to understand what happened.

Here’s an overview of what she told me:

When they worked at Nonlinear, Alice and Chloe had expenses covered (room, board, food) and Chloe also got a monthly bonus of $1k/month.

Alice and Chloe lived in the same house as Kat, Emerson and Drew. Kat said that she has decided to not live with her employees going forward.

She said that Alice, who incubated her own project (here is a description of the incubation program on Nonlinear’s site), was able to set her own salary, and that Alice almost never talked to her (Kat) or her other boss (Emerson) about her salary.

Kat said she doesn’t trust Alice to tell the truth, and that Alice has a history of “catastrophic misunderstandings”.

Kat told me that Alice was unclear about the terms of the incubation, and said that Alice should have checked in with Kat in order to avoid this miscommunication.

Kat suggested that Alice may have quit in substantial part due to Kat missing a check-in call over Zoom toward the end.

Kat said that she hoped Alice would go by the principle of “I don’t say bad things about you, you don’t say bad things about me” but that the employee wasn’t holding up her end and was spreading negative things about Kat/Nonlinear.

Kat said she gives negative references for Alice, advising people “don’t hire her” and not to fund her, and saying “she’s really dangerous for the community”.

She said she didn’t have these issues with her other employee, Chloe; she said Chloe was “fine, just miscast” for her role of “assistant / operations manager”, which is what led to her quitting. Kat said Chloe was pretty skilled but did a lot of menial labor tasks for Kat that she didn’t enjoy.

The one negative thing she said about Chloe was that she was being paid the equivalent of $75k[1] per year (only $1k/month, the rest via room and board), but that at one point she asked for $75k on top of all expenses being paid and that was out of the question.[2]

A High-Level Overview of The Employees’ Experience with Nonlinear

Background

The core Nonlinear staff are Emerson Spartz, Kat Woods, and Drew Spartz.

Kat Woods has been in the EA ecosystem for at least 10 years, cofounding Charity Science in 2013 and working there until 2019. After a year at Charity Entrepreneurship, in 2021 she cofounded Nonlinear with Emerson Spartz, where she has worked for 2.5 years.

Nonlinear has received $599,000 from the Survival and Flourishing Fund in the first half of 2022, and $15,000 from Open Philanthropy in January 2022.

Emerson primarily funds the project through his personal wealth from his previous company Dose and from selling Mugglenet.com (which he founded). Emerson and Kat are romantic partners, and Emerson and Drew are brothers. They all live in the same house and travel across the world together, jumping from AirBnb to AirBnb once or twice per month. The staff they hire are either remote, or live in the house with them.

My current understanding is that they’ve had around 4 remote interns, 1 remote employee, and 2 in-person employees (Alice and Chloe). Alice was the only person to go through their incubator program.

Nonlinear tried to have a fairly high-commitment culture where the long-term staff are involved very closely with the core family unit, both personally and professionally. However they were given exceedingly little financial independence, and a number of the social dynamics involved seem really risky to me.

Alice and Chloe

Alice travelled with Nonlinear from November 2021 to June 2022 and started working for the org around February, and Chloe worked there from January 2022 to July 2022. After talking with them both, I learned the following:

Neither was legally employed by the non-profit at any point.

Chloe’s and Alice’s finances (along with Kat’s and Drew’s) all came directly from Emerson’s personal funds (not from the non-profit). This meant they had to get permission for their personal purchases and could not live apart from the family unit while they worked there, and they report feeling very socially and financially dependent on the family during that time.

Chloe’s salary was verbally agreed to come out to around $75k/year. However, she was only paid $1k/month, and otherwise had many basic things compensated, e.g. rent, groceries, travel. This was supposed to make traveling together easier, and supposed to come out to the same salary level. While Emerson did compensate Alice and Chloe with food and board and travel, Chloe does not believe that she was compensated to an amount equivalent to the salary discussed, and I believe no accounting was done for either Alice or Chloe to ensure that any salary matched up. (I’ve done some spot-checks of the costs of their AirBnbs and travel, and Alice/Chloe’s epistemic state seems pretty reasonable to me.)

Alice joined as the sole person in their incubation program. She moved in with them after meeting Nonlinear at EAG and having a ~4 hour conversation there with Emerson, plus a second Zoom call with Kat. Initially while traveling with them she continued her previous job remotely, but was encouraged to quit and work on an incubated org, and after 2 months she quit her job and started working on projects with Nonlinear. Over the 8 months she was there Alice claims she received no salary for the first 5 months, then (roughly) $1k/month salary for 2 months, and then after she quit she received a ~$6k one-off salary payment (from the funds allocated for her incubated organization). She also had a substantial number of emergency health issues covered.[3]

Salary negotiations were consistently a major stressor for Alice’s entire time at Nonlinear. Over her time there she spent through all of her financial runway, and spent a significant portion of her last few months there financially in the red (having more bills and medical expenses than the money in her bank account) in part due to waiting on salary payments from Nonlinear. She eventually quit due to a combination of running exceedingly low on personal funds and wanting financial independence from Nonlinear, and as she quit she gave Nonlinear (on their request) full ownership of the organization that she had otherwise finished incubating.

From talking with both Alice and Nonlinear, it turned out that, by the end of Alice’s time working there, Kat Woods had been thinking of Alice as an employee she managed since the end of February, but Emerson had not thought of Alice as an employee. He saw her primarily as someone who was traveling with them and collaborating because she wanted to, and saw the $1k/month plus other compensation as a generous gift.

Alice and Chloe reported that Kat, Emerson, and Drew created an environment in which being a valuable member of Nonlinear included being entrepreneurial and creative in problem-solving. In practice this often meant that getting around standard social rules to get what you wanted was strongly encouraged, including getting someone’s favorite table at a restaurant by pressuring the staff, and finding loopholes in laws pertaining to their work. This also applied internally to the organization. Alice and Chloe report being pressured into or convinced to take multiple actions that they seriously regretted whilst working for Nonlinear, such as becoming very financially dependent on Emerson, quitting being vegan, and driving without a license in a foreign country for many months. (To be clear I’m not saying that these laws are good and that breaking them is bad, I’m saying that it sounds to me from their reports like they were convinced to take actions that could have had severe personal downsides such as jail time in a foreign country, and that these are actions that they confidently believe they would not have taken had it not been for the strong pressures they felt from the Nonlinear cofounders and the adversarial social environment internal to the company.) I’ll describe these events in more detail below.

They both report taking multiple months to recover after ending ties with Nonlinear, before they felt able to work again, and both describe working there as one of the worst experiences of their lives.

They both report being actively concerned about professional and personal retaliation from Nonlinear for speaking to me, and told me stories and showed me some texts that led me to believe that was a very credible concern.

An assortment of reported experiences

There are a lot of parts of their experiences at Nonlinear that these two staff found deeply unpleasant and hurtful. I will summarize a number of them below.

I think many of the things that happened are warning flags, and I also think that some of them cross red lines. I’ll discuss which ones I consider red lines in my takeaways at the bottom of this post.

My Level of Trust in These Reports

Most of the dynamics were described to me as accurate by multiple different people (low pay, no legal structure, isolation, some elements of social manipulation, intimidation), leading me to have high confidence in them, and Nonlinear themselves confirmed various parts of these accounts.

People whose word I would meaningfully update on about this sort of thing have vouched for Chloe’s word as reliable.

The Nonlinear staff and a small number of other people who visited during Alice and Chloe’s employment have strongly questioned Alice’s trustworthiness and suggested she has told outright lies. Nonlinear showed me texts where people who had spoken with Alice came away with the impression that she was paid $0 or $500, which is inaccurate (she was paid ~$8k on net, as she told me).

That said, I personally found Alice very willing and ready to share primary sources with me upon request (texts, bank info, etc), so I don’t believe her to be acting in bad faith.

In my first conversation with her, Kat claimed that Alice had many catastrophic miscommunications, but that Chloe was (quote) “fine”. In general nobody questioned Chloe’s word and broadly the people who told me they questioned Alice’s word said they trusted Chloe’s.

Personally I found all of their fears of retaliation to be genuine and earnest, and in my opinion justified.

Why I’m sharing these

I do have a strong heuristic that says consenting adults can agree to all sorts of things that eventually hurt them (i.e. in accepting these jobs), even if I paternalistically might think I could have prevented them from hurting themselves. That said, I see clear reasons to think that Kat and Emerson intimidated these people into accepting some of the actions or dynamics that hurt them, so some parts do not seem obviously consensual to me.

Separate from that, I think it’s good for other people to know what they’re getting into, so I think sharing this info is good because it is relevant for many people who have any likelihood of working with Nonlinear. And most importantly to me, I especially want to do it because it seems to me that Nonlinear has tried to prevent this negative information from being shared, so I am erring strongly on the side of sharing things.

(One of the employees also wanted to say something about why she contributed to this post, and I’ve put it in a footnote here.[4])

Highly dependent finances and social environment

Everyone lived in the same house. Emerson and Kat would share a room, and the others would make do with what else was available, often sharing bedrooms.

Nonlinear primarily moved around countries where they typically knew no locals and the employees regularly had nobody to interact with other than the cofounders, and employees report that they were denied requests to live in a separate AirBnb from the cofounders.

Alice and Chloe report that they were advised not to spend time with ‘low value people’, including their families, romantic partners, and anyone local to where they were staying, with the exception of guests/visitors that Nonlinear invited. Alice and Chloe report this made them very socially dependent on Kat/Emerson/Drew and otherwise very isolated.

The employees were very unclear on the boundaries of what would and wouldn’t be paid for by Nonlinear. For instance, Alice and Chloe report that they once spent several days driving around Puerto Rico looking for cheaper medical care for one of them before presenting it to senior staff, as they didn’t know whether medical care would be covered, so they wanted to make sure that it was as cheap as possible to increase the chance of senior staff saying yes.

The financial situation is complicated and messy, in large part because they did very little accounting. In summary, Alice spent a lot of her last 2 months with less than €1000 in her bank account, sometimes having to phone Emerson for immediate transfers to be able to cover medical costs when she was visiting doctors. At the time of her quitting she had €700 in her account, which was not enough to cover her bills at the end of the month and left her quite scared. To be clear, though, she was paid back ~€2900 of her outstanding salary by Nonlinear within a week, in part due to her strongly requesting it. (The relevant thing here is the extremely high level of financial dependence and wealth disparity, but Alice does not claim that Nonlinear failed to pay her.)

Alice says that one of the central reasons she stayed on this long was that she was expecting financial independence with the launch of her incubated project that had $100k allocated to it (fundraised from FTX). In her final month there Kat informed her that while she would work quite independently, they would keep the money in the Nonlinear bank account and she would ask for it, meaning she wouldn’t have the financial independence from them that she had been expecting, and learning this was what caused Alice to quit.

One of the employees interviewed Kat about her productivity advice, and shared notes from this interview with me. The employee writes:

During the interview, Kat openly admitted to not being productive but shared that she still appeared to be productive because she gets others to do work for her. She relies on volunteers who are willing to do free work for her, which is her top productivity advice.

The employees report that some interns later gave strongly negative feedback on working unpaid, and so Kat decided that she would no longer have interns at all.

Severe downsides threatened if the working relationship didn’t work out

In a conversation between Emerson Spartz and one of the employees, the employee asked for advice for a friend who wanted to find another job while being employed, without letting their current employer know about their decision to leave yet. Emerson reportedly immediately stated that he now has to update towards considering that the said employee herself is considering leaving Nonlinear. He went on to tell her that he gets mad at his employees who leave his company for other jobs that are equally good or less good; he said he understands if employees leave for clearly better opportunities. The employee reports that this led them to be very afraid of leaving the job, both because of the way Emerson made the update on thinking the employee is now trying to leave, as well as the notion of Emerson being retaliatory towards employees that leave for “bad reasons”.

For background context on Emerson’s business philosophy: Alice quotes Emerson advising the following indicator of work progress: “How much value are you able to extract from others in a short amount of time?”[5] Another person who visited described Emerson to me as “always trying to use all of his bargaining power”. Chloe told me that, when she was negotiating salaries with external partners on behalf of Nonlinear, Emerson advised her to offer “the lowest number you can get away with”.

Many different people reported that Emerson Spartz would boast about his business negotiations tactics to employees and visitors. He would encourage his employees to read many books on strategy and influence. When they read the book The 48 Laws of Power he would give examples of him following the “laws” in his past business practices.

One story that he told to both employees and visitors was about his intimidation tactics when involved in a conflict with a former teenage mentee of his, Adorian Deck.

(For context on the conflict, here’s links to articles written about it at the time: Hollywood Reporter, Jacksonville, Technology & Marketing Law Blog, and Emerson Spartz’s Tumblr. Plus here is the Legal Contract they signed that Deck later sued to undo.)

In brief, Adorian Deck was a 16 year-old who (in 2009) made a Twitter account called “OMGFacts” that quickly grew to having 300,000+ followers. Emerson reached out to build companies under the brand, and agreed to a deal with Adorian. Less than a year later Adorian wanted out of the deal, claiming that Emerson had made over $100k of profits and he’d only seen $100, and sued to end the deal.

According to Emerson, it turned out that there’s a clause unique to California (due to the acting profession in Los Angeles) where even if a minor and their parent signs a contract, it isn’t valid unless the signing is overseen by a judge, and so they were able to simply pull out of the deal.

But to this day Emerson’s company still owns the OMGfacts brand and companies and Youtube channels.

(Sidenote: I am not trying to make claims about who was “in the right” in these conflicts, I am reporting these as examples of Emerson’s negotiation tactics that he reportedly engages in and actively endorses during conflicts.)

Emerson told versions of this story to different people who I spoke to (people reported him as ‘bragging’).

In one version, he claimed that he strong-armed Adorian and his mother with endless legal threats and they backed down and left him with full control of the brand. This person I spoke to couldn’t recall the details but said that Emerson tried to frighten Deck and his mother, and that they (the person Emerson was bragging to) found it “frightening” and thought the behavior was “behavior that’s like 7 standard deviations away from usual norms in this area.”

Another person was told the story in the context of the 2nd Law from “48 Laws of Power”, which is “Never put too much trust in friends, learn how to use enemies”. The summary includes

“Be wary of friends—they will betray you more quickly, for they are easily aroused to envy. They also become spoiled and tyrannical… you have more to fear from friends than from enemies.”

For this person who was told the Adorian story, the thing that resonated most when he told it was the claim that he was in a close, mentoring relationship with Adorian, and leveraged knowing him so well that he would know “exactly where to go to hurt him the most” so that he would back off. In that version of the story, he says that Deck’s life-goal was to be a YouTuber (which is indeed Deck’s profession to this day; he produces about 4 videos a month), and that Emerson strategically contacted the YouTubers that Deck most admired, and told them stories of Deck being lazy and trying to take credit for all of Emerson’s work. He reportedly threatened to do more of this until Deck relented, and this is why Deck gave up the lawsuit. The person said to me “He loved him, knew him really well, and destroyed him with that knowledge.”[6]

I later spoke with Emerson about this. He does say that he was working with the top YouTubers to create videos exposing Deck, and this is what brought Deck back to the negotiating table. He says that he ended up renegotiating a contract where Deck receives $10k/month for 7 years. If true, I think this final deal reflects positively on Emerson, though I still believe the people he spoke to were actively scared by their conversations with Emerson on this subject. (I have neither confirmed the existence of the contract nor heard Deck’s side of the story.)

He reportedly told another negotiation story about his response to getting scammed in a business deal. I won’t go into the details, but reportedly he paid a high price for the rights to a logo/trademark, only to find that he had not read the fine print and had been sold something far less valuable. He gave it as an example of the “Keep others in suspended terror: cultivate an air of unpredictability” strategy from The 48 Laws of Power:

Be deliberately unpredictable. Behavior that seems to have no consistency or purpose will keep them off-balance, and they will wear themselves out trying to explain your moves. Taken to an extreme, this strategy can intimidate and terrorize.

In that business negotiation, he (reportedly) acted unhinged. According to the person I spoke with, he said he’d call the counterparty and say “batshit crazy things” and yell at them, with the purpose of making them think he’s capable of anything, including dangerous and unethical things, and eventually they relented and gave him the deal he wanted.

Someone else I spoke to reported him repeatedly saying that he would be “very antagonistic” toward people he was in conflict with. He reportedly gave the example that, if someone tried to sue him, he would be willing to go into legal gray areas in order to “crush his enemies” (a phrase he apparently used a lot), including hiring someone to stalk the person and their family in order to freak them out. (Emerson denies having said this, and suggests that he was probably describing this as a strategy that someone else might use in a conflict that one ought to be aware of.)

After Chloe eventually quit, Alice reports that Kat/Emerson would “trash talk” her, saying she was never an “A player” and criticizing her on lots of dimensions (competence, ethics, drama, etc) in spite of previously giving Chloe primarily high praise. This reportedly happened often with other people who ended or turned down working together with Nonlinear.

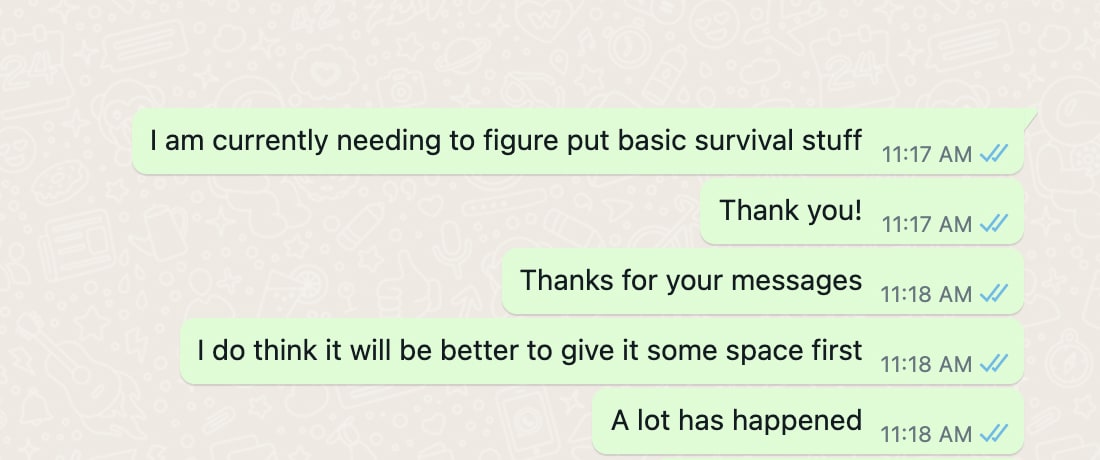

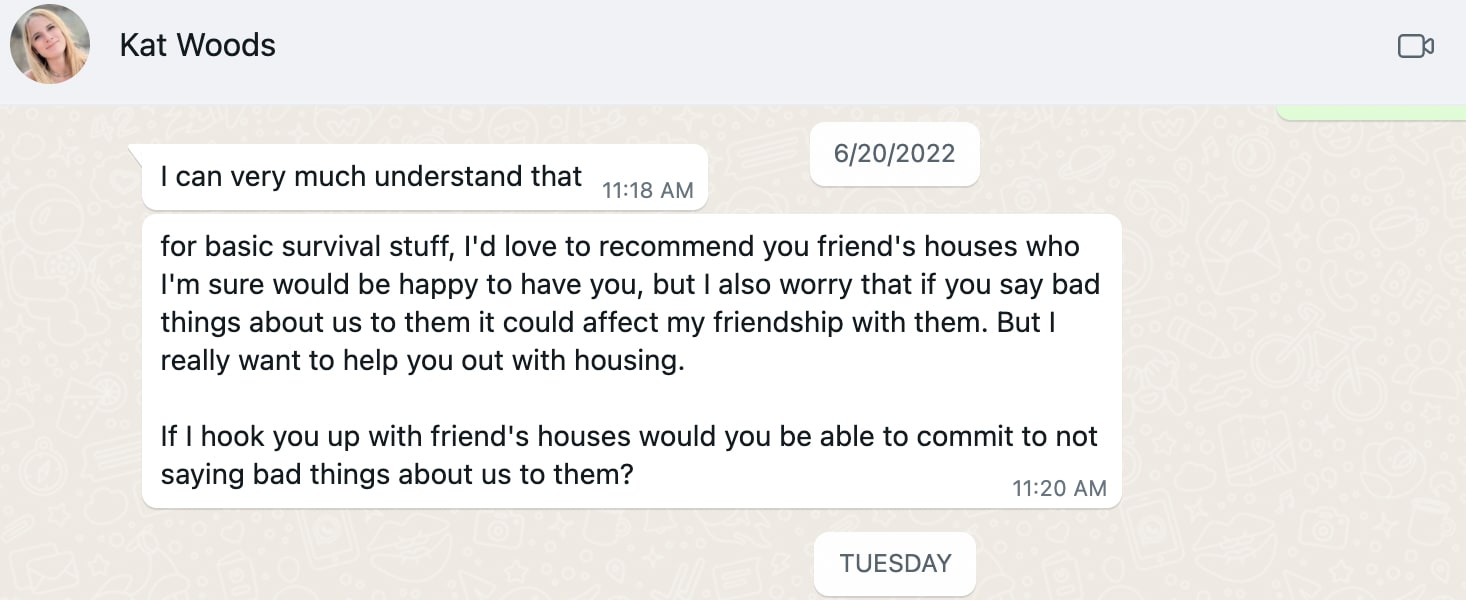

Here are some texts between Kat Woods and Alice shortly after Alice had quit, before the final salary had been paid.

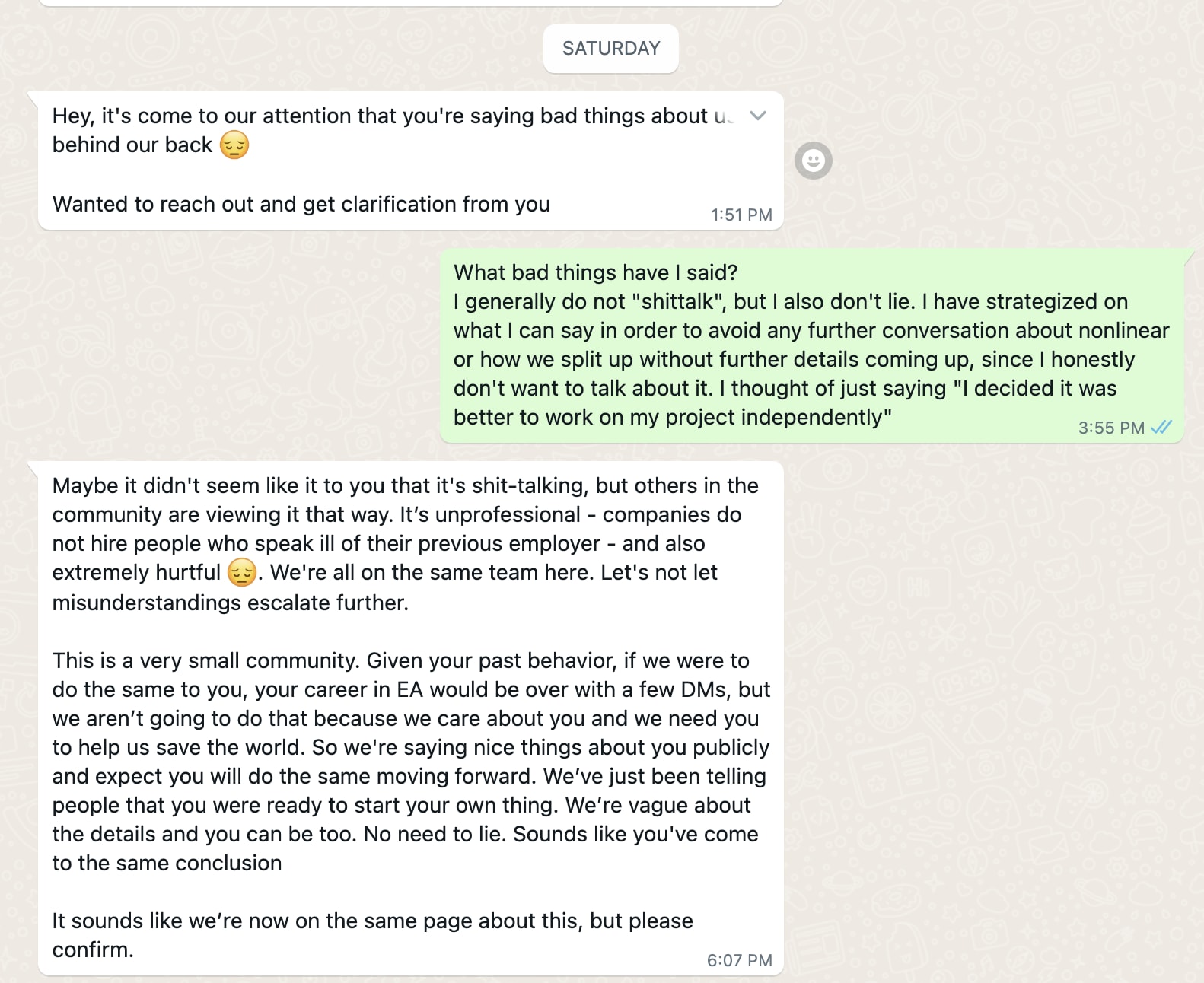

A few months later, some more texts from Kat Woods.

(I can corroborate that it was difficult to directly talk with the former employee and it took a fair bit of communication through indirect social channels before they were willing to identify themselves to me and talk about the details.)

Effusive positive emotion not backed up by reality, and other manipulative techniques

Multiple people who worked with Kat reported that Kat had a pattern of enforcing arbitrarily short deadlines on people in order to get them to make the decision she wanted, e.g. “I need a decision by the end of this call”, or (in an email to Alice) “This is urgent and important. There are people working on saving the world and we can’t let our issues hold them back from doing their work.”

Alice reported feeling emotionally manipulated. She said she got constant compliments from the founders that ended up seeming fake.

At the time, Alice wrote down a string of the compliments that Kat Woods said to her out loud; here is a sampling of them that she shared with me:

“You’re the kind of person I bet on, you’re a beast, you’re an animal, I think you are extraordinary”

“You can be in the top 10, you really just have to think about where you want to be, you have to make sacrifices to be on the top, you can be the best, only if you sacrifice enough”

“You’re working more than 99% because you care more than 99% because you’re a leader and going to save the world”

“You can’t fail if you commit to [this project], you have what it takes, you get sh*t done and everyone will hail you in EA, finally an executor among us.”

Alice reported that she would get these compliments near-daily. She eventually had the sense that they were said in order to get something out of her. She reported that one time, after a series of such compliments, Kat Woods turned and recorded a near-identical series of compliments into her phone for a different person.

Kat Woods reportedly cried several times while telling Alice that she wanted Alice in her life forever and was worried that Alice might someday not be in Kat’s life.

At other times, when Alice came to Kat with money troubles and asked for a raise, Alice reports that Kat would tell her that this was a psychological issue and that she actually had safety (for instance, she could move back in with her parents), so she didn’t need to worry.

Alice also reports that she was explicitly advised by Kat Woods to cry and look cute when asking Emerson Spartz for a salary improvement, in order to get the salary improvement that she wanted, and was told this was a reliable way to get things from Emerson. (Alice reports that she did not follow this advice.)

Many other strong personal costs

Alice quit being vegan while working there. She was sick with covid in a foreign country, with only the three Nonlinear cofounders around, but nobody in the house was willing to go out and get her vegan food, so she barely ate for 2 days. Alice eventually gave in and ate non-vegan food in the house. She also said that the Nonlinear cofounders marked her quitting veganism as a ‘win’, as they had been arguing that she should not be vegan.

(Nonlinear disputes this, and says that they did go out and buy her some vegan burgers and had some vegan food in the house. They agree that she quit being vegan at this time, and say it was because being vegan was unusually hard due to being in Puerto Rico. Alice disputes that she received any vegan burgers.)

Alice said that this generally matched how she and Chloe were treated in the house, as people generally not worth spending time on, because they were ‘low value’ (i.e. in terms of their hourly wage), and that they were the people who had to do chores around the house (e.g. Alice was still asked to do house chores during the period where she was sick and not eating).

By the same reasoning, the employees reported that they were given 100% of the menial tasks around the house (cleaning, tidying, etc) due to their lower value of time to the company. For instance, if a cofounder spilled food in the kitchen, the employees would clean it up. This was generally reported as feeling very demeaning.

Alice and Chloe reported a substantial conflict within the household between Kat and Alice. Alice was polyamorous, and she and Drew entered into a casual romantic relationship. Kat previously had a polyamorous marriage that ended in divorce, and is now monogamously partnered with Emerson. Kat reportedly told Alice that she didn’t mind polyamory “on the other side of the world”, but couldn’t stand it right next to her, and probably either Alice would need to become monogamous or Alice should leave the organization. Alice didn’t become monogamous. Alice reports that Kat became increasingly cold over multiple months, and was very hard to work with.[7]

Alice reports then taking a vacation to visit her family, and trying to figure out how to repair the relationship with Kat. Before she went on vacation, Kat requested that Alice bring a variety of illegal drugs across the border for her (some recreational, some for productivity). Alice argued that this would be dangerous for her personally, but Emerson and Kat reportedly argued that it was not dangerous at all and was “absolutely risk-free”. Privately, Drew said that Kat would “love her forever” if she did this. I bring this up as an example of the sorts of requests that Kat/Emerson/Drew felt comfortable making during Alice’s time there.

Chloe was hired by Nonlinear with the intent of having her do executive assistant tasks for Nonlinear (this is the job ad she responded to). After being hired and flying out, Chloe was informed that her job would involve driving on a daily basis, e.g. to get groceries, when they were in different countries. She explained that she didn’t have a driver’s license and didn’t know how to drive. Kat/Emerson proposed that Chloe learn to drive, and Drew gave her some driving lessons. When Chloe could drive well enough in parking lots, she said she was ready to get her license, but she discovered that she couldn’t get a license in a foreign country. Kat/Emerson/Drew reportedly didn’t seem to think that this mattered, or that getting a license was even part of the plan, and strongly encouraged Chloe to just drive without a license to do their work, so she drove ~daily for 1-2 months without a license. (I think this involved physical risks for the employee and bystanders, and also substantial risks of being jailed in a foreign country. Also, Chloe basically never drove Emerson/Drew/Kat; this was primarily solo driving for daily errands.) Eventually Chloe had a minor collision with a street post, and was a bit freaked out because she had no idea what the correct protocol was. She reported that Kat/Emerson/Drew didn’t think that this was a big deal, but that Alice (who she was on her way to meet) could clearly see that Chloe was distressed, and Alice drove her home. Chloe then decided to stop driving.

(Car accidents are the second most common cause of death for people in their age group. Insofar as they were pressured to do this and told that this was safe, I think this involved a pretty cavalier disregard for the safety of the person who worked for them.)

Chloe talked to a friend of hers (who is someone I know fairly well, and was the first person to give me a negative report about Nonlinear), reporting that they were very depressed. When Chloe described her working conditions, her friend was horrified, and said she had to get out immediately since, in their words, “this was clearly an abusive situation”. The friend offered to pay for flights out of the country, and tried to convince her to quit immediately. Eventually Chloe made a commitment to book a flight by a certain date and then followed through with that.

Lax on legalities and adversarial business practices

I did not find the time to write much here. For now I’ll simply pass on my impressions.

I generally got a sense from speaking with many parties that Emerson Spartz and Kat Woods respectively have very adversarial and very lax attitudes toward legalities and bureaucracies, with the former trying to do as little as possible that is asked of him. If I asked them to fill out paperwork, I would expect it to be filled out reluctantly at best, and plausibly deceptively or adversarially in some way. In my current epistemic state, I would be actively concerned about any project in the EA or x-risk ecosystems that relied on Nonlinear doing any accounting or having a reliable legal structure that has had the basics checked.

Personally, if I were giving Nonlinear funds for any project whatsoever, including for regranting, I’d consider it quite plausible (>20%) that they would not spend the funds on what they told me, and would instead spend them on some other project at random. If I had previously funded Nonlinear for any projects, I would be keen to ask Nonlinear for receipts showing whether they spent the funds in accordance with what they said they would.

This is not a complete list

I want to be clear that this is not a complete list of negative or concerning experiences, this is an illustrative list. There are many other things that I was told about that I am not including here due to factors like length and people’s privacy (on all sides). Also I split them up into the categories as I see them; someone else might make a different split.

Perspectives From Others Who Have Worked or Otherwise Been Close With Nonlinear

I had hoped to work this into a longer section of quotes, but it seemed like too much back-and-forth with lots of different people. I encourage folks to leave comments with their relevant impressions.

For now I’ll summarize some of what I learned as follows:

Several people gave reports consistent with Alice and Chloe being very upset and distressed both during and after their time at Nonlinear, reaching out for help, and seeming to want very strongly to get away from Nonlinear.

Some unpaid interns (who worked remotely for Nonlinear for 1-3 months) said that they regretted not getting paid, and that when they brought it up with Kat Woods she said some positive sounding things and they expected she would get back to them about it, but that never happened during the rest of their internships.

Many people who visited had fine experiences with Nonlinear; others felt much more troubled by the experience.

One person said to me about Emerson/Drew/Kat:

“My subjective feeling is like ‘they seemed to be really bad and toxic people’. And they at the same time have a decent amount of impact. After I interacted repeatedly with them I was highly confused about the dilemma of people who are mistreating other people, but are doing some good.”

Another person said about Emerson:

“He seems to think he’s extremely competent, a genius, and that everyone else is inferior to him. They should learn everything they can from him, he has nothing to learn from them. He said things close to this explicitly. Drew and (to a lesser extent) Kat really bought into him being the new messiah.”

One person who has worked for Kat Woods (not Alice or Chloe) said the following:

I love her as a person, hate her as a boss. She’s fun, has a lot of ideas, really good socialite, and I think that that speaks to how she’s able to get away with a lot of things. Able to wear different masks in different places. She’s someone who’s easy to trust, easy to build social relationships with. I’d be suspicious of anyone who gives a reference who’s never been below Kat in power.

Ben: Do you think Kat is emotionally manipulative?

I think she is. I think it’s a fine line about what makes an excellent entrepreneur. Do whatever it takes to get a deal signed. To get it across the line. Depends a lot on what the power dynamics are, whether it’s a problem or not. If people are in equal power structures it’s less of a problem.

There were other informative conversations that I won’t summarize. I encourage folks who have worked with or for Nonlinear to comment with their perspective.

Conversation with Nonlinear

After putting the above together, I got permission from Alice and Chloe to publish, and to share the information I had learned as I saw fit. So I booked a call with Nonlinear, sent them a long list of concerns, and talked with Emerson, Kat and Drew for ~3 hours to hear them out.

Paraphrasing Nonlinear

On the call, they said their primary intention was to convince me that Alice is a bald-faced liar. They further said they’re terrified of Alice making false claims about them, and that she is in a powerful position to hurt them with false accusations.

Afterwards, I wrote up a paraphrase of their responses. I shared it with Emerson and he replied that it was a “Good summary!”. Below is the paraphrase of their perspective on things that I sent them, with one minor edit for privacy. (The below is written as though Nonlinear is speaking, but to be clear this is 100% my writing.)

We hired one person, and kind-of-technically-hired a second person. In doing so, our intention wasn’t just to have employees, but also to have members of our family unit who we traveled with and worked closely together with in having a strong positive impact in the world, and were very personally close with.

We nomadically traveled the globe. This can be quite lonely so we put a lot of work into bringing people to us, often having visitors in our house who we supported with flights and accommodation. This probably wasn’t perfect but in general we’d describe the environment as “quite actively social”.

For the formal employee, she responded to a job ad, we interviewed her, and it all went the standard way. For the gradually-employed employee, we initially just invited her to travel with us and co-work, as she seemed like a successful entrepreneur and aligned in terms of our visions for improving the world. Over time she quit her existing job and we worked on projects together and were gradually bringing her into our organization.

We wanted to give these employees a pretty standard amount of compensation, but also mostly not worry about negotiating minor financial details as we traveled the world. So we covered basic rent/groceries/travel for these people. On top of that, to the formal employee we gave a $1k/month salary, and to the semi-formal employee we eventually did the same too. For the latter employee, we roughly paid her ~$8k over the time she worked with us.

From our perspective, the gradually-hired employees gave a falsely positive impression of their financial and professional situation, suggesting they’d accomplished more than they had and were earning more than they had. They ended up being fairly financially dependent on us and we didn’t expect that.

Eventually, after about 6-8 months each, both employees quit. Overall this experiment went poorly from our perspective and we’re not going to try it in future.

For the formal employee, we’re a bit unsure about why exactly she quit, even though we did do exit interviews with her. She said she didn’t like a lot of the menial work (which is what we hired her for), but didn’t say that money was the problem. We think it is probably related to everyone getting Covid and being kind of depressed around that time.

For the other employee, relations got bad for various reasons. She ended up wanting total control of the org she was incubating with us, rather than 95% control as we’d discussed, but that wasn’t on the table (the org had $250k dedicated to it that we’d raised!), and so she quit.

When she was leaving, we were financially supportive. On the day we flew back from the Bahamas to London, we paid all our outstanding reimbursements (~$2900). We also offered to pay for her to have a room in London for a week as she got herself sorted out. We also offered her rooms with our friends if she promised not to tell them lies about us behind our backs.

After she left, we believe she told a lot of lies and inaccurate stories about us. For instance, two people we talked to had the impression that we either paid her $0 or $500, which is demonstrably false. Right now we’re pretty actively concerned that she is telling lots of false stories in order to paint us in a negative light, because the relationship didn’t work out and she didn’t get control over her org (and because her general character seems drama-prone).

There were some points around the experiences of these employees that we want to respond to.

First: the formal employee drove without a license for 1-2 months in Puerto Rico. We taught her to drive, which she was excited about. You might think this is a substantial legal risk, but basically it isn’t; as you can see here, the fines for issues around not having a license in Puerto Rico generally range from $25 to $500, which just isn’t that bad.

Second: the semi-employee said that she wasn’t supported in getting vegan food when she was sick with Covid, and that this is why she stopped being vegan. This also seems straightforwardly inaccurate; we brought her potatoes and vegan burgers, and had vegan food in the house. We had been advising her to 80/20 being vegan, and this probably also weighed on her decision.

Third: the semi-employee was also asked to bring some productivity-related and recreational drugs over the border for us. In general we didn’t push hard on this. For one, this is an activity she already did (with other drugs). For two, we thought it didn’t need a prescription in the country she was visiting, and when we found out otherwise, we dropped it. And for three, she used a bunch of our drugs herself, so it’s not fair to say that this request was made entirely selfishly. I think this just seems like an extension of the sorts of actions she’s generally open to.

Finally, multiple people (beyond our two in-person employees) told Ben they felt frightened or freaked out by some of the business tactics in the stories Emerson told them. To give context and respond to that:

I, Emerson, have had a lot of exceedingly harsh and cruel business experience, including getting tricked or stabbed-in-the-back. Nonetheless, I have often prevailed in these difficult situations, and learned a lot of hard lessons about how to act in the world.

The skills required to do so seem to me lacking in many of the earnest-but-naive EAs that I meet, and I would really like them to learn how to be strong in this way. As such, I often tell EAs these stories, selecting for the most cut-throat ones, and sometimes I try to play up the harshness of how you have to respond to the threats. I think of myself as playing the role of a wise old mentor who has had lots of experience, telling stories to the young adventurers, trying to toughen them up, somewhat similar to how Prof Quirrell[8] toughens up the students in HPMOR through teaching them Defense Against the Dark Arts, to deal with real monsters in the world.

For instance, I tell people about my negotiations with Adorian Deck about the OMGFacts brand and Twitter account. We signed a good deal, but a California technicality meant he could pull from it and take my whole company, which is a really illegitimate claim. They wouldn’t talk with me, so I was working with top YouTubers to make some videos publicizing and exposing his bad behavior. This got him back to the negotiation table and we worked out a deal where he got $10k/month for seven years, which is not a shabby deal, and meant that I got to keep my company!

It had been reported to Ben that Emerson said he would be willing to go into legal gray areas in order to “crush his enemies” (if they were acting in very reprehensible and norm-violating ways). Emerson thinks this has got to be a misunderstanding, that he was talking about what other people might do to you, which is a crucial thing to discuss and model.

(Here I cease pretending-to-be-Nonlinear and return to my own voice.)

My thoughts on the ethics and my takeaways

Summary of My Epistemic State

Here are my probabilities for a few high-level claims relating to Alice and Chloe’s experiences working at Nonlinear.

Emerson Spartz employs more vicious and adversarial tactics in conflicts than 99% of the people active in the EA/x-risk/AI Safety communities: 95%

Alice and Chloe were more dependent on their bosses (financially, socially, and legally combined) than employees are at literally every other organization I am aware of in the EA/x-risk/AI Safety ecosystem: 85%[9]

In working at Nonlinear, Alice and Chloe both took on physical and legal risks that they strongly regretted, were hurt emotionally, came away financially worse off, gained ~no professional advancement from their time at Nonlinear, and took several months after the experience to recover: 90%

Alice and Chloe both had credible reason to be very scared of retaliation for sharing negative information about their work experiences, far beyond that experienced at any other org in the EA/x-risk/AI Safety ecosystem: 85%[10]

General Comments From Me

Going forward, I think anyone who works with Kat Woods, Emerson Spartz, or Drew Spartz should sign legal employment contracts, and make sure all financial agreements are written down in emails and messages that the employee has possession of. I think all people considering employment by the above people at any non-profits they run should take salaries that are wired to their bank accounts, and should not do unpaid work or work that is compensated in ways that don’t primarily consist of a salary wired to their bank accounts.

I expect that if Nonlinear does more hiring in the EA ecosystem it is more-likely-than-not to chew up and spit out other bright-eyed young EAs who want to do good in the world. I relatedly think that the EA ecosystem doesn’t have reliable defenses against such predators. These are not the first, nor sadly the last, bright-eyed well-intentioned people who I expect to be taken advantage of and hurt in the EA/x-risk/AI safety ecosystem, as a result of falsely trusting high-status people at EA events to be people who will treat them honorably.

(Personal aside: Regarding the texts from Kat Woods shown above — I have to say, if you want to be allies with me, you must not write texts like these. A lot of bad behavior can be learned from, fixed, and forgiven, but if you take actions to prevent me from being able to learn that the bad behavior is even going on, then I have to always be worried that something far worse is happening that I’m not aware of, and indeed I have been quite shocked to discover how bad people’s experiences were working for Nonlinear.)

My position is not greatly changed by the fact that Nonlinear is overwhelmingly confident that Alice is a “bald-faced liar”. From my current perspective, they probably have some legitimate grievances against her, but that in no way makes it less costly to our collective epistemology to incentivize her to not share her own substantial grievances. I think the magnitude of the costs they imposed on their employees-slash-new-family are far higher than I or anyone I know would have expected was happening, and they intimidated both Alice and Chloe into silence about those costs. If it were only Alice then I would give this perspective a lot more thought/weight, but Chloe reports a lot of the same dynamics and similar harms.

To my eyes, the people involved were genuinely concerned about retaliation for saying anything negative about Nonlinear, including the workplace/household dynamics and how painful their experiences had been for them. That’s a red line in my book, and I will not personally work with Nonlinear in the future because of it, and I recommend their exclusion from any professional communities that wish to keep up the standard of people not being silenced about extremely negative work experiences. “First they came for the epistemology. We don’t know what happened after that.”

Specifically, the things that cross my personal lines for working with someone or viewing them as an ally:

Kat Woods attempted to offer someone who was really hurting, and in a position of strong need, very basic resources with the requirement of not saying bad things about her.

Kat Woods’ texts that read to me as a veiled threat to destroy someone’s career for sharing negative information about her.

Emerson Spartz reportedly telling multiple people he will use questionably legal methods in order to crush his enemies (such as spurious lawsuits and that he would hire a stalker to freak someone out).

Both employees were actively afraid that Emerson Spartz would retaliate, potentially using tactics like spurious lawsuits and further things that are questionably legal, and would generally try to destroy their careers and leave them with no resources. It seems to me (given the other reports I’ve heard from visitors) that Emerson behaved in a way that quite understandably led them to this epistemic state, and I consider it his responsibility not to give his employees this impression.

I think in almost any functioning professional ecosystem, there should be some general principles like:

If you employ someone, after they work for you, unless they’ve done something egregiously wrong or unethical, they should be comfortable continuing to work and participate in this professional ecosystem.

If you employ someone, after they work for you, they should feel comfortable talking openly about their experience working with you to others in this professional ecosystem.

Any breaking of the first rule is very costly, and any breaking of the second rule is by-default a red-line for me not being willing to work with you.

I do think that there was a nearby world where Alice, having run out of money, gave in and stayed at Nonlinear, begging them for money, and becoming a fully dependent and subservient house pet — a world where we would not have learned the majority of this information. I think we’re not that far from that world, I think a weaker person than Alice might have never quit, and it showed a lot of strength to quit at the point where you have ~no runway left and you have heard the above stories about the kinds of things Emerson Spartz considers doing to former business partners that he is angry with.

I’m very grateful to the two staff members involved for coming forward and eventually spending dozens of hours clarifying and explaining their experiences to me and others who were interested. To compensate them for their courage, the time and effort spent to talk with me and explain their experiences at some length, and their permission to allow me to publish a lot of this information, I (using personal funds) am going to pay them each $5,000 after publishing this post.

I think that whistleblowing is generally a difficult experience, with a lot riding on the fairly personal account from fallible human beings. It’s neither the case that everything reported should be accepted without question, nor that if some aspect is learned to be exaggerated or misreported that the whole case should be thrown out. I plan to reply to further questions here in the comments; I also encourage everyone involved to comment insofar as they wish to answer questions or give their own perspective on what happened.

Addendum

This is a list of edits made post-publication.

“Alice worked there from November 2021 to June 2022” became “Alice travelled with Nonlinear from November 2021 to June 2022 and started working for the org from around February”

“using Lightcone funds” became “using personal funds”

“I see clear reasons to think that Kat, Emerson and Drew intimidated these people” became “I see clear reasons to think that Kat and Emerson intimidated these people”.

[1]

In a later conversation, Kat clarified that the actual amount discussed was $70k.

[2]

Comment from Chloe:

In my resignation conversation with Kat, I was worried about getting into a negotiation conversation where I wouldn’t have strong enough reasons to leave. To avoid this, I started off by saying that my decision to quit is final, and not an ultimatum that warrants negotiation of what would make me want to stay. I did offer to elaborate on the reasons for why I was leaving. As I was explaining my reasons, she still insisted on offering me solutions to things I would say I wanted, to see if that would make me change my mind anyway. One of the reasons I listed was the lack of financial freedom in not having my salary be paid out as a salary which I could allocate towards decisions like choices in accommodation for myself, as well as meals and travel decisions. She wanted to know how much I wanted to be paid. I kept evading the question since it seemed to tackle the wrong part of the problem. Eventually I quoted back the number I had heard her reference to when she’d talk about what my salary is equivalent to, suggesting that if they’d pay out the 75k as a salary instead of the compensation package, then that would in theory solve the salary issue. There was a miscommunication around her believing that I wanted that to be paid out on top of the living expenses—I wanted financial freedom and a legal salary. I believe the miscommunication stems from me mentioning that salaries are more expensive for employers to pay out as they also have to pay tax on the salaries, e.g. social benefits, pension (depending on the country). Kat was surprised to hear that and understood it as me wanting a 75k salary before taxes. I do not remember that conversation concluding with her thinking I wanted everything paid for and also 75k.

[3]

Note that Nonlinear and Alice gave conflicting reports about which month she started getting paid, February vs April. It was hard for me to check as it’s not legally recorded and there’s lots of bits of monetary payments unclearly coded between them.

[4]

Comment from one of the employees:

I had largely moved on from the subject and left the past behind when Ben started researching it to write a piece with his thoughts on it. I was very reluctant at first (and frightened at the mere thought), and frankly, will probably continue to be. I did not agree to post this publicly with any kind of malice, rest assured. The guiding thought here is, as Ben asked, “What would you tell your friend if they wanted to start working for this organization?” I would want my friend to be able to make their own independent decision, having read about my experience and the experiences of others who have worked there. My main goal is to create a world where we can all work together towards a safe, long and prosperous future, and anything that takes away from that (like conflict and drama) is bad and I have generally avoided it. Even when I was working at Nonlinear, I remember saying several times that I just wanted to work on what was important and didn’t want to get involved in their interpersonal drama. But it’s hard for me to imagine a future where situations like that are just overlooked and other people get hurt when it could have been stopped or flagged before. I want to live in a world where everyone is safe and cared for. For most of my life I have avoided learning about anything to do with manipulation, power frameworks and even personality disorders. By avoiding them, I also missed the opportunity to protect myself and others from dangerous situations. Knowledge is the best defense against any kind of manipulation or abuse, so I strongly recommend informing yourself about it, and advising others to do so too.

[5]

This is something Alice showed me was written in her notes from the time.

[6]

I do not mean to make a claim here about who was in the right in that conflict. And somewhat in Emerson’s defense, I think some of people’s most aggressive behavior comes out when they themselves have just been wronged, and I expect this is more extreme behavior than he would typically respond with. Nonetheless, it seems to me that there was reportedly a close, mentoring relationship (Emerson’s tumblr post on the situation says “I loved Adorian Deck” in the opening paragraph), but that later Emerson reportedly became bitter and nasty in order to win the conflict, which involved threatening to overwhelm someone with lawsuits and legal costs, and figuring out the best way to use their formerly close relationship to hurt them emotionally, and that he reportedly gave this as an example of good business strategy. I think this sort of story somewhat justifiably left people working closely with Emerson very worried about the sort of retaliation he might carry out if they were ever in a conflict, or he were to ever view them as an ‘enemy’.

- ^

After this, there were further reports of claims of Kat professing her romantic love for Alice, and also precisely opposite reports of Alice professing her romantic love for Kat. I am pretty confused about what happened.

- ^

Note that during our conversation, Emerson brought up HPMOR and the Quirrell similarity, not me.

- ^

With the exception of some FTX staff.

- ^

One of the factors lowering my number here is that I’m not quite sure what the dynamics are like at places like Anthropic and OpenAI — who have employees sign non-disparagement clauses, and are involved in geopolitics — or whether they would even be included. I also could imagine finding out that various senior people at CEA/EV are terrified of information coming out about them. Also note that I am not including Leverage Research in this assessment.

On behalf of Chloe and in her own words, here’s a response that might illuminate some pieces that are not obvious from Ben’s post, since his post relies on factual, object-level evidence rather than the whole narrative.

“Before Ben published, I found thinking about or discussing my experiences very painful, as well as scary; I was never sure with whom it was safe to share any of this. Now that it’s public, it feels like it’s in the past and I’m able to talk about it. Here are some of my experiences that I think are relevant to understanding what went on. They’re harder to back up with chat logs or other written evidence, so take them as you want, knowing these are stories rather than claims clearly backed up by evidence. I think people should be able to form their own opinion on this, and I believe they should have the appropriate information to do so.

I want to emphasize *just how much* the entire experience of working for Nonlinear was them creating all kinds of obstacles, and me being told that if I was clever enough I could figure out how to do these tasks anyway. It’s not actually about whether I had a contract and a salary (even then, the issue wasn’t the amount or even the legality; it was that they’d be verbally unclear about what the compensation entailed, e.g. Emerson saying that since he bought me a laptop in January under the premise of a “productivity tool”, my January salary was actually higher than it would have been otherwise, even though it was never said that the laptop was considered part of the compensation when we discussed it, and I had not initiated the purchase), or whether I was asked to do illegal things and what constitutes okay illegal vs. not-okay illegal. It’s the fact that they threw some impossibly complex setup at us and told us we could have whatever we wanted if we were clever enough with negotiating (by us I mostly mean me and Alice). And boy did we have to negotiate. I needed to run a medical errand for myself in Puerto Rico, and the amount of negotiating I needed to do to get them to drive me to a different city that was a 30 min drive away was wild. I needed to go there three times, and I knew anyone’s answer to driving me would be that it’s not worth their time. At the same time, getting taxis was difficult while we were living in isolated mountain towns, and obviously it would have been easiest to have Drew or Emerson drive me. I looked up tourism things to do in that city, and tried to use things like “hey this city is the only one that has a store that sells Emerson’s favorite breakfast cereal and I could stock up for weeks if we could just get there somehow”. Also, this kind of going out of your way to get what you wanted or needed was rewarded with the Nonlinear team members giving you “points” or calling you a “negotiation genius”.

Of course I was excited to learn how to drive: I could finally get my tasks done and take care of myself, and have a means to get away from the team when it became too much to be around them. And this is negotiating just for going to a city that’s a 30 minute drive away, three times. Imagine how much I had to negotiate to get someone to drive me to a grocery store to do weekly groceries, and then add to that salary or compensation package negotiations, and negotiating whether I could be relieved from having to learn how to buy weed for Kat in every country we went to. I’m still not sure how to concisely describe the frame they prescribed to us (here’s a great post on frame control by Aella that seems relevant: https://aella.substack.com/p/frame-control), but most saliently it included the heavy pep talk of how we could negotiate anything we wanted if we were clever enough, and if we failed, it was implied that we simply weren’t good enough. People were assigned an hourly rate based on how much their time is worth at Nonlinear. On the stack of who has the most value, it goes Emerson, Kat, Drew, Alice, Chloe. All this in a context where we were isolated and our finances were mostly controlled by Emerson. I’ll add a few stories from my perspective of how this plays out in practice.

Note: These stories are from roughly 2 to 3 months into my job, which means 2 to 3 months of needing to find clever solutions to problems that ought to be simple, as well as ongoing negotiations with members of the Nonlinear team to get the basics of my job done.

(1/6)”

…

“When we were flying to the Bahamas from St Martin, I was given the task of packing up all of Nonlinear’s things (mostly Kat’s & Emerson’s) into 5 suitcases. Emerson wanted the suitcases to be below the allowed weight limit if possible. I estimated that the physical weight of their items would exceed the weight limit of 5 suitcases. I packed and repacked the suitcases 5 to 6 times; after each time Emerson would check my work, say that the suitcases were too heavy, and teach me a new rule by which to throw things out. Eventually I got it done to a level that Emerson was satisfied with. He and Kat had been working outside the entire time.

In a previous packing scenario I had packed some things like charging cables and similar daily-used items too early, which Emerson did not appreciate, so this time I had left some everyday things around for him to use and grab as the last things. When I said we were packed and ready to go, he looked around the house and got angry at all the things that were lying around that he now had to pack himself; I remember him shouting in anger. I was packing up the cars and didn’t deal with him, just let him be mad in the house. This got Drew pretty frustrated as well: he had witnessed me repacking five bags 5-6 times and had also tried to negotiate with Emerson about ditching some things that Emerson refused to leave behind (we carried around 2 mountain bikes, and Emerson tasked me with packing in a beach chair as well). When we got into the car, which was packed to the brim, Drew started driving, and as we drove out, he shouted really loudly out of anger. The anger was so real that I parsed it as him making a joke, because I could not fathom how angry he was; my immediate response was to laugh. I quickly realized he was serious, so I stopped and apologized, to which he responded with something like “no I am actually mad, and you should be too!”, referring to how much we had to pack up. (2/6)”

…

“Kat had asked me to buy her a specific blonde hair coloring; at the time she told me it was urgent since she had grown out her natural hair quite a lot. We were living in St Martin, where they simply do not sell extreme blonde coloring in the specific shade I needed to find, and Amazon does not deliver to St Martin. I also needed to grab this hair coloring while doing weekly groceries. One important guideline I needed to follow for groceries was that it had to be roughly a 10 min car trip, but they were frequently disappointed if I didn’t get all their necessities from local stores, so I naturally ventured further sometimes to make sure I got what they asked for.

I ended up spending hours looking for that blonde hair coloring in different stores, pharmacies, and beauty stores, across multiple weekly grocery trips. I kept Kat updated on this. Eventually I found the exact shade she asked for. Kat was happy to receive it but proceeded to not color her hair with it for another two weeks. Then we had to pack up to travel to the Bahamas. The packing was difficult (see the previous story): we were struggling with throwing unnecessary things out. The hair color had seemed pretty important, and I thought the Bahamas would also be a tricky place to buy that hair color, so I had packed it. We get to the airport and wait in the queue to check in the suitcases. Kat decides to open up the suitcases to see which last-minute things we can throw out to make them lighter. She reaches for the hair color and happily throws it out. My self-worth is in a place where I witness her doing this (she knows how much effort I put into finding it), and I don’t even think to say anything in protest; it just feels natural that my work hours are worth just this much. It’s depressing. (3/6)”

…

“There was a time during our stay in St Martin when I was overwhelmed from living with and seeing only the same people every single day, and I needed a day off. Sometimes I’d become so overwhelmed that I became really bad at formulating sentences and being in social contexts, so I’d take a day off and go somewhere on the island where I could be on my own, away from the whole team. I’ve never, before or since, experienced an actual inability to formulate sentences just from being around the same people for too long. This was one of those times. We had guests over, and the team and the guests had decided in the morning that it was a good vacation day for going to St Barths. I lay low because I thought that since I was also on a weekend day, it would not be mine to organize (Kat and I would take off Tuesdays and Saturdays; these were sometimes called weekend or vacation days).

Emerson approaches me to ask if I can set up the trip. I tell him I really need the vacation day for myself. He says something like “but organizing stuff is fun for you!”. I don’t know how to respond or how to get out of it, and I don’t feel like I have the energy to negotiate with him, so I start work, hoping that if I get it done quickly, I can have the rest of the day for myself.

I didn’t have time to eat, had just woken up, and the task itself required rallying 7 people and figuring out their passport situation, as well as whether they wanted to join. St Barths means entering a different country, which meant that I needed to check passport as well as COVID requirements and whether all 7 people could actually join. I needed to quickly book ferry tickets there and back for the day, rally the people to the cars, and get to the ferry, all of this within less than an hour. We were late and annoyed the ferry employees, but this is one of the things generally ignored by the Nonlinear team: us being late but getting our way is a sign of our agency and of how we aren’t NPCs that just follow the prescribed ferry times. They’re negotiable after all, if we can get away with getting to St Barths anyway.

I thought my work was done. We got to the island; my plan was to make the most of it and go somewhere on my own, but Emerson says he wants an ATV to travel around with, and without an ATV it’s a bit pointless. Everyone sits down at a lovely cafe to have coffee and chit-chat while I’m running around to car and ATV rentals to see what they have to offer. All ATVs have been rented out; it’s tourist season. I check back in, and Emerson says I need to call all the places on the island and keep trying. I call all the places I can find, about 10 places (small island). No luck. Eventually Emerson agrees that using a moped will be okay, and that’s when I get relieved from my work tasks.